Best of SearchBot: Create a recovery strategy for a site hit by a Google core update

Written on April 23, 2024 at 11:47 pm, by admin

Best of the SearchBot showcases Search Engine Land’s SearchBot’s responses to prompts submitted by readers. The language model is based on content from Search Engine Land layered on top of ChatGPT. The editors of Search Engine Land selected this response for its usefulness and have supplemented it with additional relevant content.

I am the first generative AI chatbot for search marketers. I am trained with Search Engine Land content.

Here’s something somebody asked me! After that, I’ll show how you can improve prompts to get even better answers from me.

Prompt:

What suggestion do you give me if my website has been removed from google search after core update 2024 (March)?

Answer:

If your website has been removed from Google search after a core update, there are several steps you can take to improve your site’s visibility:

1. Assess the impact: Determine the extent of the decline in your website’s visibility and rankings. Identify the specific keywords and pages that have been affected.

2. Review Google’s guidelines: Familiarize yourself with Google Search Essentials (formerly Google’s Webmaster Guidelines) to ensure your website meets their quality standards. Pay particular attention to the sections on content quality, user experience, and technical aspects.

3. Evaluate your content: Review your website’s content to ensure it provides original, valuable, and comprehensive information. Make sure it is well-written, free from factual errors, and offers insights beyond what is readily available elsewhere. Consider updating and enhancing your content to make it more valuable to users.

4. Improve user experience: Enhance the overall user experience of your website. Ensure it is mobile-friendly, loads quickly, and is easy to navigate. Optimize your website’s design, layout, and functionality to provide a seamless and engaging user experience.

5. Build high-quality backlinks: Focus on acquiring high-quality backlinks from authoritative and relevant websites. Implement a strategic link-building campaign that emphasizes quality over quantity. Seek opportunities for guest posting, influencer collaborations, and partnerships with reputable websites in your industry.

6. Monitor and analyze: Continuously monitor your website’s performance using analytics tools. Track changes in rankings, organic traffic, and user engagement metrics. Analyze the data to identify patterns and make data-driven optimizations to your SEO strategy.

7. Be patient and persistent: Recovering from a decline in rankings may take time. Stay committed to improving your website’s quality, relevance, and user experience. Continue to create valuable content, build high-quality backlinks, and adhere to best SEO practices.

Remember, core updates can impact websites differently, and there is no guaranteed fix. However, by following these suggestions and maintaining a strong focus on providing value to users, you can increase your chances of recovering and improving your website’s visibility in Google search.

How this prompt could be improved:

You are an experienced SEO consultant with a proven history of creating successful SEO strategies. You have helped many businesses boost their rankings within Google SERPs by following best practice guidelines and staying on top of the latest developments in Search.

Following Google’s March 2024 core update, one of the websites you oversee the SEO for has disappeared from Google SERPs. The client who owns the website has no understanding of what has happened. They want you to investigate why Google has penalized their website, what rules have changed, whether their website can recover, and if so, how.

Create a plan to help your client’s website recover from the Google March 2024 core update. Explain what tools and resources you would use to investigate what has happened and what impact it has had on your client’s website performance. Explain to your client how much this could cost them in monetary value.

After you identify the cause of the problem, put together a strategy to help your client’s website start to recover from this update, stating how soon you should start work on the recovery and prioritize each task in a list. Detail what resources you will need, what tools may help, how much budget is necessary and what access to staff will be required. Set expectations by explaining how long it could take to recover from this core update.

Detail how you will monitor the website’s recovery progress, what metrics you will measure and what tools you will use to do this. Mention how many Google core updates are rolled out every year, when the next one is likely to happen and how you can avoid being penalized in the future.

Your client does not have a strong understanding of SEO, so explain the plan in an easy-to-follow format using headers and bullet points where necessary. Explain any terms they may not be familiar with. Provide recommendations to links and additional resources that may help them better understand why their site was affected so significantly by the core update.

(Context)

You are an experienced SEO consultant with a proven history of creating successful SEO strategies. You have helped many businesses boost their rankings within Google SERPs by following best practice guidelines and staying on top of the latest developments in Search.

Following Google’s March 2024 core update, one of the websites you oversee the SEO for has disappeared from Google SERPs. The client who owns the website has no understanding of what has happened. They want you to investigate why Google has penalized their website, what rules have changed, whether their website can recover, and if so, how.

(Task)

Create a plan to help your client’s website recover from the Google March 2024 core update. Explain what tools and resources you would use to investigate what has happened and what impact it has had on your client’s website performance. Explain to your client how much this could cost them in monetary value.

After you identify the cause of the problem, put together a strategy to help your client’s website start to recover from this update, stating how soon you should start work on the recovery and prioritize each task in a list. Detail what resources you will need, what tools may help, how much budget is necessary and what access to staff will be required. Set expectations by explaining how long it could take to recover from this core update.

Detail how you will monitor the website’s recovery progress, what metrics you will measure and what tools you will use to do this. Mention how many Google core updates are rolled out every year, when the next one is likely to happen and how you can avoid being penalized in the future.

(Format)

Your client does not have a strong understanding of SEO, so explain the plan in an easy-to-follow format using headers and bullet points where necessary.

(Tone)

Explain any terms they may not be familiar with. Provide recommendations to links and additional resources that may help them better understand why their site was affected so significantly by the core update.

Answer:

Recovering from the Google March 2024 Core Update:

1. Investigating the Issue:

- Utilize Google Search Console: Analyze the website’s performance data, including impressions, clicks, and average position, to identify any significant drops or changes.

- Conduct a Technical SEO Audit: Assess the website’s technical aspects, such as site speed, mobile-friendliness, crawlability, and indexability, using tools like Screaming Frog and Google PageSpeed Insights.

- Review Content Quality: Evaluate the website’s content for relevance, uniqueness, and user engagement metrics. Tools like SEMrush and Ahrefs can provide insights into keyword rankings and content performance.

2. Identify the cause:

- Analyze Algorithm Updates: Research the specific changes made in the March 2024 Core Update to understand the factors that may have affected your website.

- Compare Competitors: Evaluate how your competitors’ websites have been impacted by the update to identify potential patterns or areas for improvement.

3. Developing a Recovery Strategy:

- Prioritize Technical SEO Fixes: Address any technical issues identified during the audit, such as improving site speed, fixing broken links, and optimizing meta tags.

- Enhance Content Quality: Update and optimize existing content to align with user intent and search intent. Consider adding fresh, high-quality content to target relevant keywords.

- Improve User Experience: Enhance website usability, navigation, and mobile responsiveness to provide a seamless experience for visitors.

- Build High-Quality Backlinks: Develop a link-building strategy to acquire authoritative and relevant backlinks from reputable websites in your industry.

4. Timeline and Resource Allocation:

- Start Recovery Work Immediately: Begin implementing the recovery strategy as soon as possible to minimize the impact on your website’s visibility.

- Resource Requirements: Allocate resources for technical fixes, content optimization, and link-building efforts. Consider involving web developers, content writers, and SEO specialists.

- Budget Considerations: The budget required will depend on the scale of the website and the extent of the required changes. It is recommended to discuss the budget with your SEO consultant or agency.

5. Recovery Timeframe:

- Recovery Duration: The time required to recover from a core update can vary significantly. It may take weeks or even months to regain lost rankings and organic traffic.

- Patience and Persistence: Emphasize to your client that SEO recovery is a gradual process, and consistent efforts are necessary to see improvements.

6. Monitoring Progress:

- Track Key Metrics: Monitor organic traffic, keyword rankings, and conversion rates to gauge the effectiveness of the recovery strategy.

- Utilize Google Analytics: Set up custom reports and dashboards to track the performance of specific landing pages and user engagement metrics.

- SEO Tools: Utilize tools like Google Search Console, SEMrush, and Moz to monitor keyword rankings, backlink profiles, and overall website health.

7. Future Prevention:

- Stay Informed: Keep up-to-date with industry news and Google’s official announcements to anticipate future algorithm updates.

- Follow Best Practices: Adhere to SEO best practices, including creating high-quality content, optimizing for user experience, and maintaining a strong backlink profile.

- Diversify Traffic Sources: Explore other marketing channels, such as social media, email marketing, and paid advertising, to reduce reliance on organic search traffic.

8. Additional Resources:

- Google Search Console Help Center: https://support.google.com/webmasters/answer/7451184

- Moz Beginner’s Guide to SEO: https://moz.com/beginners-guide-to-seo

- Search Engine Land: https://searchengineland.com/
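The "Investigating the Issue" step in the answer above, and the prompt's request to put a monetary value on the damage, can be made concrete with a small before/after comparison. This is a minimal sketch, assuming you have exported per-page clicks from the Search Console performance report for two equal-length windows (one before the update, one after). The page paths, click counts, conversion rate and order value are hypothetical illustrative figures, and the revenue formula is a rough estimate rather than an established standard.

```python
def click_changes(before, after):
    """Return (page, clicks_before, clicks_after, pct_change), biggest loss first.

    `before` and `after` map page path -> clicks for equal-length date windows.
    """
    rows = []
    for page in sorted(set(before) | set(after)):
        b = before.get(page, 0)
        a = after.get(page, 0)
        # Percentage change is undefined when the page had no clicks before.
        pct = None if b == 0 else round((a - b) / b * 100, 1)
        rows.append((page, b, a, pct))
    # Sort by absolute click change so the largest losses come first.
    rows.sort(key=lambda row: row[2] - row[1])
    return rows


def estimated_lost_revenue(before, after, conversion_rate, avg_order_value):
    """Rough estimate: lost clicks x conversion rate x average order value."""
    lost_clicks = max(sum(before.values()) - sum(after.values()), 0)
    return lost_clicks * conversion_rate * avg_order_value


# Hypothetical figures for illustration only.
before = {"/pricing": 400, "/blog/guide": 1000, "/about": 50}
after = {"/pricing": 380, "/blog/guide": 200, "/about": 55}

for page, b, a, pct in click_changes(before, after):
    print(f"{page}: {b} -> {a} clicks ({pct}%)")

print(estimated_lost_revenue(before, after, conversion_rate=0.02, avg_order_value=50.0))
```

Sorting by absolute click loss rather than percentage keeps low-traffic pages from dominating the list, which usually matches where recovery work should start.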

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing

Category seo news | Tags:


Google accused of anti-competitive tactics by Japanese regulator

Written on April 23, 2024 at 11:47 pm, by admin

Google allegedly prevented Yahoo Japan from accessing crucial technology required to generate targeted ad revenue from mobile searches from 2015 to 2022, according to Japan’s Fair Trade Commission (FTC).

Google only changed its behavior after the FTC flagged the issues. Google then committed to giving Yahoo Japan access to keyword-tied targeted advertising technology, Bloomberg reported.

Why we care. Any practices that would impact an advertiser’s choice or limit access to targeting technologies can lead to increased advertising costs.

Precedent. This is the FTC’s first-ever administrative action against Alphabet, albeit without imposing any penalty.

What next? The Japanese watchdog will closely monitor the situation and retains the right to reopen its investigation into Google. It is also investigating Alphabet’s potential influence on local smartphone makers to prioritize its search services on their devices, further intensifying scrutiny of the tech behemoth’s practices.

Similar accusations. Meanwhile, in the U.S., we’re still waiting to hear the verdict of the U.S. vs. Google antitrust trial. Part of the trial focused on whether Google used its search ad tools to put its competitors at an unfair disadvantage.

Dig deeper in U.S. vs. Google antitrust trial: Everything you need to know.


TikTok SEO: The ultimate guide

Written on April 23, 2024 at 11:47 pm, by admin

TikTok is no longer just an entertainment platform – it has rapidly evolved into a powerful search engine, particularly for the younger generation. With a staggering 74% of Gen Z using TikTok as their go-to search platform in 2024, brands can no longer afford to ignore it.

This comprehensive guide delves into the intricacies of optimizing for TikTok, exploring strategies, tools and best practices to help you enhance your visibility, engage your target audience and ultimately drive success on this rapidly growing platform.

Why TikTok is threatening Google’s supremacy

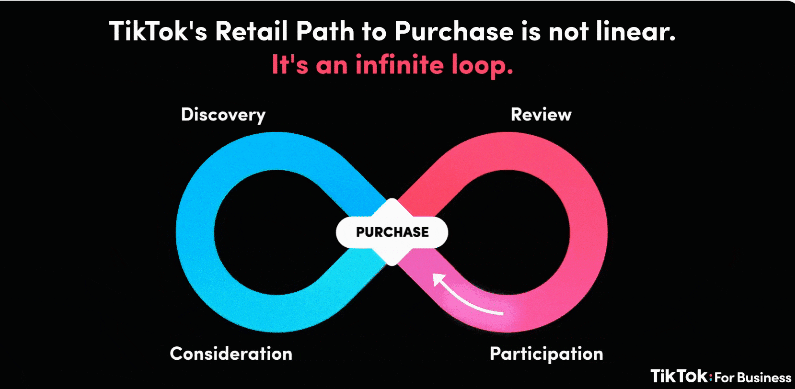

TikTok is changing the buyer journey. Instead of a linear consumer path, TikTok has created a loop of videos where consumers watch and purchase. And because it’s rooted in entertainment, brand discovery is easier and more fun.

Users are looking for the things they’d search for on Google, but staying in the app much longer than they’d stay on Google because of that entertainment factor. On TikTok, brands fit seamlessly into the fun videos people watch, making it easier for them to discover.

As SEO professionals, figuring out how to help brands stand out in this new landscape is key to effectively targeting the younger generation.

Dig deeper: Is TikTok a search engine? Why meeting searchers’ needs matters more than semantics

The anatomy of the TikTok ‘For You’ page

Almost all video views come from the “For You” page (FYP) on TikTok. Understanding its functionality is vital to social SEO success.

The search bar at the top lets users type in any query they’re looking for. Once you click into a profile or search for a video in the “For You” page search bar, it will display recommended searches based on what videos a user may be interested in.

How to optimize for TikTok search

SEO strategies for Google and TikTok share some similarities but also have notable differences. Both emphasize the importance of creating informative and helpful content tailored to the target audience’s search intent, weaving relevant keywords into the content and consistently producing quality content following platform best practices.

However, one significant difference is that previous brand success doesn’t heavily influence rankings on social platforms like Instagram and TikTok, unlike Google, where brand authority plays a significant role.

The low barrier to entry in social media algorithms may lead to quicker success for brands in social SEO than traditional Google Search.

While social algorithms vary and don’t adhere to a one-size-fits-all rule, there are still factors that marketers can control to optimize their content. These include:

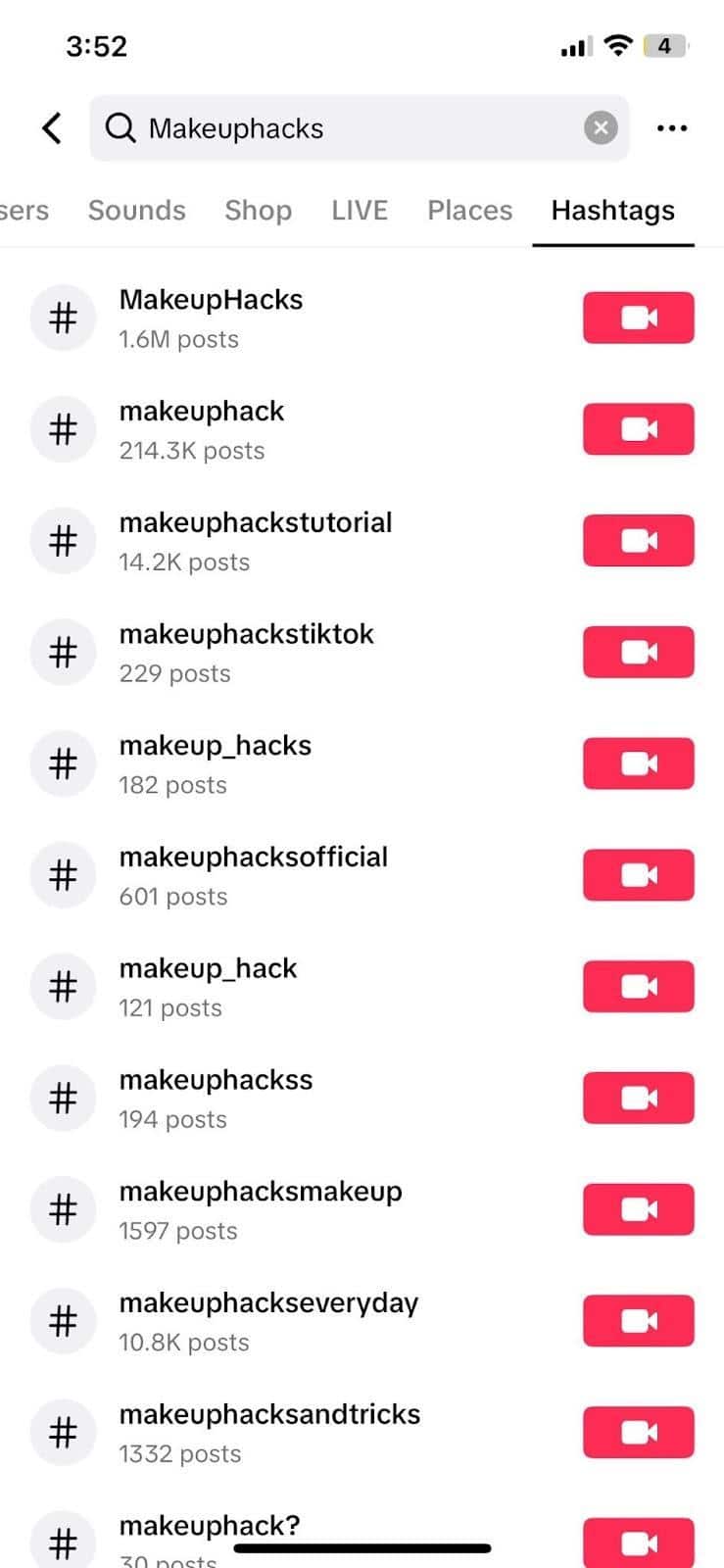

Hashtags

Hashtag optimization plays a crucial role, particularly for D2C brands seeking to enhance their online presence, as it provides an avenue to incorporate essential keywords indicative of purchase intent.

Experimenting with different hashtag types and lengths is an effective strategy to discern which hashtags resonate best with each unique brand. Here are some examples of hashtags that signify purchase intent:

- #[product type]review

- #best[product type]

- #[product type]hacks
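The three templates above can be filled in programmatically when you want to test variations across several products. A minimal sketch: the function name and the example product are hypothetical, and the only rule applied is that a hashtag cannot contain spaces or punctuation.

```python
def purchase_intent_hashtags(product_type):
    """Fill the review / best / hacks templates for one product type."""
    # Hashtags cannot contain spaces or punctuation, so keep letters and digits only.
    slug = "".join(ch for ch in product_type.lower() if ch.isalnum())
    return [f"#{slug}review", f"#best{slug}", f"#{slug}hacks"]


print(purchase_intent_hashtags("Bronzer Stick"))
# ['#bronzerstickreview', '#bestbronzerstick', '#bronzerstickhacks']
```

The generated tags are only candidates. Whether a given tag actually has an audience still needs to be checked on the platform.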

Keywords

Similar to hashtags, keyword incorporation plays a crucial role in conveying the purpose of your content to the TikTok algorithm.

You can incorporate keywords into your content, including video descriptions, verbal mentions, captions and voiceovers. Since video descriptions are much shorter than blog posts, keeping them clear and concise is important.

Make sure to avoid keyword stuffing and instead keep the description succinct and to the point.

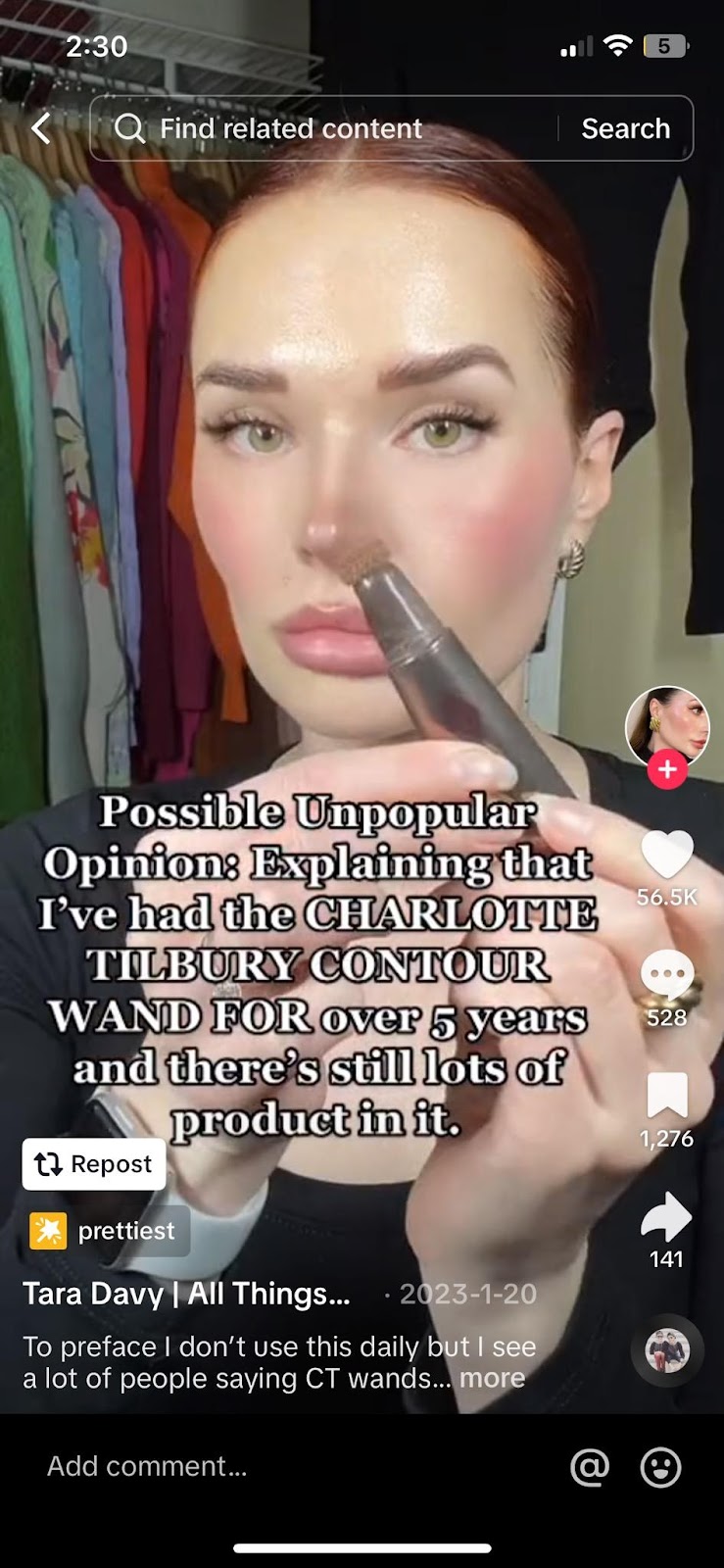

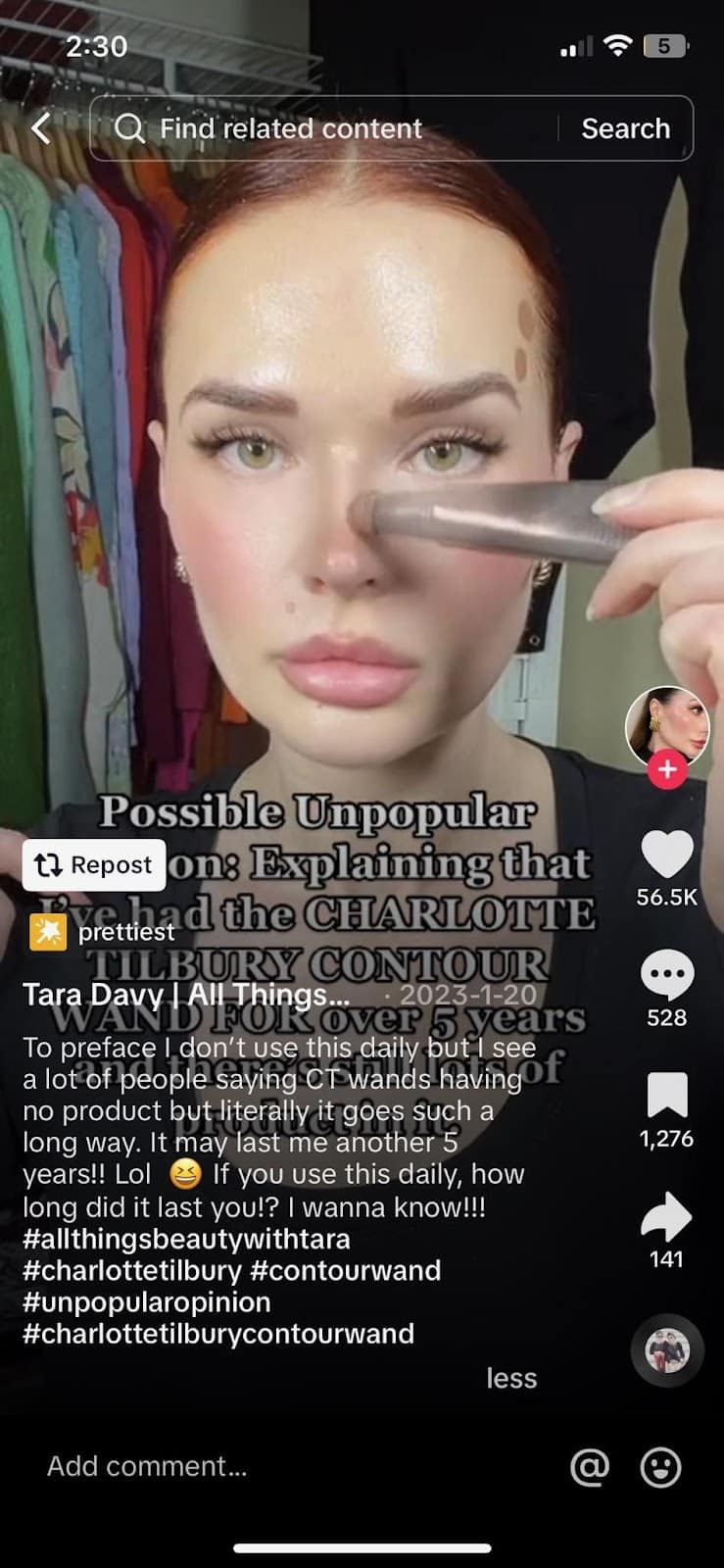

The combination of keywords in the text overlay and the description in these video screenshots is a great example of strategic keyword placement.

Talking about a keyword in a TikTok video is important because mentioning it verbally reinforces your video’s relevance, making it more likely to be shown to users interested in that topic. The same goes for adding keywords to video captions and voiceovers.

Content

Using popular sounds, filters and ideas can help your TikTok videos get on the For You page faster. TikTok shows you what’s trending so you can join in and boost your chances of getting noticed.

But remember, TikTok videos can stay relevant for a long time and can rank for years, so it’s smart to balance trends out with plenty of evergreen content that will still be relevant when the trend is long gone.

Engagement

The way a video is shot and edited plays a significant role in influencing engagement on TikTok. Additionally, incorporating effective calls to action, such as encouraging viewers to leave comments or share the video, can heavily impact engagement levels.

However, it’s essential to balance fostering genuine engagement and solely aiming to accumulate comments since TikTok favors comments that add value to videos. Authentic interactions and creating opportunities for meaningful conversations are paramount for maximizing engagement success.

Optimizing video length is another crucial factor for enhancing engagement on TikTok since the platform’s users have short attention spans. As a general guideline, users’ attention spans on TikTok tend to mirror their age.

For instance, a 30-year-old user typically has a 30-second attention span, while an 18-year-old user may only engage for about 18 seconds. Therefore, creators should tailor their content to align with these attention spans to maintain viewer interest and maximize engagement.

Thumbnails

Thumbnail optimization on TikTok is vital because the thumbnail is the first thing users see when scrolling through search results.

A compelling thumbnail can capture attention and attract clicks, increasing visibility and engagement. So, creating a thumbnail that effectively draws in a consumer is key.

Andrew Halfman, the Director of Social Media at Stella Rising, also recommends bulking up the thumbnail section “to ensure the thumbnail has strong text overlay on it – indicating a preview of what the video is about.”

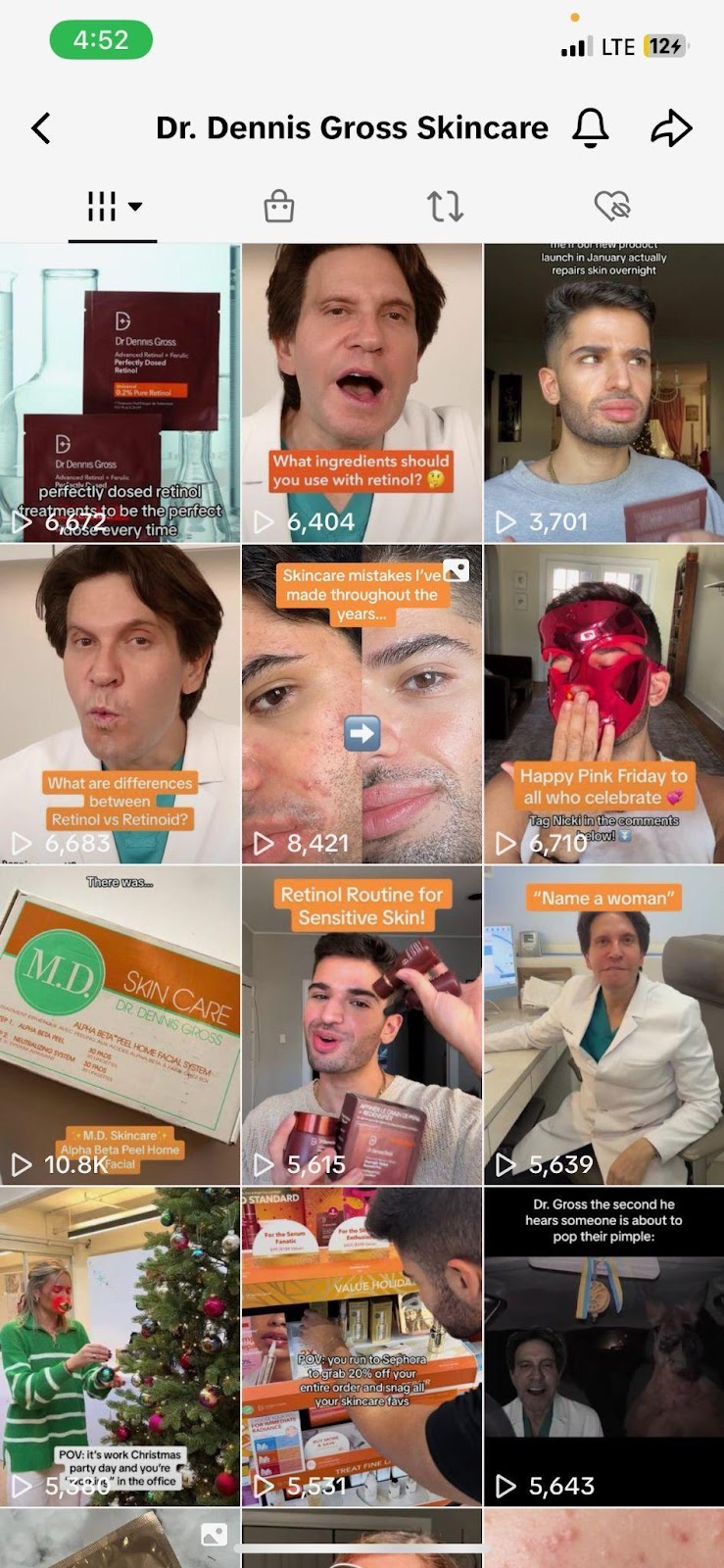

Dr. Dennis Gross Skincare’s TikTok account does a great job of optimizing thumbnails and effectively communicating the topics covered in each video to users.

How to measure SEO performance on TikTok

Measuring SEO performance on TikTok involves tracking key metrics like personalized KPIs, keyword ranking, audience demographics and engagement rates.

Monitoring these metrics helps assess visibility, audience interaction and content effectiveness, allowing for optimization and goal achievement.

Defining your KPIs

KPIs are individual to each brand, so pick which ones are most relevant to yours and begin tracking them. Some great KPIs to start with include conversion rates, engagement rates, website traffic and revenue growth.

It’s important to note that the brand KPIs will vary based on business objectives, so this performance measure isn’t a one-size-fits-all.

Use the TikTok search bar to determine keyword ranking

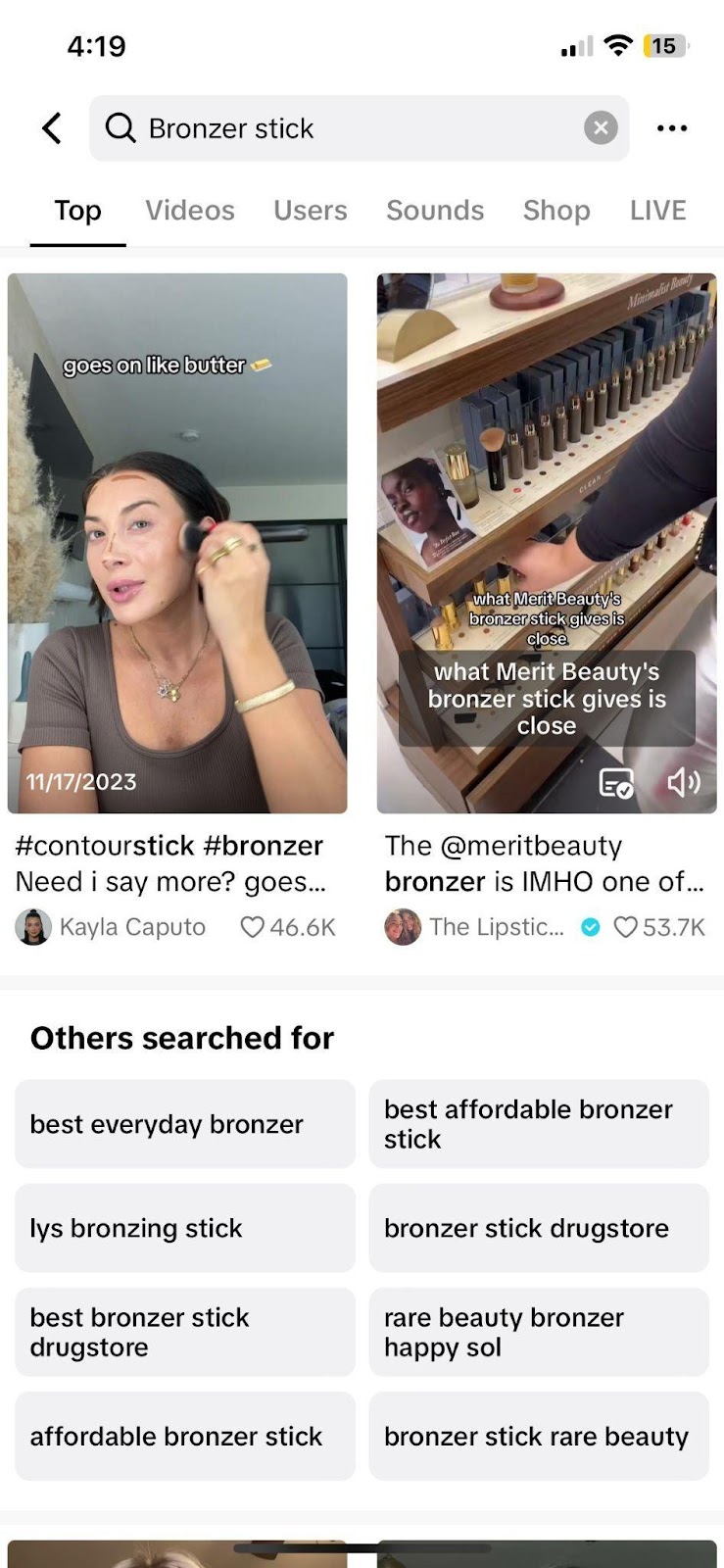

Using the TikTok search bar allows businesses to measure keyword ranking by observing where their content appears in search results relative to specific keywords or search terms.

In the screenshot, you can see a video about Merit Beauty ranking second for the search query “bronzer stick.”
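Because this check is done by hand, it helps to record what you see in a consistent way so positions can be compared from week to week. A minimal sketch, assuming you note the account handle of each result in the order it appears for a query. The handles are hypothetical, and nothing here calls a TikTok API.

```python
def search_position(results, handle):
    """Return the 1-based position of the first result posted by `handle`, or None."""
    for position, author in enumerate(results, start=1):
        if author.lower() == handle.lower():
            return position
    return None


# Handles noted by hand, top to bottom, for one query (hypothetical).
observed = ["@creator_a", "@yourbrand", "@creator_b"]
print(search_position(observed, "@YourBrand"))
```

Search results on social platforms can be personalized, so a position recorded from one account is a rough indicator rather than a fixed ranking.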

Analyze your audience to ensure you’re reaching your target consumers

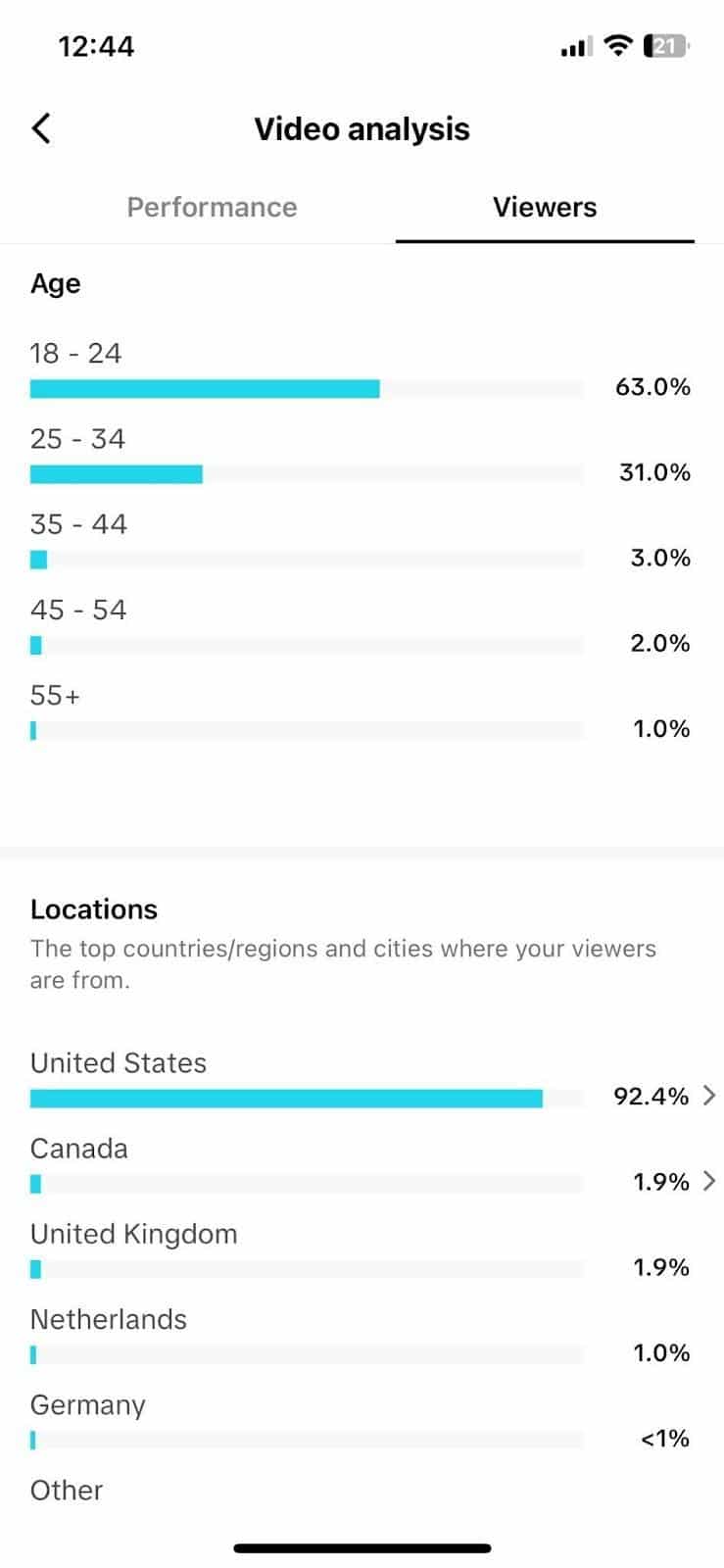

Access the Viewers tab by clicking More insights at the bottom of your video and then clicking Viewers at the top right side of the screen.

This tab provides information about your audience, such as whether they’re following you or are discovering your account for the first time. You can also see audience demographic information like gender, age range and location.

By noting how this information matches up with information about your target audience, you can determine whether your strategy is reaching the right consumers.

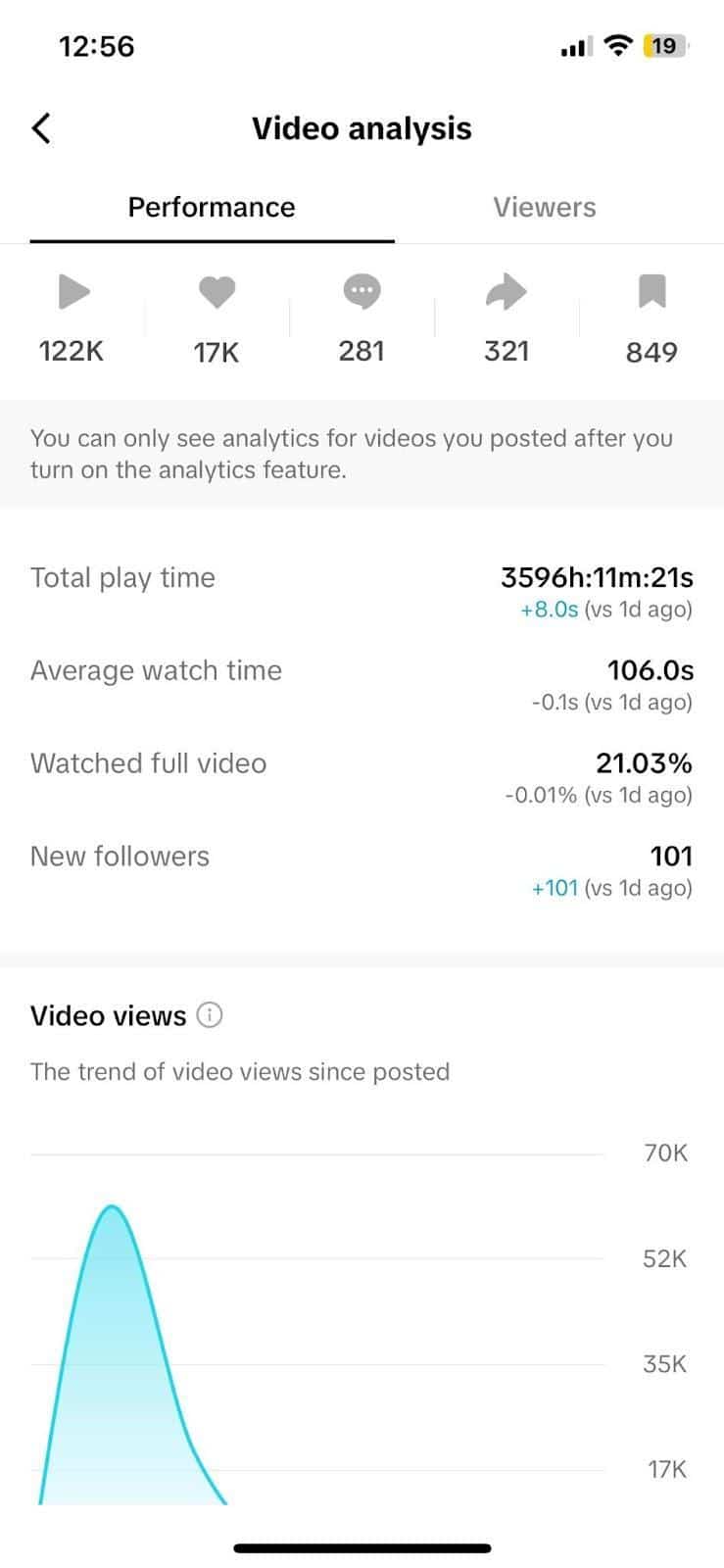

Analyze engagement rate with the ‘Performance’ tab

Access the Performance tab by clicking More insights at the bottom of your video and then clicking Performance at the top left side of the screen.

This tab provides data on user interaction with a video, including metrics such as new followers, average watch time, likes, shares and retention rate.

This tab is also very useful for helping you figure out what kinds of videos keep your audience’s attention. Metrics for average watch time and the percentage of viewers who made it through the full video are available.
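If you copy the Performance tab figures into a spreadsheet or script, two simple ratios make videos of different sizes comparable. A minimal sketch: the field names and numbers are hypothetical, and the engagement rate formula (likes, comments and shares divided by views) is one common convention, not a definition taken from TikTok.

```python
from dataclasses import dataclass


@dataclass
class VideoStats:
    views: int
    likes: int
    comments: int
    shares: int
    avg_watch_seconds: float
    length_seconds: float


def engagement_rate(stats):
    """Interactions per view, using one common (assumed) convention."""
    if stats.views == 0:
        return 0.0
    return (stats.likes + stats.comments + stats.shares) / stats.views


def watch_through_ratio(stats):
    """Average watch time as a share of the video's length, capped at 1.0."""
    if stats.length_seconds == 0:
        return 0.0
    return min(stats.avg_watch_seconds / stats.length_seconds, 1.0)


# Hypothetical figures for a single video.
video = VideoStats(views=10_000, likes=800, comments=150, shares=50,
                   avg_watch_seconds=12.0, length_seconds=30.0)
print(f"engagement rate: {engagement_rate(video):.1%}")
print(f"watch-through ratio: {watch_through_ratio(video):.1%}")
```

Comparing these ratios across videos is a quick way to see which formats hold attention, independent of how many views each video received.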

SEO tools for TikTok

Although SEO for TikTok is a relatively new concept, there are tools available to simplify optimizations.

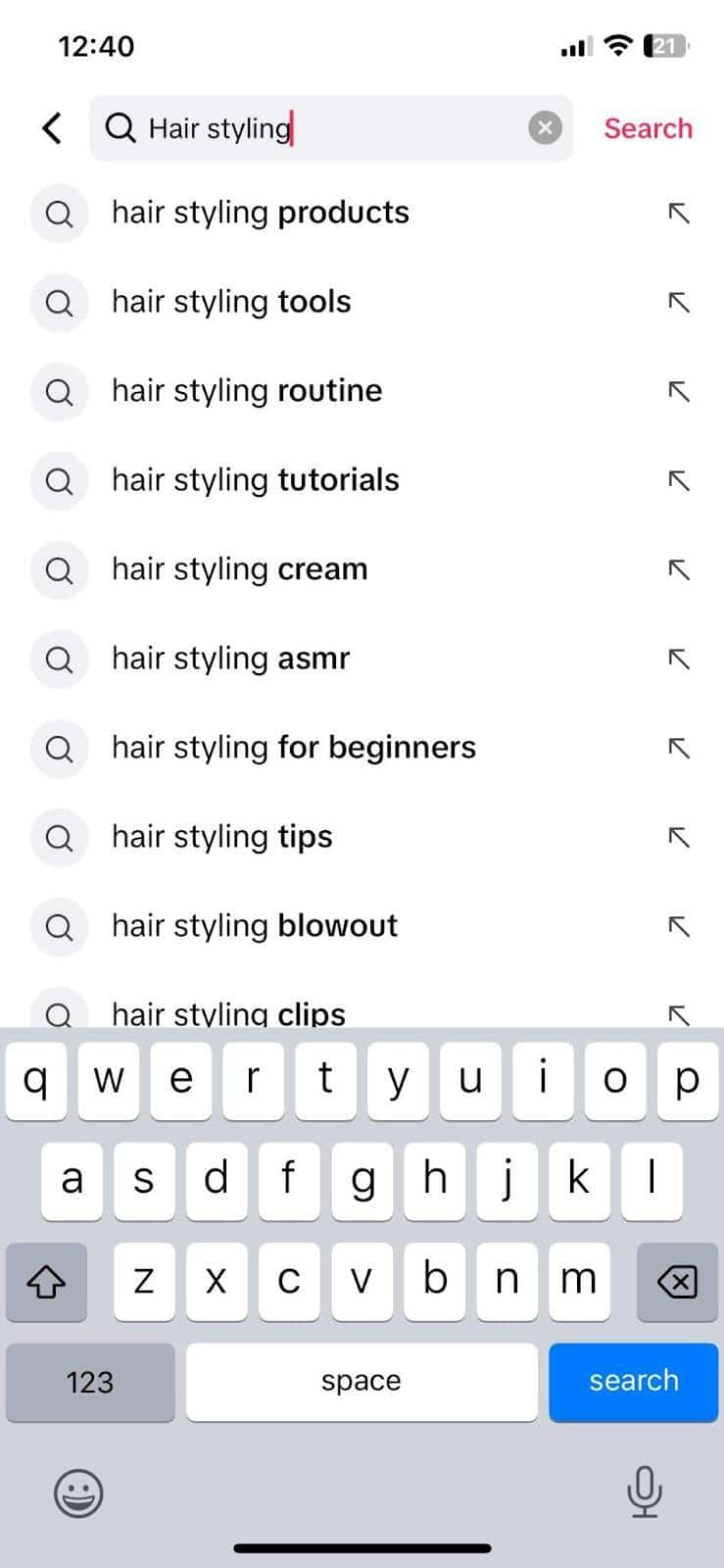

Keyword research

Several tools, such as the Predictive Search feature and the Keyword Insights tool, assist with keyword research on TikTok.

The Predictive Search feature allows users to see which long-tail phrases containing their target phrase are most popular. Accessing this feature is simple: you only have to type in a few words and wait for the predictive search to generate.

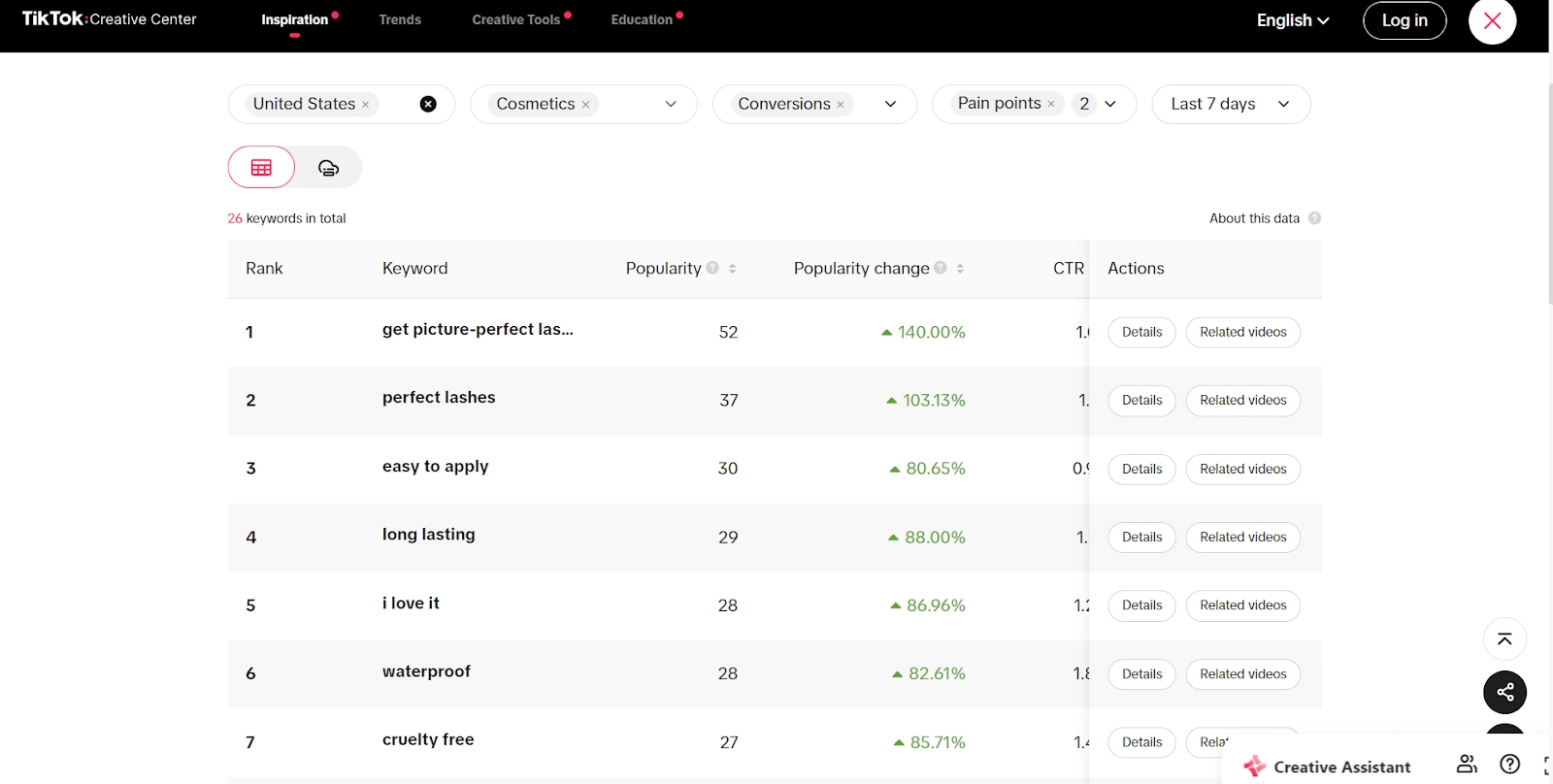

TikTok’s Keyword Insights tool, located in the TikTok Creative Center, also provides data on keyword popularity, trends and performance.

By entering a keyword, you can access insights about engagement metrics, video content on the platform about a given topic, associated hashtags, trending related topics and demographic insights, which can help inform content strategy and audience targeting.

Using the TikTok Creative Center to optimize

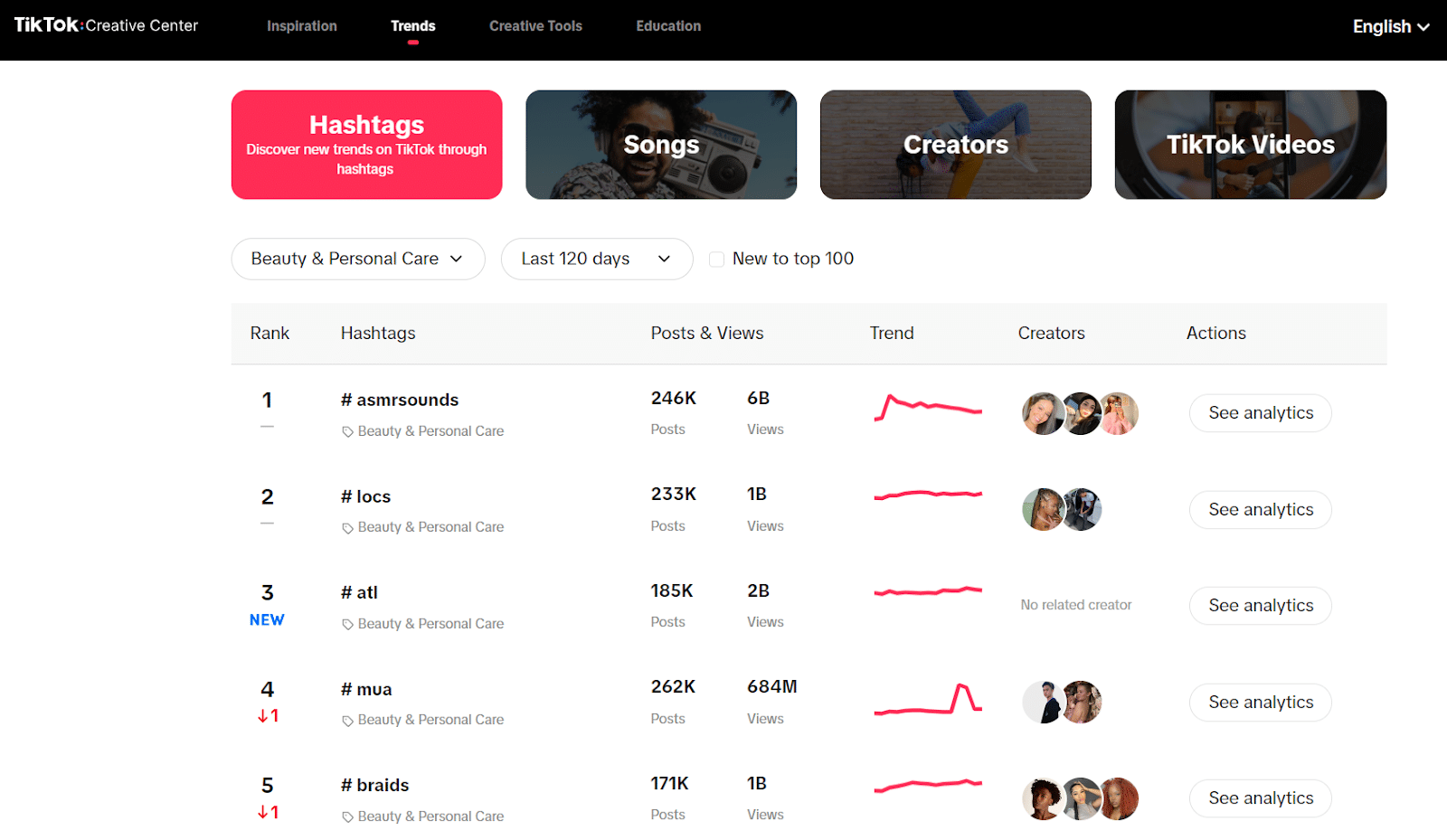

The best optimization tool for TikTok is TikTok itself. The TikTok Creative Center is the number one place to go to find trends, conduct keyword research and learn about the most up-to-date creative best practices.

The trends dropdown in the top navigation bar shows users what hashtags, songs, creators and videos are most popular at a given time.

Once you’re in the trends section of the Creative Center, you can choose to browse by hashtags, songs, creators or videos. You can also filter by industry and time period.

This tool is extremely useful for trend and hashtag optimization since it provides insight into the popularity of these video aspects.

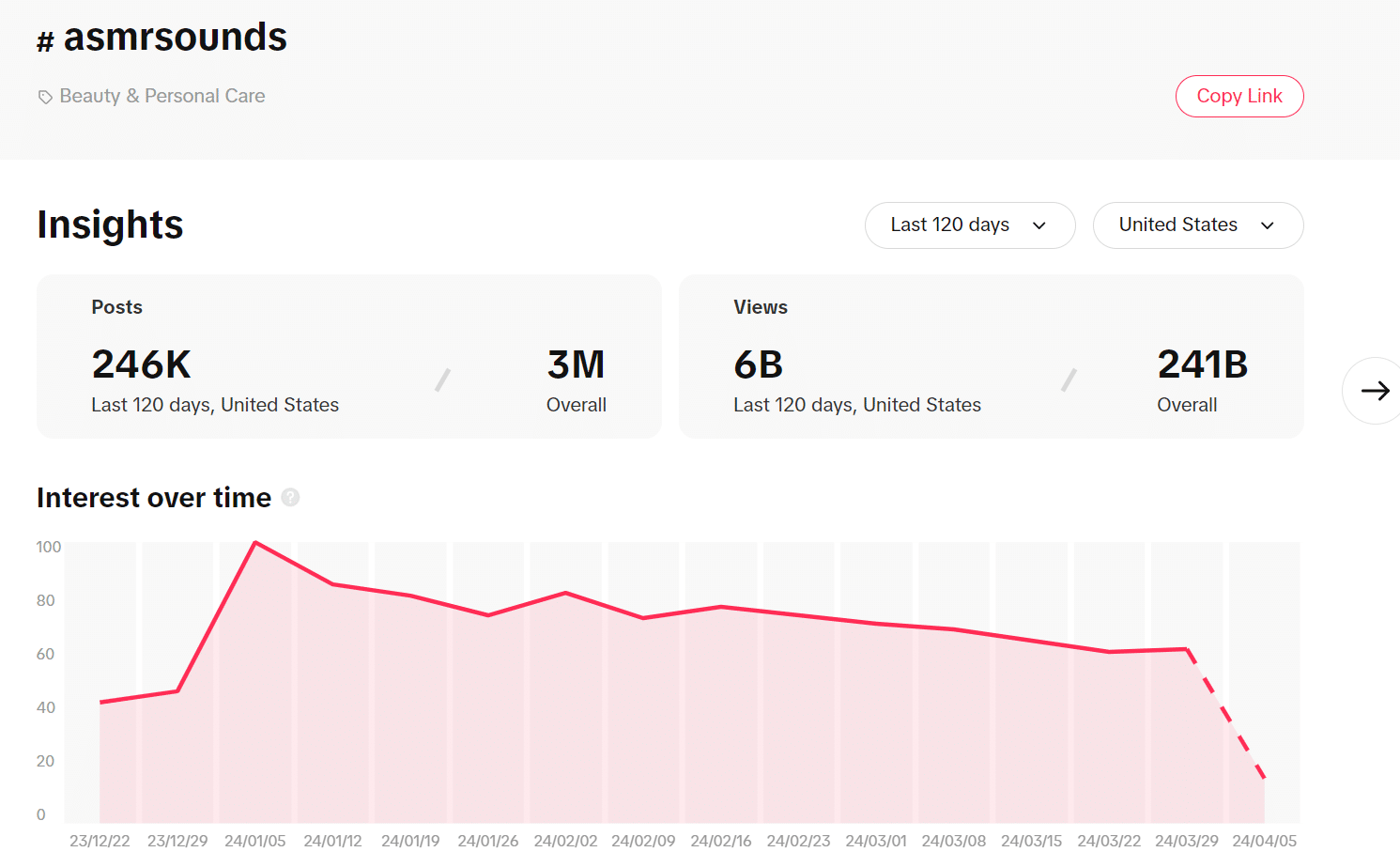

When you click on the See analytics tab in the Creative Center, you’ll be taken to a page that provides information similar to Google Trends.

The TikTok Creative Center shows how many posts are being created for each hashtag, how many views the hashtag is getting and interest in the hashtag over time.

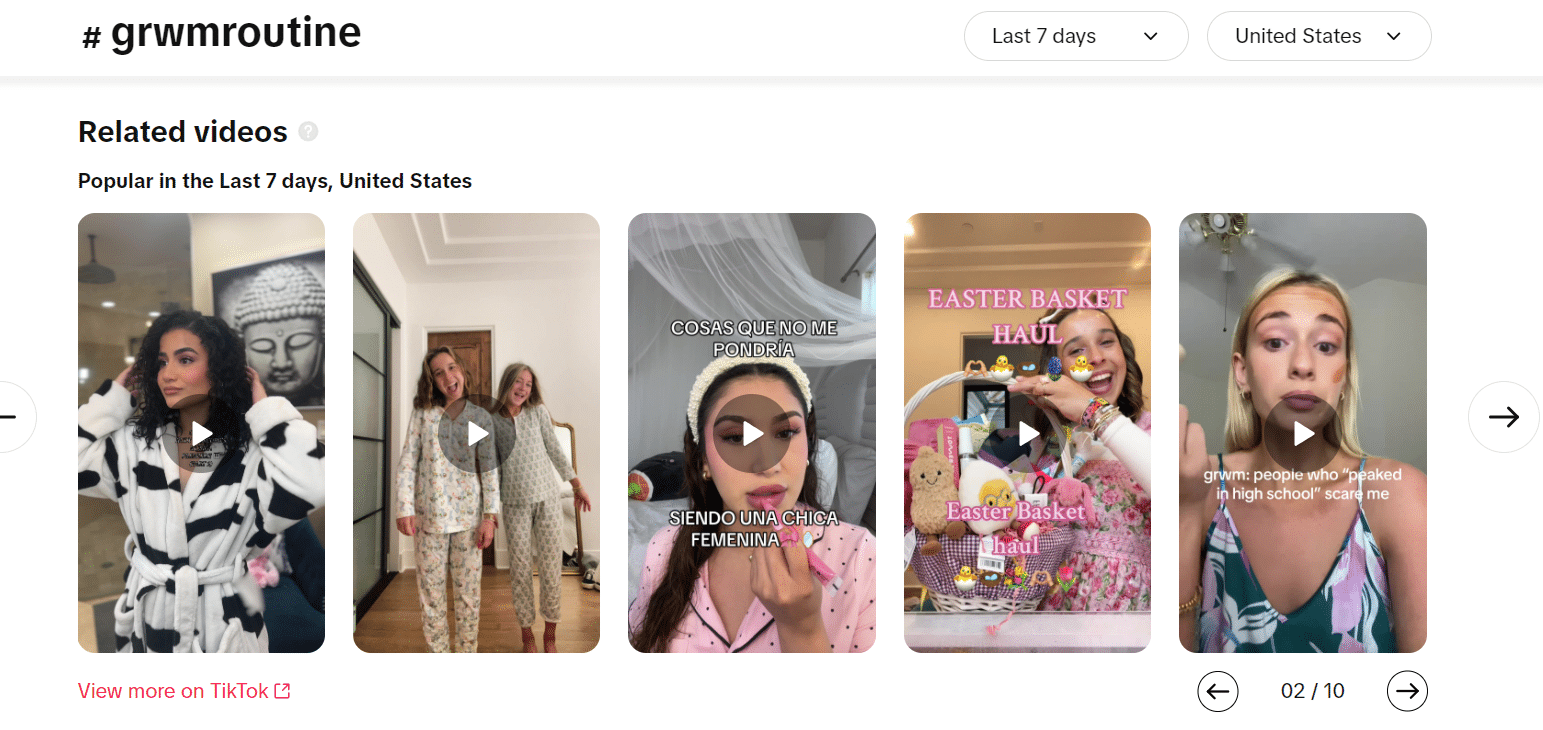

The TikTok Creative Center also shows related videos, which can be extremely helpful for content ideation on the platform.

In addition to trend popularity insights and related videos, the tool provides information about audience demographics and their related interests.

TikTok doesn’t have as many optimization tools as traditional search engines. However, the Creative Center does a good job of providing information that can be critical to improving organic visibility on the platform.

Measurement and reporting tools

Although TikTok doesn’t have nearly as many measurement and tracking tools as Google, creators and businesses can use TikTok Analytics through Business Suite to see how their content is performing and who’s watching it.

Within the Business Suite dashboard, you can seamlessly navigate to the Analytics section, where a wealth of performance metrics awaits.

From video views and likes to shares, comments and follower growth, every facet of account engagement is meticulously tracked and presented for analysis.

The platform also provides in-depth demographic data, furnishing crucial insights into your audience’s age, gender, location and interests.

Armed with these analytics, you can build a clearer picture of your TikTok audience dynamics, enabling you to fine-tune content strategies and foster heightened engagement on the platform.

Gain an edge with TikTok SEO

As SEO for TikTok becomes more prominent, more information on how to optimize will become available.

Even though limited resources are available at the moment, it’s important to optimize where everyone is searching – and that’s no longer just Google. You are left with a choice: adapt or get left behind.


6 vital lenses for effective keyword research

Written on April 23, 2024 at 11:47 pm, by admin

More keyword data isn’t your problem.

No, what’s holding you back has nothing to do with your advanced ability to slice and dice keyword data dumps.

Data dumps on their own won’t help you; they’ll probably only hurt you.

Here’s why, how this mindset routinely sabotages keyword research and how to fix it.

Why keyword research is hard (and misunderstood)

Anyone anywhere can fire up a keyword research tool and export a giant list of “relevant” keywords. That’s not the problem.

The problem is that this haphazard, spray-and-pray approach doesn’t work. Not when your competitors (both direct and, more importantly, indirect ones) actually know what they’re doing in competitive spaces.

In other words, your problem isn’t sifting through a ton of random keyword ideas, using arbitrary (and misleading) filters like keyword difficulty (KD) to sort which ones are a “good” target vs. a “bad” one.

My friend Ben Goodey helped illustrate the problem with keyword research in a recent LinkedIn post:

“Most keyword research “spreadsheet dumps” people make are NOT useful.

Easy keyword research:

– Full dump of entire set of keywords in “your industry”.

– Difficulty and volume appended.

– Clustered into related topics.

Useful keyword research:

– Keywords you can *actually* win based on your current authority

– Keywords that show YOUR buyer is looking for YOUR product

– Keywords where competing content is weak

Takes time and experience. It’s slow.

But it’s where the magic *actually* happens.”

See, SEO is part art, part science.

Yes, it can be technically complex at times. But for the most part, it’s more similar to weight loss:

What’s hard is the consistent execution, and not the actual theory or strategy (eat less; work out more).

So yes, pivot tables can be helpful, especially when you’re working with tons of data points.

But they’re only helpful to a point.

Because the best keyword research strategies actually blend multiple different types of subjective analysis at the same time, including:

- The likelihood or ability to actually rank, for…

- Business-generating topics, within a…

- Realistic time frame given your goals/objectives, considering…

- The competitive landscape you’re up against versus…

- Your site’s current inherent strengths and…

- The resources you’re able to allocate during these pre-defined time periods.

In a world of sophisticated SEO, over-engineered pivot tables, fancy formulas and the like basically become more fake busy work.

Let’s step through these six views or “lenses” so you can properly analyze keywords from different angles that are a better predictor for success.

1. The likelihood of actually ranking

This first one sounds trite but often isn’t in practice.

Yes, you should be targeting the biggest and best commercial terms in your space. But that brings us back to the age-old SEO Catch-22:

- The “best” keywords are often the most competitive.

- This means they’re also going to take the longest to rank for (so, you won’t see any results anytime soon).

If you’re ready and willing and able to stomach years of no results, then go for it!

Otherwise, if bosses or peers or clients are going to be breathing down your neck six months from now (read: most, if not all, of them), you’re gonna need to re-adjust your sights.

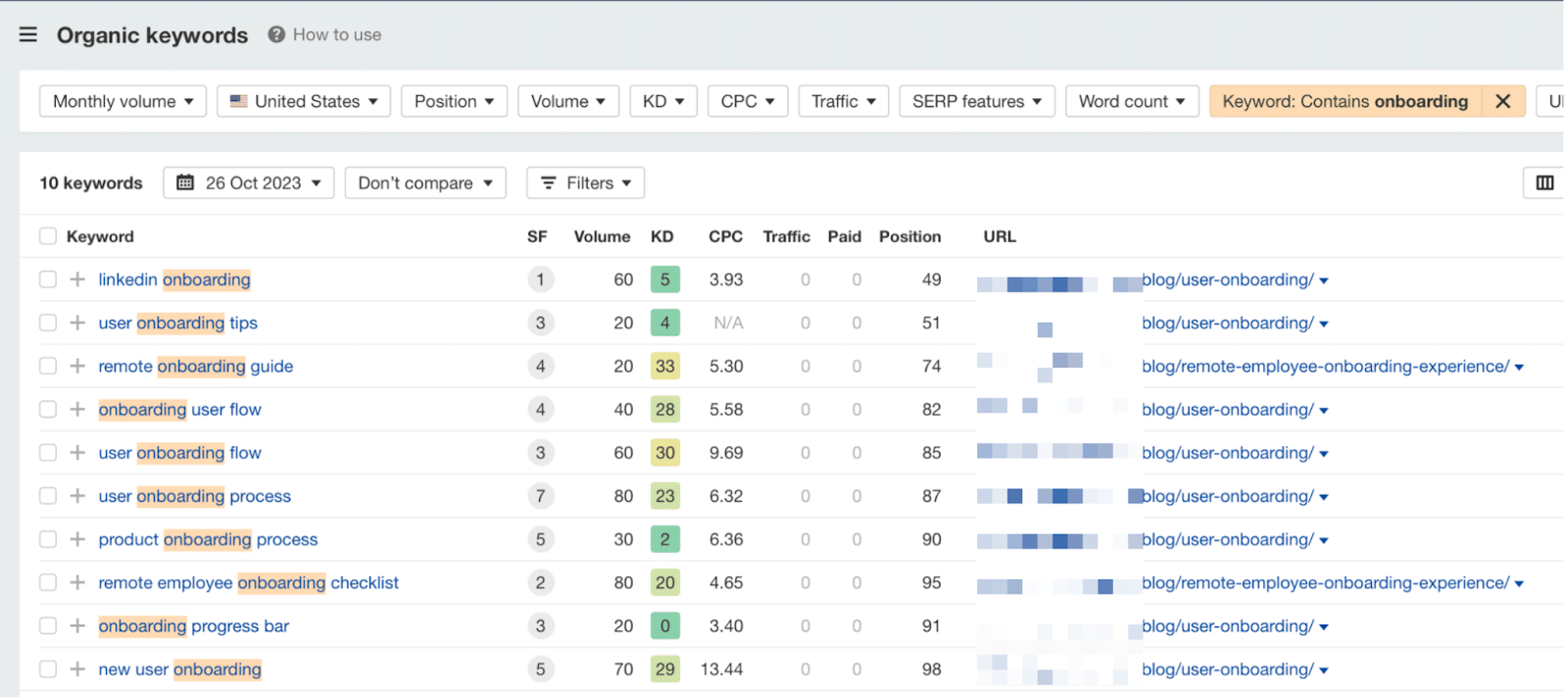

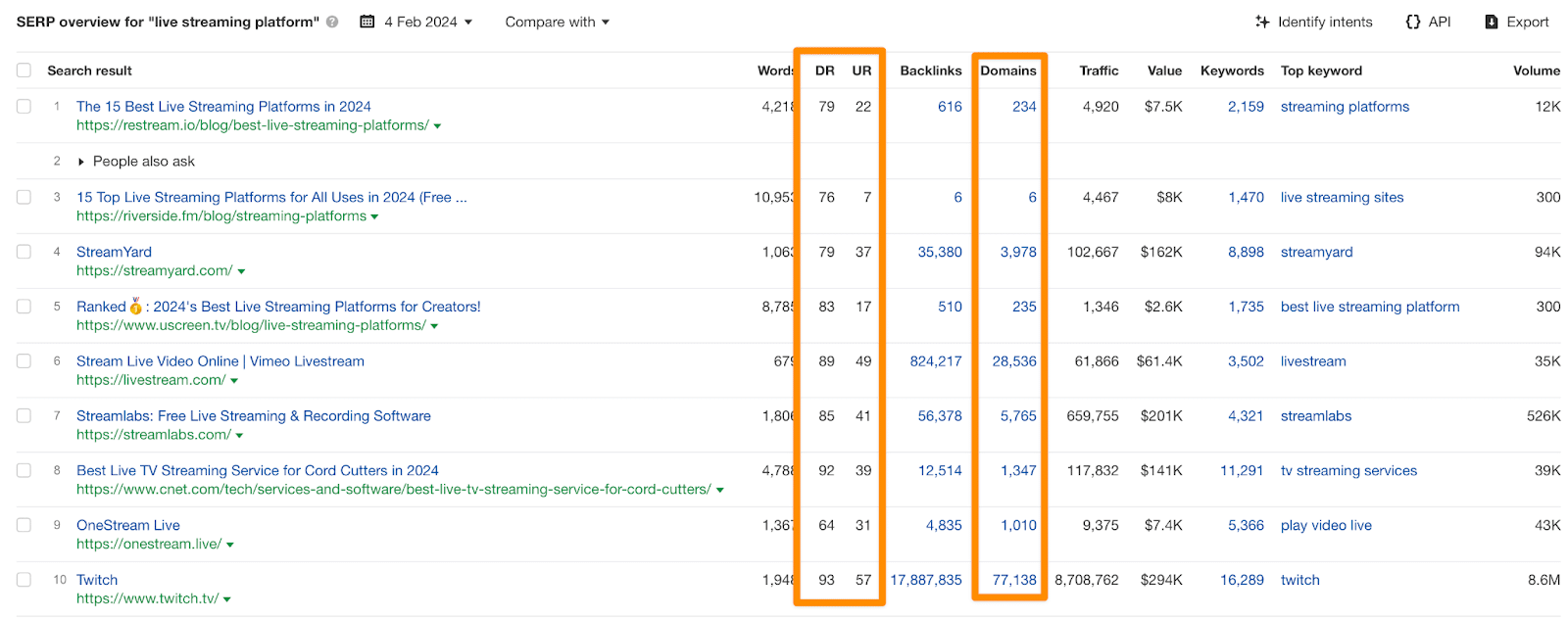

For instance, feast your eyes on this awesome “live streaming platform” keyword:

Yikes. “Super hard” keyword difficulty (KD) isn’t great.

(It’s not all bad per se; keep reading below to see why KD often lies).

However, unless your domain rating already reads ~80-90+, you’re looking at 12+ months at the earliest for any top page (let alone top five) rankings.

So. You adjust your sights – out and down!

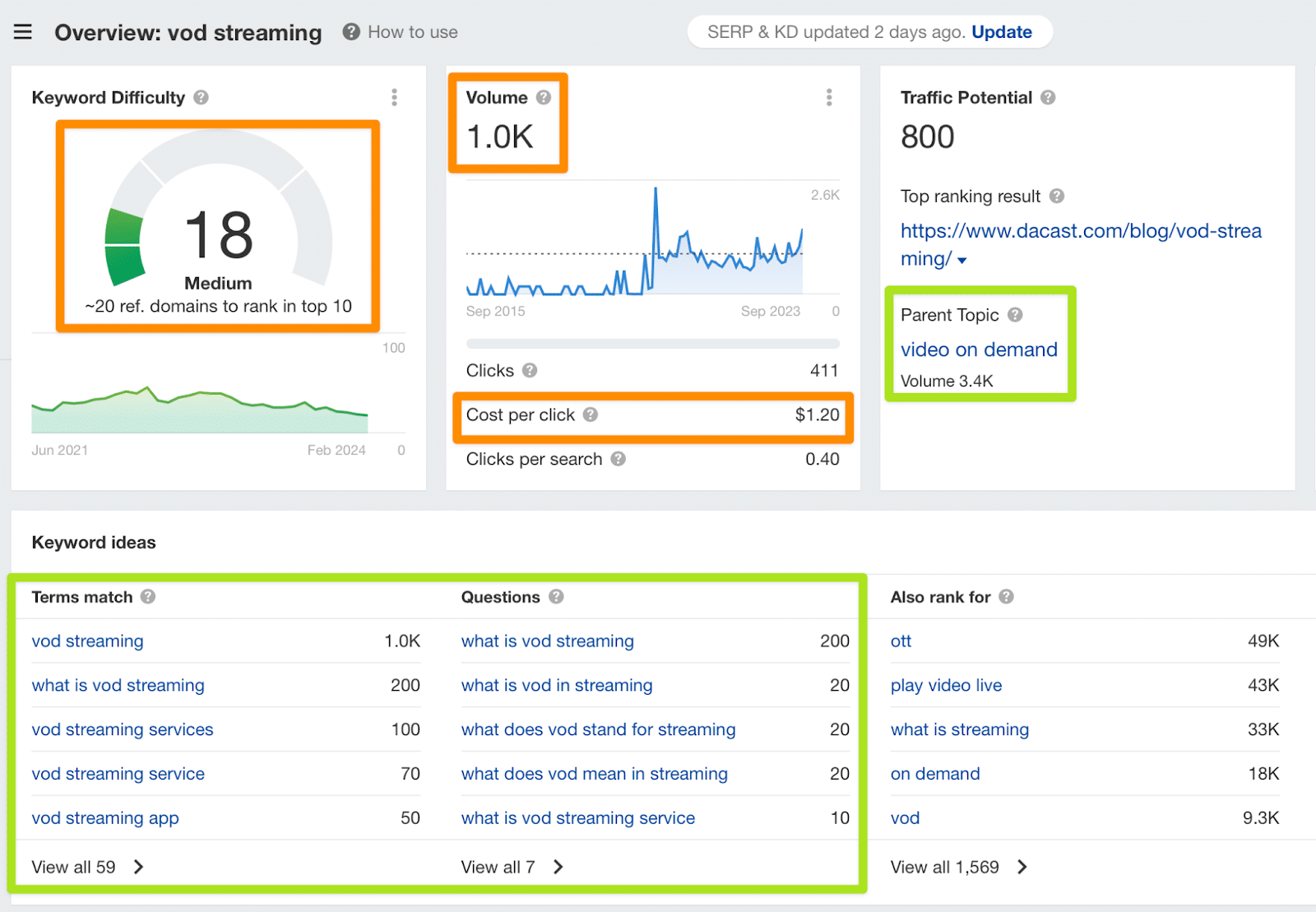

What’s a similarly relevant keyword that you can rank for in under 12 months to help “balance the books” while you’re waiting to rank for this more competitive target?

You look for similar underlying intent and relevance but one that’s much more of a short-to-medium-term goal than a long-term one.

Like this:

No, you probably still won’t rank in the top 5 for this out of the gate. But the related long-tail variations are even more promising.

So, with the right approach, you’re able to:

- Pick up the long-tail variations in the short term (0-6 months).

- Get this “VOD streaming” pillar within ~6-12 months.

- Leverage this dominance to go back for “live streaming platform” 12+ months from now.

In other words, your keyword research + topic selection + content strategy should be more holistic.

One cluster sets up another, which sets up another, which sets up another.

No pivot tables required – only long-term thinking and strategizing.
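The "one cluster sets up another" idea is really just an ordering of dependencies, which can be written down in a few lines if you want to keep the roadmap explicit. A minimal sketch using the standard library's `graphlib` (Python 3.9+). The cluster names come from the example above, except the long-tail label, which is a hypothetical placeholder.

```python
from graphlib import TopologicalSorter

# Each cluster maps to the clusters that should be won first.
depends_on = {
    "VOD streaming": {"VOD streaming long-tail variations"},
    "live streaming platform": {"VOD streaming"},
}

# static_order() yields prerequisites before the clusters that rely on them.
roadmap = list(TopologicalSorter(depends_on).static_order())

for step, cluster in enumerate(roadmap, start=1):
    print(f"{step}. {cluster}")
```

The value is in deciding the dependencies, not in the code. The ordering only makes that strategic judgment visible to whoever plans the content calendar.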

Dig deeper: The trifecta of keyword research strategy: Volume, difficulty, intent

2. Business-generating topics

Ranking for all the top-of-the-funnel keywords in your space is a great start.

It might be enough for B2C companies, depending on the rest of their customer acquisition channels and options.

But, those terms alone often don’t move the needle for long, drawn-out, complex sales cycles.

Translation: Using AI to pump out all the crappy content in the world isn’t actually going to drive you new business.

If anything, the substandard quality will likely erode the trust and credibility you’ll need to drive five-plus-figure ARPUs.

That’s why B2B brands especially need to balance both ends of the buyer’s journey:

- You need enough bottom-of-the-funnel content to convert attention into interest (where demos, paid trials or sales enablement are more of the norm).

- You must not neglect the fact that the vast majority of your ICPs don’t know who you are – so you’ll need to address pain points and education.

You should also realize that sophisticated buyers are already comparing you to alternatives before ever reaching out in the first place.

So piggybacking on competitors and alternatives of your product – even if they’re not direct competitors but simply better known (or funded) – can help you thread the needle.

This way, you can double down on expanding the “vs” or “alternatives”-queries you’re targeting and actually pushing into the top five. Like so:

If you’re just doing keyword research with a giant data dump, chances are you’re missing these critical “bridge”-type keywords to help transform searchers into leads.

‘Cause you’re setting artificial minimums around things like “volume,” even though “volume” figures across most keyword research tools are:

- Completely inaccurate.

- Wildly different from tool to tool.

So keep in mind that keyword research data is all relative. Not absolute.

You don’t need to care about “how much volume” there is for a “vs” or “alternative” keyword. ‘Cause in real life, you already know you’re being compared against alternatives.

Learn to trust your gut, intuition and customer feedback more than any other artificial number in a spreadsheet.

Dig deeper: B2B keyword research: A comprehensive guide

3. Ranking in a realistic time frame (given your goals and objectives)

Here’s the thing:

Everyone wants to rank for the “best” keywords, except not everyone deserves to rank for the best keywords.

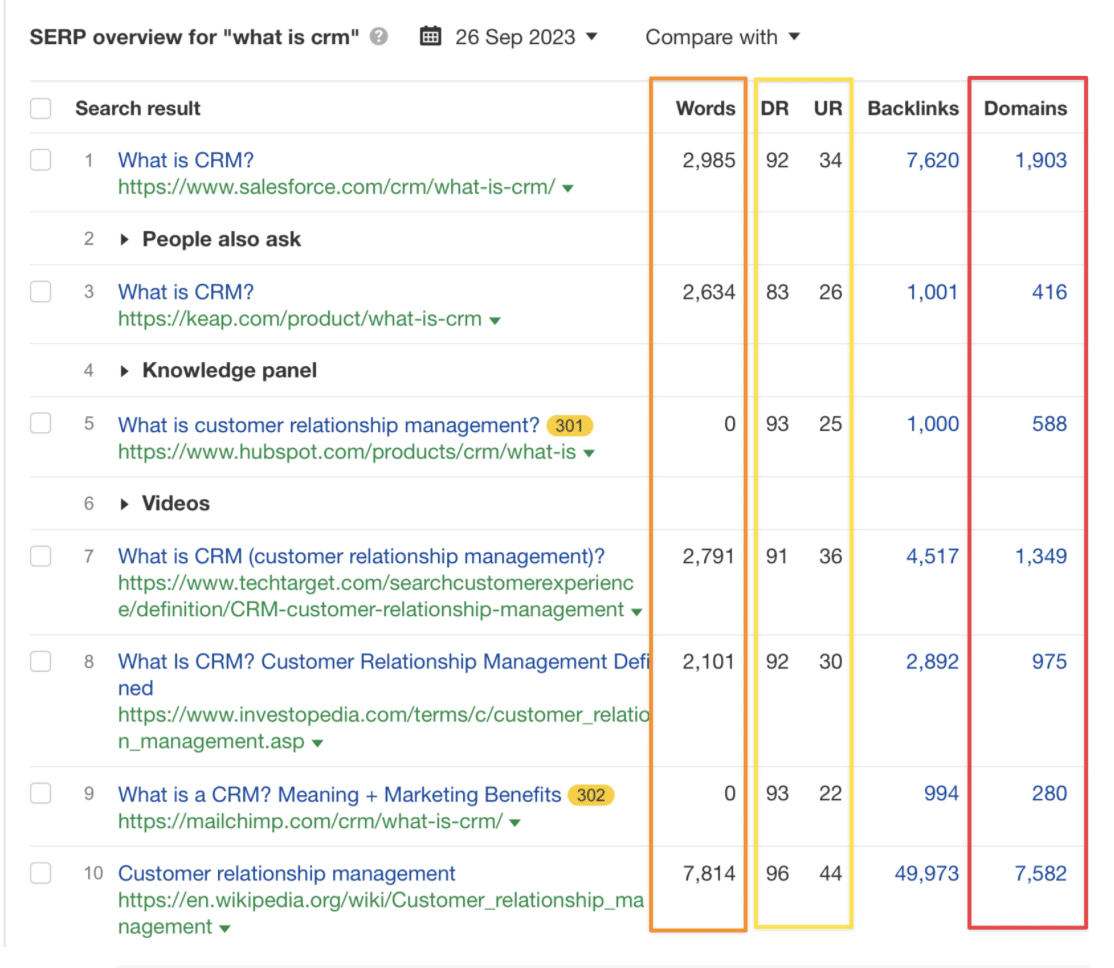

Check out this example below and look at the brand names dominating the top 10 for “what is CRM”:

Now, ask yourself:

If you’re not already a household name in the CRM space, what is the likelihood of you unseating one of these competitors?

Seriously, what’s the likelihood that you’ll rank in the top 10 for this keyword in one year?

And then, more importantly, what is it actually gonna take you – today – to get there tomorrow?

No. You probably don’t deserve to rank in the top 10 for this query.

Not today, and probably not over the next 12 months.

But two or three or five years from now? Sure! Why not?!

The Beachhead Principle can help, but only if you put in the hard work over the next few years to achieve it.

4. The competitive landscape’s relative strength

Keyword difficulty lies.

Not some of the time, but all of the time, across pretty much every single keyword research tool.

This means simply adding a filter for KD when doing keyword research isn’t good enough.

At best, it sets you up for bad expectations. At worst, it manifests in “pretty good” future rankings that never will actually break the bank (in a good way).

See, “keyword difficulty” usually relates specifically to the number (not even quality!) of backlinks to each individual piece of content currently ranking in the top ten or so.

However, it ignores not only the quality of said backlinks but also the overall domain strength of the site.

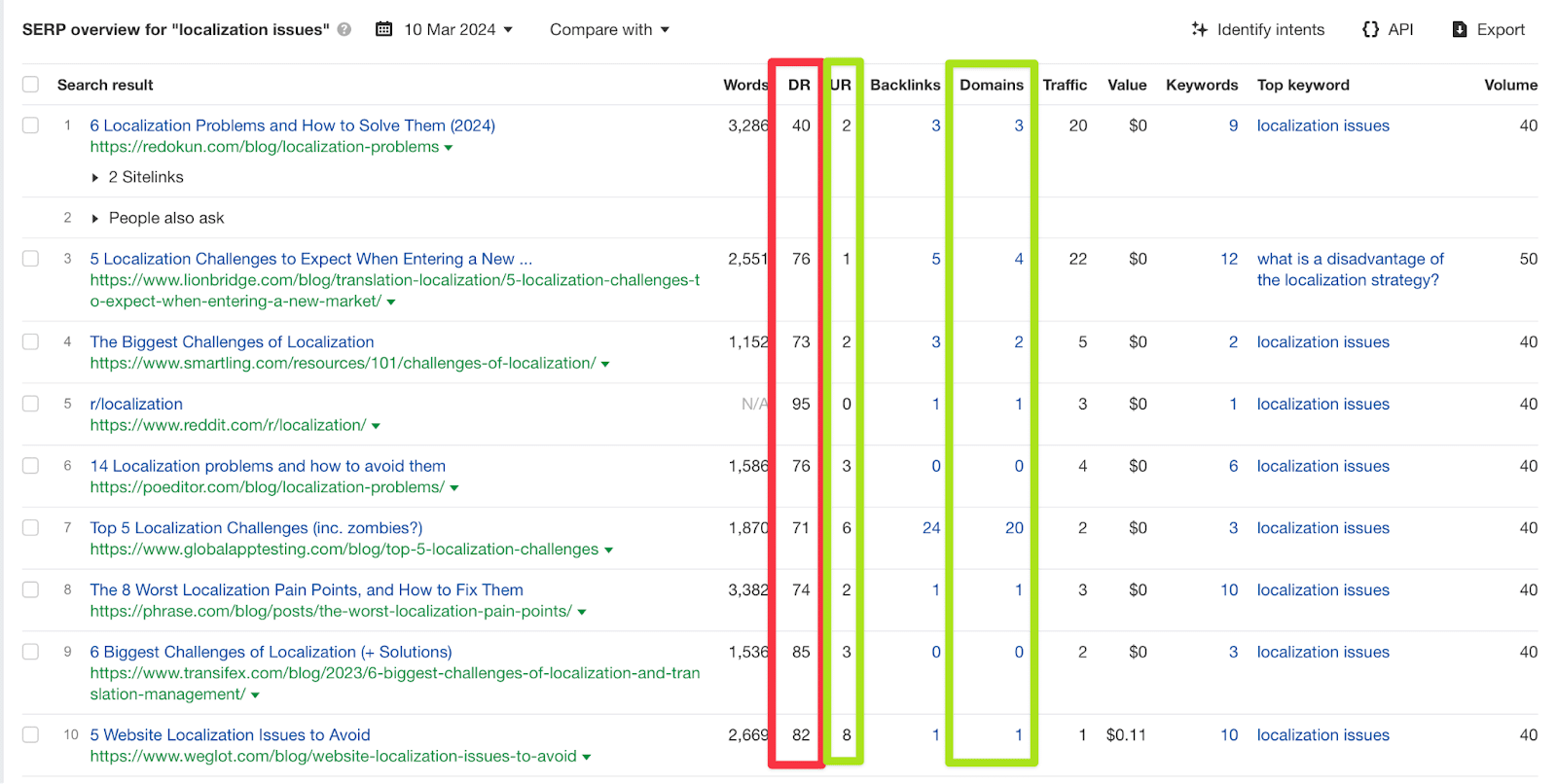

Check out this “localization issues” example below to see what I mean:

Nice! We SEOs like “Easy,” right?

Except, this:

Sure, the two green boxes (page-level strength) are kinda/sorta easy.

However, the red box? Domain strength overall? With one exception, the rest are sitting at 70+ domain ratings.

So no. This keyword isn’t “easy.”

This is why filtering a spreadsheet for “low KD” is misleading and inaccurate.

5. Your site’s current strengths

Topical authority can work practically in two ways:

- It can be a rising tide that lifts all boats (err, other similar keywords in the same space you’re deemed an expert), or…

- It can be a glass ceiling, giving you the dreaded “pretty good” rankings that never actually come to fruition (without a ton of extra work in other areas).

The simplest way to assess topical authority (or lack thereof) is to see if you have good rankings (top five or 10, at least) for lots of related content around these same queries.

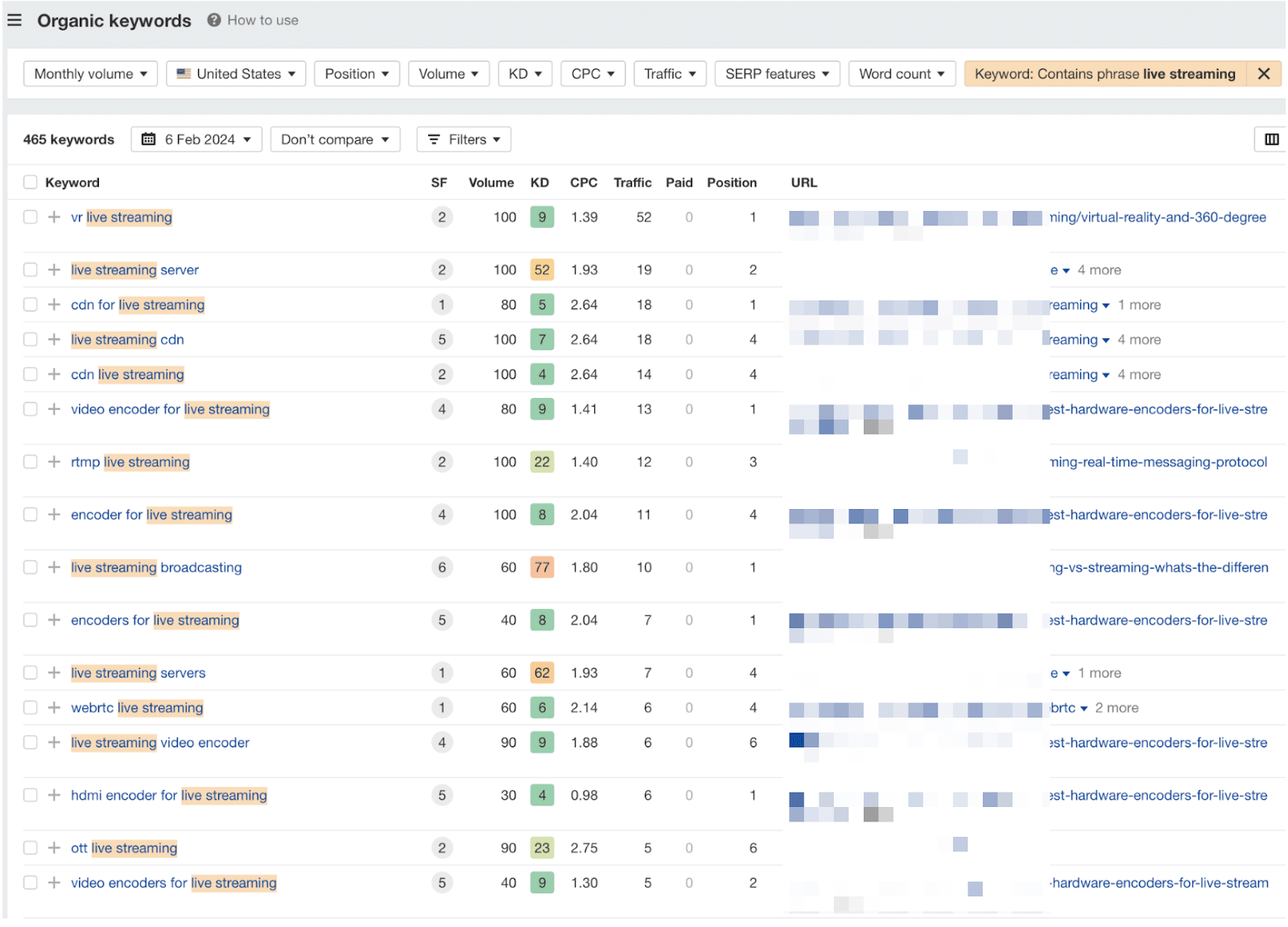

Wanna see what that looks like? Kinda like this:

Tons of top five rankings for related keywords around “live streaming.” Great!

But the inverse is true, too.

No strong rankings for other related topics or concepts? This means you have a lot more work to do!

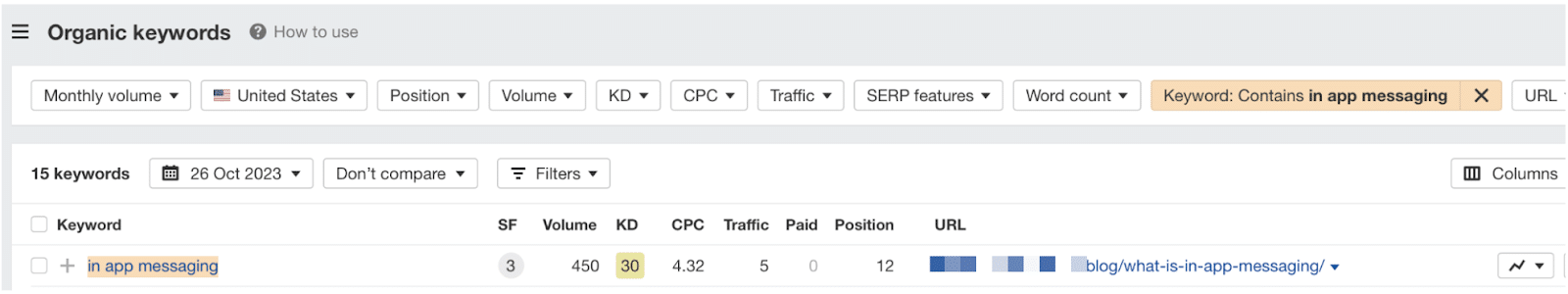

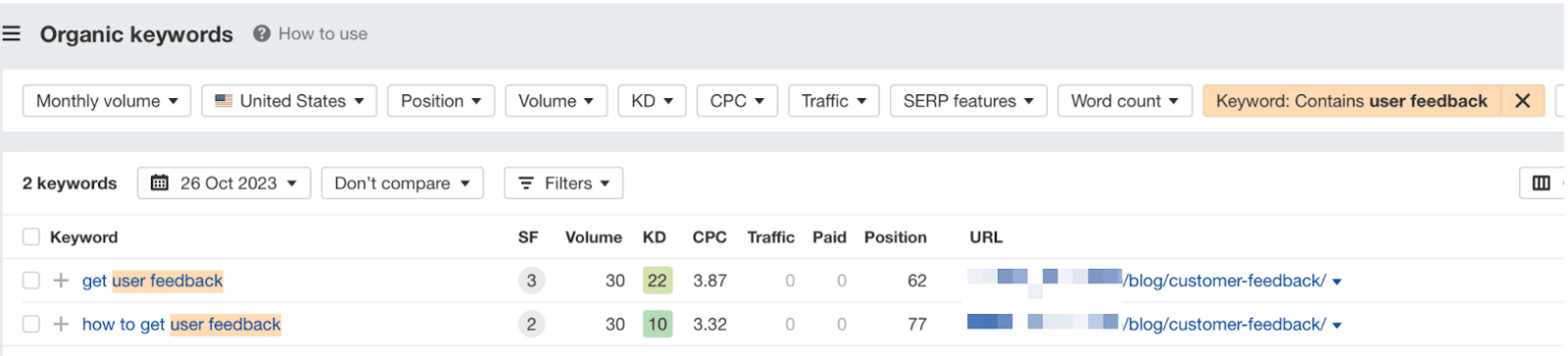

Take a look at this pillar page below and specifically the sub-sections being called out:

Now, let’s assess each area to see if this site already has good rankings for those sections.

Example 1: A single 12th position, so not really.

Example 2: Two 60+ positions – not at all!

Example 3: Lots of 40-80+ positions. So clearly, no.

The point?

That first pillar page will always struggle to rank until you can also get these other supporting pages to help, you know, actually support it!

In other words, there is no existing topical authority in this space. Creating and reinforcing this should be priority number one.

And yet? No Excel sheet or formula is going to tell you this. At least, not without analyzing the problem of not ranking from different perspectives or angles like this.

6. Resources you’re able to allocate in a specific time frame

Let’s recap and combine the first five ideas. Then, add a sixth consideration: how many resources (i.e., people, time, money) you can realistically allocate to each keyword you might want to target over the next year.

Once again, back to “live streaming platform.”

Take a peek at both the domain and page-level strengths below:

Now, an important caveat:

- You’ll notice the huge number of referring domains (in the right-hand orange box).

- That’s why this KD was listed as “Super Hard” earlier.

- But, those of you with eagle eyes will now see that the ones with thousands or tens of thousands of referring domains are actually homepages!

- This means that “Super Hard” ranking earlier is also overstated.

- Ahrefs is simply averaging out these tens of thousands of domains across the other results, too.

- If search intent is truly a comparison post, it will mean that you don’t need nearly as many links to actually rank for this query.
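To see why a few homepages can distort an averaged figure, here is a minimal sketch in Python. The referring-domain counts are made up for illustration (they are not read from the screenshot), and this is not Ahrefs’ actual KD formula. It only compares a plain mean with a median:

```python
from statistics import mean, median

# Hypothetical referring-domain counts for ten ranking URLs.
# The three large values stand in for homepages; the rest are ordinary posts.
referring_domains = [25000, 12000, 8000, 310, 240, 180, 150, 120, 90, 60]

avg = mean(referring_domains)    # pulled upward by the three homepages
mid = median(referring_domains)  # closer to what a typical ranking post has

print(f"mean:   {avg:,.0f}")
print(f"median: {mid:,.0f}")
```

With these invented numbers, the mean lands at 4,615 referring domains while the median is 210, which is the gap between “Super Hard” on paper and what a comparison post may actually need.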

Confused yet?

Yes, this keyword is still pretty difficult. Don’t get it twisted.

But it’s not nearly as difficult or unattainable as that KD would have originally alluded to.

Once again, no Excel formulas would have helped you uncover this issue. Only your eyes, ears and experience can.

This is one of the many reasons why brands routinely fail at link building. When you see “1,000+” backlinks needed, you build tons and tons of cheap, low-quality links.

Yet, you’d probably be better off with better content, topical authority and fewer (dozens to hundreds) really high-quality, editorial-based links instead.

Yes, you still need relevance and scale either way.

The type of campaigns and, therefore, the effective budget required for this one URL (compared to all the other URLs you might be juggling) should also be completely different.

A smarter, more intentional keyword research approach

Let’s face it:

SEOs are kinda geeky. We love data and want to live in a world of logic.

We believe everything we do is quantifiable and data-driven. But don’t take this way too far.

You don’t need fancy formulas and pivot tables when doing keyword research. The more data sets you juggle, the more likely they are to lead you astray.

SEO is more art than pure science in this regard.

What’s hard about SEO is reading the tea leaves and then consistently executing over the long haul (read: 24+ months, minimum).

None of these can be answered by ChatGPT, Ahrefs, etc., with a simple prompt or export button.

This is why people and companies fail at SEO.

Not because “SEO is difficult/technical/doesn’t work in my space/enter other excuses here.”

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing

Category seo news | Tags:

Social Networks : Technorati, Stumble it!, Digg, de.licio.us, Yahoo, reddit, Blogmarks, Google, Magnolia.

Meta AI adds Google Search results

Written on April 22, 2024 at 8:46 pm, by admin

Meta AI answers now may include Google Search results, making this the first AI assistant to include search results from both Google and Microsoft.

That said, I’ve yet to find a query that triggered Google Search results. Meta AI launched with Microsoft Bing integrated in September.

Why we care. Gartner predicted search traffic will fall due to the rise of AI chatbots, virtual agents and AI answer engines providing direct answers, as opposed to having to click on a website to find an answer. However, the models (like Meta’s Llama 3) don’t have access to real-time data – so it makes sense for Meta to incorporate information from search engines.

How Meta AI works. You can now enter searches in the search bar in the Facebook, Instagram, WhatsApp and Messenger apps. When Meta AI includes search results, those will appear as a tappable link beneath the answer, as Sources. Tapping on that link will bring you to the web, but keep you within the Meta app.

Meta AI will also appear in the main Facebook feed.

What it looks like. Here’s an image Meta shared of the search experience:

The deal. There’s not a “ton of money” flowing either way, Zuckerberg told tech rag the Verge. He was also asked about Google outsourcing search to another AI assistant, despite having Gemini:

- “I guess I wouldn’t have been surprised if they didn’t want to do it. But it seems like they are building up a whole model around this, so it makes sense. It’s good for Google. It shows Google prominently and links to Google. They pay Apple a ton of money for distribution. They’re not paying us. So, I think it’s good for them on that.”

Meta.ai website. Meta also announced the launch of meta.ai, which makes Meta AI available on computers for the first time.

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing


Google Chrome IP masking could radically impact search advertising

Written on April 22, 2024 at 8:46 pm, by admin

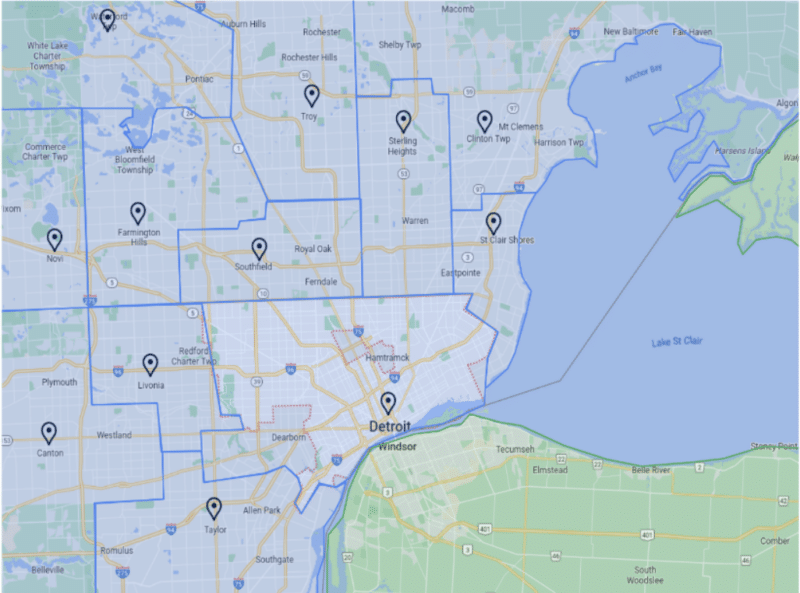

Google is developing a two-hop proxy to enhance privacy for Chrome users, which has three big implications for advertisers:

- For ad location targeting, you’ll only have the option to target regions designated by Google, which won’t be accurate.

- Because Google Chrome will use a proxy to connect to websites, it will mask users’ identities, meaning advertisers cannot distinguish between genuine and bot traffic.

- Google is increasing its collection of uniquely valuable location data, which could increase advertisers’ costs.

Why we care. The two-hop proxy is only implemented on Chrome, meaning Google will have a monopoly on this data. No other search engines will have any data for advertisers to use for location targeting. This could effectively eliminate competition in the search ads space.

What is IP-based geolocation. According to Google’s documentation:

- “IP-based geolocation is used by a swath of services within proxied third-party traffic, to comply with local laws and regulations and serve content that is relevant to users. Use cases include content localization, local cache assignment, and geo-targeting for ads.”

The details. User IP addresses will be batched and masked by region, and Google will allocate an IP address to each batch. Here’s what that looks like:

Any user assigned an IP address for a region will have been verified to be in that region. The Google geofeed will be plugged into the proxy and have city-level accuracy.

Will consumer data be truly private? While a consumer’s data will be shielded from advertisers, it won’t be safeguarded from Google, posing a threat to data privacy.

More Google privacy and self-preferencing concerns. In February, the CMA (Competition and Markets Authority) said:

- “We are particularly keen on resolving any remaining concerns relating to the design of the Privacy Sandbox tools and to ensure that Google does not use the tools in a way that self-preferences its advertising services.”

Dig deeper. Google ‘cannot proceed with third-party cookie deprecation’

Meanwhile, the Information Commissioner’s Office, a UK privacy regulator, also shared significant concerns about Privacy Sandbox, in a WSJ article (subscription required), published last week. Once released, nothing will stop Google and other companies from using data to track users from different sites, the ICO said.

- “Google’s proposed replacement for cookies need to do more to protect consumer privacy.”

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing


Airbnb dominates in search sentiment report

Written on April 22, 2024 at 8:46 pm, by admin

Airbnb is the leading advertiser in search sentiment, closely trailed by Viacom and Netflix, according to a new analysis of 100 top advertisers.

Why we care. Looking at search sentiment can help marketers better understand how consumers feel about them in real time and what contributes to brand loyalty and sustainability. Airbnb made a significant shift from search marketing to brand marketing. Because it has taken the lead in this search sentiment report, other advertisers may be curious enough to go down the same path to improve their own customer sentiment ranking.

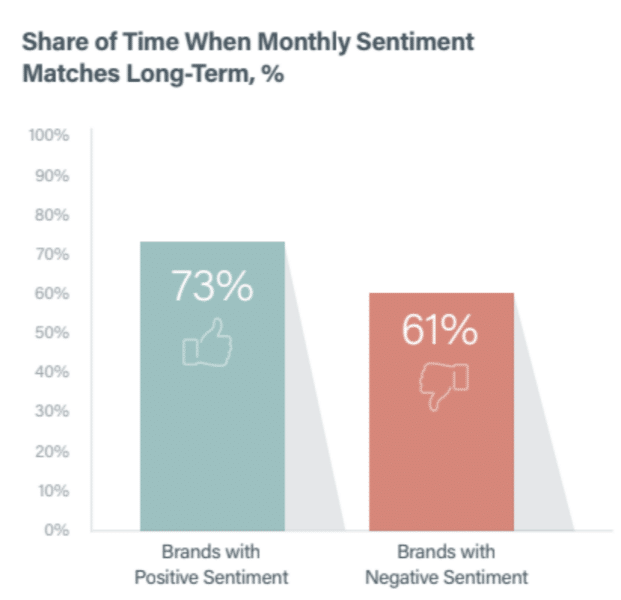

The big picture. The findings illustrate what it takes to have strong customer sentiment for an extended period.

- Brands with positive sentiment are likely to maintain it over 15 months, and the same applies to negative sentiment.

- Long-term perception has a major impact on sentiment. Consistency over time is better for long-term sentiment.

Volume and sentiment. There is no direct correlation between search volume and sentiment. However, search volume can enhance sentiment, serving as an amplifier, which advertisers should consider in their strategies.

- For instance, if a company has positive sentiment but low search volume, focusing on campaigns to increase volume would be beneficial.

- Conversely, launching ads to counter negativity and promote a positive message could be effective for those with negative sentiments but high volume.

Top 10 advertisers by search activity. Airbnb, ViacomCBS and Netflix were the top three ranked brands. The others were:

- Compagnie Financiere Richemont

- NBA

- Inspire Brands

- Sony Group Corp.

- P&G

- Apple

- Walt Disney Co.

Top advertisers by industry. These were the top advertisers across 10 categories analyzed in the report:

- Travel: Airbnb

- Retail: Compagnie Financiere Richemont

- Consumer packaged goods: Procter and Gamble

- Financial services: American Express

- Restaurants: Inspire Brands

- Entertainment and media: ViacomCBS

- Automotive: Volkswagen

- Telecom: T-Mobile

- Consumer technology: Apple

- Alcohol: AB InBev

About the data. The analysis was conducted by Captify (registration required), which collects data from over 1 billion searches on 3 million websites across 2 billion devices daily.

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing


Structure, consume, learn and retire: Google’s pattern of learning

Written on April 22, 2024 at 8:46 pm, by admin

Over the years, Google has seemingly established a pattern in how it interacts with the web. The search engine provides structured data formats and tools that allow us to supply information to Google. Think: meta tags, schema markup, the disavow tool and more.

Google then consumes and learns from this structured data deployed across the web. Once sufficient learnings are extracted, Google then retires or de-emphasizes these structured data formats, making them less impactful or obsolete.

This cyclical process of giving structured data capabilities, consuming the information, learning from it and then removing or diminishing those capabilities seems to be a core part of Google’s strategy.

It allows the search engine to temporarily empower SEOs and brands as a means to an end – extracting data to improve its algorithms and continually improve its understanding of the web.

This article explores this “give and take” pattern through several examples.

Google’s pattern of ‘give and take’

The pattern can be divided into four stages:

- Structure: Google provides structural ways to interact with search snippets or its ranking algorithms. For example, in the past, meta keywords could tell Google which keywords were relevant to a given webpage.

- Consume: Google collects data from the web by crawling websites. This step is important. Without consuming data from the web, Google has nothing to learn from.

- Learn: Google then leverages fresh crawl data, after its recommended structures are implemented. What were the reactions to Google’s proposed tools or snippets of code? Were these useful changes, or were they abused? Google can now confidently make changes to its ranking algorithms.

- Retire: Once Google has learned what they can, there’s no reason to rely on us to feed them structured information. Leaving such inbound data pipes intact will invariably lead to abuse over time, so the search engine must learn to survive without them. The suggested structure from Google is retired in many (though not all) instances.

The race is for the search engine to learn from webmasters’ interactions with Google’s suggested structure before they can learn to manipulate it. Google usually wins this race.

It doesn’t mean no one can leverage new structural items before Google discards them. It simply means that Google usually discards such items before illegitimate manipulations become widespread.

Give and take examples

1. Metadata

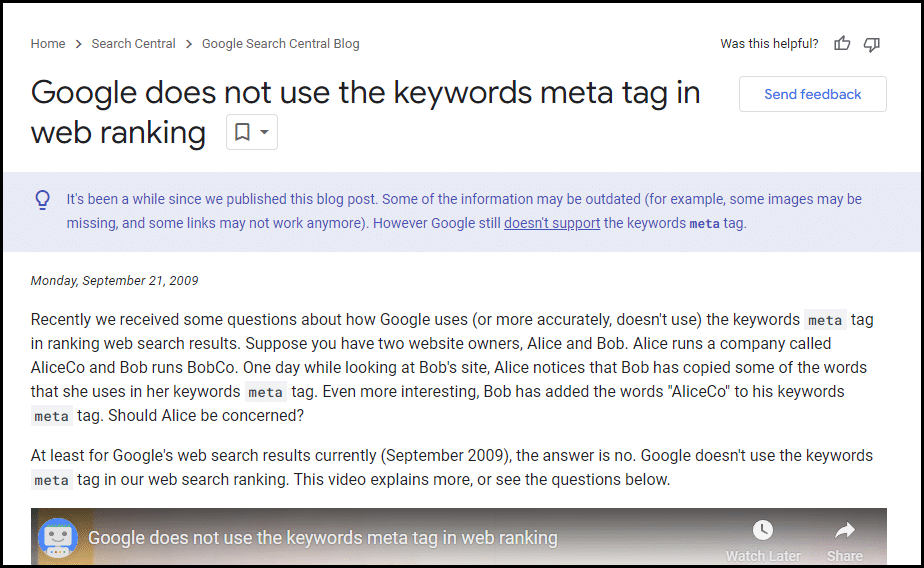

In the past, meta keywords and meta descriptions played crucial roles within Google’s ranking algorithms. The initial support for meta keywords within search engines actually predates Google’s founding in 1998.

Deploying meta keywords was a way for a webpage to tell a search engine the terms for which the page should be findable. However, such a direct and useful bit of code was quickly abused.

Many webmasters injected thousands of keywords per page in the interest of getting more search traffic than was fair. It quickly led to the rise of low-quality websites filled with ads that unfairly converted acquired traffic into advertising income.

In 2009, Google confirmed what many had suspected for years. Google stated:

“At least for Google’s web search results currently (September 2009), the answer is no. Google doesn’t use the keywords meta tag in our web search ranking.”

Another example is the meta description, a snippet of code that Google supported since its early days. Meta descriptions were used as the snippet text under a link in Google search results.

As Google improved, it started ignoring meta descriptions in certain situations. This is because users might discover a webpage through various Google keywords.

If a webpage discusses multiple topics and a user searches for a term related to topic 3, showing a snippet with a description of topics 1 or 2 would not be helpful.

Therefore, Google began rewriting search snippets based on user search intent, sometimes ignoring a page’s static meta description.

In recent times, Google has shortened search snippets and even confirmed that they mostly examine a page’s primary content when generating descriptive snippets.

2. Schema and structured data

Google introduced support for schema (a form of structured data) in 2009.

Initially, it pushed the “microformats” style of schema, where individual elements had to be marked up within the HTML to feed structured or contextual information to Google.

In terms of concept, this actually isn’t too far removed from the thinking behind HTML meta tags. Surprisingly, a new coding syntax was adopted instead of just using meta tags more extensively.

For example, the idea of schema markup was initially (and largely remains) to supply additional contextual information concerning data or code that is already deployed – which is similar to the definition of metadata:

- “Information that describes other information in order to help you understand or use it.”

Both schema and metadata attempt to achieve this same goal: describing other existing information so that it can be put to better use. However, the detail and structural hierarchy of schema (in the end) made it far more scalable and effective.

Today, Google still uses schema for contextual awareness and detail concerning various web entities (e.g., webpages, organizations, reviews, videos, products – the list goes on).

That said, Google initially allowed schema to alter the visuals of a page’s search listings with a great degree of control. You could easily add star ratings to your pages for Google’s search results, making them stand out (visually) against competing web results.

As usual, some began abusing these powers to outperform less SEO-aware competitors.

In February 2014, Google started talking about penalties for rich snippet spam. This was when people misused schema to make their search results look better than others, even though the information behind them was wrong. For example, a site without reviews purports a 5-star aggregate review rating (clearly false).

Fast-forward to 2024, and while still situationally useful, schema is not as powerful as it once was. Delivery is easier, thanks to Google’s JSON-LD preference. However, schema no longer has the absolute power to control the visuals of a search listing.
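To make the JSON-LD delivery concrete, here is a minimal sketch in Python that builds a schema.org Product block with an aggregate rating. The product name and the rating figures are hypothetical. The markup should only describe reviews that genuinely exist on the page, which is exactly what the rich snippet spam penalties targeted.

```python
import json


def product_jsonld(name: str, rating_value: float, review_count: int) -> str:
    """Return a <script> tag holding schema.org Product markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical values for illustration only.
print(product_jsonld("Example Widget", 4.4, 89))
```

The resulting tag sits in the page’s HTML as a single block, rather than being threaded through individual elements the way microformats-style markup was.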

3. Rel=Prev / Next

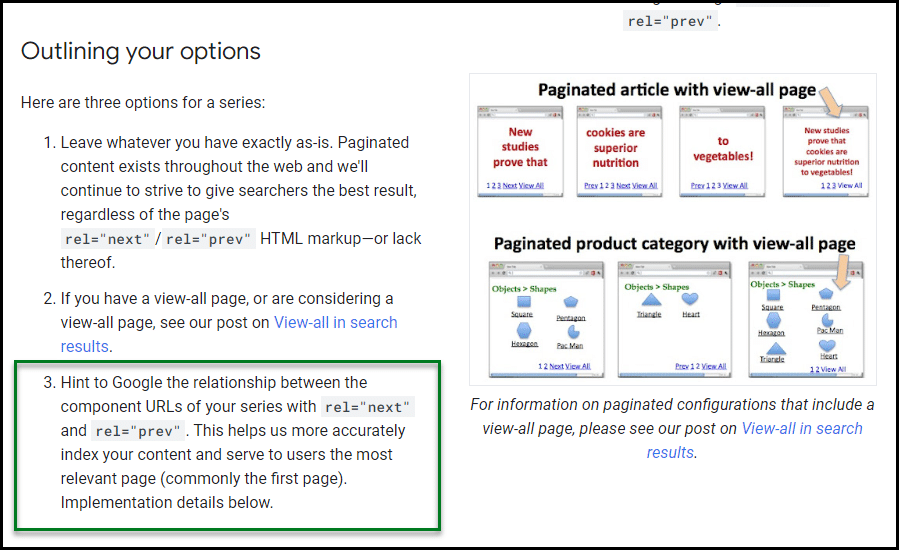

Rel=”prev” and rel=”next” were two HTML attributes Google suggested in 2011. The idea was to help Google develop more contextual awareness of how certain types of paginated addresses were interrelated:
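For readers who never implemented it, the markup amounted to a pair of link elements in the head of each paginated page. The Python sketch below generates them. The URL pattern (page 1 at the base URL, later pages at `?page=N`) is a hypothetical assumption for illustration.

```python
def pagination_links(base_url: str, page: int, last_page: int) -> list[str]:
    """Build rel="prev"/rel="next" link tags for one page of a paginated series."""

    def url_for(n: int) -> str:
        # Assumption: page 1 lives at the base URL, later pages use ?page=N.
        return base_url if n == 1 else f"{base_url}?page={n}"

    tags = []
    if page > 1:
        tags.append(f'<link rel="prev" href="{url_for(page - 1)}">')
    if page < last_page:
        tags.append(f'<link rel="next" href="{url_for(page + 1)}">')
    return tags


# Page 2 of a hypothetical three-page archive gets both tags.
for tag in pagination_links("https://example.com/blog", 2, 3):
    print(tag)
```

The first page of a series carries only a “next” tag and the last page only a “prev” tag, which is part of why theme-level changes were usually needed.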

Eight years later, Google announced they no longer supported it. They also said they hadn’t supported this kind of coding for a while, suggesting support ended around 2016, just five years after the suggestions were first made.

Many were understandably annoyed because the tags were fiddly to implement, often requiring actual web developers to re-code aspects of website themes.

Increasingly, it seemed as if Google would suggest complex code changes in one moment only to ditch them the next. In reality, it is likely that Google had simply learned all it needed from the rel=prev / next experiment.

4. Disavow tool

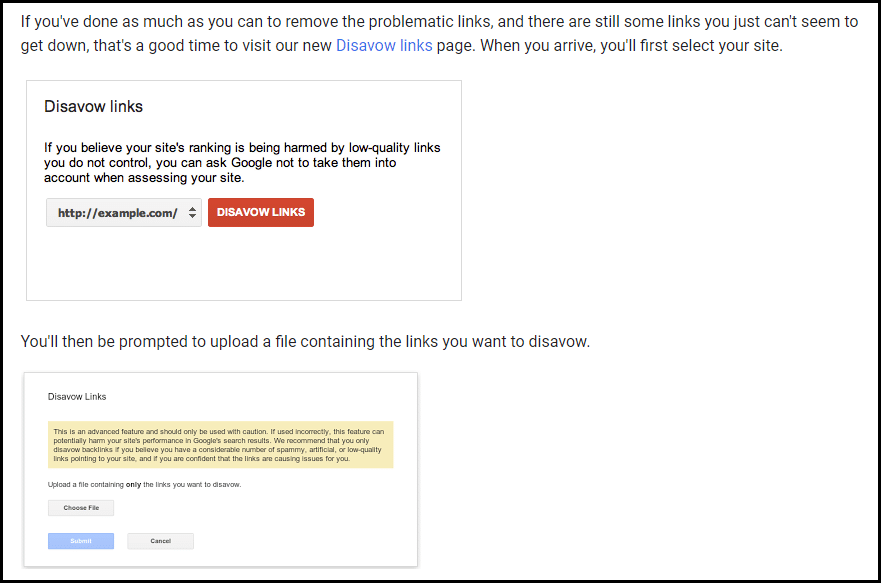

In October 2012, the web buzzed with news of Google’s new Disavow links tool.

In April 2012, Google released the Penguin update, which caused the web to be in turmoil. The update targeted spammy off-site activity (link building) heavily, and many websites saw manual action notices appear within the Search Console (then named Webmaster Tools).

Using the Disavow tool, you could upload lists of linking pages or domains you would like to exclude from Google’s ranking algorithms. If these uploaded links largely agreed with Google’s own internal assessment of the backlink profile, the active manual penalty may then have been lifted.

This would give back a “fair” amount of Google traffic to the site, though obviously, with part of its backlink profile now “disavowed” – post-penalty traffic was usually lower than pre-penalty traffic.

As such, the SEO community had a relatively low opinion of the tool. Usually, a complete backlink removal or disavow project was necessary. Having less traffic after the penalty was better than having no traffic at all.
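For reference, the file uploaded to the Disavow tool is plain text with one entry per line: a full URL, a whole domain prefixed with `domain:`, or a comment starting with `#`. A minimal Python sketch that assembles such a file follows. The domains and URLs shown are hypothetical placeholders.

```python
def build_disavow_file(domains: list[str], urls: list[str], note: str = "") -> str:
    """Assemble the text of a disavow file: comments, then domains, then URLs."""
    lines = []
    if note:
        lines.append(f"# {note}")
    # Whole domains use the "domain:" prefix; duplicates are dropped.
    lines += [f"domain:{d}" for d in sorted(set(domains))]
    # Individual linking pages are listed as full URLs.
    lines += sorted(set(urls))
    return "\n".join(lines) + "\n"


# Hypothetical entries for illustration only.
print(build_disavow_file(
    domains=["spam.example"],
    urls=["https://bad.example/page.html"],
    note="Links we asked to have removed but could not",
))
```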

Disavow projects haven’t been necessary for years. Google now says that anyone still offering this service is using outdated practices.

In recent years, Google’s John Mueller has been extremely critical of those selling “disavow” or “toxic links” work. It seems as if Google no longer wants us to use this tool; certainly, they do not advise us on its usage (and haven’t in many years).

Dig deeper. Toxic links and disavows: A comprehensive SEO guide

Unraveling Google’s give-and-take relationship with the web

Google provides tools or code snippets for SEOs to manipulate its search results in minor ways. Once Google gains insights from these deployments, such features are frequently phased out. Google grants us a limited amount of temporary control to facilitate its long-term learning and adaptation.

Does this make these small, temporary releases from Google useless? There are two ways of looking at this:

- Some people will say, “Don’t jump on the bandwagon! These temporary deployments aren’t worth the effort they require.”

- Others will say, “Google gives us temporary opportunities for control, so you need to take advantage before they vanish.”

In truth, there is no right or wrong answer. It depends on your ability to adapt to web changes efficiently.

If you’re comfortable with quick changes, implement what you can and react fast. If your organization lacks the expertise or resources for quick changes, it’s not worth following trends blindly.

I think this ebb and flow of give and take doesn’t necessarily make Google evil or bad. Any business will leverage its unique assets to drive further learning and commercial activity.

In this instance, we are one of Google’s assets. Whether you wish for this relationship (between yourself and Google) to continue is up to you.

You could choose not to cooperate with Google’s temporary power, long-term learning trade deals. However, this may leave you at a competitive disadvantage.

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing


Make 2024 the year you take home the highest honor in search

Written on April 20, 2024 at 2:44 pm, by admin

Winning an industry award can greatly impact how customers, clients, and colleagues regard your brand. Showcase your achievements and celebrate your professional excellence by entering The Search Engine Land Awards — the highest honor in search marketing!

For nearly 10 years, The Search Engine Land Awards have honored some of the best in the search industry — including leading in-house teams at Wiley Education Services, T-Mobile, Penn Foster, Sprint, and HomeToGo – and exceptional agencies representing Samsung, Lands’ End, Stanley Steemer, and beyond.

This year, it’s your turn. The 2024 entry period is now open!

Here’s what you need to know:

- The Search Engine Land Awards celebrate individuals, agencies, and internal teams within the search marketing community who have demonstrated excellence in executing organic and paid search marketing campaigns.

- This year’s program features 19 unique categories, from Best Use of AI Technology in Search Marketing to Agency of the Year… click here to explore them all.

- Applying is easier than ever – send us an executive summary that showcases, in 750 words or less, the award-worthy work you and your team performed this past year.

- Completing your application empowers you to reflect on an impressive year of work, featuring its successes and lessons learned – an invaluable exercise for you and your team.

- Winning a Search Engine Land Award is a unique, rewarding, and cost-effective way to put your organization a step ahead of its competitors, gain well-earned publicity, boost company morale, and more.

- Submit your application by May 24 to unlock Super Early Bird pricing – just $395 per entry ($300 off final rates!).

Don’t miss your opportunity to participate in the only awards program recognized by Search Engine Land, the industry publication of record. Begin your application today!

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing


5 alternatives to the missing Page Timings report in GA4

Written on April 19, 2024 at 11:44 am, by admin

Page load speed, among other Core Web Vitals, is a known Google organic ranking factor. While we have the PageSpeed Insights tool, it unfortunately only works on one page at a time.

The Page Timings report in Universal Analytics surfaced specific pages on your site that were slowest, allowing you to prioritize which pages to evaluate and optimize.

The tool was particularly helpful if you have a large site with thousands of pages to analyze. Armed with the list of problem URLs, you could then prioritize pages for review using the PageSpeed Insights tool.

But Google didn’t include the Page Timings report in GA4, so where can you find similar information now?

Below are several free and paid tools that can help you pinpoint your problem pages and prioritize their optimization.

1. Google Search Console

- Pros: Free.

- Cons: Highly manual, no API connections.

Google Search Console (GSC) provides a Core Web Vitals report and even separates the data by mobile versus desktop.

However, while GSC provides some examples of URLs affected, it doesn’t provide a full list. Instead, it groups pages together and shows examples from the group. The data is also not easily downloadable to a CSV for monitoring.

If your goal is regular monitoring, you’ll need to log in to GSC and review the data within the tool. The GSC API does not support exporting Core Web Vitals report data, so you can’t pull GSC data into Looker Studio or other visualization tools.

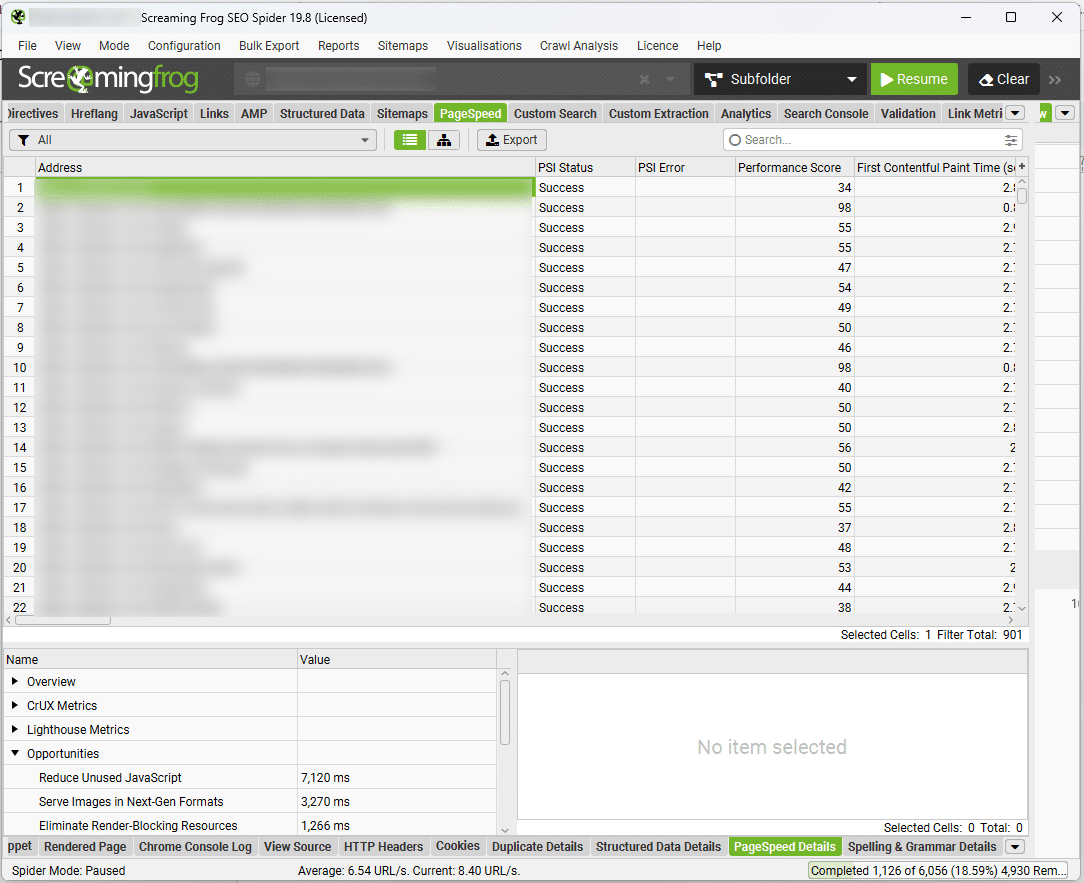

2. Screaming Frog

- Pros: Thoroughly indexes sites, connects to PageSpeed Insights API (with a key you provide), scheduling available.

- Cons: Paid tool, desktop-based.

A long-time favorite of SEO professionals, Screaming Frog software has many helpful SEO applications, but the most important thing for this article is that it provides page load times.

It can further be connected to the PageSpeed Insights tool using a key from the PageSpeed Insights API to import Core Web Vitals data directly into the PageSpeed report:

The only real drawback to Screaming Frog is that because it’s a desktop-based application, the computer you host it on has to be turned on and connected to the web when the report runs. This makes the tool less optimal for dashboarding and highly regular data monitoring.

One workaround is to have a desktop computer that is always turned on. I did this in my agency for many years with a dedicated, old desktop computer running Screaming Frog.

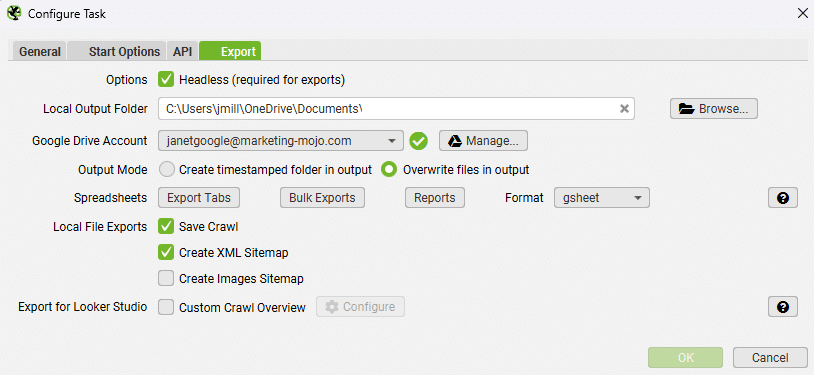

Because the tool allows for scheduling, the scheduled report can run at the appointed time as long as the computer is on and connected to the internet. Additionally, you can connect Screaming Frog to a Google Drive account and export the report tabs to Google Sheets:

If you want to use the upload for dashboarding, choose the Overwrite files in output option, which will allow you to just update the same Google Sheet.

Once the data is in a Google Sheet, you can import it into other platforms, such as Looker Studio, to create dashboards and visualizations or create thresholds to send email alerts using Apps Script.
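The threshold idea can be sketched in a few lines. The article’s workflow uses Apps Script on the Google Sheet. The version below uses Python on a CSV export purely for illustration. The column names `Address` and `Response Time` and the two-second threshold are assumptions, so adjust them to match your own export.

```python
import csv
import io


def slow_pages(csv_text: str, threshold_seconds: float = 2.0) -> list[tuple[str, float]]:
    """Return (url, seconds) pairs above the threshold, slowest first."""
    reader = csv.DictReader(io.StringIO(csv_text))
    flagged = [
        (row["Address"], float(row["Response Time"]))
        for row in reader
        if float(row["Response Time"]) > threshold_seconds
    ]
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)


# Hypothetical crawl export with assumed column names.
sample = """Address,Response Time
https://example.com/,0.8
https://example.com/pricing,3.1
https://example.com/blog,2.4
"""

for url, seconds in slow_pages(sample):
    print(f"{seconds:.1f}s  {url}")
```

The flagged list is what you would feed into an alert or a dashboard filter. Sending the email itself is left out of this sketch.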

3. Ahrefs

- Pros: Thoroughly indexes sites, scheduling available, cloud-based application, connects to the PageSpeed Insights API (with a key you provide).

- Cons: Paid tool, manual data export.

Ahrefs has long been an SEO favorite for tracking backlinks, but the tool also has a robust site audit tool that tracks page load speed as it indexes a website.

As with Screaming Frog, you can connect PageSpeed Insights directly to the site audit to see specific Core Web Vitals optimizations that should be made:

While you can export reports to Google Sheets, it’s a manual process. Site audits can be scheduled for regular intervals.

Unfortunately, the Ahrefs API doesn’t appear to have a way to automatically export the results, leaving it a bit of a manual process and less than ideal for dashboarding and near real-time reports.

4. Semrush

- Pros: Thoroughly indexes sites, scheduling available, cloud-based application, connects to the PageSpeed Insights API (no key needed).

- Cons: Paid tool, manual data export.

Another popular SEO tool is Semrush, and it also has a site audit feature that reviews page load speed and lists the pages with the longest load times.

Unlike Ahrefs and Screaming Frog, you aren’t required to enter a personal PageSpeed Insights API key to connect Core Web Vitals optimization information directly to the audit.

As with Ahrefs, however, the data export for this report is manual. Semrush does have an API that reports on page load speed issues, but it is only available for business plans and higher, which start at $499/month.

5. Add page speed into GA4 using custom dimensions

- Pros: Free, measures actual user data for page load speed by page, scheduling not required, cloud-based application.

- Cons: Only begins tracking data once implemented (no historical data), doesn’t automatically connect with PageSpeed Insights API.

Another option to restore page load speed in Google Analytics is to create a custom dimension. You can use that custom dimension to create an Explorations report, import data into Looker Studio or export data using the GA4 API or various tools that incorporate the API.

Measure School has an excellent tutorial on how to track page load speed using Google Tag Manager and custom dimensions in GA4.

Multiple free and paid tools can export your list of slow pages using the custom dimension to Google Sheets, including the free Google Sheets extension GA4 Reports Builder for Google Sheets.

Unlike its predecessor in Universal Analytics, this extension does not have scheduling capability. I personally use Supermetrics, which is a paid tool but provides me access to multiple APIs, including GA4, and allows me to schedule reports.
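Once the custom dimension data has been exported, ranking the slowest pages is a small aggregation step. The sketch below assumes each exported row carries a page path and a load time in milliseconds; the field names `page_path` and `load_time_ms` are hypothetical and depend on how you named your custom dimension and which export tool you use.

```python
from collections import defaultdict


def slowest_pages(rows, top_n=10, min_samples=1):
    """Average load time per page and return the slowest pages first.

    `rows` is an iterable of dicts with hypothetical keys "page_path"
    and "load_time_ms". Returns (path, average_ms, sample_count) tuples.
    """
    totals = defaultdict(lambda: [0.0, 0])  # path -> [sum of ms, count]
    for row in rows:
        entry = totals[row["page_path"]]
        entry[0] += float(row["load_time_ms"])
        entry[1] += 1

    averages = [
        (path, total / count, count)
        for path, (total, count) in totals.items()
        if count >= min_samples  # ignore pages with too few measurements
    ]
    averages.sort(key=lambda item: item[1], reverse=True)
    return averages[:top_n]
```

Raising `min_samples` keeps a single unusually slow visit from pushing a rarely viewed page to the top of the list.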

Connecting with the PageSpeed Insights API

Once you have your list of the site’s slowest pages, though, you’re not completely finished! Screaming Frog, Ahrefs and Semrush pull Core Web Vitals optimizations into their platforms using the PageSpeed Insights API.

If you’re not using one of those tools, you’ll need to either check each URL in the PageSpeed Insights tool manually, one by one, or use the PageSpeed Insights API to make those queries for you.

If you’re not a web developer or skilled with coding, fortunately, there are tools that you can use to tap into APIs, including the PageSpeed Insights API, to get the specific Core Web Vitals details you need for optimization.

My personal favorite is Zapier, which has a webhook zap that gives even non-developers a simplified way to connect a list of slow URLs to the PageSpeed Insights API and pull in whichever data points are most important.
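For readers comfortable with a little code, a query against the PageSpeed Insights API can be sketched as follows. This is a minimal example using only the Python standard library and the public v5 `runPagespeed` endpoint; the function names and the three metrics pulled out in `summarize` are illustrative choices, and the API key is optional for light use.

```python
import json
import urllib.parse
import urllib.request

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(page_url, api_key=None, strategy="mobile"):
    """Build the request URL for one page (strategy: "mobile" or "desktop")."""
    params = {"url": page_url, "strategy": strategy, "category": "performance"}
    if api_key:
        params["key"] = api_key
    return PSI_ENDPOINT + "?" + urllib.parse.urlencode(params)


def fetch_psi(page_url, api_key=None, strategy="mobile", timeout=60):
    """Call the API and return the parsed JSON response (needs network access)."""
    request_url = build_psi_url(page_url, api_key, strategy)
    with urllib.request.urlopen(request_url, timeout=timeout) as response:
        return json.load(response)


def summarize(psi_response):
    """Pull a few lab metrics out of the Lighthouse audits in the response."""
    audits = psi_response.get("lighthouseResult", {}).get("audits", {})

    def value(audit_id):
        return audits.get(audit_id, {}).get("numericValue")

    return {
        "lcp_ms": value("largest-contentful-paint"),
        "cls": value("cumulative-layout-shift"),
        "tbt_ms": value("total-blocking-time"),
    }
```

Looping `fetch_psi` over your list of slow URLs and passing each result to `summarize` yields one row of metrics per page. Any metric missing from a response comes back as `None`.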

Optimizing images can often be a quick way to improve page load speed. In the zap example above, I only pull in image details for each URL for a site with over 10,000 pages. This allows me a fast way to find:

- Which pages are slowest.

- Of those pages, which ones are slow due to images that should be resized.

- Which images should be resized, prioritized by greatest load time saved per image.
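The same prioritization can be sketched in code for readers who prefer a script to a zap. The example below assumes you already have PageSpeed Insights API responses keyed by page URL, and it ranks images by the `wastedBytes` figure in the "properly size images" audit as a stand-in for load time saved. The audit ID `uses-responsive-images` and the item fields are assumptions that can differ between Lighthouse versions, so check a real response before relying on them.

```python
def rank_oversized_images(psi_responses, audit_id="uses-responsive-images"):
    """Rank images by potential byte savings, largest first.

    `psi_responses` maps page URL -> parsed PageSpeed Insights response.
    The audit ID and the "url"/"wastedBytes" item fields are assumptions
    about the response shape. Returns (image_url, wasted_bytes, pages).
    """
    images = {}
    for page_url, response in psi_responses.items():
        audits = response.get("lighthouseResult", {}).get("audits", {})
        details = audits.get(audit_id, {}).get("details") or {}
        for item in details.get("items", []):
            image_url = item.get("url")
            if not image_url:
                continue
            wasted = item.get("wastedBytes") or 0
            entry = images.setdefault(image_url, {"wasted_bytes": 0, "pages": []})
            # Keep the largest saving seen for an image reused across pages.
            entry["wasted_bytes"] = max(entry["wasted_bytes"], wasted)
            entry["pages"].append(page_url)

    ranked = sorted(images.items(), key=lambda kv: kv[1]["wasted_bytes"], reverse=True)
    return [(url, info["wasted_bytes"], info["pages"]) for url, info in ranked]
```

Because each result lists every page an image appears on, fixing the images at the top of the list tends to help several slow pages at once.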

The benefit of this approach is that it truly can provide near-real-time reporting and dashboarding, whereas the other solutions still have drawbacks that make them less than ideal for dashboard reports.

However you continue to measure page load speed for organic search optimization, each solution requires some setup and work. So, if you haven’t already started on a solution, get started now so that you can find quick wins for SEO and improve your problem pages.

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing
