YouTube First Position Ads now available across Display & Video 360

Written on September 12, 2024 at 8:44 pm, by admin

First Position has been expanded to include all YouTube content through Display & Video 360, offering it at dynamic CPM rates. Previously, this feature was only available on YouTube Select inventory at fixed rates.

- Now, advertisers can secure prime ad placement at the beginning of a YouTube session across any content, enhancing relevance and impact.

Why it matters. YouTube is the largest streaming video website in the world, with 1 billion hours of video watched a day, so there is a lot of opportunity if you use video assets in your campaigns. With First Position, you can now ensure your ad is the first one viewers see when watching YouTube content, maximizing visibility during critical moments and potentially driving higher engagement and brand recall.

Details. First Position guarantees that an ad will be the first in-stream spot when users begin watching YouTube, making it ideal for key campaigns like product launches or cultural events.

Case studies. As usual, Google cited well-known household brands like Booking.com and IHG Hotels & Resorts as already seeing success with First Position, so you shouldn’t necessarily expect to replicate such results if you don’t have similar resources. Booking.com drove a 21% lift in ad recall, while IHG combined it with YouTube Sponsorships to achieve double the brand awareness benchmark.

What’s next. Advertisers can now leverage First Position for any campaign across YouTube’s entire content library. For more details, visit the Help Center for Instant Reserve in Display & Video 360 or Reservations in Google Ads.

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing

Category seo news | Tags:

Use DAM and AI to keep up with content demands by Edna Chavira

Written on September 12, 2024 at 8:44 pm, by admin

Are you overwhelmed by the constant demand for new content? AI and Digital Asset Management (DAM) can be your secret weapons for keeping up with content demands and delivering exceptional digital experiences.

Join us for Acquia’s upcoming webinar, Driving Brand Growth: Using DAM and AI to Keep Up With Content Demands. Our expert panel will discuss:

- DAM’s role in branding and digital experience management

- Best practices for seamless migration and strategic tech consolidation

- How to effectively integrate tech stacks, content, and teams

Don’t miss this opportunity to gain valuable insights and stay ahead of the curve. Register now to secure your spot!

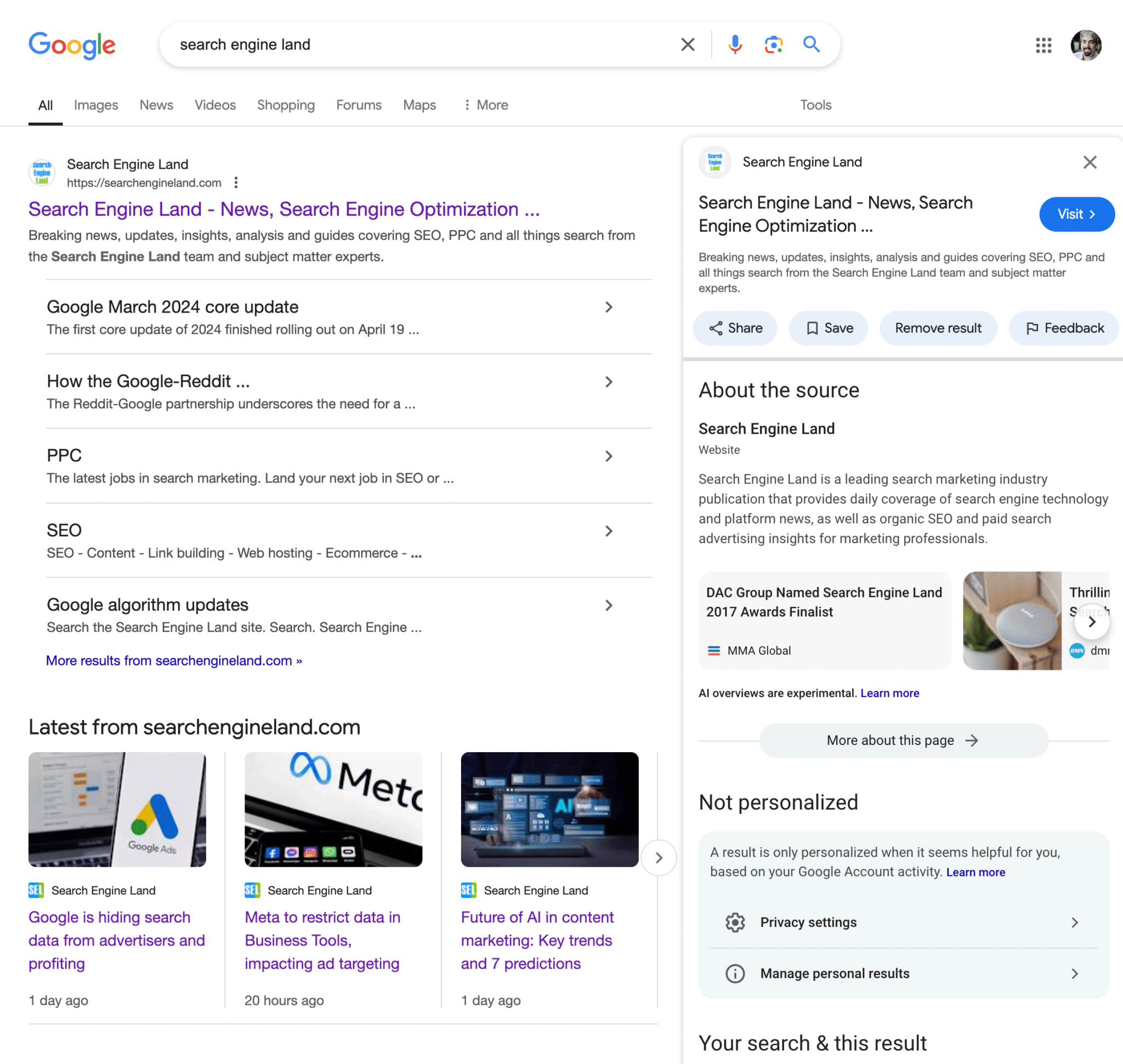

SearchGPT: What you need to know about OpenAI’s search engine

Written on September 12, 2024 at 8:44 pm, by admin

With its characteristic cheerfulness, ChatGPT will tell you that the digital media landscape is “rapidly evolving.”

Google is regularly updating its algorithm, AI is getting smarter by the day and new tools are entering the market faster than fashion trends go in and out of style. That can mean both opportunities and challenges for us.

One of the most talked-about evolutions to the search landscape is OpenAI’s SearchGPT, a product that could rival Google’s dominance soon.

At my agency, we recently gained early access to SearchGPT. Below are our takeaways about this new tool and its implications for digital marketing.

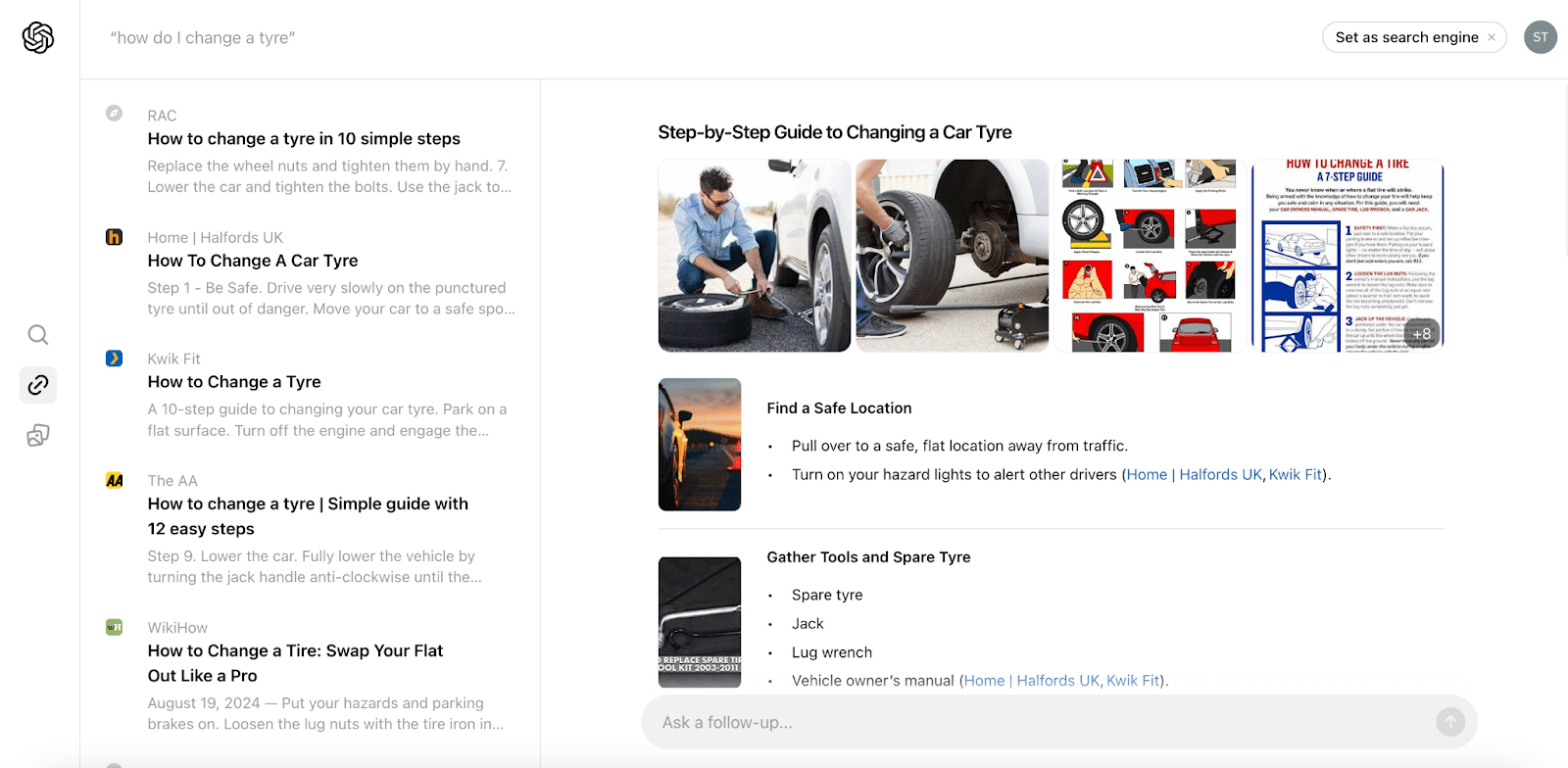

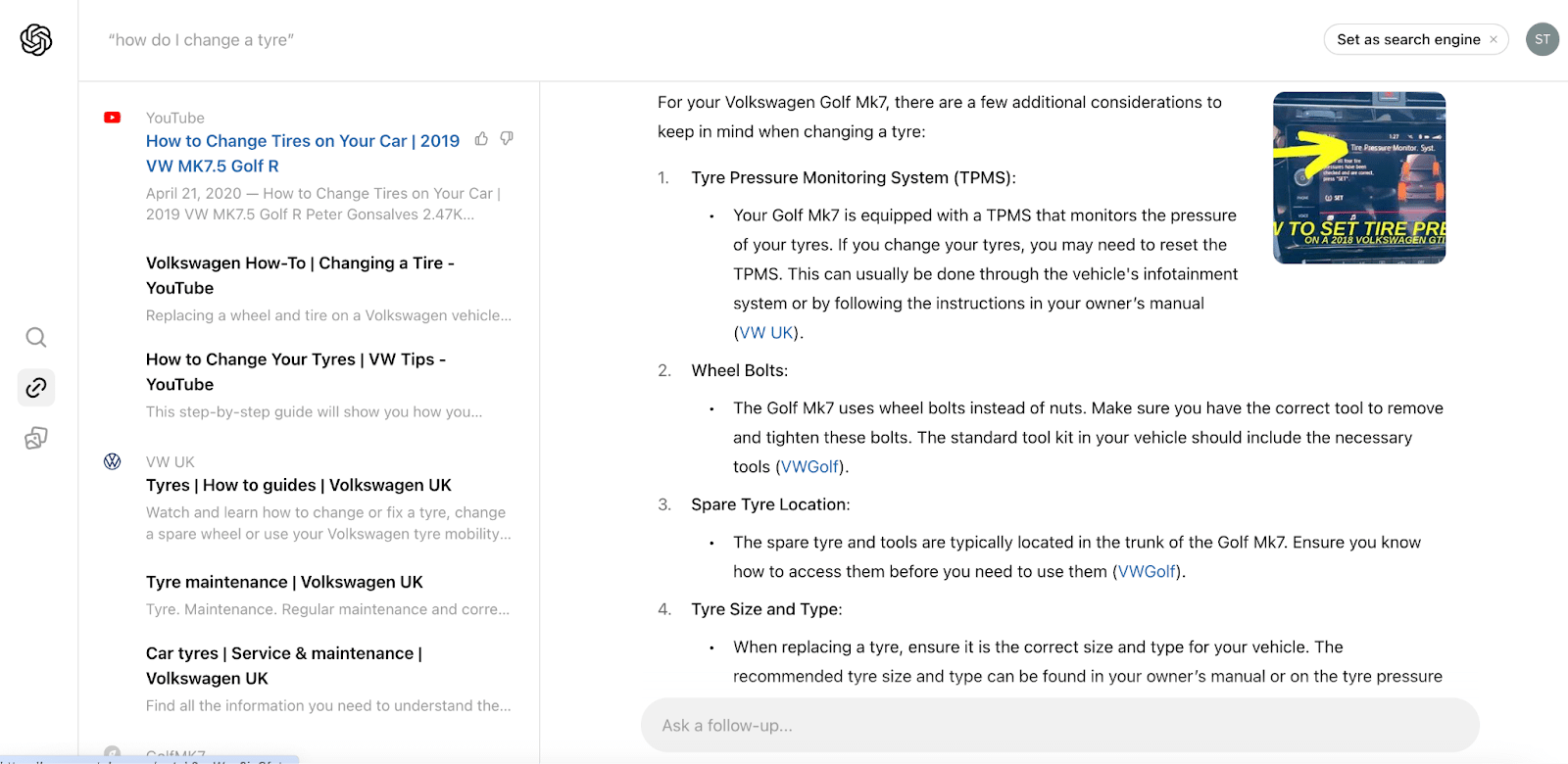

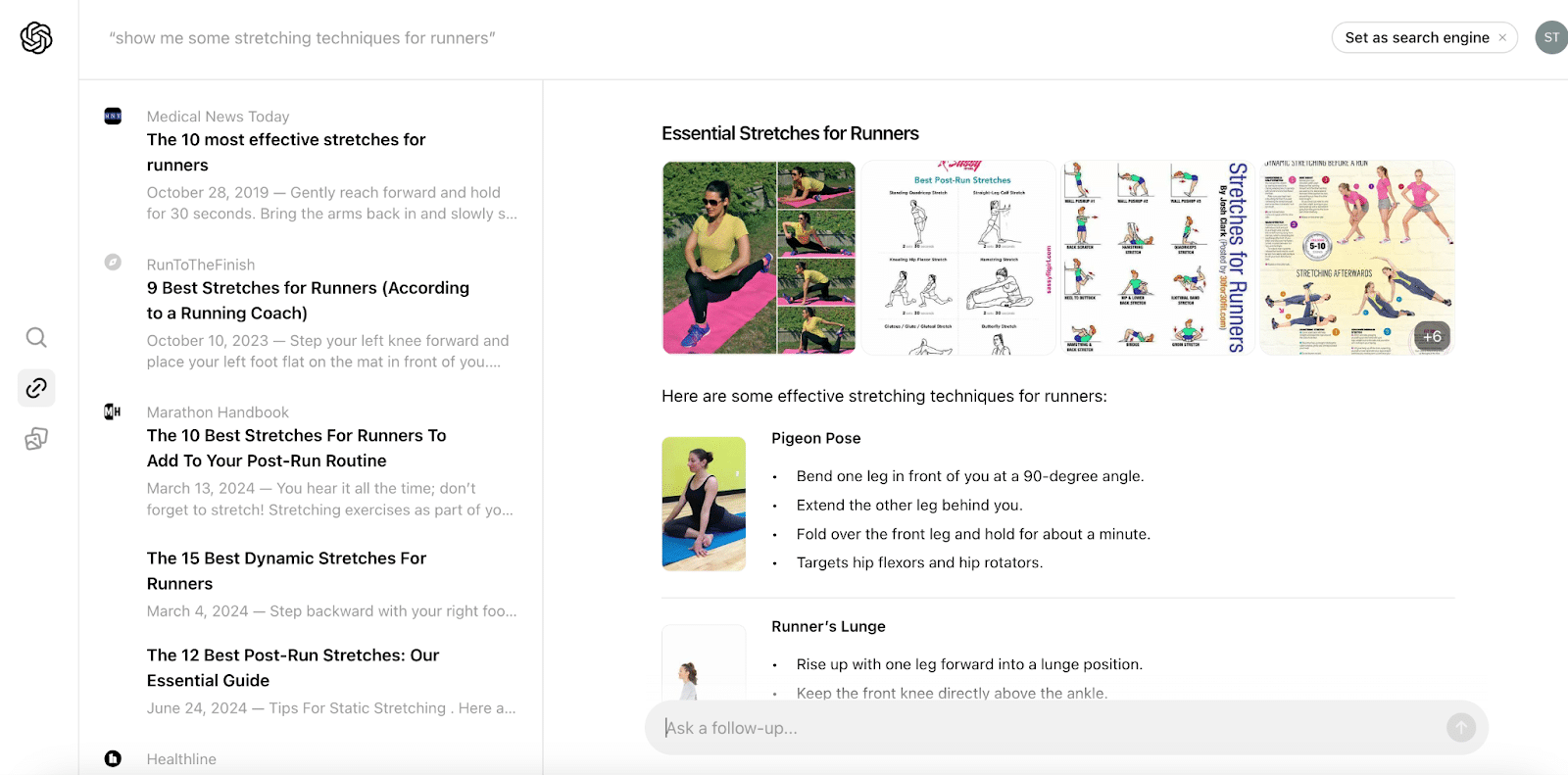

What is SearchGPT and how does it work?

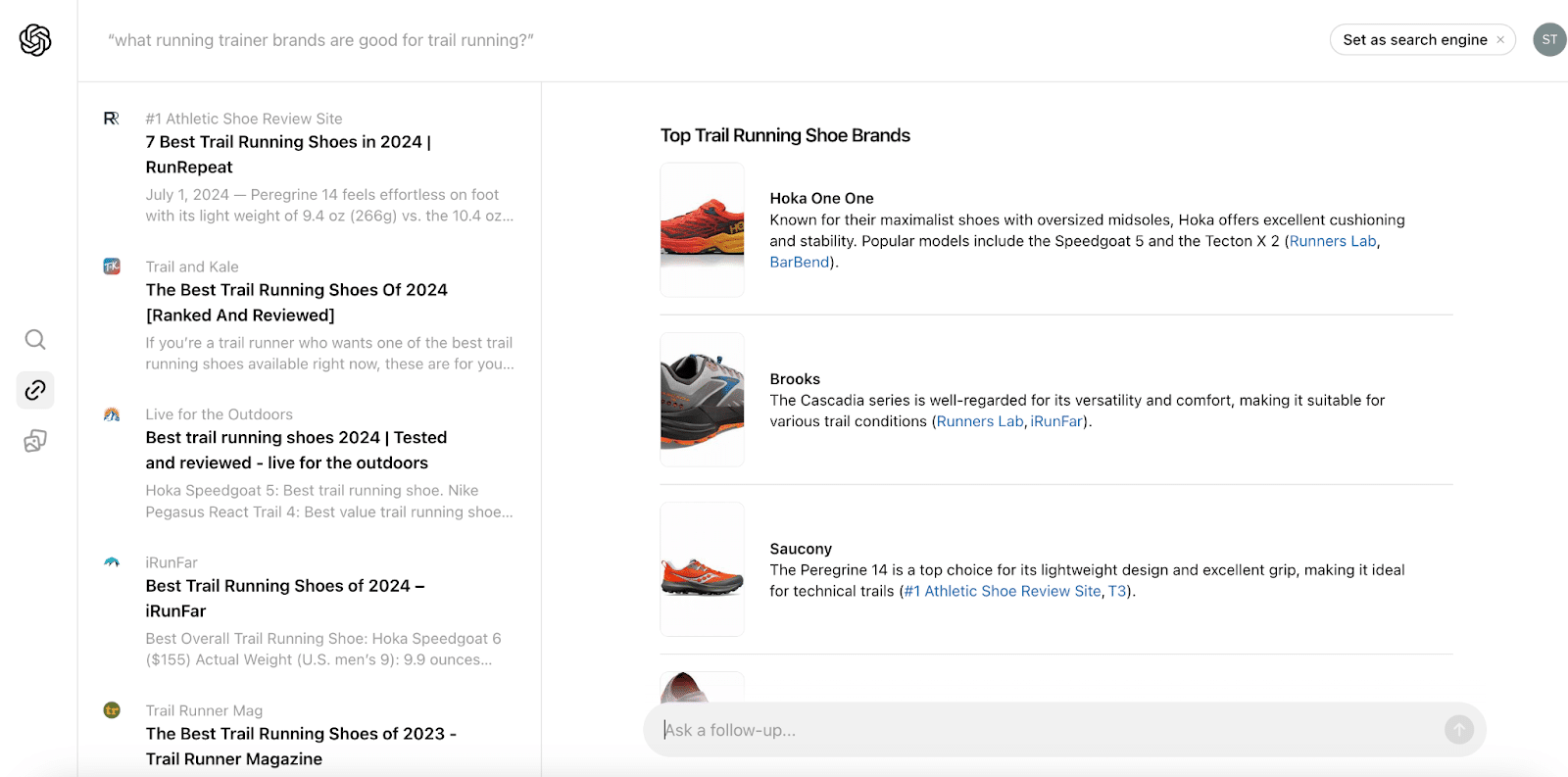

SearchGPT is an AI-powered search engine that combines the strengths of traditional search engines with the advanced conversational abilities of large language models. It delivers answers to user queries using real-time information from across the web.

Rather than returning a list of links for users to sift through like traditional search engines, SearchGPT provides direct answers, summaries and insights based on an understanding of context and the user’s intent.

OpenAI doesn’t clearly state the exact details of how SearchGPT works. However, we can surmise that it uses something akin to retrieval-augmented generation (RAG), a popular approach used by other AI search engines, including Perplexity and Google AI Overviews.

RAG is designed to reduce the likelihood of hallucinations in responses by integrating information from a database into the LLM response to enhance accuracy.

The model converts the search query into numerical embeddings that capture its meaning and searches a vector database containing trusted information sources. In this case, the web index is most likely provided by Bing based on OpenAI’s partnership with Microsoft.

By retrieving the most relevant content, SearchGPT can generate precise responses while linking back to the original web content, ensuring transparency and reliability.

The retrieved sources serve as additional context for the language model to accurately answer the user’s query.
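The retrieve-then-generate flow described above can be sketched in a few lines. This is a toy illustration only, not how SearchGPT is actually built: the three documents and their `example.com` URLs are hypothetical, and the `embed` function uses simple word counts as a stand-in for the learned embeddings a real system would use.

```python
import math
import re
from collections import Counter

# Hypothetical mini index; a real system would query a web-scale vector database.
DOCUMENTS = {
    "https://example.com/first-position": "First Position places an ad in the first in-stream slot of a YouTube session",
    "https://example.com/wayback": "The Wayback Machine preserves snapshots of the public web",
    "https://example.com/ad-labels": "Google is testing a Sponsored label with a gray background for search ads",
}

def embed(text):
    """Toy embedding: a bag-of-words count vector (assumption, not a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, k=2):
    """Return the k documents most similar to the query as (score, url, text)."""
    q = embed(query)
    scored = [(cosine(q, embed(text)), url, text) for url, text in DOCUMENTS.items()]
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:k]

def build_prompt(query, k=2):
    """Assemble the context an LLM would receive: numbered sources plus the question."""
    hits = retrieve(query, k)
    context = "\n".join(
        f"[{i}] {text} (source: {url})" for i, (_, url, text) in enumerate(hits, start=1)
    )
    return f"Answer using only the sources below and cite them by number.\n{context}\n\nQuestion: {query}"

print(build_prompt("What does the Wayback Machine preserve?", k=1))
```

The prompt that comes out carries the source URL alongside the retrieved text, which is what would let a generated answer link back to the original content.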

Key features of SearchGPT include:

- Conversational interface: Users can interact with SearchGPT in a more natural, dialogue-like manner.

- Direct answers: Instead of a list of links, SearchGPT provides concise, relevant answers to queries.

- Citations panel: A sidebar displays the sources used to generate the response, with links to the original content.

- Follow-up questions: Users can ask additional questions to explore topics further, creating a more interactive search experience.

How does SearchGPT compare to Google AI Overviews?

My initial impression of SearchGPT is positive; it certainly outperforms Google’s AI Overviews (AIO).

While SearchGPT may result in fewer clicks for informational terms – and mostly for low-intent searches – in my opinion, this shift broadens the search ecosystem and opens new doors to connect with customers beyond traditional Google SERPs.

Google’s AIO still primarily relies on traditional search, with the addition of an LLM-generated response at the top of the page, which feels more like an enhancement of rich snippet results than a full transformation. Non-tech-savvy users may not notice a significant difference.

However, for marketers, two key distinctions stand out:

- Citations.

- Conversational search.

Google’s AIO uses an icon for references, offering less transparent citations, while SearchGPT mostly links directly from the publication’s name, which could impact click-through rates.

Additionally, Google’s AIO is far less conversational. In contrast, SearchGPT allows users to build on the initial response by expanding queries using the original web content, creating a more interactive experience similar to ChatGPT’s conversational thread UI.

This means marketers might find opportunities with SearchGPT to develop content strategies that cater to a much wider range of conversational queries, encouraging users to explore content in more detail.

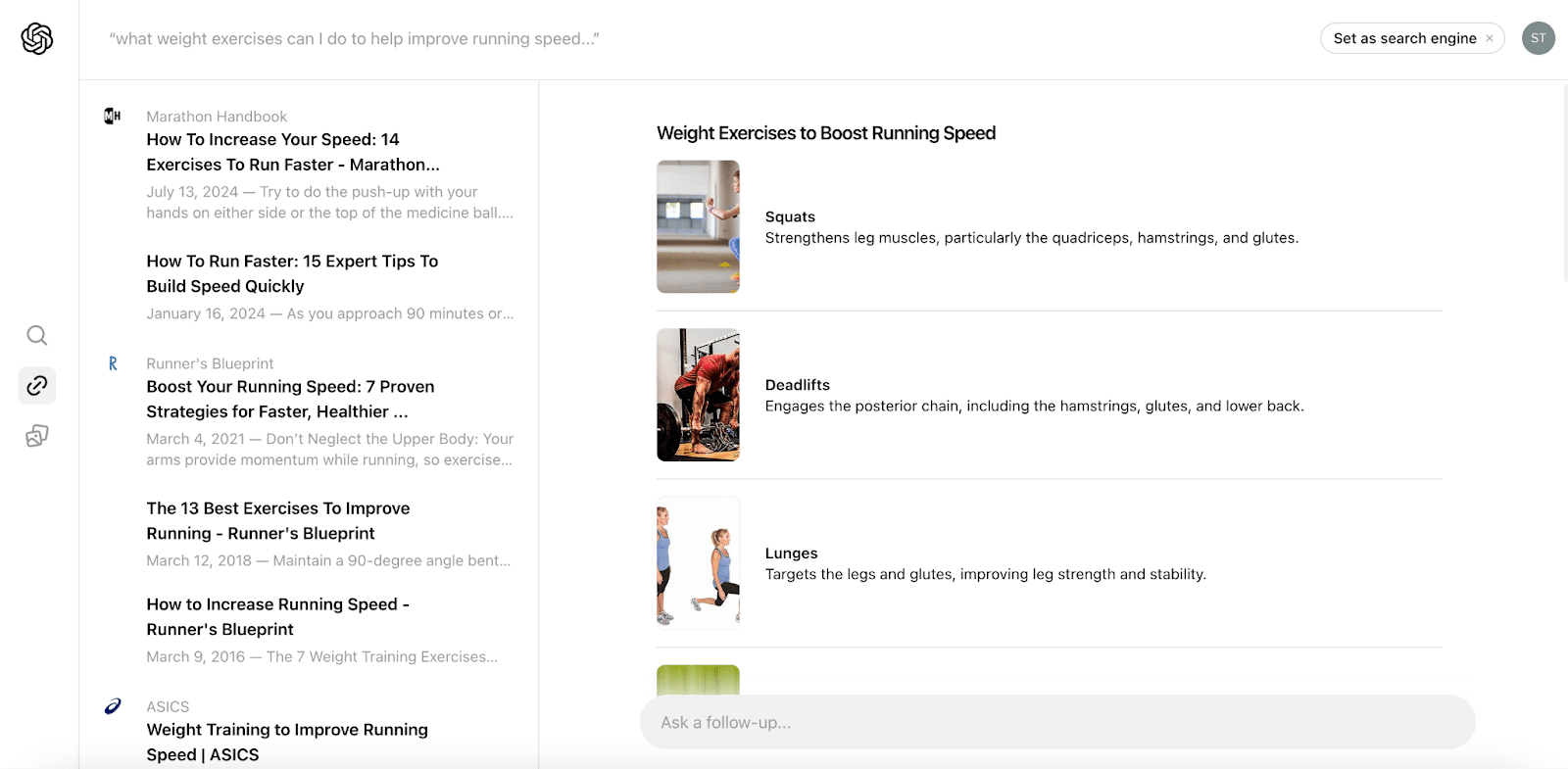

5 key implications for digital marketers

1. Search is about to become more conversational

SearchGPT’s natural language processing capabilities allow for more nuanced, dialogue-like interactions. It can understand the context and meaning behind words, which means keyword research is likely to change dramatically.

Due to its conversational nature, users are expected to ask a broader range of questions, which presents marketers with opportunities to create highly targeted content.

Instead of focusing solely on translating queries into keywords, marketers will need to understand the key topics and questions users are exploring and develop content that directly addresses these needs.

Content should be engaging, written naturally and designed to meet user intent rather than merely optimizing for algorithms. This transition emphasizes the importance of creating content that resonates with users on a deeper level.

2. Incorporate rich media into your strategies

A multimedia strategy has been crucial in marketing for years and its importance is set to grow even further. SearchGPT has the capability to reference and describe various types of media beyond just text.

Integrating videos, infographics and interactive elements into your content will enhance its value for SearchGPT.

Although for AI, descriptive text may become less necessary for multimedia content because it can understand imagery, it’s still important for your media to be well-labeled and contextually enriched.

This is to ensure your content remains inclusive and accessible to all end users, as well as being accessible and relevant to AI models, improving its effectiveness in AI-driven searches.

Dig deeper: Visual optimization must-haves for AI-powered search

3. Earned media will remain important

SearchGPT is likely to prioritize high-authority publications, meaning digital PR and thought leadership may become even more important.

What’s more, at least right now, SearchGPT seems to link out to content creators more than Google does, which may offer brands increased opportunities to boost awareness and traffic.

OpenAI’s recent partnerships with Conde Nast, The Associated Press and Vox highlight the value of content creators and underscore their role in the success of AI-powered search.

Marketers should consider targeting high-visibility content sources used by SearchGPT to enhance brand inclusion in its responses.

Developing relationships with authoritative publications and focusing on earned media can improve your chances of being featured in valuable AI-generated content.

4. High-quality content still rules them all

SearchGPT places a premium on relevant, up-to-date information, making consistent content optimization essential.

High-quality content is critical for maintaining audience engagement and increasing the likelihood of being referenced by SearchGPT. This focus on quality can drive more traffic and enhance engagement, providing a competitive edge for your brand.

Regular updates and accuracy are key to retaining relevance in the AI-driven search landscape.

Dig deeper: 6 guiding principles to leverage AI for SEO content production

5. Adapt your analytics and metrics

Marketers should consider tracking visibility through prompts and brand mentions within LLM responses – tracking a collection of prompts you believe your potential customers will be using rather than traditional rankings.

Traditional metrics like click-through rates (CTR) and page rankings will also become less relevant with AI-driven search.

Marketers should focus on new metrics like the accuracy of AI-generated answers that reference their content, user engagement levels and the impact of AI on brand visibility.

Metrics like these will provide better insights into how effectively your content is performing in the age of SearchGPT.
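As a rough illustration of tracking brand mentions across a collection of prompts, here is a minimal sketch. It assumes you have already collected the responses by whatever means are available to you; the prompts, answers and the brand name "Acme Hotels" are all hypothetical.

```python
import re

def mention_rate(responses, brand):
    """Share of tracked prompts whose response mentions the brand.

    `responses` maps each tracked prompt to the answer text that was collected for it.
    Matching is case-insensitive and on whole words.
    """
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    mentioned = [prompt for prompt, answer in responses.items() if pattern.search(answer)]
    rate = len(mentioned) / len(responses) if responses else 0.0
    return rate, mentioned

# Hypothetical sample of collected responses.
RESPONSES = {
    "best hotels in Lisbon for families": "Popular picks include Acme Hotels and several boutique stays.",
    "cheap weekend trips from London": "Consider rail packages to Paris or Brussels.",
    "hotel loyalty programs compared": "ACME HOTELS offers points on every stay.",
    "how to find pet-friendly hotels": "Filter by pet policy on the booking site of your choice.",
}

rate, prompts = mention_rate(RESPONSES, "Acme Hotels")
print(f"Mentioned in {rate:.0%} of tracked prompts: {prompts}")
```

Running the same prompt set on a schedule and comparing the rate over time gives a simple visibility trend to sit alongside traditional rankings.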

SearchGPT: The next evolution in AI-powered search

SearchGPT is new but it’s already clear that the future of digital marketing is going to be much more conversational and will move away from the traditional 10 blue links from Google. We’re still advising our clients that standard SEO best practices remain relevant.

However, brands that are not invested in earning high-quality media coverage and links through digital PR should consider adding this to their 2025 budget.

OpenAI will likely prioritize authoritative publisher content to answer AI search queries over a brand’s own website – and you don’t want to miss out on this “fast-paced evolution.”

This article was co-authored by Steve Walker.

How to drive SEO growth with structure, skimmability and search intent

Written on September 12, 2024 at 8:44 pm, by admin

B2B content contains a Catch-22:

- You need to write for search to justify the expense to produce an evergreen asset that will grow long-term ROI, but…

- You also need to write for a sophisticated audience to build the credibility required to eventually see said ROI.

Most brands skew too far in one direction or the other.

Write primarily for search and you get derivative, regurgitated, copycat content that immediately erodes trust with discerning prospects.

Write only for prospects, however, and your content is ephemeral – forever relying on short-term bumps in referral traffic that get forgotten within a week.

Semrush has somehow managed to bridge this divide for over a decade. Their year-over-year revenue was up 21% in Q1 of 2024, while growth in large customers paying $10,000 annually is also up 32% YoY.

In this article, Semrush’s Managing Editor, Alex Lindley, shares how his three S approach – structure, skimmability and search intent – can fuel SEO growth, plus helpful examples and takeaways.

1. Structure: Answer search intent without delaying the ‘time to value’

Writing for search and readers is a delicate balancing act.

On the one hand, you need to entice readers by setting up the problem and illustrating symptoms before providing alternative solutions.

On the other hand, you need to clearly answer search intent and structure articles similar to what’s already ranking so you can have a shot at evergreen traffic.

Nowhere is this conundrum more obvious than during the editing stage. An editor might think the paragraphs and phrasing are the issue, while the underlying root cause is actually a poor article structure to begin with.

You can think of this “structure” problem as twofold:

- You spend too much time talking (or writing) about stuff that doesn’t matter, while also

- Not spending nearly enough time on the stuff that does.

Lindley starts with classic journalism advice, structuring articles in an inverted pyramid to shorten the “time to value” for readers.

- “For content the writer is creating for SEO purposes, I always point to the inverted pyramid and/or the bottom line up front (BLUF) framework.”

- “The reason is simple: The single biggest mistake I see writers make is delaying the time to value by adding too much exposition before getting to the point. Delaying the time to value essentially negates any attempt you make later to address search intent because a huge number of readers won’t stick with you long enough to see if you ever do get to the information they’re looking for.”

- “I realize, however, that this approach can alienate some readers – and, importantly, writers I’m working with – who prefer a bit of narrative or simply love language and writing for writing’s sake. Balancing strong structure and search intent with narrative and the “delight” factor is tricky, but when there’s any doubt, I always recommend leaning on BLUF first and everything else second. It’s the best possible approach when you’re not entirely sure of the best approach.”

This advice is especially relevant for long-form B2B content.

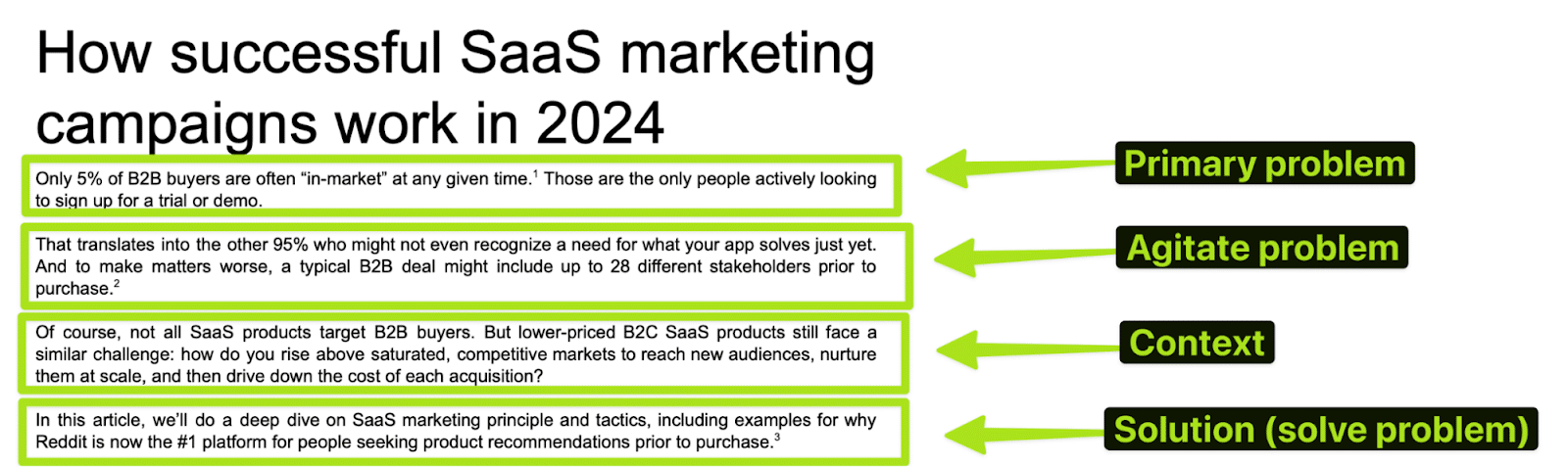

The decades-old Problem, Agitate, Solution (PAS) copywriting framework helps set context. You want to provide some background commentary so the reader immediately understands and resonates with the point you’re making, so that the ultimate payoff (or “solution”) hits that much harder.

The problem is that you might take too long to get there.

The trick, then, is to get in and get out – ASAP! Concision is the name of the game.

Unfortunately, this isn’t the only “structure” issue that causes concern.

Delaying the “time to value” is increasingly common because that’s how more and more “search”-driven content is being structured.

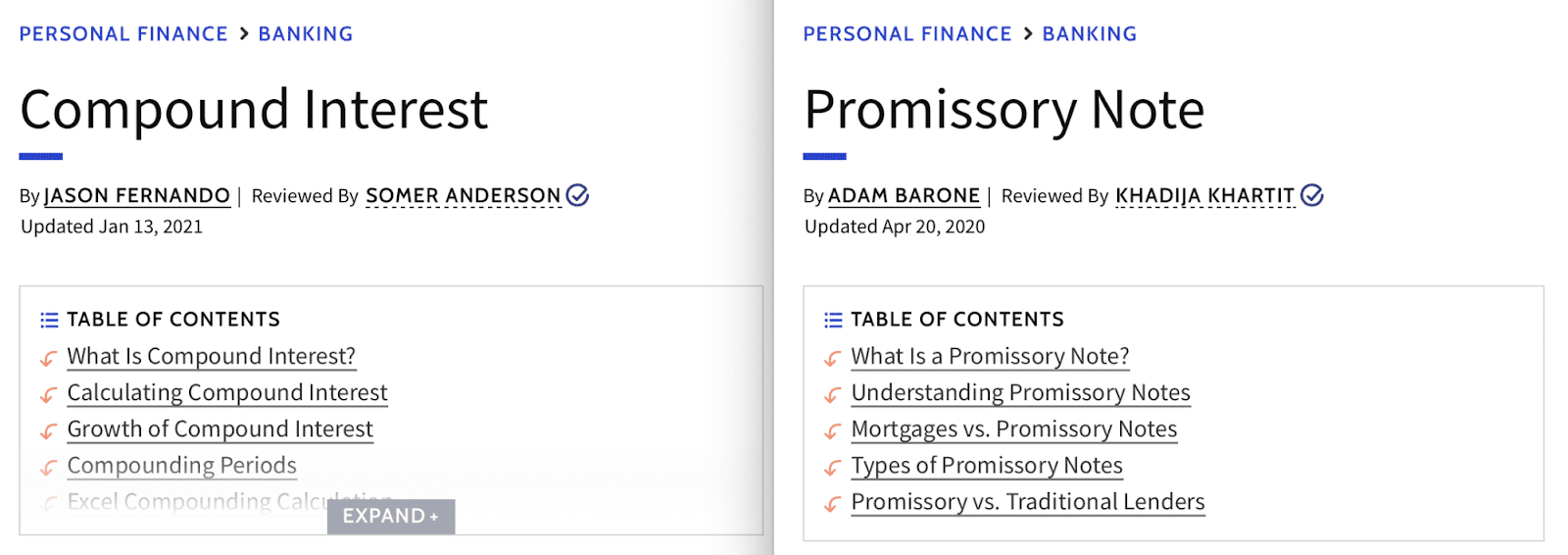

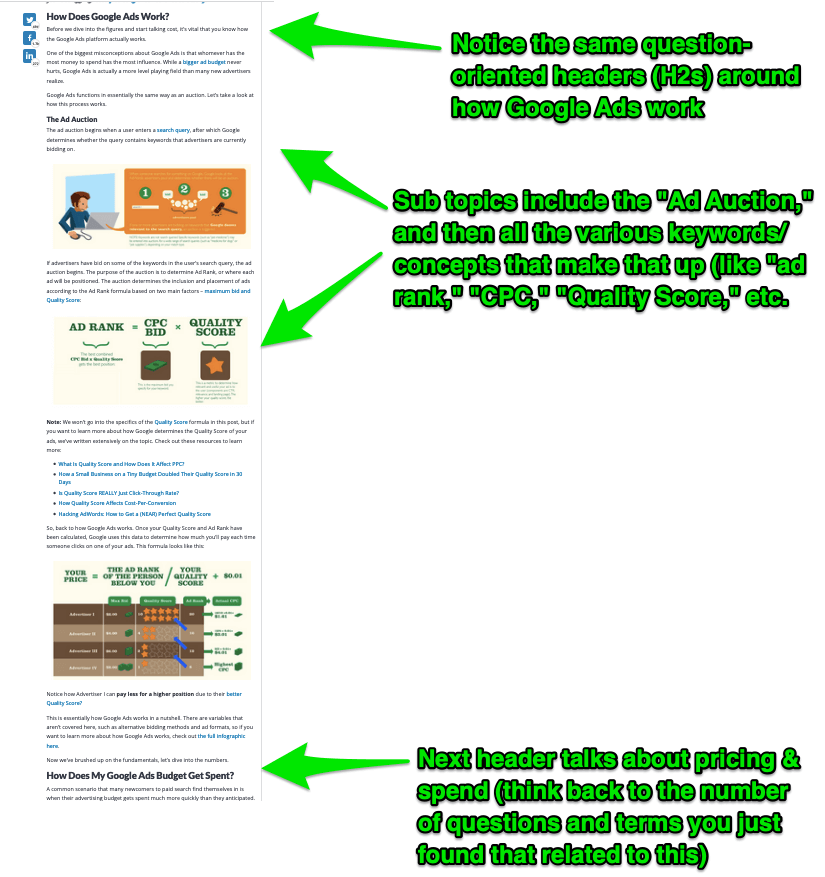

Look at the two side-by-side Investopedia examples below. Both are glossary or definition-based content, so notice the similarity in the heading structures used across each:

Another way most search content falls flat is by spending too long on the initial sections of an article (the “what is” or “why it’s important” sections) while not spending enough time answering the primary query behind the article.

- “The No. 1 biggest red flag I see with article structure is a compulsion to do the “who, what, where, why” in massive sections before getting to the meat of the article,” confirms Lindley.

- “For example, you have an article titled ‘Top SEO Tools for 2024.’ Then the structure looks like this:

  - H1: Top SEO Tools for 2024
    - H2: What Are SEO Tools?
    - H2: Why SEO Tools Are Important
      - H3: Reach a Broad Audience
      - H3: Save on Advertising Costs
      - H3: Make Data-Driven Decisions
    - H2: How to Choose an SEO Tool
      - H3: Consider Your Budget
      - H3: Compare ‘Must-Have’ Features
      - H3: Test Them
    - H2: 11 Best SEO Tools
      - H3: Tool 1
      - Etc.

- “We get 1,500 words or more in before we’ve even gotten to the point of the article. How many readers will sit through that or even scroll that many times before they get to the part they came for? Very few.”

- “Unfortunately, this kind of structure is really common online. It’s a search intent and time to value problem. And it often comes from either believing that search engines ‘want’ to see that kind of thing or feeling the need to reach a particular word count.”

- “But we simply don’t need to do that. In fact, we really shouldn’t if we aim to keep readers engaged. If the title promises something, give that thing to the reader right away. Don’t delay; don’t clear your throat. Just put it front and center. And if you need to cover the tangential whys and hows, do that later on.”

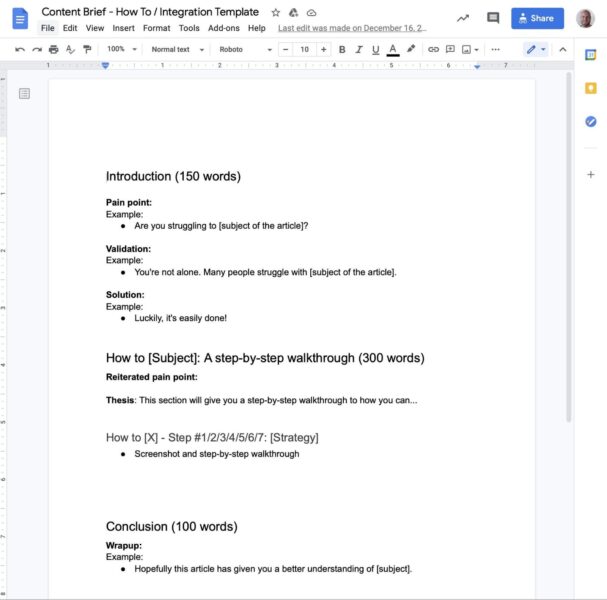

One way to mitigate this is to structure content in briefs and outlines with predetermined word count max ranges.

That way, you might still want to include the “what is” section to define a topic for search intent, but then remind writers to quickly move down to spending more time (or word count) on the sections that matter most.
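One way to make those word count caps concrete is a small check run against a draft. The sketch below is an assumption-laden illustration: the caps in `BRIEF` and the per-section counts in `DRAFT` are hypothetical numbers chosen to mirror the “Top SEO Tools” outline discussed earlier.

```python
# Hypothetical brief: (heading, max_words) in the order the sections appear.
BRIEF = [
    ("What Are SEO Tools?", 100),
    ("Why SEO Tools Are Important", 150),
    ("How to Choose an SEO Tool", 200),
    ("11 Best SEO Tools", 2000),
]

def sections_over_cap(brief, draft_word_counts):
    """Return (heading, actual_words, cap) for every section that exceeds its cap."""
    return [
        (heading, draft_word_counts[heading], cap)
        for heading, cap in brief
        if draft_word_counts.get(heading, 0) > cap
    ]

def words_before(brief, draft_word_counts, main_heading):
    """Count the words a reader scrolls past before reaching the main section."""
    total = 0
    for heading, _cap in brief:
        if heading == main_heading:
            return total
        total += draft_word_counts.get(heading, 0)
    raise ValueError(f"{main_heading!r} is not in the brief")

# Hypothetical word counts measured from a submitted draft.
DRAFT = {
    "What Are SEO Tools?": 400,
    "Why SEO Tools Are Important": 650,
    "How to Choose an SEO Tool": 450,
    "11 Best SEO Tools": 1800,
}

for heading, actual, cap in sections_over_cap(BRIEF, DRAFT):
    print(f"{heading}: {actual} words (cap {cap})")
print("Words before the main section:", words_before(BRIEF, DRAFT, "11 Best SEO Tools"))
```

With these sample numbers, the three preamble sections all exceed their caps and the reader is 1,500 words in before the list starts, which is the pattern the caps are meant to catch.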

A final tip on article structure and the subheading organization underneath is parallelism. Here’s how Lindley thinks of it:

- “Headers – the building blocks of article structure – should always be parallel. Listicles make an easy example. Here’s the ‘bad’ way to approach it, compared with the ‘good’ one right after.”

- H1: 3 Content Writing Tips

- H2: 1) Use Short Sentences and Paragraphs

- H2: 2) Read It Out Loud Before You Publish It

- H2: 3) Nailing the Search Intent (bad example)

- H1: 3 Content Writing Tips

- H2: 1) Use Short Sentences and Paragraphs

- H2: 2) Read It Out Loud Before You Publish It

- H2: 3) Nail the Search Intent (good example)

This last point seems small and nuanced on the surface. But as you’ll see in the next section below, it actually has a huge bearing on how “skimmable” the content is overall and whether you’re keeping the reader engaged to the end of the content.

2. Skimmability: Provide contextually relevant examples without interrupting the reading flow

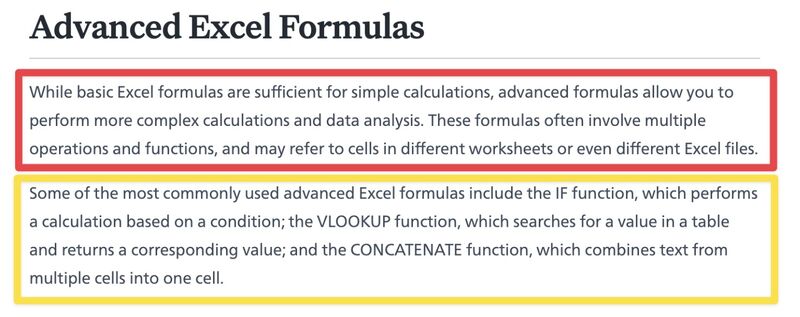

AI content can tell you what “advanced Excel formulas” are, as evidenced by this sample below:

However, it’ll never:

- Show you advanced Excel formulas.

- Explain why they matter for the people they help.

- Illustrate how to create advanced Excel formulas.

- Put them into a usable format like a free template or tool.

You future-proof SEO by avoiding head-on competition with what AI can do well. And instead you do what AI can’t do.

Backing up the points made in an article helps the reader visualize what you’re describing and increases the credibility of your claims.

It also arms your content with differentiation that other publishers can’t match.

- “The context part is often lacking in web content,” explains Lindley.

- “Many web publishers will throw a faintly relevant image at the top or bottom of a section and call it done – almost as if they’re working from some kind of checklist. But we need to aim for a higher level of helpfulness.”

The trouble is that knowing how to incorporate good examples always throws writers and editors for a loop. Thankfully, Lindley has a good framework to keep in mind:

- “Concept > Context (for the image or example) > Image or Example.”

- “In other words, start with the concept you’re introducing. State it plainly. Then, provide context for the example or image you’re about to introduce. Then include the example or image.”

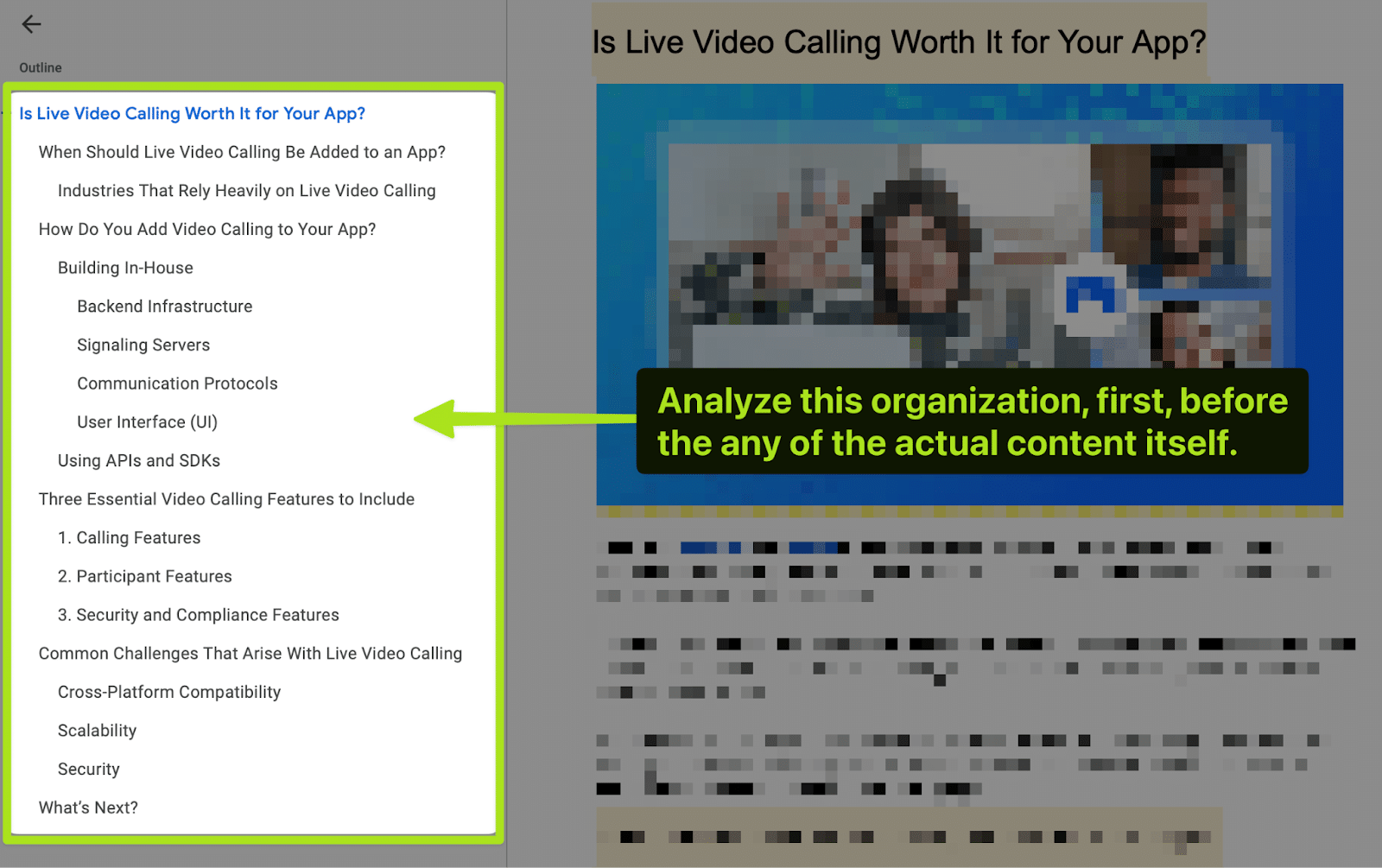

The second major skimmability issue can actually be spotted well in advance, prior to ever reading a single line of the content itself.

Go back to the overall structure again!

- “One skimmability problem that is easy to illustrate with examples (and highly applicable to many web writers) is when you’re unable to understand what a section or article is about from just the header title(s). That’s why I always look at the table of contents or stacked headers on the left side of the Google Doc before I start editing an article.”

In other words, start by familiarizing yourself with what is being proposed, the nested information under each section, and how these sections build on top of one another to get a general sense of the problems, challenges or examples that will ultimately be most appropriate later on.

Lindley illustrates the point with an example:

- “For example, if I see the H2 ‘Content Marketing’ in an article titled ‘Types of Digital Marketing,’ I am pretty sure that section will describe how content marketing is a type of digital marketing. But if I see the H2 ‘Fire Up Your Keyboard’ in that same article, I’m confused, and I know there’s a problem.”

How do you know whether you (or your writers) are on the right track?

Again, back out of the actual paragraphs to take in the proposed article as a whole.

The table of contents or header structure can help, as can literally minimizing the text sizing in your browser to zoom out and consider all of the content together, like so:

Last but not least, here are three additional “don’ts” Lindley recommends following to help avoid interrupting the reading flow or risk losing the reader:

- Don’t make the reader squint to look for details in images that help them understand what they’re looking at.

- Don’t make them scroll back up to the paragraph text to look for help understanding what they’re looking at.

- Don’t make them read your explanation below the image or example and then go back up to the image or example to finally understand it.

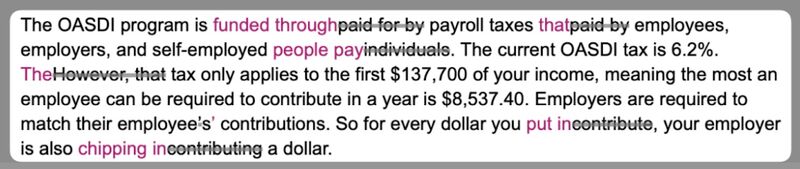

3. Search intent: Focus editing on reader clarity, less on phrasing or semantic keywords

Over the last decade of working across hundreds of brands, I’ve noticed that good writers often make bad editors and terrible content managers.

The reason comes down to a skill set mismatch, where good writers excel at ingenuity and saying the same things in different ways, while good editors instead laser-focus on consistency and clarity.

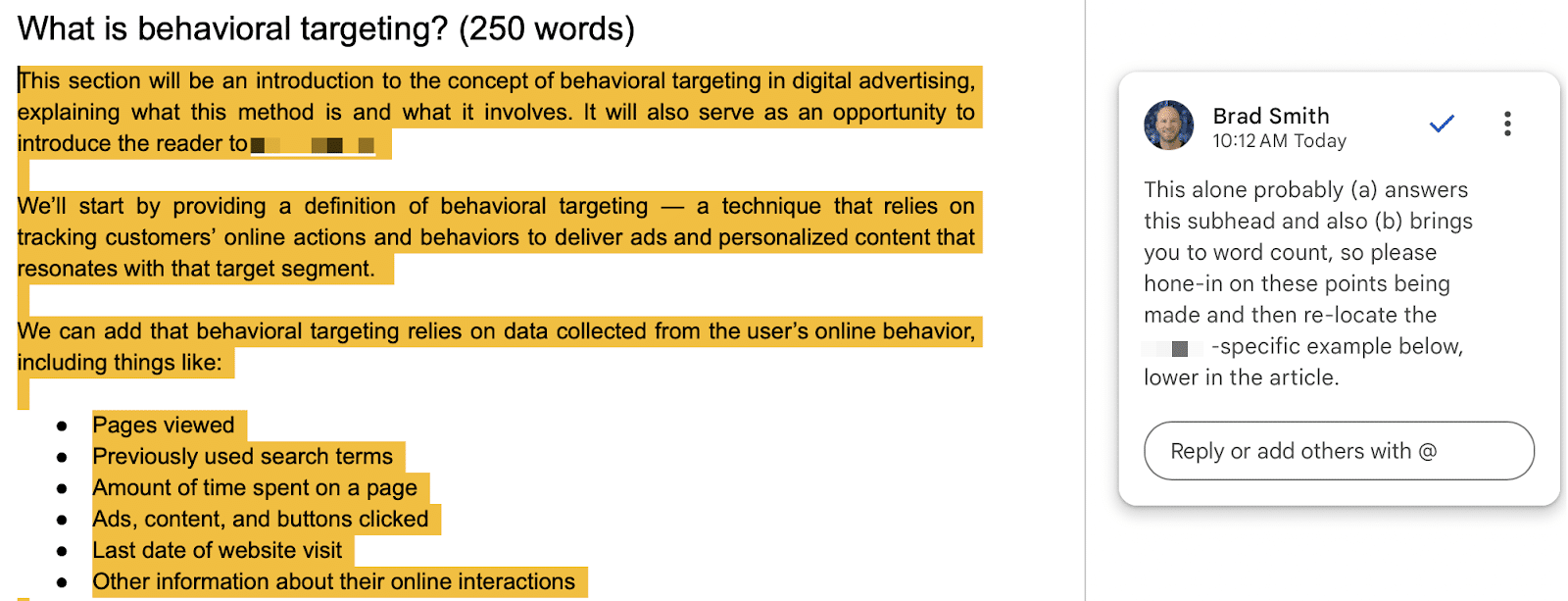

For example, take a look at the following “edits”:

As you can tell, these were made by a good “writer,” perhaps, but as edits, they miss the point.

The best editors are often akin to a coach. Their job is to sit at the intersection of the brand, the reader and search intent, then make sure to erect “bumpers” on each side to keep writers clear on the primary direction of travel.

- “The best editors maintain a radical focus on the reader. They’ll even break well-established writing rules to serve that focus,” affirms Lindley.

- “Getting into more specific areas of focus, editors should put structure, skimmability and search intent at the center of nearly everything they do.”

- “The other stuff – images, line edits, spelling, grammar, etc. – is important. But you can have all that extra stuff completely perfect and still have a bad article because you’ve neglected structure, skimmability or search intent.”

- “That’s not to say editors shouldn’t care about other concerns. They should, but if I only had 30 minutes to spend editing an article, I wouldn’t change a single word before I addressed those three Ss.”

Lindley is also a proponent of role specialization, where “strategists focus more on keywords, distribution, and the like,” while the writer can “focus on the sentence-level stuff.”

The editor might review all of these details prior to publishing, but none of them outweigh structure, skimmability and search intent.

How do you help enforce (or reinforce) these principles in practice? Especially at scale or higher volumes across a broad team?

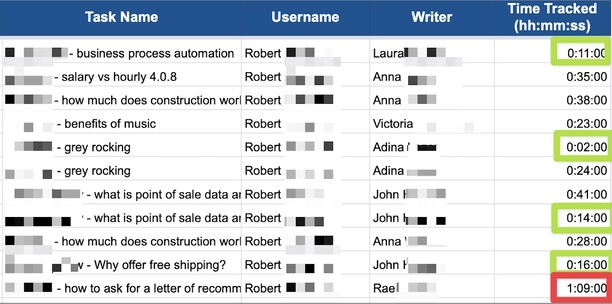

The best way I’ve found is to make editors track time against every article, writer, and content type. Then, set established benchmark thresholds for each.

For example, after publishing thousands of articles each year over the past few years, we’ve noticed that if editors continuously spend over an hour editing certain articles, it actually indicates:

- A process problem (identify underlying gaps in briefs/templates).

- An internal documentation problem (ICP/product positioning communicated + re-trained).

- A role/expectations problem (editors wanna rewrite vs. edit).

- A delegation problem (editors/content managers ‘need to do it themselves’ vs. building a systematic workflow with the first three above).

And often not a “writer” or “editor” problem.

Here’s how to set up this internal feedback loop to make sure everyone is focused on the highest and best use of their respective times (and skills):

- You should have estimated editing time ranges, including caps, to edit each content type, format, or length.

- Add time tracking per article and per writer (even more true if editors are fixed cost / in-house / full-time).

- Use this baseline data to identify trends, patterns and bad habits (rewriting vs. editing).

- Force editors to flag underlying issues or gaps – not just fix surface-level issues – that should have been better spelled out, structured or illustrated for writers in preceding steps.

- Review these issues weekly to create new supporting resources to continually re-train your editorial team.
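The feedback loop above can be sketched as a small script over a time-tracking log. Everything here is hypothetical: the caps per content type, the log entries and the writer names are made up for illustration, with the long-form cap set to 60 minutes to match the “over an hour” signal mentioned earlier.

```python
from collections import defaultdict

# Hypothetical editing-time caps per content type, in minutes.
CAPS_MINUTES = {"glossary": 30, "long-form": 60}

# Hypothetical time-tracking log: one entry per edited article.
LOGS = [
    {"article": "a1", "writer": "W1", "type": "long-form", "minutes": 95},
    {"article": "a2", "writer": "W1", "type": "long-form", "minutes": 50},
    {"article": "a3", "writer": "W2", "type": "glossary", "minutes": 25},
    {"article": "a4", "writer": "W2", "type": "glossary", "minutes": 40},
    {"article": "a5", "writer": "W2", "type": "long-form", "minutes": 55},
]

def over_cap(logs, caps):
    """Return the log entries whose editing time exceeded the cap for their content type."""
    return [log for log in logs if log["minutes"] > caps[log["type"]]]

def over_cap_rate_by_writer(logs, caps):
    """Share of each writer's articles that took longer to edit than the cap."""
    totals = defaultdict(int)
    over = defaultdict(int)
    for log in logs:
        totals[log["writer"]] += 1
        if log["minutes"] > caps[log["type"]]:
            over[log["writer"]] += 1
    return {writer: over[writer] / totals[writer] for writer in totals}

for log in over_cap(LOGS, CAPS_MINUTES):
    print(f"Flag for weekly review: {log['article']} ({log['minutes']} min, {log['type']})")
print(over_cap_rate_by_writer(LOGS, CAPS_MINUTES))
```

The flagged entries are a prompt for the weekly review rather than a verdict on the writer or editor; the point is to trace each overrun back to a brief, documentation, role or delegation gap.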

This feedback loop has two benefits:

- Editors’ editing-per-piece effort will drop like a rock, resulting in a better experience for them.

- It also allows editors to edit more content in the same amount of time, which is a better ROI for you.

The end result is that more editing comments should follow Lindley’s recommended three S approach, providing broad, strategic recommendations like the comment below early on – as opposed to the individual rewording of sentences at the start of this section.

A balanced content strategy delivers evergreen results and boosts revenue

There’s a constant tension when writing for search and readers. Lean too far in either direction and the final outcome can often sacrifice one at the expense of the other.

The trick, as with most things in life, is to lean into the gray area filled with nuance, while also avoiding knee-jerk reactions that try too hard to oversimplify.

If you want readers to consume, engage, save, and share search-driven content, the answer isn’t to start cutting important context like your introductions. Instead, you should be writing introductions that deserve to be read.

Building your publishing process (and editing) around the three S approach is a perfect start to walking this fine line.

That’s because structure, skimmability and search intent aren’t just simple, practical guardrails for editorial teams. They’re also the foundation for writing marketing content that gets evergreen results.

Google tests more noticeable ad labels in search results

Written on September 11, 2024 at 5:43 pm, by admin

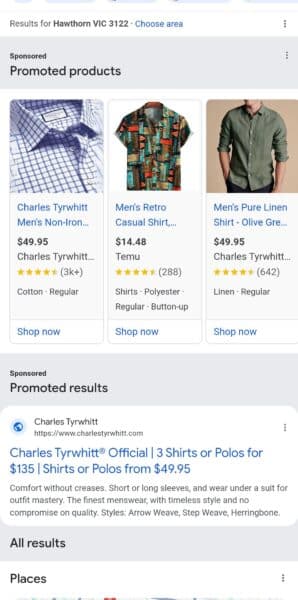

Google is experimenting with a new way to make ads more distinguishable in its search results.

The update introduces a taller gray background for ads, accompanied by a “Sponsored” label and subtitles like “Promoted products” or “Promoted results.”

This is a shift from Google’s current, more subtle labeling approach.

What it looks like. Here’s a screenshot, shared by Gagan Ghotra on X:

Additionally, after the ads, Google now labels the following section as “All results,” clearly distinguishing the organic, non-sponsored listings from paid content.

Why we care: This test signals a possible shift in how Google balances ad visibility and user experience. Advertisers should keep a close eye on metrics like CTR and conversion rates as these experiments evolve.

Bottom line. This move, should it become permanent, could make ads more noticeable, potentially influencing user behavior and click rates, as Google continues to fine-tune its ad presentation for clarity and transparency.

Google adtech antitrust trial: Everything you need to know

Written on September 11, 2024 at 5:43 pm, by admin

Google is on trial for allegedly abusing its dominance of the $200 billion digital advertising industry.

The U.S. Department of Justice claimed that through acquisitions and anticompetitive conduct, Google seized sustained control of the full advertising technology (“adtech”) stack: the tools advertisers and publishers use to buy and sell ads, and the exchange that connects them.

In response, Google denied the claims, stating that several ad companies compete in the space, that a mixture of tools is used so it doesn’t collect the full fees, that its fees are lower than the industry average and that small businesses will suffer the most if it loses this case.

The outcome of the landmark case could bring significant changes to Google and publishers. However, experts argue that it could seriously hurt advertisers as well.

It’s equally possible the trial will result in no changes and Google will be free to continue operating as it wants.

Day 1: Accusations and badgering of witnesses (Sept. 9)

The DOJ laid out its accusations as follows:

- Google controls the advertiser ad network.

- Google dominates the publisher ad server.

- Google runs the ad exchange connecting the two.

Google’s defense:

- Disputed the definition of open-web display ads.

- Argued the DOJ’s market definition is “gerrymandered” – the DOJ is manipulating the boundaries of its definition to make Google out to be the bad guy.

- Presented a chart showing competitors like Microsoft, Amazon, Meta, and TikTok.

Bottom line. This trial could determine whether Google’s control over digital advertising constitutes an illegal monopoly, potentially affecting how information is disseminated online.

What’s next. The trial is expected to last several weeks. If the DOJ wins, Google could face up to $100 billion in advertiser lawsuits, according to Bernstein analysts.

Deep dive. Read our Google antitrust trial guide for a breakdown of everything you need to know from the first trial last year.

This article will be regularly updated with the latest developments from this landmark trial.

Google Search adds Internet Archive’s Wayback Machine links to about this page

Written on September 11, 2024 at 5:43 pm, by admin

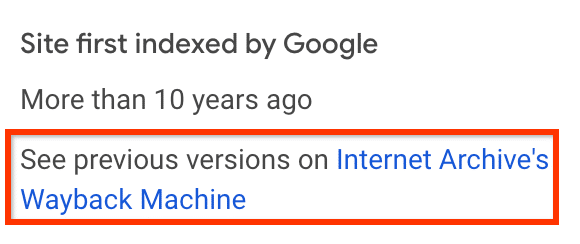

Google Search is rolling out a change to its About this page/result feature that adds links to the Internet Archive’s Wayback Machine. This enables searchers to view previous versions of a given webpage.

Google likely added this feature in response to complaints about its removal of the cache link from that feature.

What Google said. “We know that many people, including those in the research community, value being able to see previous versions of webpages when available. That’s why we’ve added links to the Internet Archive’s Wayback Machine to our ‘About this page’ feature, to give people quick context and make this helpful information easily accessible through Search,” a Google spokesperson told us.

How it works. To access this feature, click on the three dots near a search result. That will bring up the About this result feature, and within it you will be able to select “More About This Page” to reveal a link to the Wayback Machine page for that website.

I don’t see this feature yet but it should be somewhere in this panel when it fully rolls out:

Update: Google sent me a screenshot of what the link looks like in the more about this page section:

More details. The Internet Archive is a nonprofit research library that curates a digital archive of internet sites, called the Wayback Machine, along with other cultural artifacts in digital form. Mark Graham, director of the Wayback Machine at the Internet Archive, wrote:

“The web is aging, and with it, countless URLs now lead to digital ghosts. Businesses fold, governments shift, disasters strike, and content management systems evolve—all erasing swaths of online history. Sometimes, creators themselves hit delete, or bow to political pressure. Enter the Internet Archive’s Wayback Machine: for more than 25 years, it’s been preserving snapshots of the public web. This digital time capsule transforms our “now-only” browsing into a journey through internet history. And now, it’s just a click away from Google search results, opening a portal to a fuller, richer web—one that remembers what others have forgotten.”

Why we care. I use the Wayback Machine a lot for my research here and for other work-related projects. Having quick access to these links in Google Search will be useful for me and for searchers.

This should also help with some of the complaints about Google dropping the cache link, but it does not resolve the complaints about no longer being able to see how Google sees your pages. For that, you can use the URL Inspection tool in Google Search Console or Google’s Rich Results Test.
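If you want to reach archived copies without waiting for the rollout, the Wayback Machine’s public URL pattern can be assembled by hand. The sketch below is only an illustration: the helper name `wayback_url` is hypothetical, and nothing here says Google’s new link uses this exact form.

```python
WAYBACK_PREFIX = "https://web.archive.org/web"


def wayback_url(page_url, timestamp="*"):
    """Build a Wayback Machine URL for page_url (hypothetical helper).

    timestamp follows the YYYYMMDDhhmmss pattern and may be partial
    (for example "2024"); "*" asks for the overview of all captures
    instead of a single snapshot.
    """
    return f"{WAYBACK_PREFIX}/{timestamp}/{page_url}"


# Overview of every capture of a page.
print(wayback_url("https://example.com/"))

# A capture from around the start of 2024, if one exists.
print(wayback_url("https://example.com/", "2024"))
```

Whether a snapshot actually exists for a given page and date depends on what the Internet Archive has crawled.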

Ready to transform your marketing? Claim your free SMX Next pass now.

Written on September 11, 2024 at 5:43 pm, by admin

Search marketers like you must navigate a sea of constant change… from major algorithm updates to the birth of AI overviews, ever-shifting ranking factors, evolving consumer expectations… the list goes on.

The key to smooth sailing is to stay on top of the latest trends by training with experts who are ready to help.

Head into 2025 equipped with fresh knowledge and actionable tactics that can help you execute winning SEO and PPC campaigns today… and prepare for what’s coming next: Attend SMX Next, online November 13-14, for free!

This is your final chance in 2024 to unlock the world-class Search Marketing Expo experience and everything that comes with it:

- Actionable tactics. Walk away with reliable, actionable, brand-safe insights you can immediately apply to your campaigns to drive measurable results.

- In-depth live Q&A. Tap directly into some of the brightest minds in search, ask them your questions, and get real-time answers during Overtime!

- Engaging discussions. Connect with and learn from like-minded search marketers and industry experts during live Coffee Talk meetups.

- Instant on-demand access. Can’t attend live? On-demand replay is included with your free pass so you can train at your own pace.

- 100% online. Log on from your cubicle, coffee shop, couch – wherever! – and skip the plane ride, hotel room, and time out of the office.

- 100% free. Continued training doesn’t have to break the bank. Leave the expense reports (and expensive in-person training experiences) behind!

- Certificate of attendance. Showcase your knowledge of the latest industry trends with a personalized certificate and digital badge, perfect for posting on LinkedIn.

The Search Engine Land experts are hard at work programming the agenda… I’ll reach back out next week with more details. In the meantime, secure your free registration now!

Since 2006, SMX has helped more than 200,000 search marketers from around the world achieve their professional goals. Now, it’s your turn.

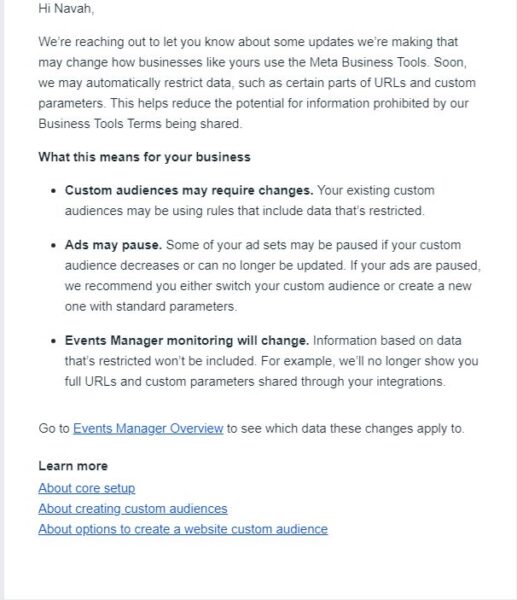

Meta to restrict data in Business Tools, impacting ad targeting

Written on September 10, 2024 at 2:42 pm, by admin

Meta is implementing new data restrictions in its Business Tools, which could potentially affect how businesses target ads and measure performance.

Why we care. This move reflects Meta’s ongoing commitment to privacy, but could complicate ad targeting and reporting for you.

Key changes.

- Automatic restriction of certain URL parts and custom parameters.

- Potential pausing of ads using highly targeted UTMs.

- Altered monitoring in Events Manager.

Impact on businesses.

- Custom audiences may need adjustments.

- Some ad sets could be paused.

- Reduced visibility in Events Manager.

First seen. Meta communicated this update to advertisers this week. PPC expert Navah Hopkins of Optmyzr shared the email she received on LinkedIn:

Hopkins commented about the importance of this update:

- “This is a critical reminder that Meta is taking privacy very seriously and our ability to report and target based on seeing/clicking ads is no longer a guaranteed state.”

Claude Sprenger, managing partner at Hutter Consult AG, was not surprised by this update and offered some advice on what the effects could be:

- “Sounds worse than it is. Meta already started restricting this data 12 months ago. Concrete effects – Target groups based on specific URL paths grow more slowly and can no longer be created retroactively. Target groups that combine URL paths and another rule (example: device) are no longer functional and can no longer be used in campaigns.”

What’s next.

- Businesses should review and potentially adjust custom audiences.

- Prepare for possible compromises on UTM parameters.

- Keep stakeholders informed about potential reporting changes.
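One way to start the review suggested above is to take stock of which query parameters your landing-page URLs actually carry. The following is a minimal sketch, not Meta’s logic: the email does not spell out which URL parts or parameters get restricted, so the split into “standard UTM” and “custom” keys is an assumption for auditing purposes, and the function name and example URL are hypothetical.

```python
from urllib.parse import urlsplit, parse_qsl

# The five conventional UTM keys; anything else is treated as custom.
# This split is an assumption for the audit, not Meta's definition.
STANDARD_UTM_KEYS = {
    "utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content",
}


def audit_url_parameters(url):
    """Separate a URL's query parameters into standard UTM keys and custom ones."""
    parts = urlsplit(url)
    pairs = parse_qsl(parts.query, keep_blank_values=True)
    standard = {k: v for k, v in pairs if k in STANDARD_UTM_KEYS}
    custom = {k: v for k, v in pairs if k not in STANDARD_UTM_KEYS}
    return {"path": parts.path, "standard_utm": standard, "custom": custom}


report = audit_url_parameters(
    "https://example.com/offers/spring"
    "?utm_source=meta&utm_campaign=launch&customer_segment=vip"
)
print(report)
```

Running this over the URLs behind your ad sets and custom audiences gives a quick list of the paths and custom parameters worth checking first if Meta flags or pauses anything.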

EU’s top court upholds $2.7 billion fine against Google

Written on September 10, 2024 at 2:42 pm, by admin

The European Union’s highest court has confirmed a record $2.7 billion (€2.4 billion) fine against Google for anti-competitive practices related to its Shopping service.

Details. The fine, initially imposed in 2017, was upheld by the EU Court of Justice today.

- Google was accused of favoring its own price-comparison service in search results, disadvantaging competitors.

- Google appealed the case to help avoid similar fines and demands for change around other types of “vertical search” results, such as maps/local, travel and other categories.

- The court ruled that Google’s conduct was “discriminatory” and fell outside the scope of fair competition.

Why we care. While Google has already made changes to comply with the 2017 decision, this ruling could lead to further adjustments in how Google shares data, algorithm transparency and more, impacting how advertisers strategize and spend their budgets.

What they’re saying:

- Google: “We are disappointed with the decision… This judgment relates to a very specific set of facts.” Google went on to note that it had made changes in 2017 to comply with the European Commission’s decision.

- BEUC (European Consumer Organization): “The Court has confirmed that Google cannot unfairly deny European consumers access to full and unbiased online information.”
