An SEO’s guide to redirects

Written on June 3, 2024 at 12:42 pm, by admin

Redirects move a webpage visitor from an old address to a new one without any further action on their part. Each webpage has a unique reference, a URL, which browsers use to request the correct content.

If a browser requests a page that has a redirect on it, it will be instructed to go to a different address. This means that the content on the original page is no longer available to request, and the browser must visit the new page to request the content there instead.

Essentially, redirects are instructions given to browsers or bots that the page they are looking for can no longer be reached and they must go to a different one instead.

Types of redirects

A website can indicate that a resource is no longer available at the previous address in several ways.

Permanent and temporary

When identifying that a redirect has been placed on a URL, search engines must decide whether to begin serving the new URL instead of the original in the search results.

Google claims it determines whether the redirect is a permanent move or just a temporary change in the location of the content.

If Google determines that the redirect is only temporary, it will likely continue to show the original URL in the search results. If it determines that the redirect is permanent, the new URL will begin to appear in the search results.

Meta refresh

A meta refresh redirect is a client-side redirect. This means that when a visitor to a site encounters a meta redirect, it will be their browser that identifies the need to go to a different page (unlike server-side redirects, where the server instructs the browser to go to a different page).

Meta refreshes can happen instantly or with a delay. A delayed meta refresh often accompanies a pop-up message like “you will be redirected in 5 seconds”.

Google claims it treats instant meta refreshes as “permanent” redirects, and delayed meta refreshes as “temporary” redirects.
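To make the instant/delayed distinction concrete, here is a rough Python sketch that uses only the standard library's `html.parser`. It finds a `<meta http-equiv="refresh">` tag and reads its delay and target. The function name `find_meta_refresh` is hypothetical. The "permanent"/"temporary" label simply mirrors Google's stated treatment above and does not guarantee how any search engine will handle a given page.

```python
from html.parser import HTMLParser


class MetaRefreshParser(HTMLParser):
    """Records the content attribute of the first <meta http-equiv="refresh"> tag."""

    def __init__(self):
        super().__init__()
        self.refresh_content = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta" or self.refresh_content is not None:
            return
        # Attribute values can be None (e.g. <meta http-equiv>), so default to ""
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("http-equiv", "").lower() == "refresh":
            self.refresh_content = attributes.get("content", "")


def find_meta_refresh(html):
    """Return (delay_seconds, target_url, likely_treatment), or None if absent.

    likely_treatment follows Google's stated behavior: an instant (0-second)
    refresh is treated like a permanent redirect, a delayed one like a temporary one.
    """
    parser = MetaRefreshParser()
    parser.feed(html)
    if parser.refresh_content is None:
        return None
    # The content attribute looks like "5; url=https://www.example.com/new-page"
    delay_part, _, url_part = parser.refresh_content.partition(";")
    try:
        delay = float(delay_part.strip() or 0)
    except ValueError:
        return None
    url_part = url_part.strip()
    if url_part.lower().startswith("url="):
        url_part = url_part[4:].strip().strip("'\"")
    treatment = "permanent" if delay == 0 else "temporary"
    return delay, url_part, treatment


page = '<head><meta http-equiv="refresh" content="0; url=https://www.example.com/new-page"></head>'
print(find_meta_refresh(page))  # (0.0, 'https://www.example.com/new-page', 'permanent')
```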

JavaScript redirect

A redirect that uses JavaScript to take users from one page to another is also an example of a client-side redirect. Google warns website managers to only use JavaScript redirects if they can’t use server-side redirects or meta-refresh redirects.

Essentially, search engines need to render the page before they can pick up a JavaScript redirect, so there may be instances where the redirect is missed.

Server-side redirects

Server-side redirects are by far the safest method for redirecting URLs.

This requires access to the website’s server configuration, which is why meta refreshes are sometimes preferred. If you can access your server’s config files, then you will have several options available.

The key differences between the server-side status codes below are whether they indicate that the redirect is permanent or temporary, and whether a POST request (used to send data to a server) may be changed to a GET request (used to request data from a server) when the redirect is followed.

For a more comprehensive overview of POST and GET and why they matter for different applications, see the W3Schools explanation.

Permanent redirects

- 301 (moved permanently): A redirect that returns an HTTP status code of "301" indicates that the resource found at the original URL has permanently moved to the new one. It will not revert to the old URL, and clients are allowed to change the request method from POST to GET when following it.

- 308 (permanent redirect): A 308 server response code is similar to 301 in that it indicates that the resource found at the original URL has permanently moved to the new one. The key difference is that a 308 does not allow the request method to change: a POST must be repeated as a POST and a GET as a GET.

Temporary redirects

- 302 (found, temporarily displaced): A 302 server code indicates that the move from one URL to another is only temporary, and the redirect is likely to be removed at some point in the near future. As with the 301 redirect, clients may change the request method from POST to GET.

- 307 (temporary redirect): A 307 server code also indicates that a redirect is temporary, but, as with a 308 redirect, it does not allow the method of the original request to change.
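To show where these status codes come from, here is a minimal sketch using Python's built-in `http.server` module. The paths in `REDIRECTS` are hypothetical. In practice you would normally set redirects in your web server or CMS configuration rather than in a script like this. `describe_status` just encodes the permanent/temporary and request-method distinctions from the lists above.

```python
from http import HTTPStatus
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical redirect map: old path -> (new location, status code)
REDIRECTS = {
    "/old-page": ("/new-page", HTTPStatus.MOVED_PERMANENTLY),  # 301
    "/holiday-hours": ("/opening-hours", HTTPStatus.FOUND),  # 302
    "/contact/submit": ("/contact/send", HTTPStatus.TEMPORARY_REDIRECT),  # 307
    "/api/v1/orders": ("/api/v2/orders", HTTPStatus.PERMANENT_REDIRECT),  # 308
}

# Which codes are permanent, and which forbid switching a POST to a GET
PERMANENT_CODES = {HTTPStatus.MOVED_PERMANENTLY, HTTPStatus.PERMANENT_REDIRECT}
METHOD_PRESERVING_CODES = {HTTPStatus.TEMPORARY_REDIRECT, HTTPStatus.PERMANENT_REDIRECT}


def describe_status(code):
    """Return (is_permanent, keeps_request_method) for a redirect status code."""
    status = HTTPStatus(code)
    return status in PERMANENT_CODES, status in METHOD_PRESERVING_CODES


class RedirectHandler(BaseHTTPRequestHandler):
    """Answers each request from REDIRECTS, or with a 404 if no rule matches."""

    def _redirect_or_404(self):
        rule = REDIRECTS.get(self.path)
        if rule is None:
            self.send_error(HTTPStatus.NOT_FOUND)
            return
        location, status = rule
        self.send_response(status)
        self.send_header("Location", location)
        self.send_header("Content-Length", "0")
        self.end_headers()

    # The same logic serves GET and POST; the status code itself tells the
    # client whether a POST may be retried as a GET.
    do_GET = _redirect_or_404
    do_POST = _redirect_or_404


def run(port=8000):
    """Start the sketch server locally (blocks until interrupted)."""
    HTTPServer(("127.0.0.1", port), RedirectHandler).serve_forever()
```

Calling `run()` starts the server on port 8000. A request for `/old-page` would then receive a 301 response with a `Location: /new-page` header.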

Why use redirects

There are several good reasons to use redirects. Just like you might send out change-of-address cards when moving house (well, back in the early 2000s anyway, I’m not sure how you kids do it now), URL redirects help ensure that important visitors don’t get lost.

Human website users

A redirect means that a human visitor trying to access content on your site is automatically taken to the most relevant page.

For example, a visitor may have bookmarked your URL in the past. They then click on the bookmark without realizing the URL has changed. Without the redirect, they may end up on a page with a 404 error code and no way of knowing how to get to the content they are after.

A redirect means you do not have to rely on visitors working out how to navigate to the new URL. Instead, they are automatically taken to the correct page. This is much better for user experience.

Search engines

Similar to human users, when a search engine bot hits a redirect, it is taken to the new URL.

Instead of landing on a 404 error page, the bot can identify the equivalent URL straight away, so it doesn’t need to work out where, if anywhere, the original URL’s content is now housed.

Demonstrating equivalence

Search engines will use redirects to determine if they should continue displaying the original URL in the search results.

If there is no redirect from an old, defunct URL to the new one, then the search engines will likely just de-index the old URL as it has no value as a 404 page. With a redirect in place, however, the search engine can directly link the new URL to the old one.

- If it is redirected permanently, the search engine will likely begin to display the new URL in the search results.

- If it is a temporary redirect, they may continue to display the old one.

Google and other search engines may pass some of the ranking signals from the old URL to the new one because of the redirect. This only happens if they believe the new page to be the equivalent of the old one in terms of value to searchers.

However, there are instances where this will not be the case, usually because the redirects have been implemented incorrectly. More on that later.

Typical SEO use cases for redirects

There are some very common reasons why a website owner might want to implement redirects to help both users and search engines find content. Importantly for SEO, redirects can allow the new page to retain some of the ranking power of the old one.

Vanity URLs

Vanity URLs are often used to help people remember URLs. For example, a TV advert might tell viewers to visit www.example-competition.com to enter their competition.

This URL might redirect to www.example.com/competitions/tv-ad-2024, which is far harder for viewers to remember and enter correctly. Using a vanity URL like this means website owners can use short URLs that are easy to remember and spell without having to set up the content outside of their current website structure.

URL rewrites

There may be instances when a URL needs to be edited once it’s already live.

For example, perhaps a product name has changed, a spelling mistake has been noticed, or a date in the URL needs to be updated. In these cases, a redirect from the old URL to the new one will ensure visitors and search bots can easily find the new address.

Moving content

Restructuring your website might necessitate redirects.

For example, you may be merging subfolders or moving content from one subdomain to another. This would be a great time to use redirects to ensure that content is easily accessible.

Moving domains

Website migrations, like moving from one domain address to another, are classic uses of redirects. These tend to be done en masse, often with every URL in the site requiring a redirect.

This can happen in internationalization, like moving a website from a .co.uk ccTLD to a .com address. It can also be necessitated by a company rebrand or the acquisition of another website and a desire to merge it with the existing one.

There may even be a need to split a website into separate domains. All of these cases would be good candidates for redirects.

HTTP to HTTPS

Migrating from HTTP to HTTPS is less common now that the internet has largely woken up to the need for security.

You still might need to switch a site from an insecure protocol (HTTP) to a secure one (HTTPS) in some situations. This will likely require redirects across the entire website.
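For site-wide moves such as a new domain or HTTP to HTTPS, each old URL usually maps to the same path and query string on the new host or protocol. The sketch below assumes the URL structure itself is unchanged, which won’t hold if the migration also restructures the site. The function name `migrated_url` is hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def migrated_url(old_url, new_host=None, new_scheme="https"):
    """Map an old URL to its post-migration equivalent, keeping path and query.

    new_host: destination domain for a domain move (None keeps the current host).
    new_scheme: protocol to switch to (HTTPS by default).
    """
    parts = urlsplit(old_url)
    host = new_host if new_host is not None else parts.netloc
    return urlunsplit((new_scheme, host, parts.path, parts.query, parts.fragment))


print(migrated_url("http://www.example.co.uk/shoes?size=9", new_host="www.example.com"))
# https://www.example.com/shoes?size=9
```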

Dig deeper: What is technical SEO?

Redirect problems to avoid

The main consideration when implementing redirects is which type to use.

Server-side redirects are generally the safest to use. However, the choice between permanent and temporary redirects depends on your specific situation.

There are more potential pitfalls to be aware of.

Loops

Redirect loops happen when two redirects directly contradict each other. For example, URL A is redirected to URL B; however, URL B has a redirect pointing to URL A. This makes it unclear which page is supposed to be visited.

Search engines won’t be able to determine which page is meant to be canonical and human visitors will not be able to access either page.

If a redirect loop is present on a site, your browser will show an error when you try to access one of the pages in the loop. Chrome, for example, reports that the site “redirected you too many times” (error code ERR_TOO_MANY_REDIRECTS).

To fix this error, update the redirects causing the loop so that each one points to the correct final page.
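You can often catch loops like this before anything goes live by checking your planned redirects. The minimal sketch below assumes redirects are held in a hypothetical `{from_url: to_url}` dictionary. It follows each rule until it either leaves the map or returns to a URL it has already visited.

```python
def find_redirect_loops(redirect_map):
    """Return each loop in a {from_url: to_url} map as a list of URLs."""
    loops = []
    urls_in_loops = set()
    for start in redirect_map:
        path = []  # URLs visited from this starting point, in order
        position = {}  # URL -> index in path, for fast revisit checks
        url = start
        while url in redirect_map and url not in position:
            position[url] = len(path)
            path.append(url)
            url = redirect_map[url]
        if url in position:  # we came back to a URL already on this path
            loop = path[position[url]:]
            if urls_in_loops.isdisjoint(loop):  # report each loop only once
                loops.append(loop)
                urls_in_loops.update(loop)
    return loops


planned = {"/a": "/b", "/b": "/a", "/c": "/d"}
print(find_redirect_loops(planned))  # [['/a', '/b']]
```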

Chains

A redirect chain is a series of pages that redirect from one to another. For example, URL A redirects to URL B, which redirects to URL C, which redirects to URL D. This isn’t too much of an issue unless the chain gets too big.

Redirect chains can start to affect load speed. John Mueller, Search Advocate at Google, has also stated in the past that:

- “The only thing I’d watch out for is that you have less than 5 hops for URLs that are frequently crawled. With multiple hops, the main effect is that it’s a bit slower for users. Search engines just follow the redirect chain (for Google: up to 5 hops in the chain per crawl attempt).”
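The short sketch below illustrates the hop limit Mueller describes. It follows a hypothetical `{from_url: to_url}` redirect map starting from one URL. The default of five hops mirrors the Google figure quoted above. Other crawlers and browsers use their own limits, so treat it as a parameter rather than a fixed rule.

```python
def trace_redirects(redirect_map, start_url, max_hops=5):
    """Follow redirects from start_url through a {from_url: to_url} map.

    Returns (chain, resolved): chain lists every URL visited in order, and
    resolved is False if the trace stopped at max_hops or on a loop.
    """
    chain = [start_url]
    url = start_url
    while url in redirect_map:
        if len(chain) - 1 >= max_hops:
            return chain, False  # gave up: too many hops
        url = redirect_map[url]
        looped = url in chain
        chain.append(url)
        if looped:
            return chain, False  # loop detected: stop instead of spinning forever
    return chain, True


print(trace_redirects({"/a": "/b", "/b": "/c", "/c": "/d"}, "/a"))  # (['/a', '/b', '/c', '/d'], True)
```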

Soft 404s

Another problem that can arise from using redirects incorrectly is that search engines may not consider the redirect to be valid for the purposes of rankings.

For example, if Page A redirects to Page B but the two are not similar in content, the search engines may not pass any of the value of Page A to Page B. This can be reported as a “soft 404” in Google Search Console.

This typically happens when a webpage (e.g., a product page) is deleted and the URL is redirected to the homepage.

Anyone clicking on the product page from search results wouldn’t find the product information they were expecting if they landed on the homepage.

The signals and value of the original page won’t necessarily be passed to the homepage if it doesn’t match the user intent of searchers.

Ignoring previous redirects

It can be a mistake not to consider previously implemented redirects. Without checking to see what redirects are already active on the site, you may run the risk of creating loops or chains.

Frequent changes

Another reason for planning redirects in advance is to limit the need to frequently change them. It is important from an internal productivity perspective, especially if you involve other teams in their implementation.

Most crucially, though, search engines may struggle to keep up with frequent changes, especially if you incorrectly signal that a redirect is permanent by using a 301 or 308 status code.

Good practice for redirects

Before any work involving redirects, it helps to do the following to prevent problems down the road.

Create a redirect map

A redirect map is a simple plan showing which URLs should redirect and their destinations. It can be as simple as a spreadsheet with a column of “from” URLs and “to” URLs.

This way, you have a clear visual of what redirects will be implemented and you can identify any issues or conflicts beforehand.
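If the spreadsheet is exported as CSV, you can check for a few basic conflicts automatically. This sketch assumes the columns are headed `from` and `to`; that is an assumption, so rename them to match your sheet. It flags rows that redirect a URL to itself, rows that give one URL two different destinations, and redirects that would create a chain.

```python
import csv
import io


def load_redirect_map(csv_text):
    """Parse a "from","to" redirect map and list any problems found.

    Returns (redirects, problems): redirects is {from_url: to_url} and
    problems is a list of human-readable warnings.
    """
    redirects = {}
    problems = []
    reader = csv.DictReader(io.StringIO(csv_text))
    for row_number, row in enumerate(reader, start=2):  # row 1 is the header
        source, target = row["from"].strip(), row["to"].strip()
        if source == target:
            problems.append(f"row {row_number}: {source} redirects to itself")
        elif source in redirects and redirects[source] != target:
            problems.append(
                f"row {row_number}: {source} already redirects to {redirects[source]}"
            )
        else:
            redirects[source] = target
    # A destination that is also a source would create a redirect chain
    for source, target in redirects.items():
        if target in redirects:
            problems.append(f"{source} -> {target} is part of a chain")
    return redirects, problems
```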

Dig deeper: How to speed up site migrations with AI-powered redirect mapping

Assess existing redirects

If you keep a running redirect map, look back at previous redirects to see if your new ones will impact them at all.

For example, would adding new redirects to a page that is already redirected from another create a redirect chain or loop? This will also help you see if you have moved a page to a new URL several times over a short period of time.
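One way to avoid creating chains when adding new redirects is to point every rule, old and new, straight at its final destination. The sketch below assumes both the existing and the new redirects are available as hypothetical `{from_url: to_url}` dictionaries. It raises an error rather than produce a map that contains a loop.

```python
def merge_redirects(existing, new):
    """Combine two {from_url: to_url} maps, pointing every source at its final URL.

    New rules override existing rules for the same source. Raises ValueError
    if the combined rules contain a loop.
    """
    combined = {**existing, **new}

    def final_destination(url):
        visited = {url}
        while url in combined:
            url = combined[url]
            if url in visited:
                raise ValueError(f"redirect loop involving {url}")
            visited.add(url)
        return url

    return {source: final_destination(source) for source in combined}


existing = {"/2019-sale": "/sale"}
new = {"/sale": "/offers"}
print(merge_redirects(existing, new))  # {'/2019-sale': '/offers', '/sale': '/offers'}
```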

If you do not have a map of previous redirects, you may be able to pull redirects from your server configuration files (or at least ask someone with access to it if they can!)

If you do not have access to the server configuration files either, or your redirects are implemented client-side, you can try the following techniques:

- Run your site through a crawling tool: Crawling tools mimic search bots in that they follow links and other signals on a website to discover all its pages. Many will also report back on the status code or if a meta refresh is detected on the URLs they find. Screaming Frog has a guide for detecting redirects using its tool.

- Use a plugin: There are many browser plugins that will show if a page you are visiting has a redirect. They don’t tend to let you identify site-wide redirects, but they can be handy for spot-checking a page.

- Use Chrome Developer Tools: Another way, which just requires a Chrome browser, is to visit the page you are checking and use Chrome Developer Tools to identify if there is a redirect on it. You simply go to the Network panel to see what the response codes are for each element of the page. For more details, have a look at this guide by Aleyda Solis.
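If you are comfortable with a little scripting, Python’s standard `http.client` module can do a similar spot check. Unlike a browser, it does not follow redirects, so it returns the first response’s status code and `Location` header. It only sees server-side redirects. Meta refreshes and JavaScript redirects live in the page content and need a crawler or a browser. The function name `check_redirect` is hypothetical.

```python
import http.client
from urllib.parse import urlsplit


def check_redirect(url, timeout=10):
    """Request url once, without following redirects.

    Returns (status_code, location_header_or_None).
    """
    parts = urlsplit(url)
    connection_class = (
        http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    )
    # netloc may include a port, e.g. "example.com:8080"; http.client accepts that
    connection = connection_class(parts.netloc, timeout=timeout)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    try:
        connection.request("GET", path)
        response = connection.getresponse()
        return response.status, response.getheader("Location")
    finally:
        connection.close()
```

For example, if `http://example.com/old-page` had a permanent redirect in place, `check_redirect("http://example.com/old-page")` would return a 301 status together with the new URL from the `Location` header.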

Google Search Console

The Page indexing report (previously called the Coverage report) in Google Search Console lists issues found by Google that may prevent indexing.

Here, you may see examples of pages that have redirect errors. These are explained in further detail in Google’s Search Console Help section on Indexing reasons.

Alternatives to redirects

There are some occasions when redirects might not be possible at all. This tends to be when there is a limit to what can be accomplished through a CMS or with internal resources.

In these instances, alternatives could be considered. However, they may not achieve exactly what you would want from a redirect.

Canonical tags

To help rank a new page over the old one, you might need to use a canonical tag if redirects are not possible.

For example, you may have two pages with identical content: Page A and Page B. Page B is new, and you want it to be what users find when they search Google for relevant terms. You do not want Page A to be served as a search result anymore.

Typically, you would just add a redirect from Page A to Page B so users could not access Page A from the SERPs. If you cannot add redirects, you can use a canonical tag to indicate to search engines that Page B should be served instead of Page A.

If URL A has been replaced by URL B and they both have identical content, Google may trust your canonical tag if all other signals also point to Page B being the new canonical version of the two.
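The canonical tag itself is a `<link rel="canonical" href="...">` element in the `<head>` of Page A, pointing at Page B’s URL. To confirm which canonical a page actually declares, a small standard-library sketch like this one can help. The URLs and the `canonical_url` function name are hypothetical.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        # rel can hold several space-separated values
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attributes.get("href")


def canonical_url(html):
    """Return the canonical URL declared in html, or None if there is none."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical


page_a = '<head><link rel="canonical" href="https://www.example.com/page-b" /></head>'
print(canonical_url(page_a))  # https://www.example.com/page-b
```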

Crypto redirects

A crypto redirect isn’t really a redirect at all. It’s actually a link on the page you would want to have redirected, directing users to the new page. Essentially it acts as a signpost.

For example, you could add a call to action such as “This page has moved. Find it at its new location,” with the text linking to the content’s new URL.

Crypto redirects require users to carry out an action and will not work as a redirect for search engines, but if you are really struggling to implement a redirect and a change of address for content has occurred, this may be your only option to link one page to the other.

Conclusion

Redirects are useful tools for informing users and search engines that content has moved location. They help search engines understand the relevance of a new page to the old one while removing the requirement for users to locate the new content.

- Used properly, redirects can aid usability and search rankings.

- Used incorrectly, they can lead to problems with parsing, indexing and serving your website’s content.

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing

Category seo news | Tags:


How to extract GBP review insights to boost local SEO visibility

Written on June 3, 2024 at 12:42 pm, by admin

Since the inception of local business listings, companies have explored various methods to acquire more customer reviews. These reviews provide valuable insights into consumer sentiment, common pain points, and areas for improvement.

While many businesses use paid tools to analyze review data, there are cost-effective methods to extract similar insights, particularly for smaller businesses with limited budgets.

This article:

- Explores how to extract entities from your Google Business Profile (GBP) listings and competitor listings using Pleper’s API service.

- Examines the impact of entities mentioned in reviews on local search visibility, specifically in the Google local 3-pack results.

- Covers ethical techniques to promote revenue-driving entities through review solicitation efforts while adhering to Google’s guidelines.

Analyzing GBP reviews for business insights

Companies like Yext, Reputation, and Birdeye can analyze top entities mentioned in reviews and offer insight into the sentiment around each of these. However, they can also command quite a large price tag.

Investing in these tools is essential for businesses managing numerous listings across multiple platforms. However, extracting insights from competitor listings remains costly. Monitoring competitor listings for review insights is often seen as an unjustifiable expense.

Smaller businesses can manage listings cost-effectively by assigning an internal marketing employee, but extracting valuable insights from reviews without using tools is more challenging.

Dig deeper: How to turn your Google Business Profile into a revenue-generating channel

How to extract business insights from GBP listings

Luckily, there is a much more cost-effective method to collect entities from GBP reviews using Pleper’s API service.

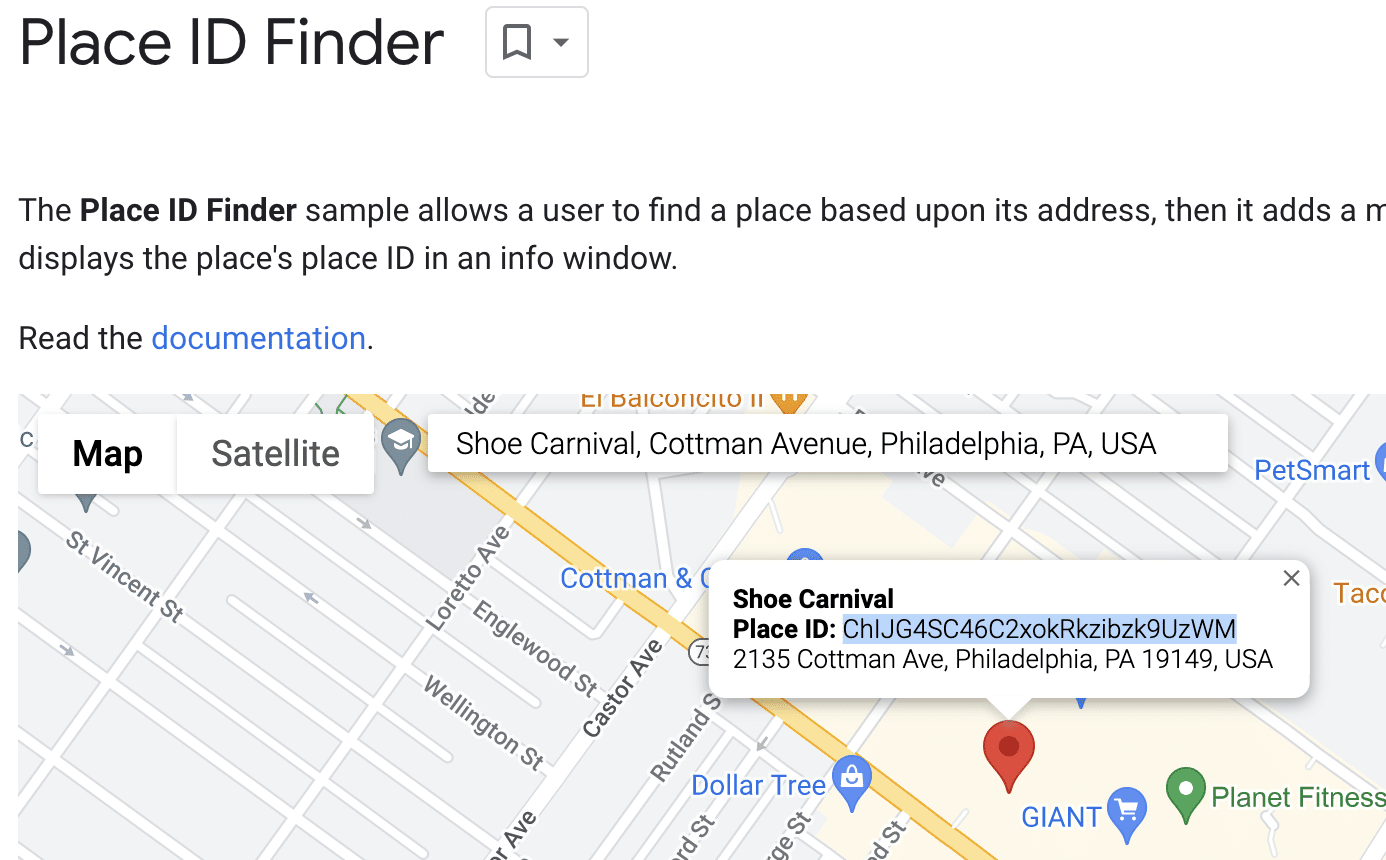

Collect place IDs for listing

For small batches, using Google’s Place ID demo works well for collecting Place IDs for your business’ listings and local competitor listings.

I’ve found that the following formula works well for searching for these listings: {business name}, {business address}.

For larger batches, I recommend using Google’s Places API (for example, its Find Place request). Using the above formula as the search query, Place IDs can be collected quickly and efficiently.
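As a rough sketch of the larger-batch approach, the code below builds the “{business name}, {business address}” query and a request URL for the Find Place request of Google’s Places API. It assumes the legacy Find Place endpoint shown here and your own API key (`YOUR_API_KEY` is a placeholder). Google also offers a newer version of the Places API, so check its current documentation before relying on this endpoint.

```python
from urllib.parse import urlencode

# Legacy Find Place endpoint of Google's Places API (verify against current docs)
FIND_PLACE_ENDPOINT = "https://maps.googleapis.com/maps/api/place/findplacefromtext/json"


def place_search_query(business_name, business_address):
    """Build the "{business name}, {business address}" search string."""
    return f"{business_name.strip()}, {business_address.strip()}"


def find_place_url(business_name, business_address, api_key):
    """Build a Find Place request URL that asks only for the place_id field."""
    params = {
        "input": place_search_query(business_name, business_address),
        "inputtype": "textquery",
        "fields": "place_id",
        "key": api_key,
    }
    return f"{FIND_PLACE_ENDPOINT}?{urlencode(params)}"


print(find_place_url("Example Barbers", "1 Main St, Springfield", "YOUR_API_KEY"))
```

You can fetch that URL with, for example, `urllib.request.urlopen`. The response should be JSON with a `candidates` list, and each candidate should carry a `place_id`.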

Use Pleper’s API to collect information on each listing

After each listing’s Place ID has been collected, use Pleper’s Scrape API to retrieve the listing information. Once the data has been retrieved, use a parsing script to extract review topics and assign a value to each topic based on sentiment.

Here is an example script that will do just that:

import pandas as pd

def extract_review_topics(data):

topics_list = []

sentiment_map = {

'positive': 1,

'neutral': 0,

'negative': -1

}

for entry in data['results']['google/by-profile/information']:

if 'results' in entry and 'review_topics' in entry['results']:

for topic in entry['results']['review_topics']:

topic_details = {

'Business Name': entry['results'].get('name', 'N/A'),

'Address': entry['results'].get('address', 'N/A'),

'Place ID': entry['payload']['profile_url'],

'Topic': topic.get('topic', 'N/A'),

'Count': topic.get('count', 0),

'Sentiment': sentiment_map.get(topic.get('sentiment', 'neutral'), 0)

}

topics_list.append(topic_details)

return topics_list

topics_data = extract_review_topics(batch_result)

df = pd.DataFrame(topics_data)

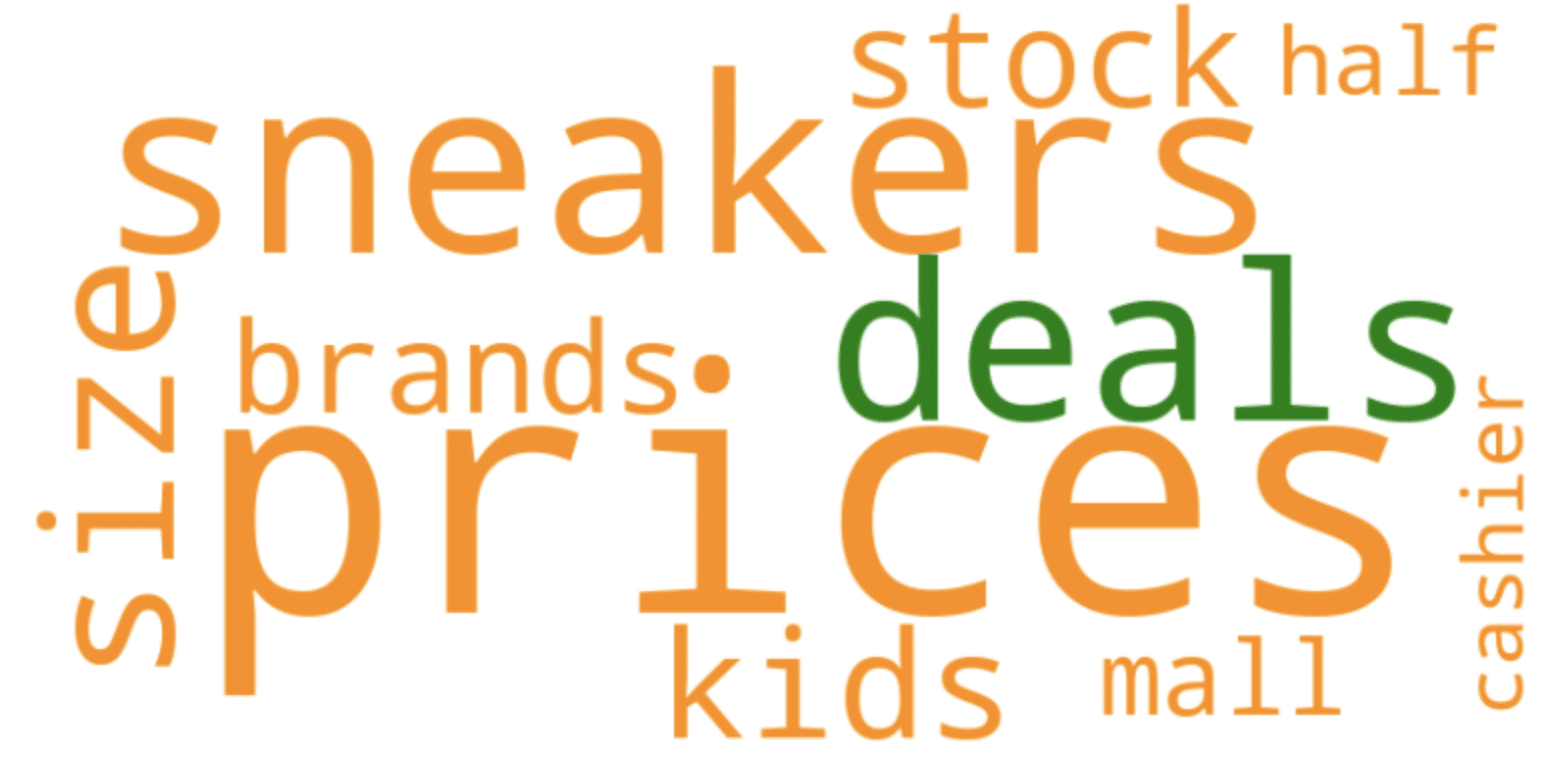

print(df)Now that the data has been properly retrieved from Pleper and parsed into a pandas dataframe, matplotlib can be used to create a word cloud like the one below:

Word clouds can be created for individual listings or aggregated data on all of a brand’s listings. Comparing word clouds from your own business listings to those of competitors can lead to truly valuable insights.
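Word clouds give a visual comparison, and the same comparison can also be done numerically. This sketch assumes rows shaped like the output of `extract_review_topics` above, with the `Business Name`, `Topic`, `Count` and `Sentiment` keys. It totals mentions and mention-weighted sentiment per topic, then lists the topics a competitor is mentioned for that your listing is not. The function names and sample rows are hypothetical. The mention totals could also be fed to a word cloud library, such as the third-party `wordcloud` package.

```python
from collections import defaultdict


def topic_frequencies(topics_data, business_name=None):
    """Total mentions and mention-weighted sentiment per topic.

    topics_data: rows with "Business Name", "Topic", "Count" and "Sentiment" keys.
    business_name: limit to one listing's rows (None aggregates all rows).
    Returns {topic: (total_mentions, average_sentiment)}.
    """
    totals = defaultdict(int)
    weighted = defaultdict(int)
    for row in topics_data:
        if business_name is not None and row["Business Name"] != business_name:
            continue
        totals[row["Topic"]] += row["Count"]
        weighted[row["Topic"]] += row["Count"] * row["Sentiment"]
    return {
        topic: (count, weighted[topic] / count if count else 0.0)
        for topic, count in totals.items()
    }


def topic_gaps(own, competitor):
    """Topics mentioned for a competitor but not for you, most-mentioned first."""
    missing = set(competitor) - set(own)
    return sorted(missing, key=lambda topic: competitor[topic][0], reverse=True)


# Illustrative rows only, not real review data
rows = [
    {"Business Name": "Our Barber", "Topic": "haircut", "Count": 10, "Sentiment": 1},
    {"Business Name": "Our Barber", "Topic": "price", "Count": 4, "Sentiment": -1},
    {"Business Name": "Rival Barber", "Topic": "haircut", "Count": 6, "Sentiment": 1},
    {"Business Name": "Rival Barber", "Topic": "hot towel", "Count": 8, "Sentiment": 1},
]
ours = topic_frequencies(rows, "Our Barber")
theirs = topic_frequencies(rows, "Rival Barber")
print(topic_gaps(ours, theirs))  # ['hot towel']
```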

The impact of entities on local 3-pack results

Entities have been highlighted in reviews for some time now; however, I haven’t seen many SEOs attempt to promote revenue-generating entities in review solicitation.

When possible, entities mentioned in a query are highlighted within the local 3-pack via the listings’ reviews. Typically, this occurs on long-tail queries, where more context is provided to Google on what the searcher is looking for.

To better understand the impact of entities within reviews, let’s analyze the results of two queries:

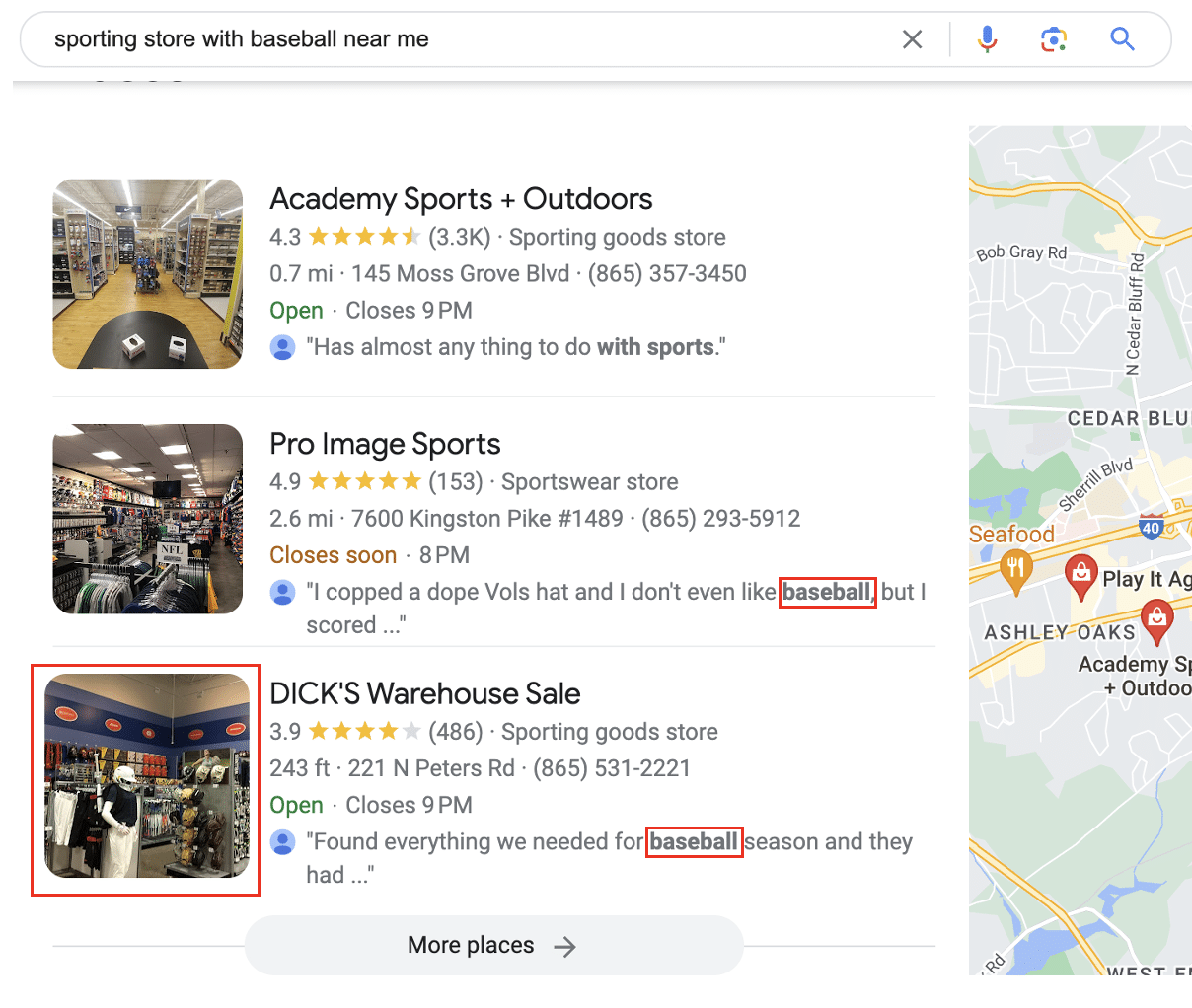

Sporting stores near me

Sporting store with baseball near me

Here are a few takeaways from comparing these two queries:

- Google dynamically changed the shown image of the DICK’S Warehouse Sale listing to showcase the baseball section.

- Google calls out review mentions about baseball by bolding the mention. As a searcher, I found that this inherently made those listings more attractive, just as their “In stock” feature would.

- The SERP dropped Going, Going, Gone! from the local 3-pack even though I was standing next to it. In my opinion, this has merit as Going, Going, Gone! has a lower stock of equipment than the typical DICK’S store and primarily leans towards apparel.

- Pro Image Sports is a clothing store that carries sports apparel. It is also 2.5 miles farther away than the next closest sports equipment store, Play It Again Sports, which carries baseball equipment.

When comparing these results, I couldn’t help but believe that the mention of baseball in Pro Image Sports’ reviews increased its visibility within the 3-pack, so I investigated further.

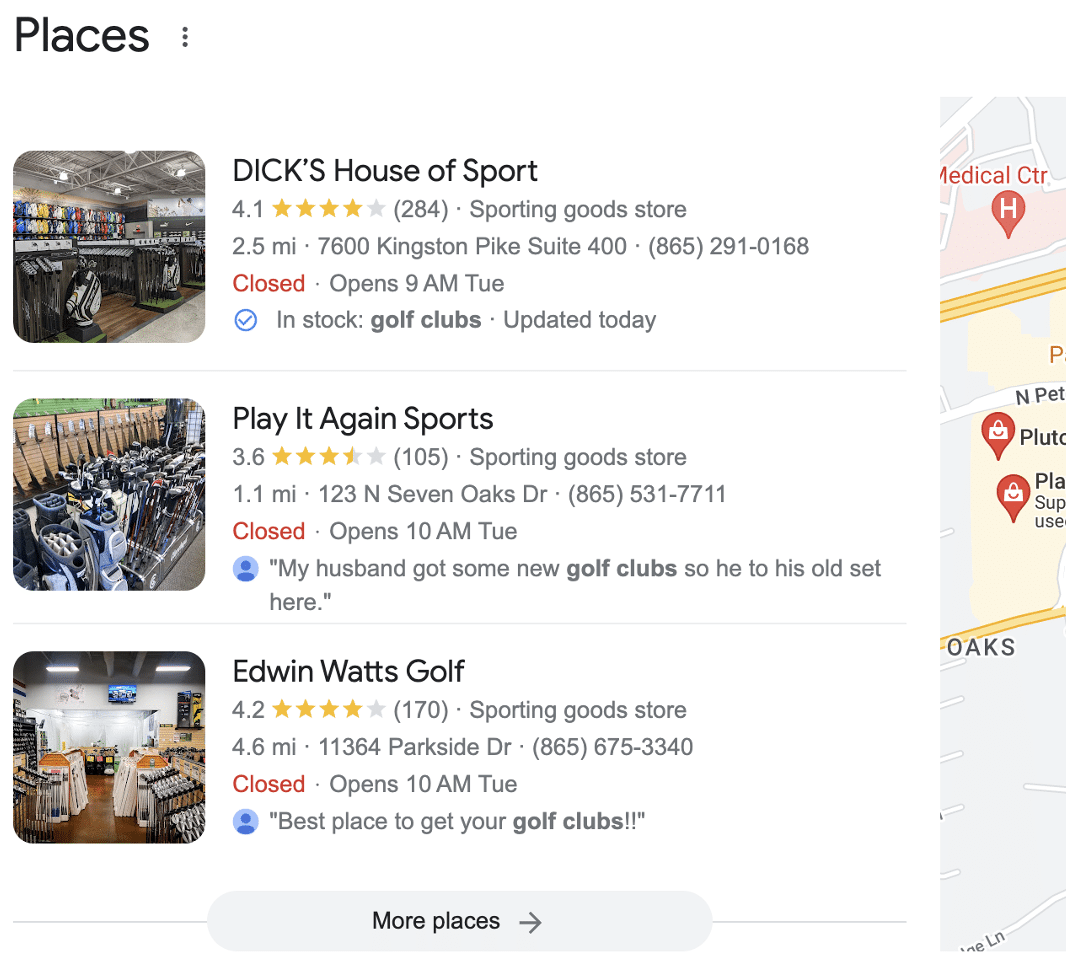

Looking at the review topics provided by Google for Play It Again Sports, I noticed a high number of reviews mentioning “golfing clubs,” so I changed the query to “Sporting Store with Golf Clubs Near Me.”

When I targeted a topic mentioned more frequently within Play It Again Sports’ reviews, the store appeared within the local 3-pack.

This small experiment suggests that review topics (entities) play a role in local 3-pack visibility, and a larger one than I once believed.

Dig deeper: How to establish your brand entity for SEO: A 5-step guide

Promoting entities in review solicitation

Google’s guidelines state that review solicitation should be honest, unbiased and without incentives. Businesses should also avoid review gating.

You can ask for reviews on specific topics and remind customers of the products or services they used. Implementation will vary based on each business’s approach to soliciting reviews.

Add a statement like “Tell us about your experience purchasing baseball equipment from us” above the review link in your solicitation email.

It’s important not to exaggerate in this message to avoid biased customer reviews. For example, avoid saying, “Tell us about your positive experience when purchasing high-quality baseball equipment from us.”

While this statement does not inherently create bias as it does not offer an incentive to leave a positive review, it can be considered manipulative, which does not fully align with Google’s guidelines for reviews to be honest and unbiased.

Dig deeper: Unleashing the potential of Google reviews for local SEO

Applying review insights for business results

After learning how to analyze listings (your own and competitors’), how review topics (entities) influence local 3-pack visibility and how to increase the number of entities within reviews, it’s time to put it all together to drive business results.

Sharing insights from your business listing’s reviews with the appropriate internal stakeholders is key to helping inform strategic and operational changes. Competitor insights can be a driving force for these changes.

For example, if a competitor barber shop uses hot towels in each haircut and your business does not, this data may help make the case that your business should be doing the same.

Next, work internally to leverage business intelligence (e.g., customer purchase data) within review solicitation efforts to promote entities within reviews. Implementing these efforts will vary depending on a business’s technology stack and ability to integrate data.

A more simplistic approach may be necessary for businesses that lack the ability to integrate data. In these situations, I recommend appending a generic statement within the solicitation communication to identify a specific entity.

An example may be a mechanic shop that appends the following statement to increase the mentions of “mechanics.”

- “Tell us about your experience at our shop and the quality of our mechanics.”
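Where purchase data is available, choosing the prompt can be as simple as a lookup, with a generic statement as the fallback. Everything in this sketch is hypothetical: the categories, the wording, and the list of leading words. The leading-word check is a crude heuristic for spotting phrasing like the “positive experience … high-quality” example above. It does not test compliance with Google’s guidelines.

```python
# Hypothetical purchase categories mapped to neutral, entity-specific prompts
TOPIC_PROMPTS = {
    "baseball": "Tell us about your experience purchasing baseball equipment from us.",
    "golf": "Tell us about your experience purchasing golf clubs from us.",
}
GENERIC_PROMPT = "Tell us about your experience at our store."

# Crude list of words that push reviewers toward a positive answer
LEADING_WORDS = ("positive", "great", "high-quality", "amazing", "best")


def review_prompt(purchased_categories):
    """Return the prompt for the first recognized category, else the generic one."""
    for category in purchased_categories:
        prompt = TOPIC_PROMPTS.get(category.strip().lower())
        if prompt:
            return prompt
    return GENERIC_PROMPT


def is_leading(prompt):
    """Flag prompts that contain any of the leading words."""
    text = prompt.lower()
    return any(word in text for word in LEADING_WORDS)


print(review_prompt(["Socks", "Baseball"]))
# Tell us about your experience purchasing baseball equipment from us.
```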

Regardless of the internal approach, reviews are crucial for local SEO and shaping consumer perceptions. As an SEO, you can help your business understand its strengths and weaknesses while working to improve local search visibility.


Brave launches Search Ads

Written on June 2, 2024 at 9:42 am, by admin

Brave Search now offers Search Ads on a managed service basis.

Brave Search Ads. Here’s what Brave announced:

- Ads are sold on a cost-per-click basis.

- Rates are negotiated on a fixed basis before paid campaigns launch.

- Eligible brands can get up to 14 days of complimentary advertising.

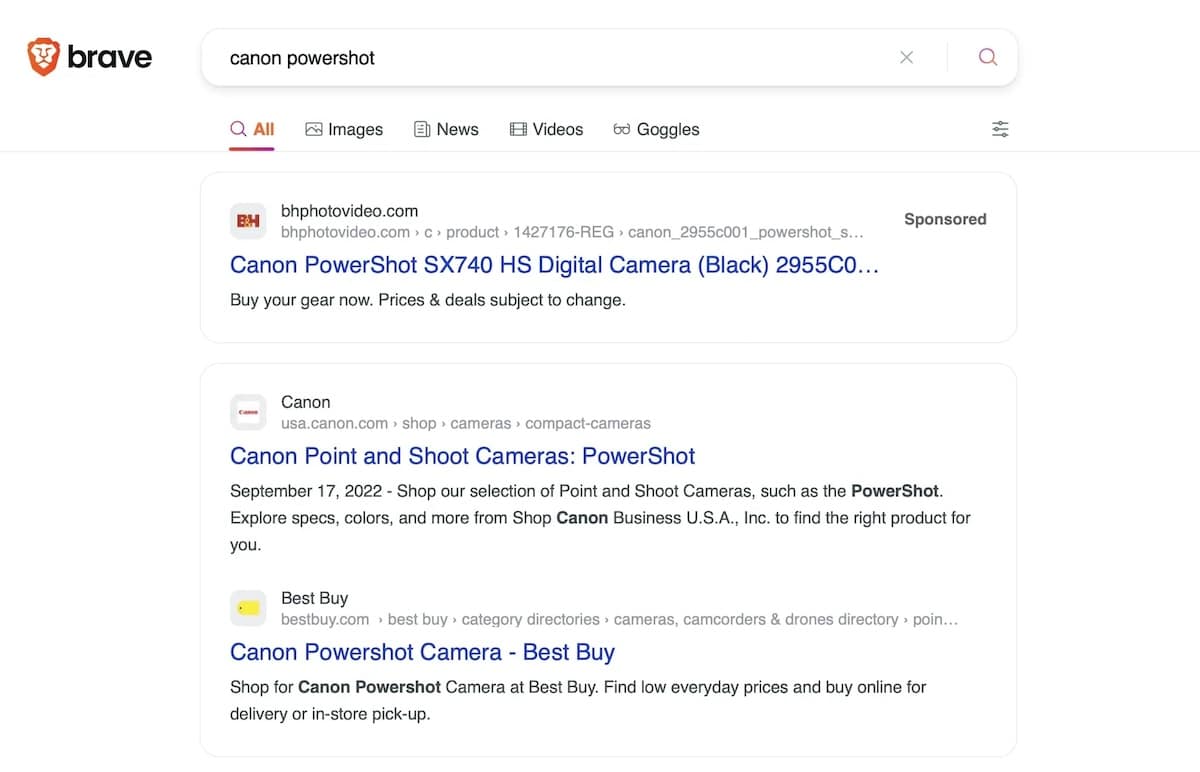

What Brave Search Ads look like. Here’s a screenshot:

Available now. Brave Search Ads are available in the U.S., Canada, the UK, France and Germany. Brands must meet certain eligibility requirements – Brave called this a “minimum threshold of eligible ad impressions in their desired region.”

Testing, testing. The launch follows 18 months of testing, Brave said. Amazon Ads Sponsored Products, Dell, Fubo, Insurify, Shutterstock and Thumbtack were among the brands that tested Brave Search Ads.

What Brave is saying. The company is pitching this as a way that brands can augment their paid media strategy and reach “highly qualified—but otherwise unreachable—audiences”:

- “As of April 2024, a third of the top search advertisers in the US by media spend are either currently testing or have moved to paid Brave Search Ads campaigns. Since the beginning of the year, 89% of customers who started Brave Search Ads campaigns have continued to advertise in consecutive months.”

About Brave Search. Brave has an independent search index (it doesn’t rely on Google or Microsoft to serve its search results). It now has 65 million monthly active users and processes over 10 billion annual searches. For context, Google processes roughly that many searches per day.

The announcement. Brave launches Search Ads in key markets, after successful test phase with leading brands.


LinkedIn shrinks link previews for organic posts

Written on June 1, 2024 at 6:41 am, by admin

LinkedIn has reduced the size of link preview images for organic posts, while maintaining larger preview images for sponsored content.

Why it matters. The change aims to encourage more native posting on LinkedIn and could prompt more users and brands to pay for sponsored posts to retain larger link previews.

Why we care. The change incentivizes advertisers to pay to promote posts to get more visibility with larger link previews.

Driving the news. As part of this “feed simplification” update announced months ago, LinkedIn is making preview images significantly smaller for organic posts with third-party links.

- Sponsored posts will still display larger preview images from 360 x 640 pixels up to 2430 x 4320 pixels.

- The smaller organic previews are meant to “help members stay on LinkedIn and engage with unique commentary.”

What they’re saying. “When an organic post becomes a Sponsored Content ad, the small thumbnail preview image shown in the organic post is converted to an image with a minimum of 360 x 640 pixels and a maximum of 2430 x 4320 pixels,” per LinkedIn.

The other side. Some criticize the move as penalizing professionals who don’t have time for constant original posting and rely on sharing third-party content.

The bottom line. Brands and individuals looking for maximum engagement from shared links on LinkedIn may increasingly need to pay for sponsored posts.


Google to honor new privacy laws and user opt-outs

Written on June 1, 2024 at 6:41 am, by admin

Google is rolling out changes to comply with new state privacy laws and user opt-out preferences across its ads and analytics products in 2024.

The big picture. Google is adjusting its practices as Florida, Texas, Oregon, Montana and Colorado enact new data privacy regulations this year.

Key updates include:

Restricted Data Processing (RDP) for new state laws. Under RDP, Google limits how it uses data so that it shows only non-personalized ads:

- Google will update its ads terms to enable RDP for these states as their laws take effect.

- This allows Google to act as a data processor rather than a controller for partner data while RDP is enabled.

- No additional action is required if you’ve already accepted Google’s online data protection terms.

Honoring global privacy control opt-outs:

- To comply with Colorado’s Universal Opt-Out Mechanism, Google will respect Global Privacy Control signals sent directly from users.

- This will disable personalized ad targeting based on things like Customer Match and remarketing lists for opted-out users.
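For context, participating browsers send Global Privacy Control as the HTTP request header `Sec-GPC: 1`. The sketch below shows how a site’s own server might detect that header. It does not describe how Google processes the signal, and the `ad_mode` labels are hypothetical.

```python
def gpc_opt_out(headers):
    """Return True if request headers carry a Global Privacy Control signal.

    headers: any mapping of header names to values (names compared case-insensitively).
    """
    return any(
        name.lower() == "sec-gpc" and value.strip() == "1"
        for name, value in headers.items()
    )


def ad_mode(headers):
    """Hypothetical policy: serve only non-personalized ads to opted-out users."""
    return "non-personalized" if gpc_opt_out(headers) else "personalized"


print(ad_mode({"User-Agent": "ExampleBrowser", "Sec-GPC": "1"}))  # non-personalized
```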

Why we care. These changes should help keep advertisers on the right side of the law when it comes to privacy. However, they could reduce ad targeting efficiency and personalization capabilities as more users opt out.

What they’re saying. Here’s what Google told partners in an email:

- “If you’ve already agreed to the online data protection terms, customers [i.e. advertisers] should note that Google can receive Global Privacy Control signals directly from users and will engage RDP mode on their behalf.”

Bottom line. Google is taking steps to help partners comply with tightening data privacy rules, even if it means limiting its own ad targeting.


Google explains how it is improving its AI Overviews

Written on May 31, 2024 at 3:40 am, by admin

Google responded to the bad press surrounding its recently rolled out AI Overviews in a new blog post by its new head of Search, Liz Reid. Google explained how AI Overviews work, where the weird AI Overviews came from, and the improvements it has made and will continue to make.

However, Google said searchers “have higher satisfaction with their search results, and they’re asking longer, more complex questions that they know Google can now help with,” and basically, these AI Overviews are not going anywhere.

As good as featured snippets. Google said AI Overviews are “highly effective” and that, based on its internal testing, the “accuracy rate for AI Overviews is on par with featured snippets.” Google also pointed out several times that featured snippets use AI.

No hallucinations. AI Overviews generally don’t hallucinate, Google’s Liz Reid wrote. They don’t “make things up in the ways that other LLM products might,” she added. When AI Overviews do go wrong, she wrote, it is typically because of “misinterpreting queries, misinterpreting a nuance of language on the web, or not having a lot of great information available.”

Why the “odd results.” Google said it tested AI Overviews “extensively” before launch and was comfortable releasing them. But Google said that people tried to get AI Overviews to return odd results. “We’ve also seen nonsensical new searches, seemingly aimed at producing erroneous results,” Google wrote.

Google also wrote that people faked many of the examples by manipulating screenshots to show fake AI responses. “Those AI Overviews never appeared,” Google said.

Some odd examples did come up, and Google will make improvements for those types of cases. Rather than manually adjusting individual AI Overviews, Google will improve its models so they work across many more queries. “We don’t simply ‘fix’ queries one by one, but we work on updates that can help broad sets of queries, including new ones that we haven’t seen yet,” Google wrote.

Google spoke about “data voids,” which we have covered numerous times here. The example query “How many rocks should I eat?” was one that almost no one had searched for before, and little good content existed on it. Google explained, “However, in this case, there is satirical content on this topic … that also happened to be republished on a geological software provider’s website. So when someone put that question into Search, an AI Overview appeared that faithfully linked to one of the only websites that tackled the question.”

Improvements to AI Overviews. Google shared some of the improvements it has made to AI Overviews, explaining it will continue to make improvements going forward. Here is what Google said it has done so far:

- Built better detection mechanisms for nonsensical queries that shouldn’t show an AI Overview, and limited the inclusion of satire and humor content.

- Updated its systems to limit the use of user-generated content in responses that could offer misleading advice.

- Added triggering restrictions for queries where AI Overviews were not proving to be as helpful.

- For topics like news and health, Google said it already has strong guardrails in place. For example, Google said it aims not to show AI Overviews for hard news topics, where freshness and factuality are important. For health, Google said it launched additional triggering refinements to enhance its quality protections.

Liz Reid concluded: “We’ll keep improving when and how we show AI Overviews and strengthening our protections, including for edge cases, and we’re very grateful for the ongoing feedback.”

Why we care. It sounds like AI Overviews are not going anywhere and Google will continue to show them to searchers and roll them out to more countries and users in the future. You can expect them to get better over time, as Google continues to hear feedback and improve its systems.

Until then, I am sure we will find more examples of inaccurate and sometimes humorous AI Overviews, similar to what we saw when featured snippets initially launched.

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing

Category seo news | Tags:


How SEO moves forward with the Google Content Warehouse API leak

Written on May 31, 2024 at 3:40 am, by admin

In case you missed it, 2,569 internal documents related to internal services at Google leaked.

A search marketer named Erfan Azimi brought them to Rand Fishkin’s attention, and we analyzed them.

Pandemonium ensued.

As you might imagine, it’s been a crazy 48 hours for us all and I have completely failed at being on vacation.

Naturally, some portion of the SEO community has quickly fallen into the standard fear, uncertainty and doubt spiral.

Reconciling new information can be difficult and our cognitive biases can stand in the way.

It’s valuable to discuss this further and offer clarification so we can use what we’ve learned more productively.

After all, these documents are the clearest look we have had to date at how Google actually considers the features of pages.

In this article, I want to be more explicit, answer common questions, critiques and concerns, and highlight additional actionable findings.

Finally, I want to give you a glimpse into how we will be using this information to do cutting-edge work for our clients. The hope is that we can collectively come up with the best ways to update our best practices based on what we’ve learned.

Reactions to the leak: My thoughts on common criticisms

Let’s start by addressing what people have been saying in response to our findings. I’m not a subtweeter, so this is to all of y’all and I say this with love.

‘We already knew all that’

No, in large part, you did not.

Generally speaking, the SEO community has operated based on a series of best practices derived from research-minded people from the late 1990s and early 2000s.

For instance, we’ve held the page title in such high regard for so long because early search engines were not full-text and only indexed the page titles.

These practices have been reluctantly updated based on information from Google, SEO software companies, and insights from the community. There were numerous gaps that you filled with your own speculation and anecdotal evidence from your experiences.

If you’re more advanced, you capitalized on temporary edge cases and exploits, but you never knew exactly the depth of what Google considers when it computes its rankings.

You also did not know most of its named systems, so you would not have been able to interpret much of what you see in these documents. So, you searched these documents for the things that you do understand and you concluded that you know everything here.

That is the very definition of confirmation bias.

In reality, there are many features in these documents that none of us knew.

Just like the 2006 AOL search data leak and the Yandex leak, there will be value captured from these documents for years to come. Most importantly, you also just got actual confirmation that Google uses features that you might have suspected. There is value in that if only to act as proof when you are trying to get something implemented with your clients.

Finally, we now have a better sense of internal terminology. One way Google spokespeople evade explanation is through language ambiguity. We are now better armed to ask the right questions and stop living on the abstraction layer.

‘We should just focus on customers and not the leak’

Sure. As an early and continued proponent of market segmentation in SEO, I obviously think we should be focusing on our customers.

Yet we can’t deny that we live in a reality where most of the web has conformed to Google to drive traffic.

We operate in a channel that is considered a black box. Our customers ask us questions that we often respond to with “it depends.”

I’m of the mindset that there is value in having an atomic understanding of what we’re working with so we can explain what it depends on. That helps with building trust and getting buy-in to execute on the work that we do.

Mastering our channel is in service of our focus on our customers.

‘The leak isn’t real’

Skepticism in SEO is healthy. Ultimately, you can decide to believe whatever you want, but here’s the reality of the situation:

- Erfan had his Xoogler source authenticate the documentation.

- Rand worked through his own authentication process.

- I also authenticated the documentation separately through my own network and backchannel resources.

I can say with absolute confidence that the leak is real and has been definitively verified in several ways including through insights from people with deeper access to Google’s systems.

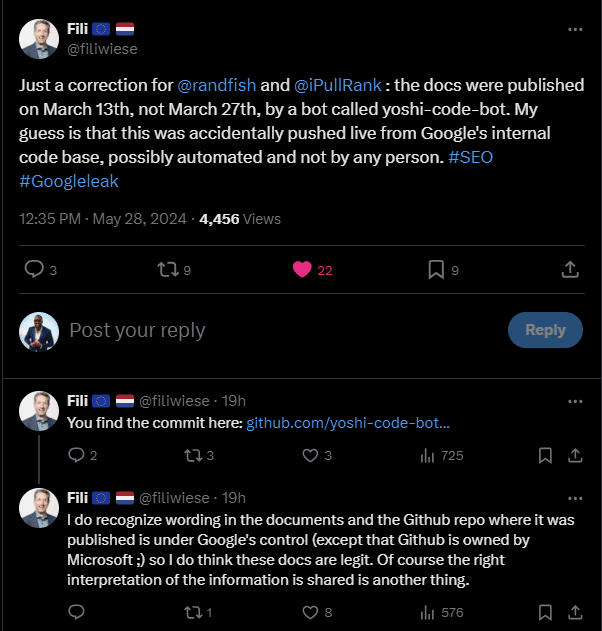

In addition to my own sources, Xoogler Fili Wiese offered his insight on X. Note that I’ve included his call out even though he vaguely sprinkled some doubt on my interpretations without offering any other information. But that’s a Xoogler for you, amiright?

Finally, the documentation references specific internal ranking systems that only Googlers know about. I touched on some of those systems and cross-referenced their functions with detail from a Google engineer’s resume.

Oh, and Google just verified it in a statement as I was putting my final edits on this.

“This is a Nothingburger”

No doubt.

I’ll see you on page 2 of the SERPs while I’m having mine medium with cheese, mayo, ketchup and mustard.

“It doesn’t say CTR so it’s not being used”

So, let me get this straight, you think a marvel of modern technology that computes an array of data points across thousands of computers to generate and display results from tens of billions of pages in a quarter of a second that stores both clicks and impressions as features is incapable of performing basic division on the fly?

… OK.

“Be careful with drawing conclusions from this information”

I agree with this. We all have the potential to be wrong in our interpretation here due to the caveats that I highlighted.

To that end, we should take measured approaches in developing and testing hypotheses based on this data.

The conclusions I’ve drawn are based on my research into Google and precedents in Information Retrieval, but like I said it is entirely possible that my conclusions are not absolutely correct.

“The leak is to stop us from talking about AI Overviews”

No.

The misconfigured documentation deployment happened in March. There’s some evidence that this has been happening in other languages (sans comments) for two years.

The documents were discovered in May. Had someone discovered it sooner, it would have been shared sooner.

The timing of AI Overviews has nothing to do with it. Cut it out.

“We don’t know how old it is”

This is immaterial. Based on dates in the files, we know it’s at least newer than August 2023.

We know that commits to the repository happen regularly, presumably as the code is updated. We know that much of the documentation has not changed in subsequent deployments.

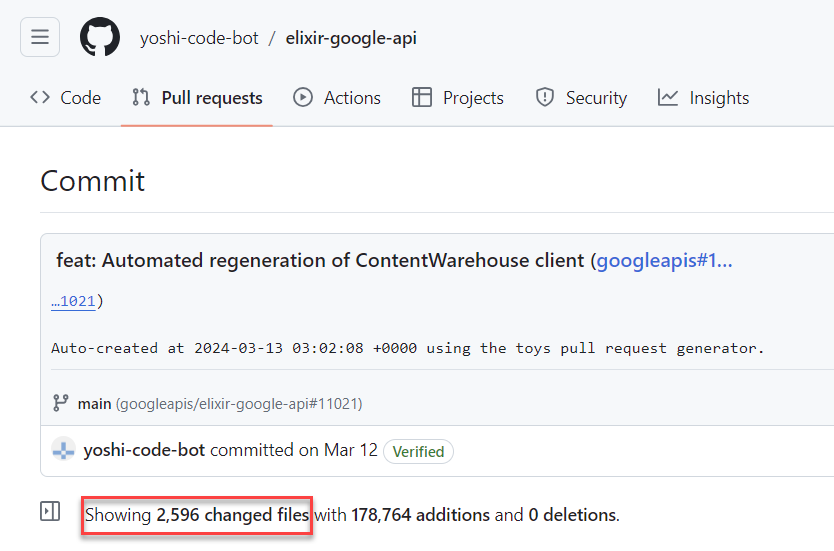

We also know that when this code was deployed, it featured exactly the 2,596 files we have been reviewing and many of those files were not previously in the repository. Unless whoever (or whatever) did the git push used out-of-date code, this was the latest version at the time.

The documentation has other markers of recency, like references to LLMs and generative features, which suggests that it is at least from the past year.

Either way, it has more detail than we have ever had before, and it is more than fresh enough for our consideration.

“This all isn’t related to search”

That is correct. I indicated as much in my previous article.

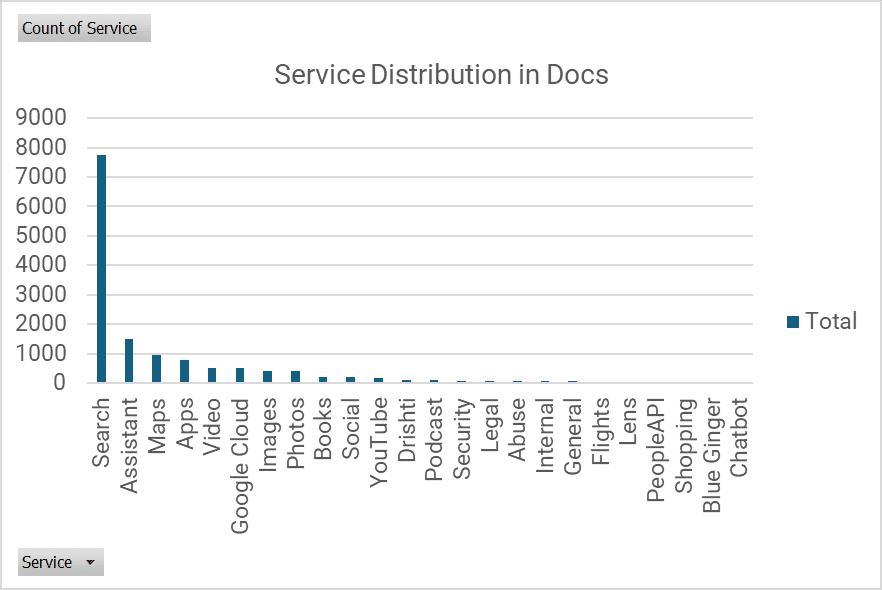

What I did not do was segment the modules by their respective services. I’ve taken the time to do that now.

Here’s a quick-and-dirty breakdown of the features by service, based on ModuleName:

Of the 14,000 features, roughly 8,000 are related to Search.
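If you want to run a rough version of this bucketing yourself, here is a minimal sketch. It assumes you have already collected (module name, attribute count) pairs from HexDocs. The keyword-to-service rules are hypothetical, far coarser than a hand review, and not necessarily how the figures above were produced.

```python
from collections import Counter

# Hypothetical keyword -> service rules; a real classification needs many more.
SERVICE_KEYWORDS = {
    "YouTube": "YouTube",
    "Assistant": "Assistant",
    "Quality": "Search",
    "Indexing": "Search",
}

def classify(module_name: str) -> str:
    """Return the first service whose keyword appears in the module name."""
    for keyword, service in SERVICE_KEYWORDS.items():
        if keyword in module_name:
            return service
    return "Other"

def features_by_service(modules: dict[str, int]) -> Counter:
    """Sum attribute (feature) counts per service."""
    totals = Counter()
    for name, attribute_count in modules.items():
        totals[classify(name)] += attribute_count
    return totals
```

Feed it a dict like `{"QualityTravelGoodSitesData": 10, ...}` and it returns a Counter of features per service; anything unmatched lands in "Other", which tells you where the rules need work.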

“It’s just a list of variables”

Sure.

It’s a list of variables with descriptions that gives you a sense of the level of granularity Google uses to understand and process the web.

If you care about ranking factors this documentation is Christmas, Hanukkah, Kwanzaa and Festivus.

“It’s a conspiracy! You buried [thing I’m interested in]”

Why would I bury something and then encourage people to go look at the documents themselves and write about their own findings?

Make it make sense.

“This won’t change anything about how I do SEO”

This is a choice and, perhaps, a function of me purposely not being prescriptive with how I presented the findings.

What we’ve learned should at least enhance your approach to SEO strategically in a few meaningful ways and can definitely change it tactically. I’ll discuss that below.

FAQs about the leaked docs

I’ve been asked a lot of questions in the past 48 hours so I think it’s valuable to memorialize the answers here.

What were the most interesting things you found?

It’s all very interesting to me, but here’s a finding that I did not include in the original article:

Google can specify a limit of results per content type.

In other words, they can specify only X number of blog posts or Y number of news articles can appear for a given SERP.

Having a sense of these diversity limits could help us decide which content formats to create when we are selecting keywords to target.

For instance, if we know that the limit is three for blog posts and we don’t think we can outrank any of them, then maybe a video is a more viable format for that keyword.
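To make the idea concrete, here is a toy sketch of a per-type cap applied to a ranked list. The caps, content types, and the greedy rank-order walk are all assumptions for illustration; the documentation tells us limits can exist, not how they are enforced.

```python
from collections import Counter

def apply_type_limits(ranked, limits, page_size=10):
    """Walk results in rank order, skipping any whose content type is at its cap.

    ranked: list of (url, content_type) tuples, best first.
    limits: dict of content_type -> max results per SERP (hypothetical values);
            types not listed are uncapped.
    """
    shown = []
    counts = Counter()
    for url, content_type in ranked:
        cap = limits.get(content_type)
        if cap is not None and counts[content_type] >= cap:
            continue  # this format has already hit its limit for the SERP
        shown.append((url, content_type))
        counts[content_type] += 1
        if len(shown) == page_size:
            break
    return shown
```

With a cap of three blog posts, a fourth blog post is skipped even if it outscored the video below it, which is exactly why a different format can be the more viable bet.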

What should we take away from this leak?

Search has many layers of complexity. Even with this broader view, we don’t know which elements of the ranking systems trigger, or why.

We now have more clarity on the signals and their nuances.

What are the implications for local search?

Andrew Shotland is the authority on that. He and his group at LocalSEOGuide have begun to dig into things from that perspective.

What are the implications for YouTube Search?

I have not dug into that, but there are 23 modules with YouTube prefixes.

Someone should definitely do an interpretation of it.

How does this impact the (_______) space?

The simple answer is, it’s hard to know.

An idea that I want to continue to drill home is that Google’s scoring functions behave differently depending on your query and context. Given the evidence we see in how the SERPs function, there are different ranking systems that activate for different verticals.

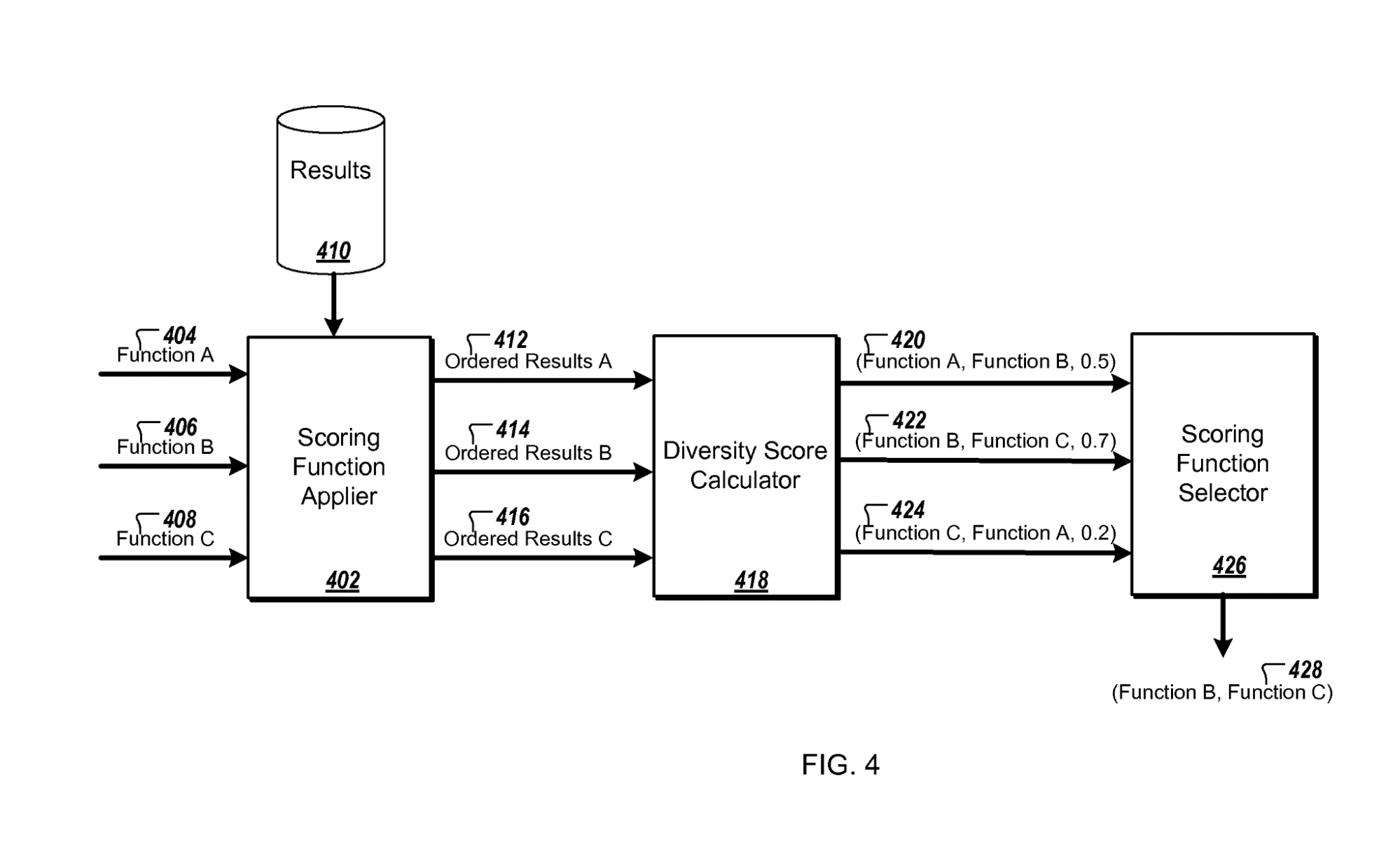

To illustrate this point, the Framework for evaluating web search scoring functions patent shows that Google has the capability to run multiple scoring functions simultaneously and decide which result set to use once the data is returned.
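As a toy illustration of the general idea (not the patent’s actual method), here is a sketch that ranks the same candidates with several scoring functions concurrently and only afterward picks which result set to keep. The scoring functions, the `evaluate` selection rule, and all names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Doc = dict  # each candidate is a plain dict of feature values
ScoringFn = Callable[[Doc], float]

def rank_with(score: ScoringFn, docs: list[Doc], k: int) -> list[Doc]:
    """Top-k documents under one scoring function."""
    return sorted(docs, key=score, reverse=True)[:k]

def run_and_select(scorers: dict[str, ScoringFn], docs: list[Doc],
                   evaluate: Callable[[list[Doc]], float], k: int = 3):
    """Rank with every scorer in parallel, then keep the set `evaluate` prefers."""
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(rank_with, fn, docs, k)
                   for name, fn in scorers.items()}
        result_sets = {name: f.result() for name, f in futures.items()}
    best = max(result_sets, key=lambda name: evaluate(result_sets[name]))
    return best, result_sets[best]
```

The point for SEO is that which features matter depends on which result set wins for a given query, so the same page can be judged by different criteria in different spaces.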

While we have many of the features that Google is storing, we do not have enough information about the downstream processes to know exactly what will happen for any given space.

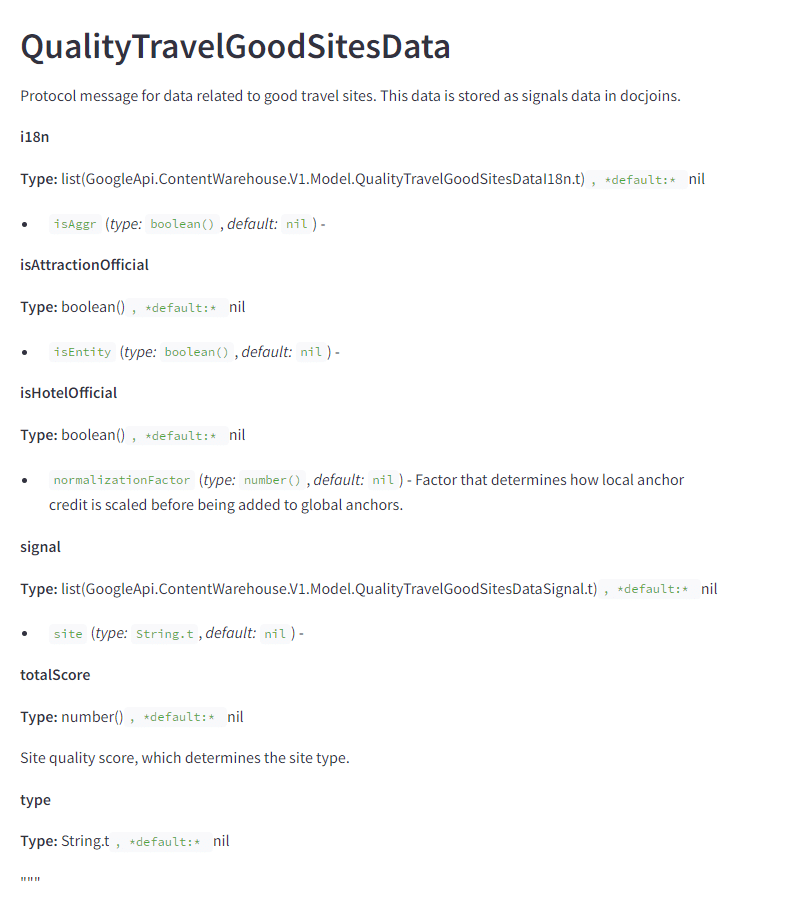

That said, there are some indicators of how Google accounts for some spaces like Travel.

The QualityTravelGoodSitesData module has features that identify and score travel sites, presumably to give them a Boost over non-official sites.

Do you really think Google is purposely torching small sites?

I don’t know.

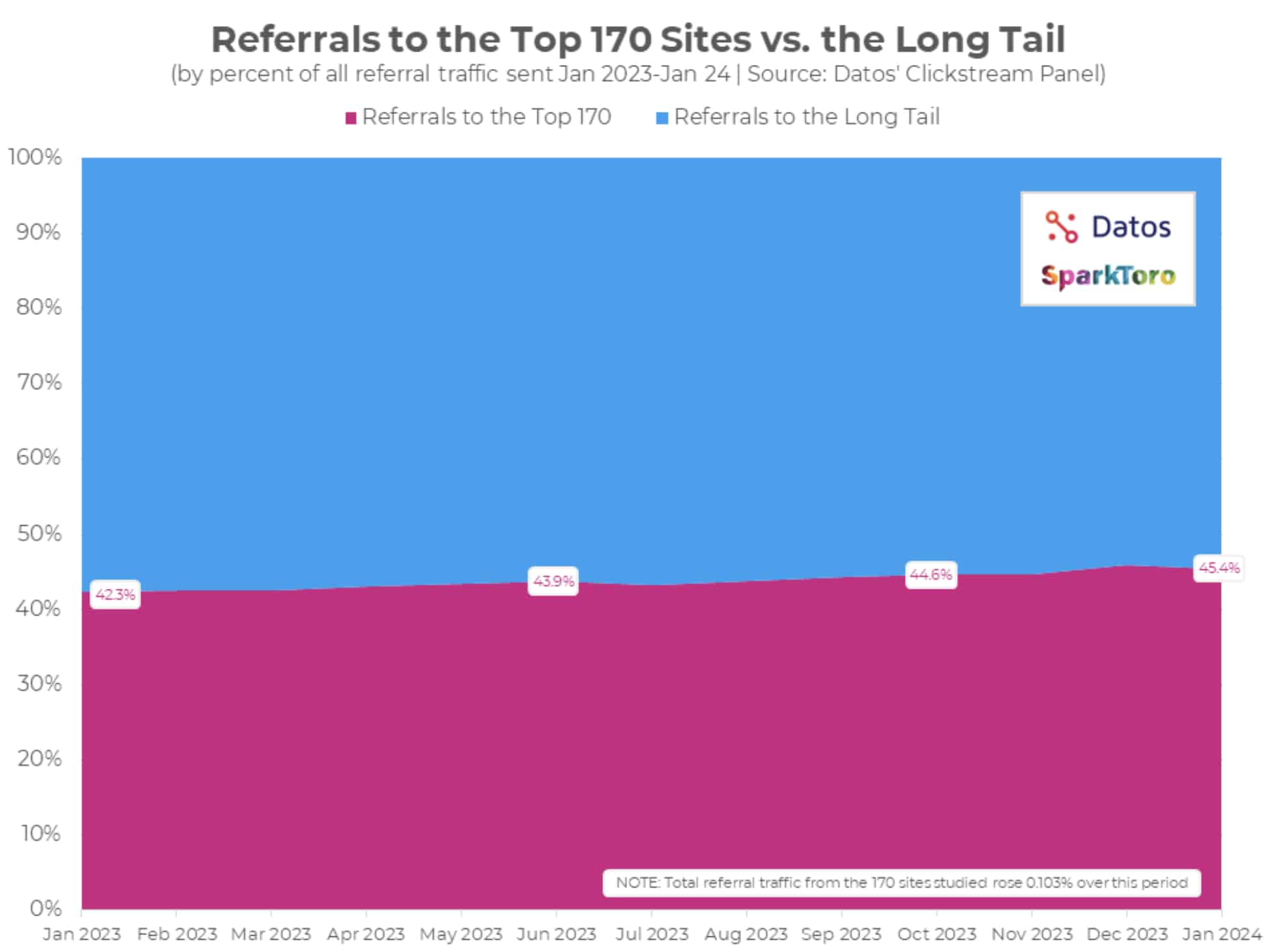

I also don’t know exactly how smallPersonalSite is defined or used, but I do know that there is a lot of evidence of small sites losing most of their traffic and Google is sending less traffic to the long tail of the web.

That’s impacting the livelihood of small businesses. And their outcry seems to have fallen on deaf ears.

Signals like links and clicks inherently support big brands. Those sites naturally attract more links and users are more compelled to click on brands they recognize.

Big brands can also afford agencies like mine and more sophisticated tooling for content engineering, so they can demonstrate better relevance signals.

It’s a self-fulfilling prophecy and it becomes increasingly difficult for small sites to compete in organic search.

If the sites in question would be considered “small personal sites” then Google should give them a fighting chance with a Boost that offsets the unfair advantage big brands have.

Do you think Googlers are bad people?

I don’t.

I think they generally are well-meaning folks that do the hard job of supporting many people based on a product that they have little influence over and is difficult to explain.

They also work in a public multinational organization with many constraints. The information disparity creates a power dynamic between them and the SEO community.

Googlers could, however, dramatically improve their reputations and credibility among marketers and journalists by saying “no comment” more often rather than providing misleading, patronizing or belittling responses like the one they made about this leak.

Although it’s worth noting that the PR respondent Davis Thompson has been doing comms for Search for just the last two months and I’m sure he is exhausted.

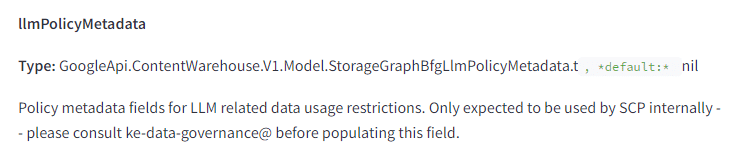

Is there anything related to AI Overviews?

I was not able to find anything directly related to SGE/AIO, but I have already presented a lot of clarity on how that works.

I did find a few policy features for LLMs. This suggests that Google determines what content can or cannot be used from the Knowledge Graph with LLMs.

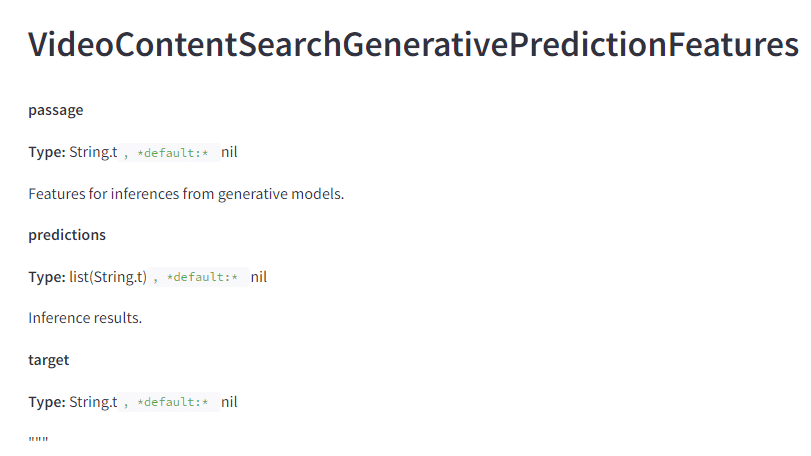

Is there anything related to generative AI?

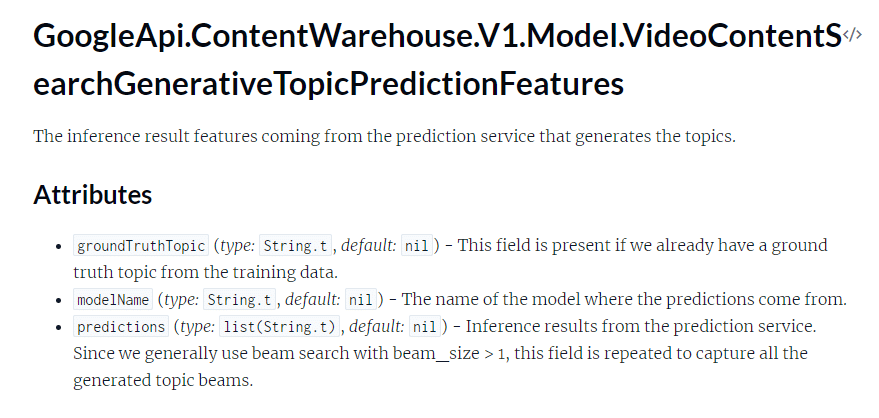

There is something related to video content. Based on the write-ups associated with the attributes, I suspect that they use LLMs to predict the topics of videos.

New discoveries from the leak

Some conversations I’ve had and observed over the past two days have helped me recontextualize my findings – and also dig for more things in the documentation.

Baby Panda is not HCU

Someone with knowledge of Google’s internal systems confirmed that Baby Panda references an older system and is not the Helpful Content Update.

I, however, stand by my hypothesis that HCU exhibits similar properties to Panda and it likely requires similar features to improve for recovery.

A worthwhile experiment would be trying to recover traffic to a site hit by HCU by systematically improving click signals and links to see if it works. If someone with a site that’s been struck wants to volunteer as tribute, I have a hypothesis that I’d like to test on how you can recover.

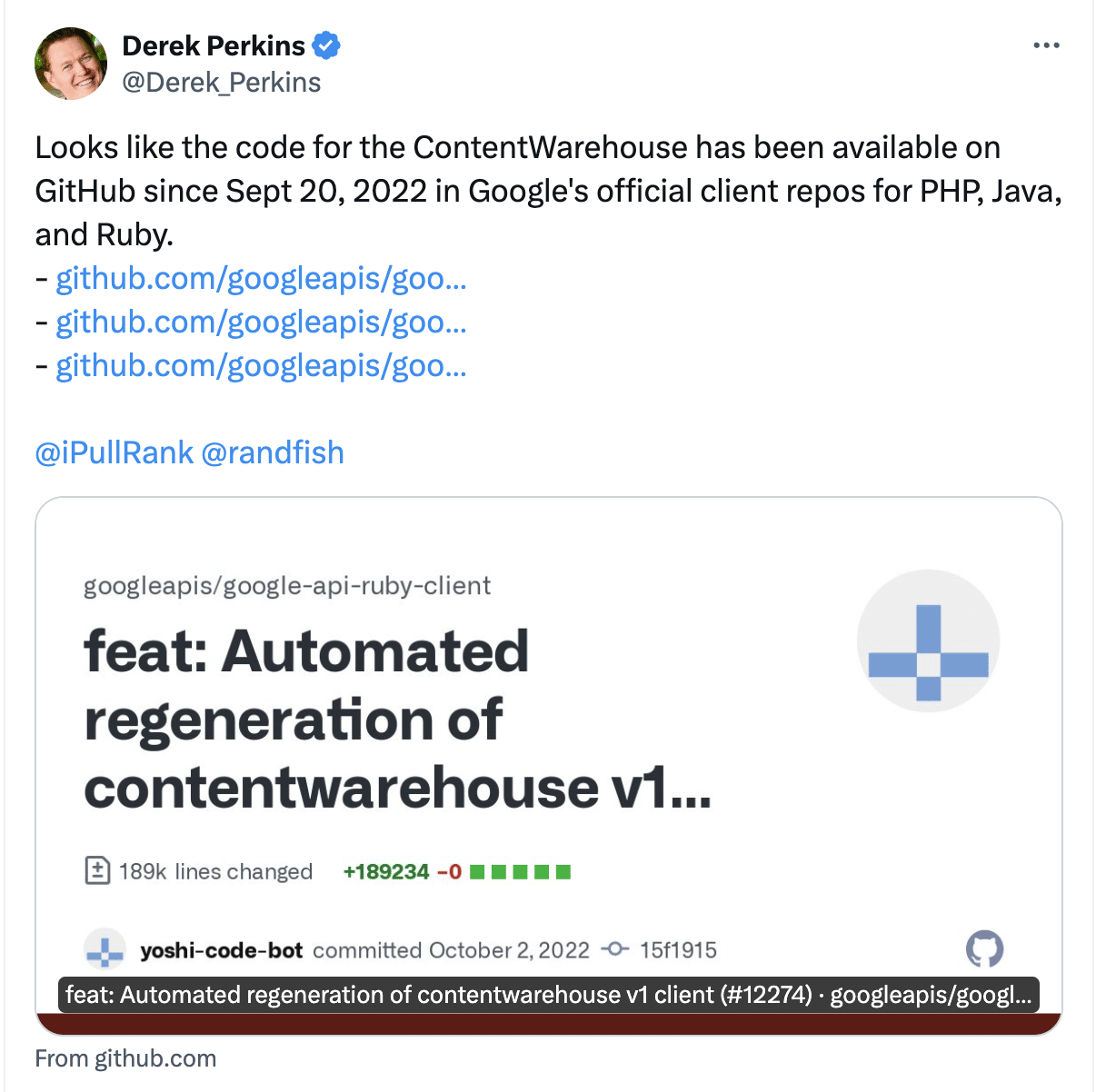

The leaks technically go back two years

Derek Perkins and @SemanticEntity brought to my attention on Twitter that the leaks have been available across languages in Google’s client libraries for Java, Ruby, and PHP.

The difference with those is that there is very limited documentation in the code.

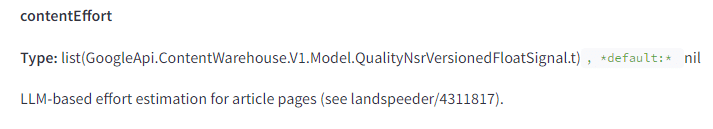

There may be a content effort score for generative AI content

Google is attempting to determine the amount of effort employed when creating content. Based on the definition, we don’t know if all content is scored this way by an LLM, or if it is just content that they suspect is built using generative AI.

Nevertheless, this is a measure you can improve through content engineering.

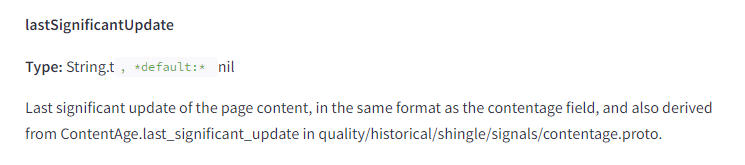

The significance of page updates is measured

The significance of a page update impacts how often a page is crawled and potentially indexed. Previously, you could simply change the dates on your page and it signaled freshness to Google, but this feature suggests that Google expects more significant updates to the page.
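We don’t know how Google measures significance, but for your own audits a rough proxy is the share of a page’s words that actually changed between versions. This sketch uses word-level diffing from Python’s difflib; the 0.1 threshold is an arbitrary assumption, not a known Google value.

```python
import difflib

def change_ratio(old_text: str, new_text: str) -> float:
    """Fraction of word-level content that differs between two versions (0.0 = identical)."""
    matcher = difflib.SequenceMatcher(a=old_text.split(), b=new_text.split(), autojunk=False)
    return 1.0 - matcher.ratio()

def is_significant_update(old_text: str, new_text: str, threshold: float = 0.1) -> bool:
    # threshold is a hypothetical cut-off for "substantial" changes
    return change_ratio(old_text, new_text) >= threshold
```

A date-only edit on a long page barely moves this number, which is the kind of change the feature suggests no longer passes for freshness.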

Pages are protected based on earlier links in Penguin

According to the description of this feature, Penguin had pages that were considered protected based on the history of their link profile.

This, combined with the link velocity signals, could explain why Google is adamant that negative SEO attacks with links are ineffective.

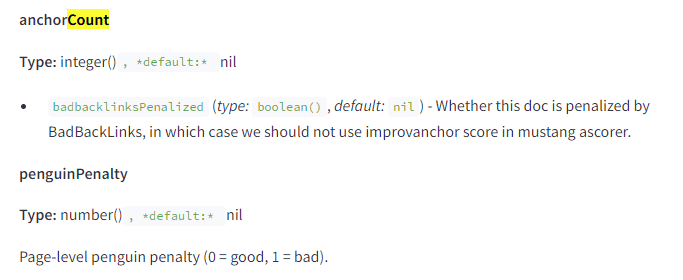

Toxic backlinks are indeed a thing

We’ve heard that “toxic backlinks” are a concept simply used to sell SEO software. Yet there is a badbacklinksPenalized feature associated with documents.

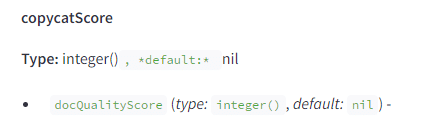

There’s a blog copycat score

In the BlogPerDocData module, there is a copycat score that has no definition but is tied to the docQualityScore.

My assumption is that it is a measure of duplication specifically for blog posts.
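If it is a duplication measure, you can approximate duplication across your own blog posts with a standard near-duplicate technique: word shingles compared with Jaccard similarity. This is swapped in for illustration only; nothing in the documentation says copycatScore is computed this way.

```python
def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Set of overlapping k-word sequences, lowercased."""
    words = text.lower().split()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}

def jaccard(a: str, b: str, k: int = 3) -> float:
    """Share of k-word shingles two texts have in common (1.0 = identical)."""
    sa, sb = shingles(a, k), shingles(b, k)
    union = sa | sb
    # texts shorter than k words have no shingles; treat that as no overlap
    return len(sa & sb) / len(union) if union else 0.0
```

Pairs of posts scoring close to 1.0 are candidates for consolidation or rewriting.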

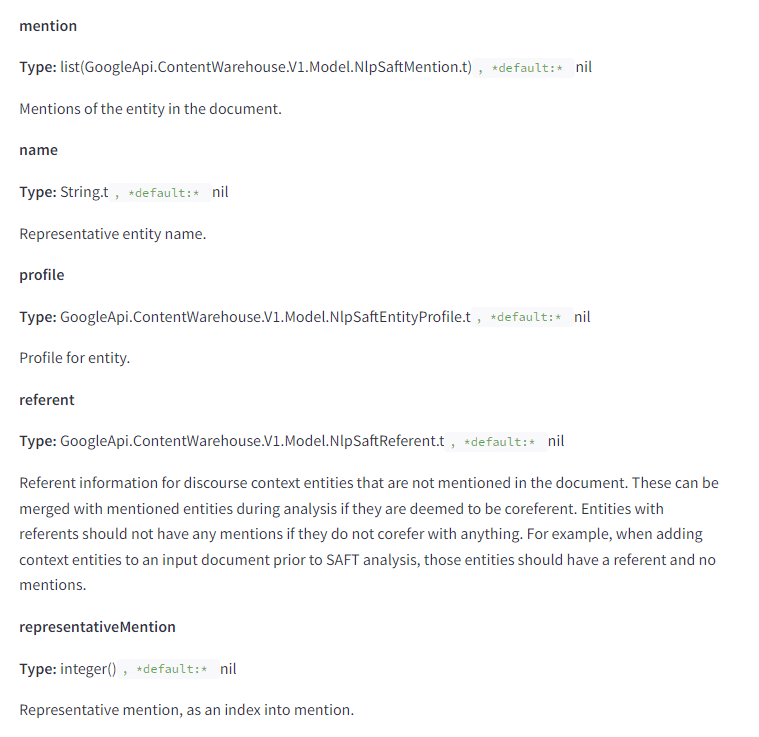

Mentions matter a lot

Although I haven’t come across anything suggesting that mentions are treated as links, there are a lot of references to mentions as they relate to entities.

This simply reinforces that leaning into entity-driven strategies with your content is a worthwhile addition to your strategy.

Googlebot is more capable than we thought

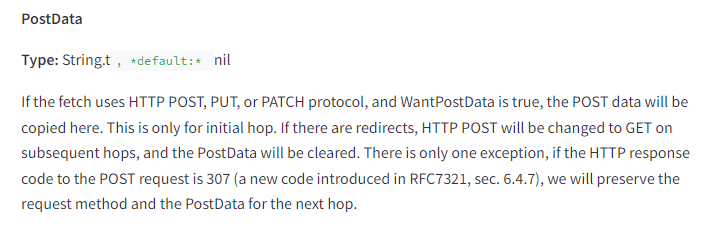

Googlebot’s fetching mechanism is capable of more than just GET requests.

The documentation indicates that it can do POST, PUT, or PATCH requests as well.

The team previously discussed POST requests, but the other two HTTP verbs weren’t previously revealed. If you see some anomalous requests in your logs, this may be why.
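Here is a quick sketch for checking your own logs. It scans lines in the common Apache/Nginx “combined” log format and lists requests whose user agent claims to be Googlebot but whose method is not GET or HEAD. It matches on the user-agent string only, which is easy to spoof, so verify any hits with a reverse DNS lookup before drawing conclusions.

```python
import re

# Combined format: ip - user [time] "METHOD path proto" status size "referer" "agent"
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]*\] "(?P<method>[A-Z]+) (?P<path>\S+)[^"]*" '
    r'\d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def unusual_googlebot_requests(lines):
    """Yield (ip, method, path) for Googlebot-labelled requests that are not GET/HEAD."""
    for line in lines:
        m = LOG_PATTERN.match(line)
        if not m or "Googlebot" not in m["agent"]:
            continue  # unparseable line or not claiming to be Googlebot
        if m["method"] not in ("GET", "HEAD"):
            yield m["ip"], m["method"], m["path"]
```

Pass it an open log file (`with open("access.log") as f: list(unusual_googlebot_requests(f))`) and review whatever comes back.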

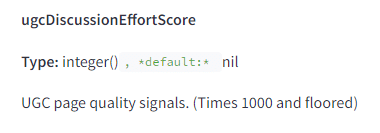

Specific measures of ‘effort’ for UGC

We’ve long believed that leveraging UGC is a scalable way to get more content onto pages and improve their relevance and freshness.

This ugcDiscussionEffortScore suggests that Google is measuring the quality of that content separately from the core content.

When we work with UGC-driven marketplaces and discussion sites, we do a lot of content strategy work related to prompting users to say certain things. That, combined with heavy moderation of the content, should be fundamental to improving the visibility and performance of those sites.

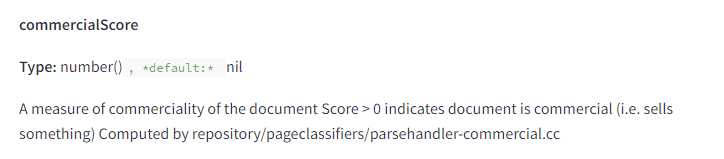

Google detects how commercial a page is

We know that intent is a heavy component of Search, but we only have measures of this on the keyword side of the equation.

Google scores documents this way as well and this can be used to stop a page from being considered for a query with informational intent.

We’ve worked with clients who actively experimented with consolidating informational and transactional page content, with the goal of improving visibility for both types of terms. This worked to varying degrees, but it’s interesting to see the score effectively considered a binary based on this description.

Cool things I’ve seen people do with the leaked docs

I’m pretty excited to see how the documentation is reverberating across the space.

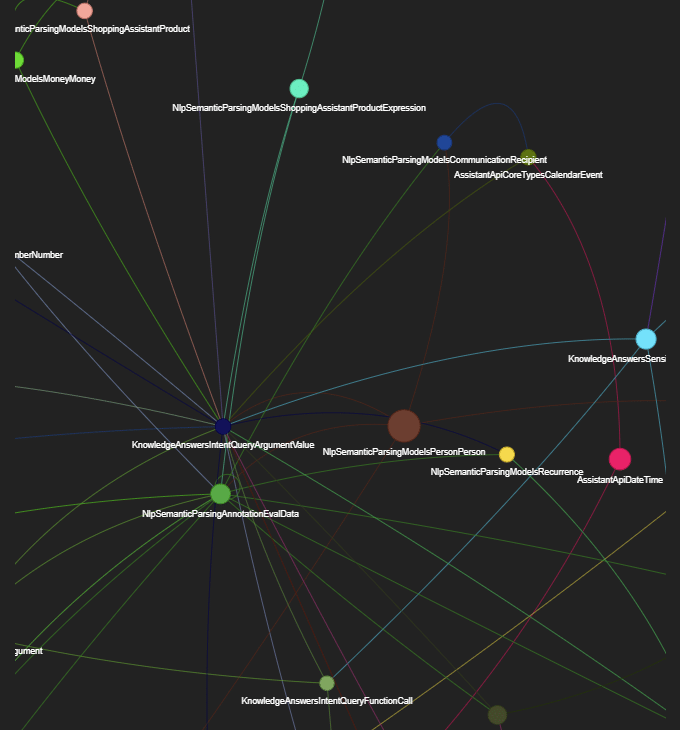

Natzir’s Google’s Ranking Features Modules Relations: Natzir built a network graph visualization tool in Streamlit that shows the relationships between modules.

WordLift’s Google Leak Reporting Tool: Andrea Volpini built a Streamlit app that lets you ask custom questions about the documents to get a report.

Direction on how to move forward in SEO

The power is in the crowd and the SEO community is a global team.

I don’t expect us to all agree on everything I’ve reviewed and discovered, but we are at our best when we build on our collective expertise.

Here are some things that I think are worth doing.

How to read the documents

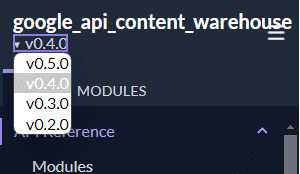

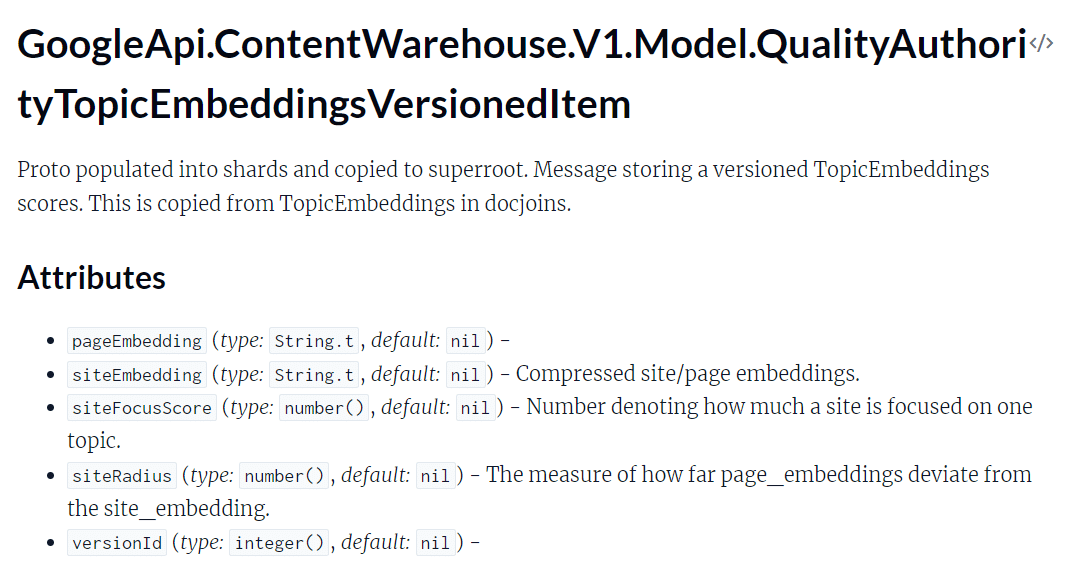

If you haven’t had the chance to dig into the documentation on HexDocs, or you’ve tried and don’t know where to start, worry not: I’ve got you covered.

- Start from the root: This features listings of all the modules with some descriptions. In some cases attributes from the module are being displayed.

- Make sure you’re looking at the right version: v0.5.0 is the patched version. The versions prior to that have the docs we’ve been discussing.

- Scroll down until you find a module that sounds interesting to you: I focused on elements related to search, but you may be interested in Assistant, YouTube, etc.

- Read through the attributes: As you read through the descriptions of features, take note of other features referenced in them.

- Search: Perform searches for those terms in the docs.

- Repeat until you’re done: Go back to step 1. As you learn more, you’ll find other things you want to search and you’ll realize certain strings might mean there are other modules that interest you.

- Share your findings: If you find something cool, share it on social or write about it. I’m happy to help you amplify.

One thing that annoys me about HexDocs is how the left sidebar covers most of the names of the modules. This makes it difficult to know what you’re navigating to.

If you don’t want to mess with the CSS, I’ve made a simple Chrome extension that you can install to make the sidebar bigger.

How your approach to SEO should change strategically

Here are some strategic things that you should more seriously consider as part of your SEO efforts.

If you are already doing all these things, you were right, you do know everything, and I salute you.

SEO and UX need to work more closely together

With NavBoost, Google is valuing clicks as one of the most important features, but we need to understand what session success means.

A search that yields a click on a result where the user does not perform another search can be a success even if they did not spend a lot of time on the site. That can indicate that the user found what they were looking for.

Naturally, a search that yields a click and a user spends 5 minutes on a page before coming back to Google is also a success. We need to create more successful sessions.
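The two success cases above can be written as a simple rule over a session’s events. This is a hypothetical sketch: the event shape, the rule that a click fails only when the user quickly returns and searches again, and the 10-second cutoff are all assumptions, since the documentation doesn’t spell out Google’s definition.

```python
from dataclasses import dataclass

@dataclass
class Event:
    kind: str         # "search" or "click"
    timestamp: float  # seconds since the session started

def click_outcomes(events: list[Event], short_dwell: float = 10.0) -> list[bool]:
    """For each click, True if it looks like a successful visit.

    A click counts as unsuccessful only when the next event is another
    search within `short_dwell` seconds (a quick bounce back to the SERP).
    """
    outcomes = []
    for i, event in enumerate(events):
        if event.kind != "click":
            continue
        nxt = events[i + 1] if i + 1 < len(events) else None
        bounced = (nxt is not None and nxt.kind == "search"
                   and nxt.timestamp - event.timestamp < short_dwell)
        outcomes.append(not bounced)
    return outcomes
```

Under this rule, a short visit that ends the search and a five-minute visit followed by a new search are both successes; only the quick return to search counts against the page.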

SEO is about driving people to the page, UX is about getting them to do what you want on the page. We need to pay closer attention to how components are structured and surfaced to get people to the content that they are explicitly looking for and give them a reason to stay on the site.

It’s not enough to hide what I’m looking for after a story about your grandma’s history of making apple pies with hatchets (or whatever recipe sites are doing these days). Rather, it should be more about providing the exact information, clearly displaying it, and enticing the user to remain on the page with something additionally compelling.

Pay more attention to click metrics

We treat Search Analytics data as outcomes, but Google’s ranking systems treat them as diagnostic features.

If you rank highly and you have a ton of impressions and no clicks (aside from when SiteLinks throws the numbers off) you likely have a problem.

What we are definitively learning is that there is a threshold of expectation for performance based on position. When you fall below that threshold you can lose that position.
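To apply this to your own Search Console exports, compare each query’s CTR with an expected CTR for its average position and flag the ones that fall short. In this minimal sketch, the expected-CTR values are placeholders to replace with benchmarks from your own data (they are not figures from the leak), and the tolerance and impression floor are arbitrary.

```python
# Placeholder expected CTR by rounded average position; calibrate from your own data.
EXPECTED_CTR = {1: 0.25, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}

def underperformers(rows, tolerance=0.5, min_impressions=100):
    """Queries whose CTR is below `tolerance` x the expected CTR for their position.

    rows: iterable of dicts with "query", "clicks", "impressions", "position".
    """
    flagged = []
    for row in rows:
        if row["impressions"] < min_impressions:
            continue  # too little data to judge
        expected = EXPECTED_CTR.get(round(row["position"]))
        if expected is None:
            continue  # no benchmark for this position
        ctr = row["clicks"] / row["impressions"]
        if ctr < tolerance * expected:
            flagged.append((row["query"], round(ctr, 3), expected))
    return flagged
```

Treat the flagged queries as diagnostics: check the title, snippet, and SERP features before assuming the page itself is the problem.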

Content needs to be more focused

We’ve learned definitively that Google uses vector embeddings to determine how far a given page is from the rest of what you talk about.

This indicates that it will be challenging to go far into upper funnel content successfully without a structured expansion or without authors who have demonstrated expertise in that subject area.
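Here is one way such a measure could work, as an illustration rather than Google’s implementation: average a site’s page embeddings into a centroid, then compute each page’s cosine distance from it. How you generate the embeddings (any sentence-embedding model) is left open, and the vectors you feed in are assumed to come from the same model.

```python
import math

def cosine_distance(u, v):
    """1 - cosine similarity; 0.0 means the vectors point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 if norm == 0 else 1.0 - dot / norm  # treat zero vectors as unrelated

def distance_from_site_focus(page_vectors: dict[str, list[float]]) -> dict[str, float]:
    """Cosine distance of each page's embedding from the site's mean embedding."""
    dims = len(next(iter(page_vectors.values())))
    centroid = [sum(vec[i] for vec in page_vectors.values()) / len(page_vectors)
                for i in range(dims)]
    return {url: cosine_distance(vec, centroid) for url, vec in page_vectors.items()}
```

Pages with the largest distances are the ones furthest from what the site is known for, and the ones most likely to need a structured expansion or a credible author.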

Encourage your authors to cultivate expertise in what they publish across the web and treat their bylines like the gold standard that it is.

SEO should always be experiment-driven

Due to the variability of the ranking systems, you cannot take best practices at face value for every space. You need to test, learn and build experimentation in every SEO program.

Large sites leveraging SEO split-testing tools like SearchPilot are already on the right track, but even small sites should test how they structure and position their content and metadata to encourage stronger click metrics.

In other words, we need to actively test the SERP, not just the site.

Pay attention to what happens after they leave your site

We now have verification that Google is using data from Chrome as part of the search experience. There is value in reviewing the clickstream data from SimilarWeb and Semrush.

That data shows where people are going next and how you can give them that information without them leaving you.

Build keyword and content strategy around SERP format diversity

Google potentially limits the number of pages of certain content types ranking in the SERP, so checking the SERPs should become part of your keyword research.

Don’t align formats with keywords if there’s no reasonable possibility of ranking.

How your approach to SEO should change tactically

Tactically, here are some things you can consider doing differently. Shout out to Rand because a couple of these ideas are his.

Page titles can be as long as you want

We now have further evidence that the 60-70 character limit is a myth.

In my own experience we have experimented with appending more keyword-driven elements to the title and it has yielded more clicks because Google has more to choose from when it rewrites the title.

Use fewer authors on more content

Rather than using an array of freelance authors, you should work with fewer that are more focused on subject matter expertise and also write for other publications.

Focus on link relevance from sites with traffic

We’ve learned that link value is higher from pages that are prioritized higher in the index. Pages that get more clicks are the ones likely to appear in Google’s flash memory.

We’ve also learned that Google highly values relevance. We need to stop going after link volume and solely focus on relevance.

Default to originality instead of long form

We now know originality is measured in multiple ways and can yield a boost in performance.

Some queries simply don’t require a 5,000-word blog post (I know, I know). Focus on originality and layer more information in your updates as competitors begin to copy you.

Make sure all dates associated with a page are consistent

It’s common for dates in schema to be out of sync with dates on the page and dates in the XML sitemap. All of these need to be synced to ensure Google has the best understanding of how old the content is.

As you refresh your decaying content, make sure every date is aligned so Google gets a consistent signal.
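A quick audit can catch drift between these sources. This standard-library sketch reads `dateModified` (falling back to `datePublished`) from a page’s JSON-LD and `lastmod` for the same URL from the XML sitemap, then compares both with the date shown on the page. Extracting the visible date is site-specific, so here it is passed in as an ISO date string.

```python
import json
import xml.etree.ElementTree as ET
from datetime import date
from typing import Optional

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def schema_date(json_ld: str) -> date:
    """dateModified from a JSON-LD block, falling back to datePublished."""
    data = json.loads(json_ld)
    value = data.get("dateModified") or data["datePublished"]
    return date.fromisoformat(value[:10])  # keep YYYY-MM-DD, drop any time part

def sitemap_date(sitemap_xml: str, url: str) -> Optional[date]:
    """lastmod for `url` in a sitemap, or None if the URL or lastmod is missing."""
    root = ET.fromstring(sitemap_xml)
    for entry in root.findall("sm:url", SITEMAP_NS):
        if entry.findtext("sm:loc", namespaces=SITEMAP_NS) == url:
            lastmod = entry.findtext("sm:lastmod", namespaces=SITEMAP_NS)
            return date.fromisoformat(lastmod[:10]) if lastmod else None
    return None

def dates_consistent(visible: str, json_ld: str, sitemap_xml: str, url: str) -> bool:
    """True only when the on-page, schema and sitemap dates all agree."""
    found = {date.fromisoformat(visible), schema_date(json_ld), sitemap_date(sitemap_xml, url)}
    return len(found) == 1  # a missing sitemap date (None) also counts as inconsistent
```

Run it across your refreshed URLs after each content update so a stale sitemap entry doesn’t undercut the new date on the page.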

Use old domains with extreme care

If you’re looking to use an old domain, it’s not enough to buy it and slap your new content on its old URLs. You need to take a structured approach to updating the content to phase out what Google has in its long-term memory.

You may even want to avoid transferring ownership at the registrar until you’ve systematically established the new content.

Make gold-standard documents

We now have evidence that quality raters are doing feature engineering for Google engineers to train their classifiers. You want to create content that quality raters would score as high quality so your content has a small influence over the next core update.

Bottom line

It’s shortsighted to say nothing should change. Based on this information, I think it’s time for us to reconsider our best practices.

Let’s keep what works and dump what’s not valuable. Because, I tell you what, there’s no text-to-code ratio in these documents, but several of your SEO tools will tell you your site is falling apart because of it.

How Google can repair its relationship with the SEO community

A lot of people have asked me how we can repair our relationship with Google moving forward.

I would prefer that we get back to a more productive space to improve the web. After all, we are aligned in our goals of making search better.

I don’t know that I have a complete solution, but I think an apology and owning their role in misdirection would be a good start. I have a few other ideas that we should consider.

- Develop working relationships with us: On the advertising side, Google wines and dines its clients. I understand that they don’t want to show any sort of favoritism on the organic side, but Google needs to be better about developing actual relationships with the SEO community. Perhaps a structured program with OKRs that is similar to how other platforms treat their influencers makes sense. Right now things are pretty ad hoc where certain people get invited to events like I/O or to secret meeting rooms during the (now-defunct) Google Dance.

- Bring back the annual Google Dance: Hire Lily Ray to DJ and make it about celebrating annual OKRs that we have achieved through our partnership.

- Work together on more content: The bidirectional relationships that Martin Splitt has cultivated through his various video series are strong contributions where Google and the SEO community have come together to make things better. We need more of that.

- Let us hear from the engineers more: I’ve gotten the most value out of hearing directly from search engineers. Paul Haahr’s presentation at SMX West 2016 lives rent-free in my head, and I still refer back to videos from the 2019 Search Central Live Conference in Mountain View regularly. I think we’d all benefit from hearing directly from the source.

Everybody keep up the good work

I’ve seen some fantastic things come out of the SEO community in the past 48 hours.

I’m energized by the fervor with which everyone has consumed this material and offered their takes – even when I don’t agree with them. This type of discourse is healthy and what makes our industry special.

I encourage everyone to keep going. We’ve been training our whole careers for this moment.

Editor’s note: Join Mike King and Danny Goodwin at SMX Advanced for a late-breaking session exploring the leak and its implications. Learn more here.

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing

Category seo news | Tags:


Google adds opt-in for Video Enhancements for ads

Written on May 31, 2024 at 3:39 am, by admin

Google is allowing more advertisers to opt into new “video enhancements” for video ad campaigns. These aim to improve performance by automatically creating additional video formats from advertisers’ original assets.

Why we care. With more video consumption happening on mobile devices, having ads properly formatted for different screen sizes and orientations is crucial. The new enhancements use AI to resize and reformat videos to be more effective.

How it works. Advertisers upload a standard horizontal video as they normally would.

Google’s AI automatically generates additional versions in different aspect ratios like square (1:1) and vertical (9:16, 4:5).

It intelligently crops and repositions the video to preserve key elements.

Shorter video clips are also automatically created by cutting down long videos to highlight key moments.
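Google does not say how its cropping works. As a rough illustration of the arithmetic involved, the sketch below finds the largest window of a target aspect ratio (1:1, 9:16, 4:5) that fits inside a horizontal frame, centered on a "key element" point and clamped to the frame edges. The function name and the `focus_x`/`focus_y` parameters are hypothetical; how Google's AI picks the key element is not described and is not modeled here.

```python
from fractions import Fraction


def crop_box(src_w, src_h, ratio_w, ratio_h, focus_x=None, focus_y=None):
    """Return (left, top, width, height) of the largest ratio_w:ratio_h crop
    that fits in a src_w x src_h frame, centered on the (hypothetical)
    focus point where possible. Defaults to the frame center."""
    target = Fraction(ratio_w, ratio_h)
    if Fraction(src_w, src_h) > target:
        # Source is wider than the target: keep full height, trim width.
        h = src_h
        w = int(src_h * target)
    else:
        # Source is taller (or equal): keep full width, trim height.
        w = src_w
        h = int(src_w / target)
    fx = src_w / 2 if focus_x is None else focus_x
    fy = src_h / 2 if focus_y is None else focus_y
    # Center the window on the focus point, then clamp it inside the frame.
    left = min(max(int(fx - w / 2), 0), src_w - w)
    top = min(max(int(fy - h / 2), 0), src_h - h)
    return left, top, w, h


# A 1920x1080 (16:9) source cut down to the formats mentioned above.
for ratio in [(1, 1), (9, 16), (4, 5)]:
    print(ratio, crop_box(1920, 1080, *ratio))
```

Clamping matters when the key element sits near an edge: a subject at the far right of the frame pulls the vertical window as far right as it can go without leaving the picture.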

Image credit: Thomas Eccel

The benefits. According to Google, this:

- Creates mobile-optimized video ads without any extra work for advertisers.

- May boost campaign performance by serving properly formatted video ads.

- Saves time and resources by automating creation of additional video assets.

How to enable/disable.

- Video enhancements are enabled by default for new video ad campaigns.

- Advertisers can toggle them on/off in their campaign settings under “Additional settings” > “Video enhancements.”

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing

Category seo news | Tags:


Unpacking Google’s massive search documentation leak

Written on May 31, 2024 at 3:39 am, by admin

A massive Google Search internal ranking documentation leak has sent shockwaves through the SEO community. The leak, which exposed over 14,000 potential ranking features, provides an unprecedented look under the hood of Google’s closely guarded search rankings system.

A man named Erfan Azimi shared a Google API doc leak with SparkToro’s Rand Fishkin, who, in turn, brought in Michael King of iPullRank to help distribute this story.

The leaked files originated from a Google API document commit titled “yoshi-code-bot /elixer-google-api,” which means this was not a hack or a whistle-blower.

SEOs typically fall into one of three camps:

- Everything Google tells SEOs is true and we should follow those words as our scripture (I call these people the Google Cheerleaders).

- Google is a liar, and you can’t trust anything Google says. (I think of them as blackhat SEOs.)

- Google sometimes tells the truth, but you need to test everything to see if you can find it. (I self-identify with this camp and I’ll call this “Bill Slawski rationalism” since he was the one who convinced me of this view).

I suspect many people will be changing their camp after this leak.

You can find all the files here, but you should know that over 14,000 possible ranking signals/features exist, and it’ll take you an entire day (or, in my case, night) to dig through everything.

I’ve read through the entire thing and distilled it into a 40-page PDF that I’m now converting into a summary for Search Engine Land.

While I provide my thoughts and opinions, I’m also sharing the names of the specific ranking features so you can search the database on your own. I encourage everyone to make their own conclusions.

Key points from Google Search document leak

- PageRank has been modified by nearest seed (the original PageRank is now deprecated). The algorithm is called pageRank_NS, and it is associated with document understanding.

- The leak mentions seven different types of PageRank, one of which is the famous ToolBarPageRank.

- Google has a specific method of identifying the following business models: news, YMYL, personal blogs (small blogs), ecommerce and video sites. It is unclear why Google is specifically filtering for personal blogs.

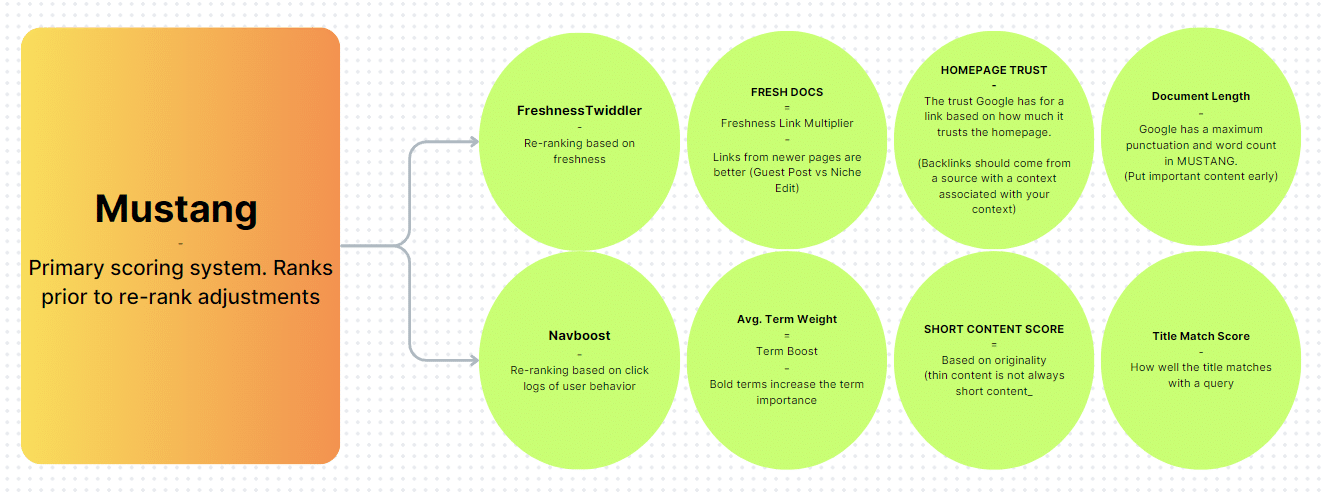

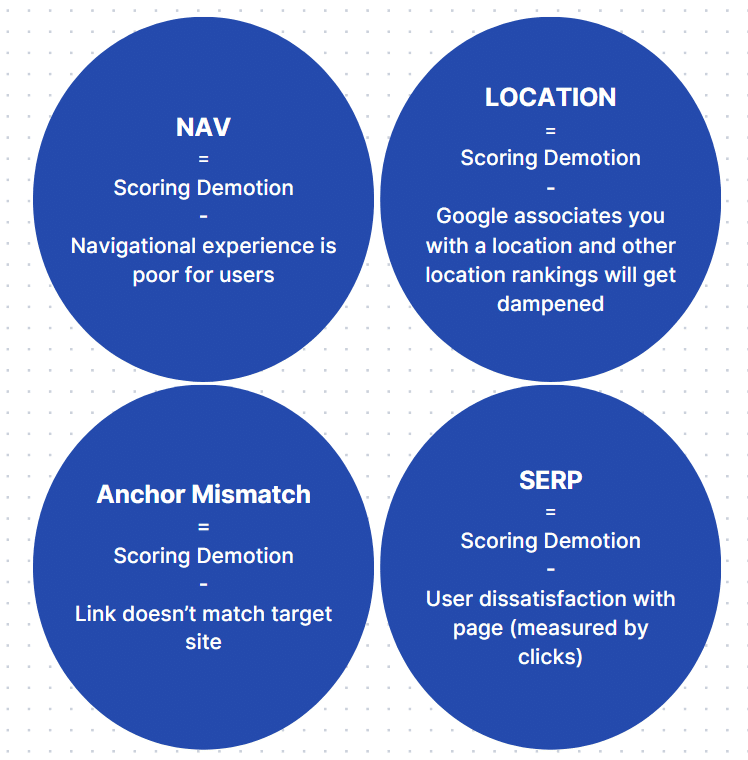

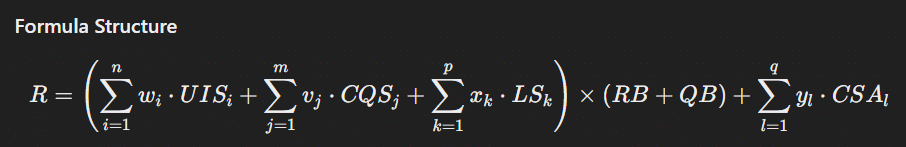

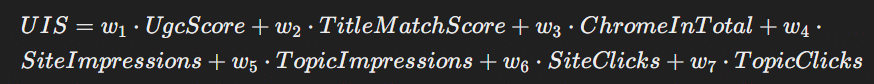

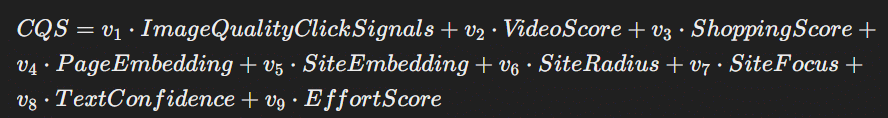

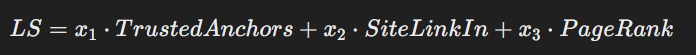

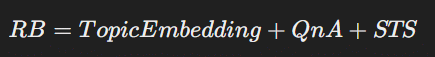

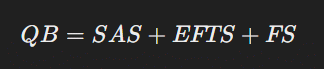

- The most important components of Google’s algorithm appear to be navBoost, NSR and chardScores.

- Google uses a site-wide authority metric and a few site-wide authority signals, including traffic from Chrome browsers.

- Google uses page embeddings, site embeddings, site focus and site radius in its scoring function.

- Google measures bad clicks, good clicks, clicks, last longest clicks and site-wide impressions.

Why is Google specifically filtering for personal blogs / small sites? Why did Google publicly say on many occasions that they don’t have a domain or site authority measurement?

Why did Google lie about their use of click data? Why does Google have seven types of PageRank?

I don’t have the answers to these questions, but they are mysteries the SEO community would love to understand.

Things that stand out: Favorite discoveries

Google has something called pageQuality (PQ). One of the most interesting parts of this measurement is that Google is using an LLM to estimate “effort” for article pages. This value sounds helpful for Google in determining whether a page can be replicated easily.

Takeaway: Tools, images, videos, unique information and depth of information stand out as ways to score high on “effort” calculations. Coincidentally, these things have also been proven to satisfy users.

Topic borders and topic authority appear to be real

Topical authority is a concept based on Google’s patent research. If you’ve read the patents, you’ll see that many of the insights SEOs have gleaned from patents are supported by this leak.

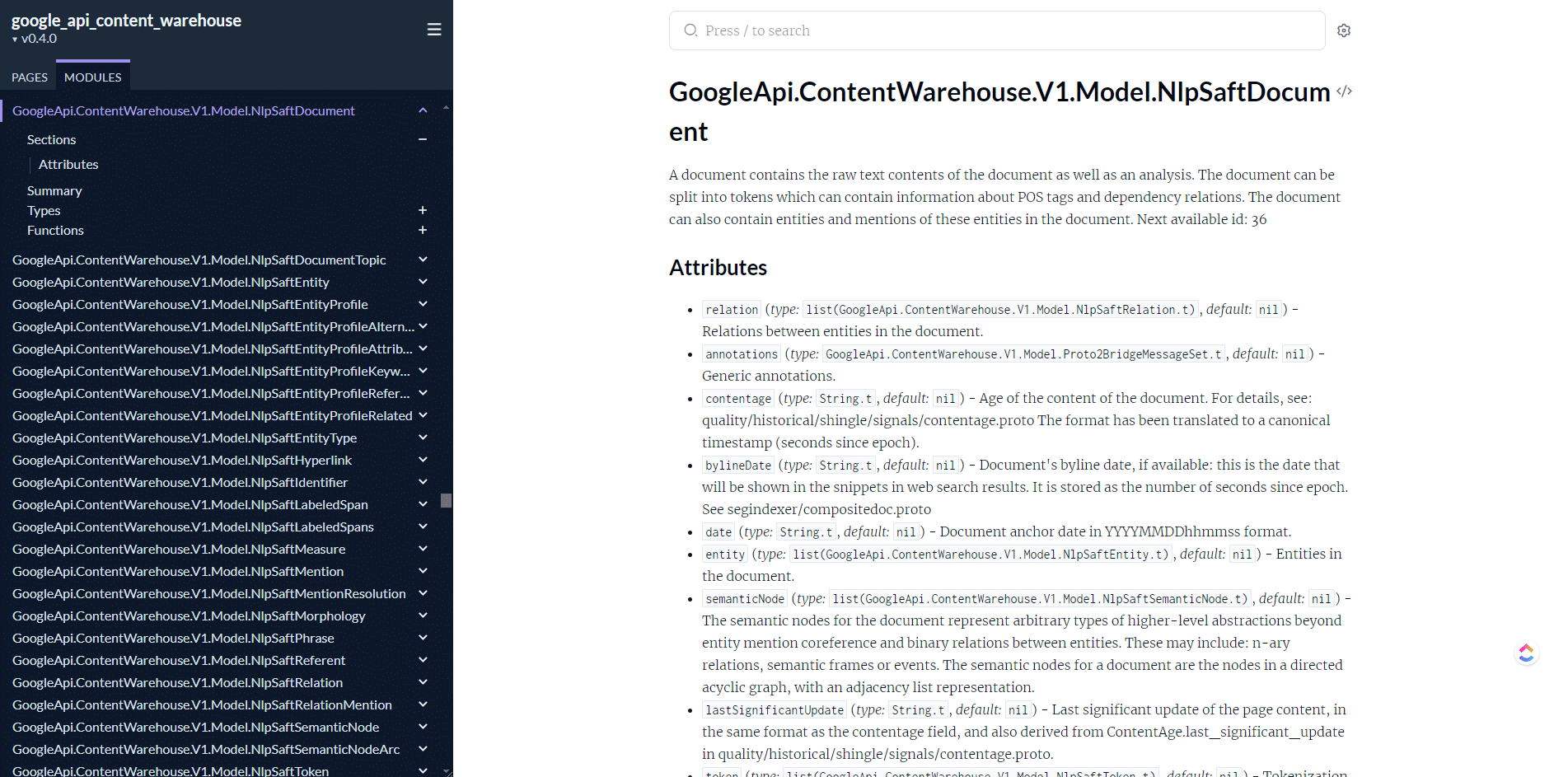

In the algo leak, we see that siteFocusScore, siteRadius, siteEmbeddings and pageEmbeddings are used for ranking.

What are they?

- siteFocusScore denotes how much a site is focused on a specific topic.

- siteRadius measures how far page embeddings deviate from the site embedding. In plain speech, Google creates a topical identity for your website, and every page is measured against that identity.

- siteEmbeddings are compressed site/page embeddings.
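The leak names these features but does not give formulas. To make the siteRadius idea concrete, here is a minimal sketch that assumes the site embedding is simply the average of its page embeddings and that "radius" is the average cosine distance of pages from that average. Both assumptions are mine, not Google's; the toy two-dimensional vectors stand in for real embeddings.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity; assumes neither vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def site_embedding(page_embeddings):
    # Assumption: the site's topical identity is the element-wise mean
    # of its page embeddings.
    n = len(page_embeddings)
    return [sum(col) / n for col in zip(*page_embeddings)]


def site_radius(page_embeddings):
    # Assumption: radius = average distance of pages from the site identity.
    centre = site_embedding(page_embeddings)
    return sum(cosine_distance(p, centre) for p in page_embeddings) / len(page_embeddings)


def page_deviation(page, page_embeddings):
    """How far one page sits from the site's topical identity."""
    return cosine_distance(page, site_embedding(page_embeddings))


focused_site = [[1.0, 0.0], [1.0, 0.1], [1.0, -0.1]]  # pages on one topic
mixed_site = [[1.0, 0.0], [0.0, 1.0]]                 # pages on unrelated topics
print(site_radius(focused_site), site_radius(mixed_site))
```

Under these assumptions, a tightly focused site has a small radius, and an off-topic page shows up as a large deviation from the site embedding.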

Source: Topic embeddings data module

Why is this interesting?

- If you know how embeddings work, you can optimize your pages to deliver content in a way that is better for Google’s understanding.

- Topic focus is directly called out here. We don’t know why topic focus is mentioned, but we know that a numerical value is given to a website based on the site’s topic score.

- Deviation from the topic is measured, which means that the concept of topical borders and contextual bridging has some potential support outside of patents.

- It would appear that topical identity and topical measurements in general are a focus for Google.

Remember when I said PageRank is deprecated? I believe nearest seed (NS) can apply in the realm of topical authority.

NS focuses on a localized subset of the network around the seed nodes. Proximity and relevance are key focus areas. It can be personalized based on user interest, ensuring pages within a topic cluster are considered more relevant without using the broad web-wide PageRank formula.
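The leak does not describe how pageRank_NS is computed. One well-known way to localize PageRank around seed nodes is personalized PageRank, where the random surfer only ever teleports back to the seed pages, so scores fall off with distance from the seeds. The sketch below assumes that technique; the toy link graph is hypothetical. Seeding it with low-quality pages instead of trusted ones would model the "taint by seed association" idea discussed below.

```python
def seeded_pagerank(links, seeds, damping=0.85, iterations=50):
    """Personalized PageRank: all teleport probability goes to seed pages.

    links: dict mapping every page to the list of pages it links to
           (every link target is assumed to also be a key).
    seeds: non-empty iterable of seed pages.
    """
    pages = list(links)
    seeds = set(seeds)
    teleport = {p: (1.0 / len(seeds) if p in seeds else 0.0) for p in pages}
    rank = dict(teleport)
    for _ in range(iterations):
        # Random jumps land only on seeds.
        new = {p: (1 - damping) * teleport[p] for p in pages}
        for page, outlinks in links.items():
            if outlinks:
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new[target] += share
            else:
                # Dangling page: send its mass back to the seeds.
                for s in seeds:
                    new[s] += damping * rank[page] / len(seeds)
        rank = new
    return rank


# Hypothetical graph: "far" links into the cluster but nothing links to it.
links = {"seed": ["a"], "a": ["b"], "b": ["seed"], "far": ["seed"]}
rank = seeded_pagerank(links, seeds=["seed"])
print({page: round(score, 3) for page, score in rank.items()})
```

Pages one hop from the seed outrank pages two hops away, and a page the seeds never reach ends with a score of zero, which is the "proximity and relevance" behavior described above.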

Another way of approaching this is to apply NS and PQ (page quality) together.

By using PQ scores as a mechanism for assisting the seed determination, you could improve the original PageRank algorithm further.

On the opposite end, we could apply this to lowQuality (another score from the document). If a low-quality page links to other pages, then the low quality could taint the other pages by seed association.

A seed isn’t necessarily a quality node. It could be a poor-quality node.

When we apply site2Vec and the knowledge of siteEmbeddings, I think the theory holds water.

If we extend this beyond a single website, I imagine variants of Panda could work in this way. All that Google needs to do is begin with a low-quality cluster and extrapolate pattern insights.

What if NS could work together with OnsiteProminence (score value from the leak)?

In this scenario, nearest seed could identify how closely certain pages relate to high-traffic pages.

Image quality

ImageQualityClickSignals indicates that image quality is measured by clicks (usefulness, presentation, appealingness, engagingness). These signals are considered Search CPS Personal data.

No idea whether appealingness or engagingness are words – but it’s super interesting!

Source: Image quality data module

Host NSR

I believe NSR is an acronym for Normalized Site Rank.

Host NSR is site rank computed for host-level (website) sitechunks. This value encodes nsr, site_pr and new_nsr. It’s important to note that nsr_data_proto seems to be the newest version of this, but not much information about it is available.

In essence, Google takes chunks of your domain (sitechunks) and derives site rank by measuring these chunks. This makes sense because we already know Google does this on a page-by-page, paragraph and topical basis.

It almost seems like a chunking system designed to poll random quality metric scores rooted in aggregates. It’s kinda like a pop quiz (rough analogy).

NavBoost

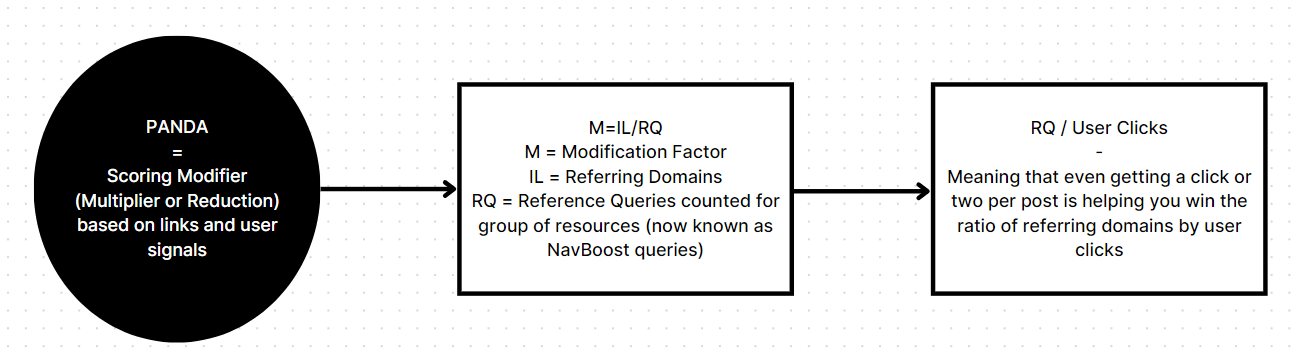

I’ll discuss this more, but it is one of the ranking pieces most mentioned in the leak. NavBoost is a re-ranking based on click logs of user behavior. Google has denied this many times, but a recent court case forced them to reveal that they rely quite heavily on click data.

The most interesting part (which should not come as a surprise) is that Chrome data is specifically used. I imagine this extends to Android devices as well.

This would be more interesting if we brought in the patent for the site quality score. Links have a ratio with clicks, and we see quite clearly in the leak docs that topics, links and clicks have a relationship.

While I can’t make conclusions here, I know what Google has shared about the Panda algorithm and what the patents say. I also know that Panda, Baby Panda and Baby Panda V2 are mentioned in the leak.

If I had to guess, I’d say that Google uses the referring domain and click ratio to determine score demotions.

HostAge

Nothing about a website’s age is considered in ranking scores, but hostAge is mentioned in relation to a sandbox. The data is used in Twiddler to sandbox fresh spam during serving time.

I consider this an interesting finding because many SEOs argue about the sandbox and many argue about the importance of domain age.

As far as the leak is concerned, the sandbox is for spam and domain age doesn’t matter.

ScaledIndyRank

ScaledIndyRank is an independence rank. Nothing else is mentioned about it, and ExptIndyRank3 is considered experimental. If I had to guess, this has something to do with information gain on a sitewide level (original content).

Note: It is important to remember that we don’t know to what extent Google uses these scoring factors. The majority of the algorithm is a secret. My thoughts are based on what I’m seeing in this leak and what I’ve read by studying three years of Google patents.

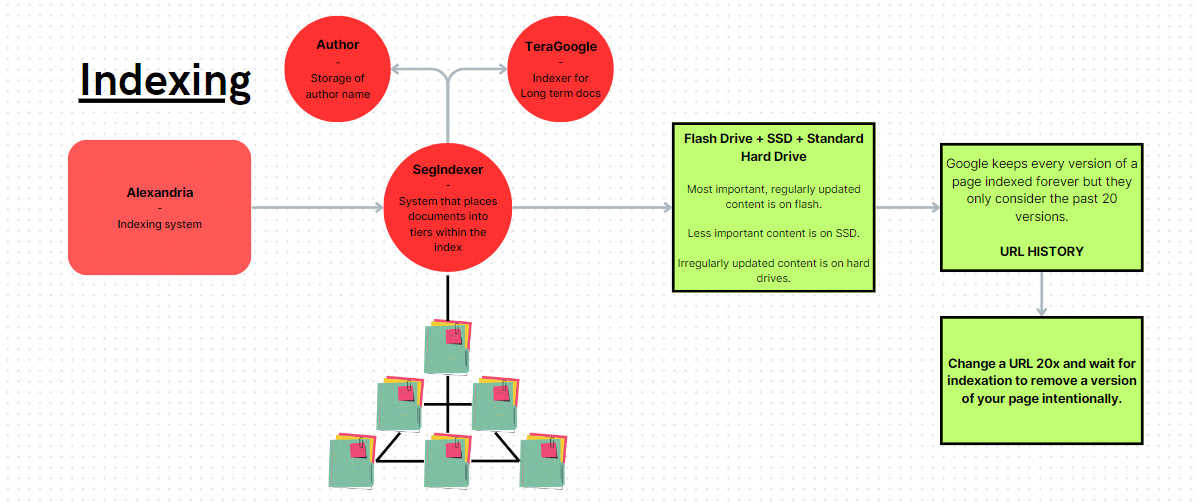

How to remove Google’s memory of an old version of a document

This is perhaps a bit of conjecture, but the logic is sound. According to the leak, Google keeps a record of every version of a webpage. This means Google has an internal web archive of sorts (Google’s own version of the Wayback Machine).

The nuance is that Google only uses the last 20 versions of a document. If you update a page, wait for a crawl and repeat the process 20 times, you will effectively push out certain versions of the page.
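To see why 20 crawled updates would be enough, here is a toy model of a version history capped at 20 entries. The class and method names are hypothetical, and it assumes each crawl of an updated page adds exactly one new version; the leak only states the 20-version limit, not how versions are stored or weighted.

```python
from collections import deque


class VersionHistory:
    """Toy model: keep only the most recent `limit` crawled versions."""

    def __init__(self, limit=20):
        self.versions = deque(maxlen=limit)

    def record_crawl(self, content):
        # Once the deque is full, appending silently drops the oldest version.
        self.versions.append(content)

    def remembers(self, content):
        return content in self.versions


history = VersionHistory()
history.record_crawl("original version")
for i in range(20):  # update, wait for a crawl, repeat 20 times
    history.record_crawl(f"update {i + 1}")
print(history.remembers("original version"))
```

After the 20th crawled update, the original version is no longer among the stored versions.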

This might be useful information, considering that the historical versions are associated with various weights and scores.

Remember that the documentation has two forms of update history: significant update and update. It is unclear whether significant updates are required for this sort of version memory tomfoolery.

Google Search ranking system

While it’s conjecture, one of the most interesting things I found was the term weight (literal size).

This would indicate that bolding words, or font size in general, has some sort of impact on document scores.

Index storage mechanisms