Improving content quality at scale with AI

Written on May 24, 2024 at 9:34 am, by admin

I’ve learned through experience to be cautious when using the words “content” and “scale” close to each other in SEO because it’s usually coded-speak for creating content in large volumes, primarily for search engines.

We’ve seen this approach end in disaster time and time again when the search engines work out what is going on.

When used correctly, however, AI can be a powerful assistant for SEO and help work out how to improve the quality of our content.

What we’re going to do

Our goal is to use an automated process to find “intent gaps” in our content.

To do this – all in real-time – we will:

- Crawl our content URLs.

- Analyze the text content on the page with ChatGPT.

- Compare this to an intent map of Google’s People Also Ask data to determine where we have gaps in our content.

The result will be a spreadsheet that potentially saves us hundreds of hours by automatically listing questions that our content does not answer, which Google has already determined are related to the page’s intent.

Tools we need

- Screaming Frog SEO spider: This popular web crawler recently released its v20, which, among other things, includes a new feature we will use to execute custom JavaScript while crawling, meaning we can extract data as we go.

- OpenAI API: The OpenAI API will allow us to programmatically interact with ChatGPT for content analysis. Summarizing and reviewing content, rather than creating it, is one of the strongest uses for Large Language Model systems.

- AlsoAsked API: AlsoAsked is the only tool with an async/sync API which allows us to programmatically query and access People Also Ask data in any language/region supported by Google.

Why this approach is so powerful

People Also Ask (PAA) data

Typical PAA result for [how to change car battery]


We’re using PAA data for this project because it has several distinct advantages over other types of keyword data:

Intent clustering by Google

Google uses PAA boxes to help users refine queries, but they also serve as an induction loop of interaction data for Google to understand what users want from a query on average.

The term ‘intent’ generally refers to the overall goal a user wants to complete, and this intent can consist of several searches. Google’s research has shown that for complex tasks, it takes, on average, eight searches for a user to complete a task.

In the above example, Google knows that when users have the intent of learning how to change a car battery, one of the most common searches they will perform on this journey is asking which terminal to take off first.

We also know that Time To Result (TTR) is one of Google’s metrics for measuring its own performance. It’s essentially how quickly a user has completed their mission and fulfilled their intent. Therefore, it makes sense that we can improve our content and reduce the TTR by including searches that are in close ‘intent proximity’ to the topic of our article.

If we can make the content more useful, we’re improving its chances of ranking well. No other source of keyword data can provide such detail on queries that come up as ‘zero volume’ keywords on traditional research tools.

Recency

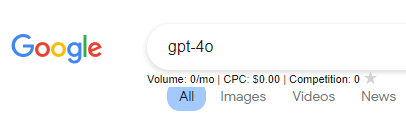

No other sources of search data give queries and updates as quickly as People Also Ask data. As I write this, GPT-4o was released 4 days ago. However, major keyword research tools (incorrectly) still say there are 0 searches for “GPT-4o”:

Keywords Everywhere showing 0 monthly searches for “gpt-4o”

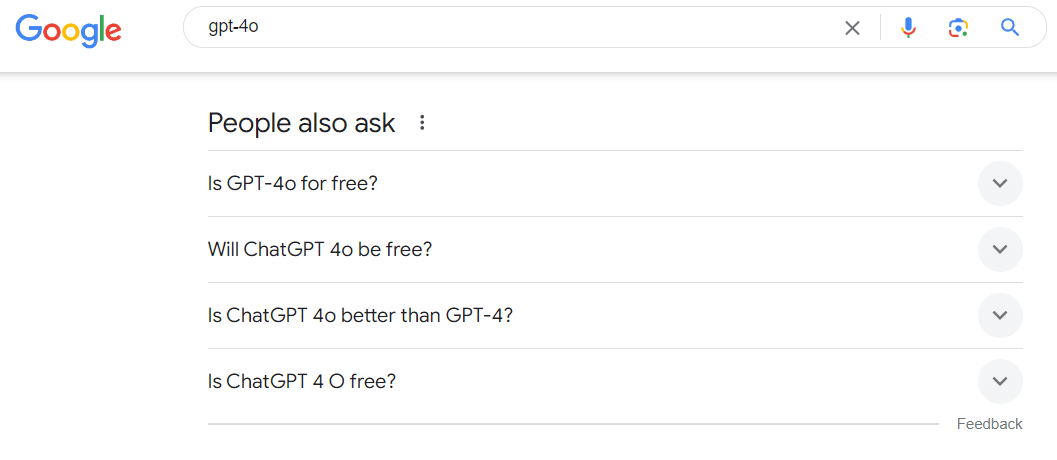

For the same search term, you can see that Google’s People Also Ask feature has already been updated with numerous queries about GPT-4o, asking if it’s free and how it’s better.

PAA results for GPT-4o

Being the first to publish on a particular topic is a huge advantage in SEO. Not only are you almost guaranteed to rank if you’re one of the first sites to produce the content, but there is usually an early flurry of links around new topics that go to these sites that will help you sustain rankings.

The recency of the data also means it’s an excellent way to see if your content needs updating to align with the current search intent, which is not static.

Step-by-step approach

1. Update Screaming Frog to v20.1 or later

Before we begin, it’s worth checking that you have the latest version of Screaming Frog. CustomJS was introduced in v20.0, and since v20.1 the AlsoAsked + ChatGPT CustomJS is packaged with the installer, so you don’t need to manually add it.

Screaming Frog update menu

Screaming Frog can update directly from the program while only one instance is running. To find this option, go to Help > Check for Updates, which will require a restart.

2. Crawl site URLs

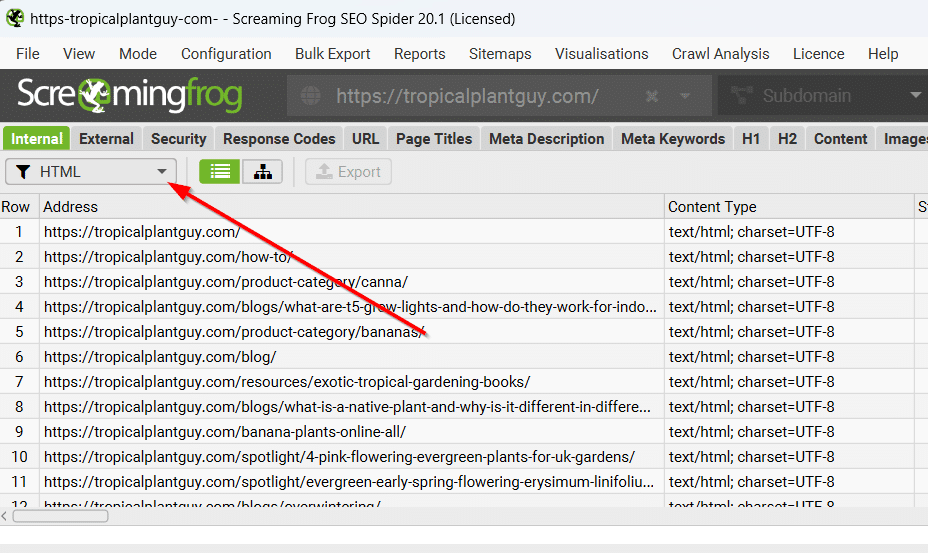

Although we will not run this process on all URLs, we need a list of URLs to choose from. The easiest way to achieve this is to start a standard crawl of your website with Screaming Frog and select the HTML filter to view pages.

HTML Filter in Screaming Frog

If your site requires client-side JavaScript to render content and links, don’t forget to go into Configuration > Spider > Rendering and change Rendering from Text Only to JavaScript.

3. Select content URLs

Although this process can work on all different types of pages, it tends to offer the most value on informational pages. We must also consider that each URL we query will use more OpenAI tokens and AlsoAsked credits.

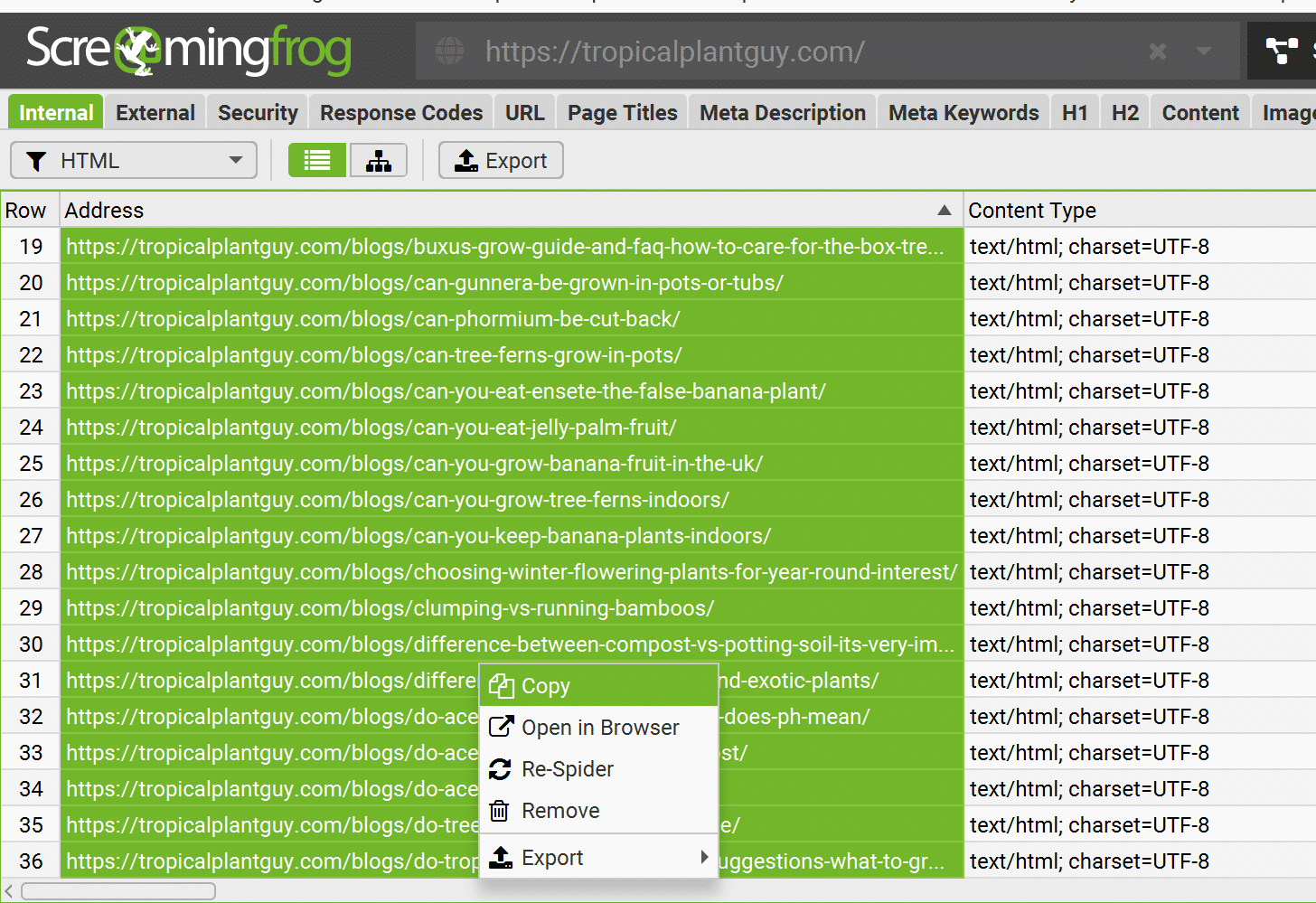

For this reason, I would recommend starting with your content URLs. For this example website, I will look at blog posts, which I know all have /blogs/ in the URL.

Screaming Frog offers a quick way to show only these URLs by typing ‘/blogs/’ into the filter box at the top right.

Filtering to URLs that contain /blogs/

Your URL pattern may differ, and it doesn’t matter if there is no obvious URL pattern, as Screaming Frog offers a powerful Custom Search to filter based on page rules.
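If you export the full crawl instead of filtering in the interface, the same selection is easy to reproduce in a few lines. The Python sketch below is only an illustration: the function name `select_content_urls` and the example.com URLs are made up for this example, and the `/blogs/` fragment is simply the pattern used on the example site.

```python
from urllib.parse import urlparse


def select_content_urls(urls, path_fragment="/blogs/"):
    """Return URLs whose path contains the fragment, in order, without duplicates."""
    seen = set()
    selected = []
    for url in urls:
        path = urlparse(url).path
        if path_fragment in path and url not in seen:
            seen.add(url)
            selected.append(url)
    return selected


# Hypothetical crawl output; replace with your own exported URL list.
crawled = [
    "https://example.com/",
    "https://example.com/blogs/how-to-change-a-car-battery",
    "https://example.com/products/battery-charger",
    "https://example.com/blogs/how-to-change-a-car-battery",
    "https://example.com/blogs/jump-starting-a-car",
]

content_urls = select_content_urls(crawled)
print("\n".join(content_urls))
```

Matching on the URL path rather than the whole string avoids accidental hits on query strings or hostnames that happen to contain the same text.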

For this example, I will simply select and Copy the URLs I am interested in, although it would also be possible to export them to a spreadsheet if you have a large amount you want to work through.

Copy or export the URLs you want to run the analysis on

4. Import CustomJS

The new CustomJS option can be found under the Configuration > Custom > Custom JavaScript menu.

Custom JavaScript options

This will open the Custom JavaScript window. In the bottom right, click the + Add from Library button to load a list of pre-packaged custom JavaScript that ships with Screaming Frog.

Scroll down and select (AlsoAsked+ChatGPT) Find unanswered questions and click Insert.

5. Configure API keys

We’re not quite ready to go yet. We now need to edit the imported JavaScript with our API keys — but don’t worry, that’s really easy!

Once the CustomJS is imported, you need to click on this edit icon:

The easy-to-miss edit JS button

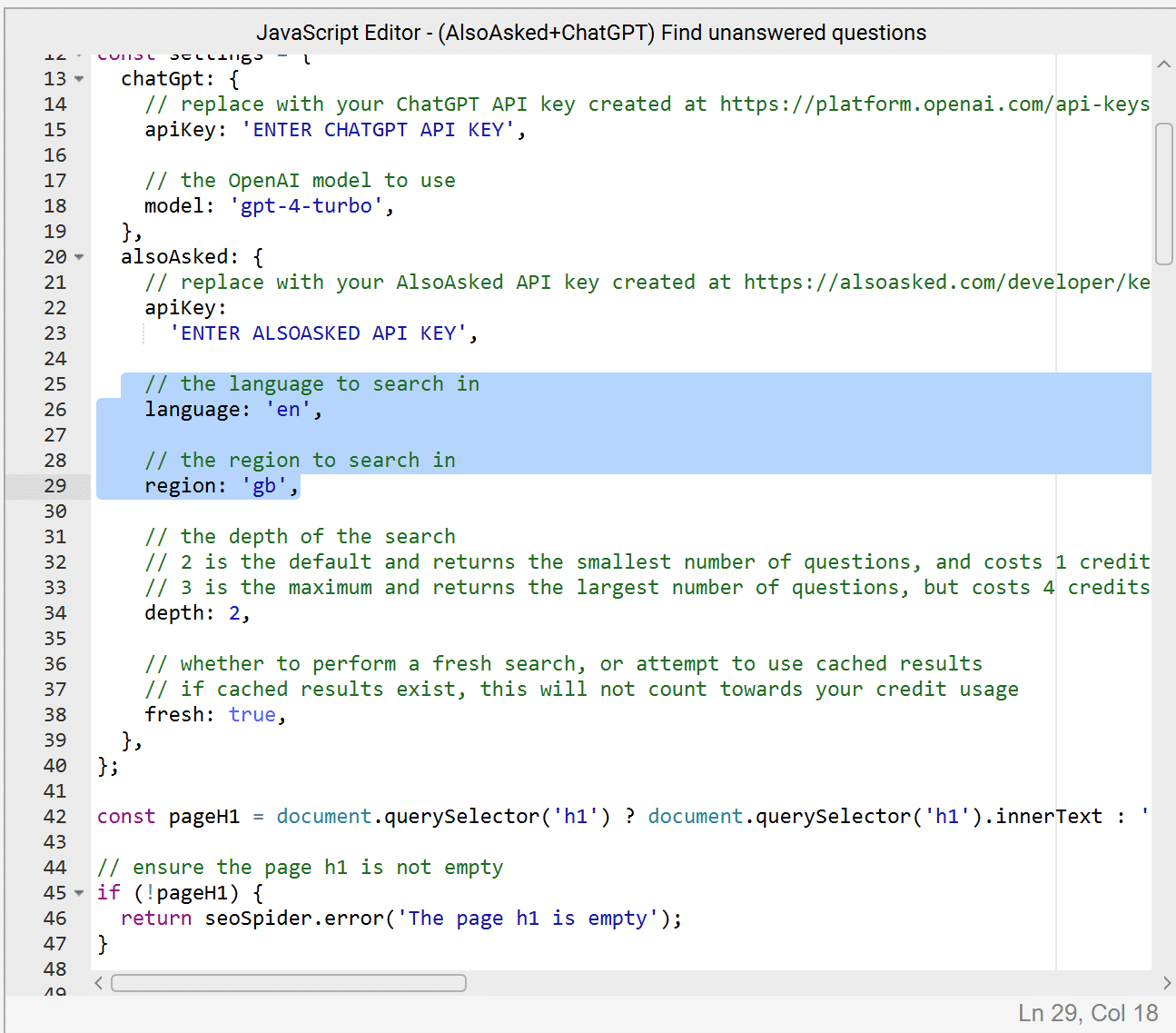

You should now see the JavaScript code in the editor window. There are two parts you need to edit, which are in capitals: ‘ENTER CHATGPT API KEY’ and ‘ENTER ALSOASKED API KEY’.

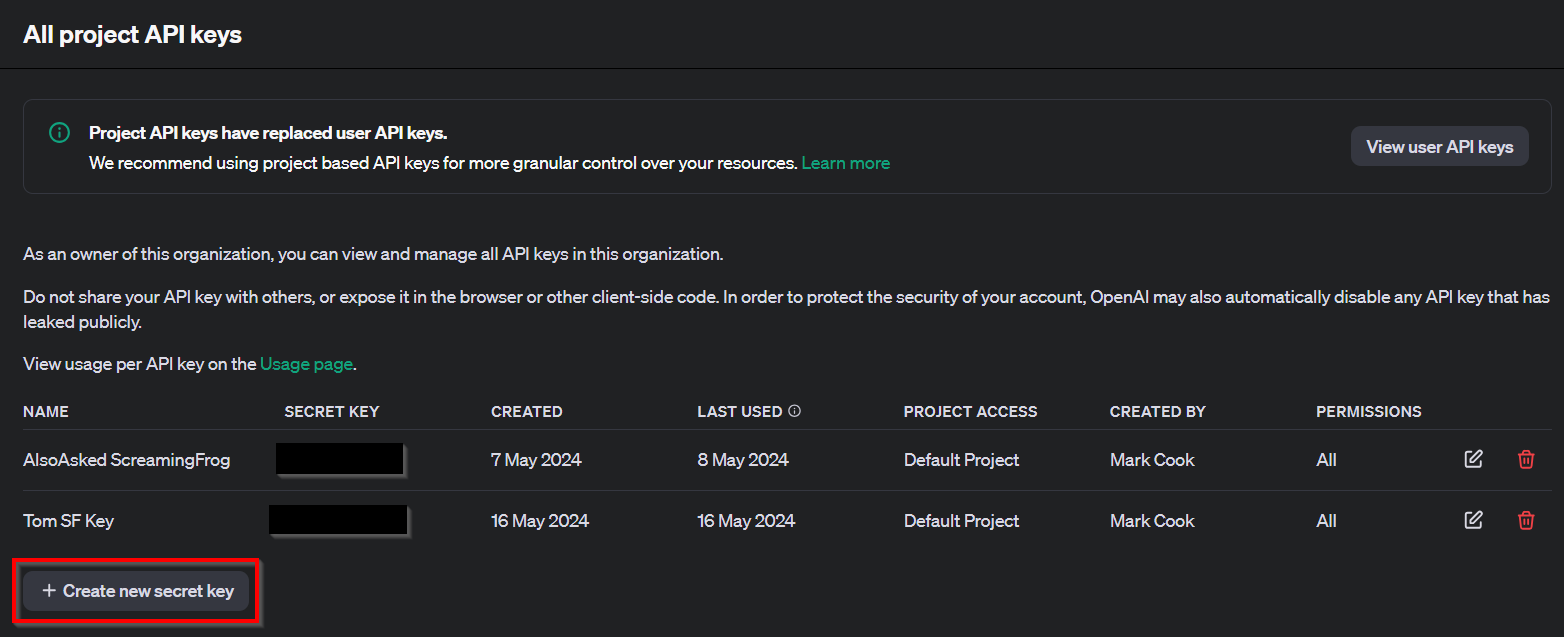

OpenAI API key

You can create an OpenAI key from https://platform.openai.com/api-keys

When you click Create new secret key, you’ll be prompted for a name and the project it’s attached to. You can call these whatever you like. OpenAI will put a secret key (be careful never to share this!) on your clipboard, which you can paste into your Screaming Frog CustomJS edit window.

The cost of ChatGPT will depend on token usage, which also depends on which pages we provide. Before deploying anything, it’s worth double-checking the spending limits you have set up to make sure you don’t unexpectedly go over budget.

AlsoAsked API key

AlsoAsked API access requires a Pro account, which provides 1,000 queries every month, although you can buy additional credits if you need to do more.

The costs here are much easier to predict, with a single URL costing $0.06, or as low as $0.03 with bulk Pay As You Go credits. This means you could fully analyze 1,000 URLs of content for as little as $30, work that would take days to do manually.
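To sanity-check a budget before a large crawl, the arithmetic can be written down as a tiny helper. This is a rough sketch that assumes one AlsoAsked query per URL and uses the per-URL prices quoted above; the function name is made up for illustration, and OpenAI token costs are not included.

```python
def estimate_alsoasked_cost(url_count, cost_per_query=0.06):
    """Estimate AlsoAsked spend in dollars, assuming one query per URL."""
    return round(url_count * cost_per_query, 2)


standard = estimate_alsoasked_cost(1000)    # $0.06 per URL
bulk = estimate_alsoasked_cost(1000, 0.03)  # bulk Pay As You Go rate

print(f"Standard: ${standard:.2f}, bulk: ${bulk:.2f}")
```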

With a Pro account, you can create an API key.

Once again, give the key a name you will recognize, leave the ‘environment’ set to ‘Live’ and click ‘Create key’.

This will generate an API key to paste into the Screaming Frog CustomJS edit window.

6. Review settings

Configure PAA language and region

AlsoAsked supports all of the same languages and regions that Google offers, so if your website is not in English or does not target Great Britain, you can configure these two settings within the JavaScript from line 25 onwards.

You can use any ISO 639 language codes and ISO 3166 country codes. Google’s coverage with People Also Ask data is much lower in non-English languages.

Occasionally, English results will be returned as a fallback if no results for the region/language combination are provided, as there are often intent commonalities.

Customize the ChatGPT prompt

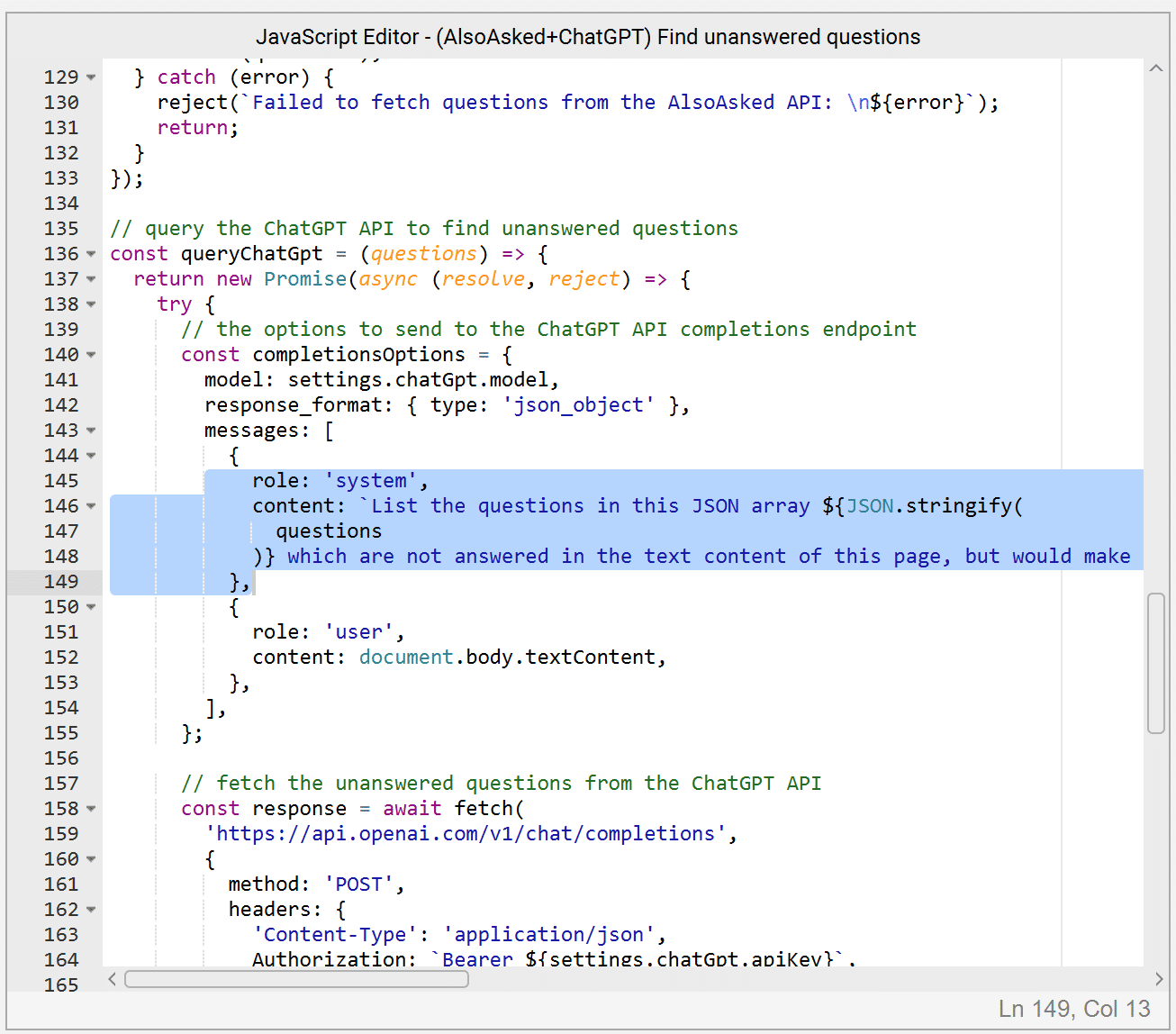

The current prompt used in the script for ChatGPT is:

- List the questions in this JSON array ${JSON.stringify(questions)} which are not answered in the text content of this page, but would make sense to answer in context to the rest of the content. Output the questions that are not answered in a JSON array of strings within an object called unanswered_questions.

There may be ways to improve output with more specific prompting related to your content by editing the part of the prompt in bold. This can be worth playing around with and seeing where you get the best output for your website.

To improve the output of the prompt, we have also asked ChatGPT to filter not only the unanswered questions but also the unanswered questions that might make sense to answer given the rest of the content on the page.

Warning: The beginning and end of the prompt, which are not highlighted in bold, specify specific formats, variables and objects that are used elsewhere in the script. If you change these without adjusting the script, it will likely break.

The prompt starts at around line 146


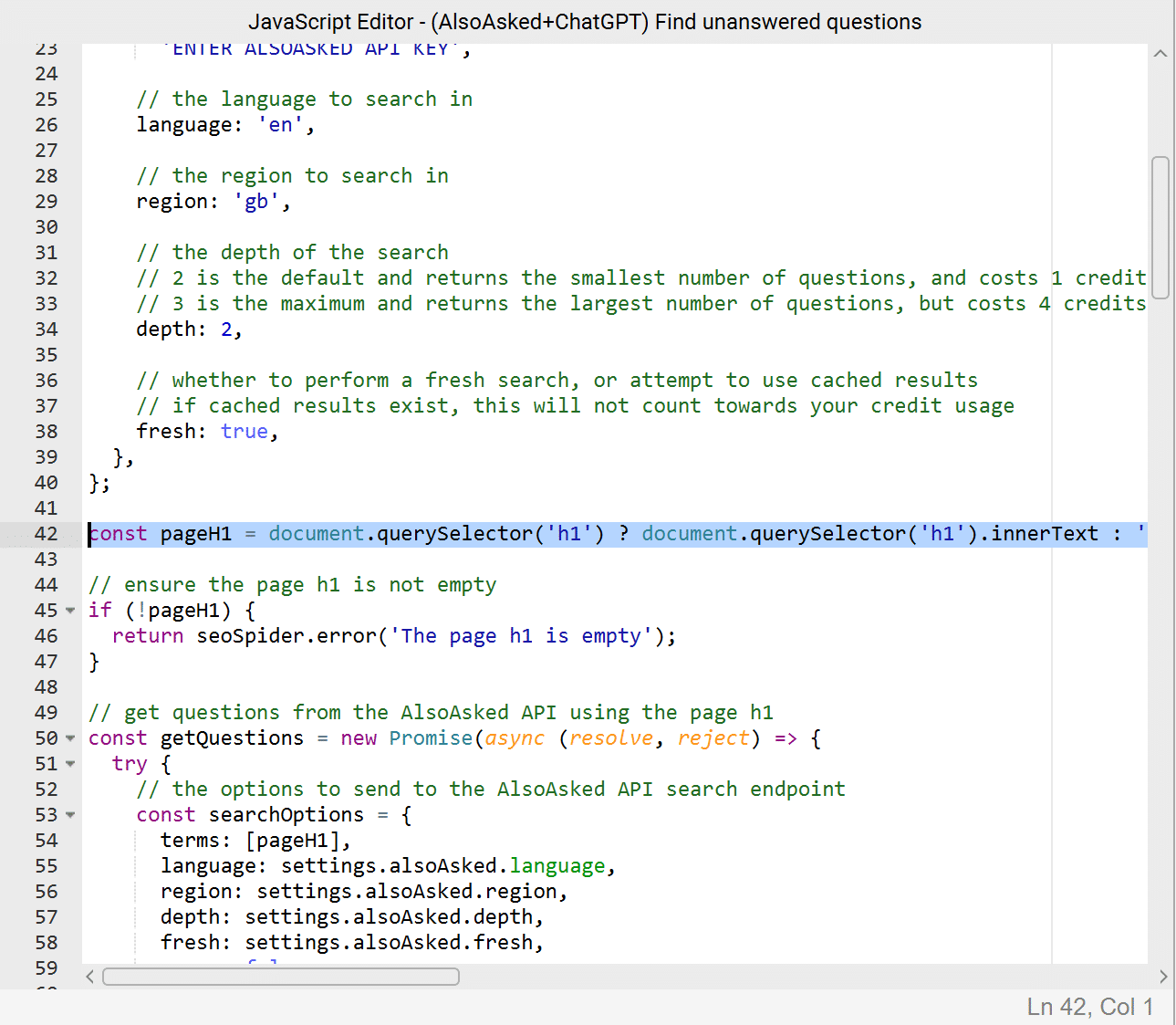
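To make the warning about the prompt format more concrete, here is a rough Python sketch of why the fixed parts matter. It is not the packaged script, which is JavaScript; the function names are made up for illustration and no API call is made. The prompt wording is copied from the quote above, and the reply is a hard-coded example of the JSON shape the prompt asks for.

```python
import json


def build_prompt(questions):
    """Assemble a prompt in the shape quoted above from a list of PAA questions."""
    return (
        f"List the questions in this JSON array {json.dumps(questions)} "
        "which are not answered in the text content of this page, "
        "but would make sense to answer in context to the rest of the content. "
        "Output the questions that are not answered in a JSON array of strings "
        "within an object called unanswered_questions."
    )


def parse_unanswered(response_text, questions):
    """Return unanswered questions from a reply, or [] if the format is unexpected."""
    try:
        data = json.loads(response_text)
    except json.JSONDecodeError:
        return []
    if not isinstance(data, dict):
        return []
    items = data.get("unanswered_questions", [])
    if not isinstance(items, list):
        return []
    # Assumption for this sketch: drop anything that was not in the input list,
    # so a reply cannot introduce questions that PAA never returned.
    allowed = set(questions)
    return [q for q in items if isinstance(q, str) and q in allowed]


questions = [
    "Which battery terminal do you take off first?",
    "How long does a car battery last?",
]
prompt = build_prompt(questions)

# Hard-coded example reply in the requested format (not real model output).
reply = '{"unanswered_questions": ["How long does a car battery last?", "Is GPT-4o free?"]}'
gaps = parse_unanswered(reply, questions)
print(gaps)
```

If the object name `unanswered_questions` or the JSON array format were changed in the prompt without changing the parsing code, the parser here would simply return an empty list, which is the kind of silent breakage the warning is about.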

Check H1 inputs

We are prompting for People Also Ask data with the contents of the Header 1 (h1) on the target URL.

This means that if the page does not have a readable H1 tag, the script will fail, but I’m sure that, as we’re all SEOs, nobody will be in that position.

The H1 selector is on line 42

With a little coding, it is possible to change this variable to pass other parameters, such as a title tag, to fetch People Also Ask data, although our experiments have shown that H1s tend to be the best bet as they are a good description of page content.

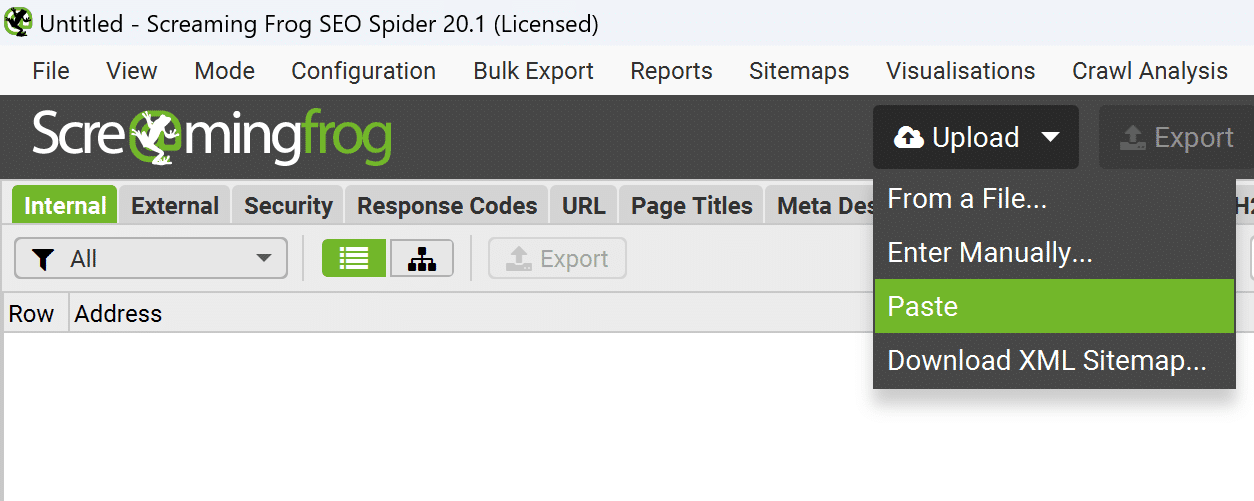
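As a rough illustration of the H1 lookup and the title-tag alternative mentioned above, the Python sketch below picks the first H1 on a page and falls back to the title when no readable H1 exists. It is not the packaged script (which is JavaScript and uses its own selector); the class and function names are made up for this example, and the fallback behaviour is an assumption about what you might want rather than what the script does.

```python
from html.parser import HTMLParser


class HeadingParser(HTMLParser):
    """Collect the text of the first <h1> and the first <title> in a document."""

    def __init__(self):
        super().__init__()
        self._current = None   # tag currently being captured, if any
        self._done = set()     # tags already captured once
        self.parts = {"h1": [], "title": []}

    def handle_starttag(self, tag, attrs):
        if tag in self.parts and tag not in self._done and self._current is None:
            self._current = tag

    def handle_endtag(self, tag):
        if tag == self._current:
            self._done.add(tag)
            self._current = None

    def handle_data(self, data):
        if self._current:
            self.parts[self._current].append(data)


def query_seed(html):
    """Return the H1 text, else the title text, else None."""
    parser = HeadingParser()
    parser.feed(html)
    parser.close()
    for tag in ("h1", "title"):
        text = " ".join("".join(parser.parts[tag]).split())
        if text:
            return text
    return None


page = (
    "<html><head><title>Car batteries | Example</title></head>"
    "<body><h1> How to change a <em>car battery</em></h1></body></html>"
)
print(query_seed(page))
```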

7. Run List crawl for selected URLs

Use the Clear button at the top of the Screaming Frog interface to start a new crawl, and then select the Mode menu and change the crawl type to List.

Selecting List crawl type

Important: As you will be running Custom JavaScript, you must ensure your rendering mode is set to JavaScript in Configuration > Spider > Rendering or the script will not execute.

The Upload button will now let you import your list of URLs. You can simply select Paste if you copied your URLs to the clipboard as I did. If you exported them to a file, select From a File…

Importing selected URLs for new crawl

8. View results

Your results will be in the Custom JavaScript tab, which you can find by clicking the down arrow to the right of the tabs and selecting ‘Custom JavaScript’.

Unanswered questions as determined by ChatGPT

Here, you will find your URLs, along with a list of questions based on PAAs that ChatGPT has determined have not been answered within your content and that might make sense to answer.

Once the crawl is complete, you can use the Export button to produce a convenient spreadsheet for review.

Fit this in with your current SOPs

There are many ways to gather data to improve your content, from qualitative user feedback to looking at quantitative metrics within analytics. This is just one method.

This particular methodology is extremely useful because it can give some inspiration based on actual data while leaning into the strength of LLMs by summarising instead of generating content to put it into context.

With some extra tooling, it would be possible to build these kinds of checks in as you are producing content and even on scheduled crawls to alert content creators when new gaps appear.
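As one idea of what that extra tooling could look like, the Python sketch below compares the results of two crawls and reports only the questions that are new since the last run. The data shape (a dictionary mapping each URL to its list of unanswered questions) and the function name are assumptions made for this example; you would need to load your own exports into that shape first.

```python
def new_gaps(previous, current):
    """Return, per URL, the unanswered questions in `current` that were not in `previous`."""
    changes = {}
    for url, questions in current.items():
        known = set(previous.get(url, []))
        added = [q for q in questions if q not in known]
        if added:
            changes[url] = added
    return changes


# Hypothetical results from two scheduled crawls.
battery_url = "https://example.com/blogs/how-to-change-a-car-battery"
previous = {battery_url: ["How long does a car battery last?"]}
current = {
    battery_url: [
        "How long does a car battery last?",
        "Which battery terminal do you take off first?",
    ],
    "https://example.com/blogs/jump-starting-a-car": [],
}

alerts = new_gaps(previous, current)
for url, questions in alerts.items():
    print(url, questions)
```

Reporting only the difference keeps alerts short, so content creators see new gaps rather than the full list on every run.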

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing

Category seo news | Tags:


How important are backlinks for SEO in 2024?

Written on May 24, 2024 at 9:34 am, by admin

SEO today is more complex and challenging than at any other point.

Once upon a time, not too long ago, links were the most important thing in SEO.

But is that still true?

This article will examine the significance of links in SEO today. It will include various perspectives within the industry, analyze empirical evidence and consider Google’s recent statements and updates on the role of links in their ranking algorithms.

Backlinks and SEO: Confusion in today’s landscape

Links matter. The volume and quality of external links to a webpage can influence its search rankings.

Beyond the number of links, other factors that play a role include:

- Link diversity (do your links come from multiple sites?).

- Relevance.

- Quality.

- Authority.

Technical aspects and Google’s preference for editorial (non-paid) links further impact effectiveness.

Links create PageRank, which helps elevate keyword rankings. Although Google no longer shows PageRank publicly, it still influences its algorithm.

How much? Opinions vary. Some believe PageRank is crucial, while others think its importance is all but dead.

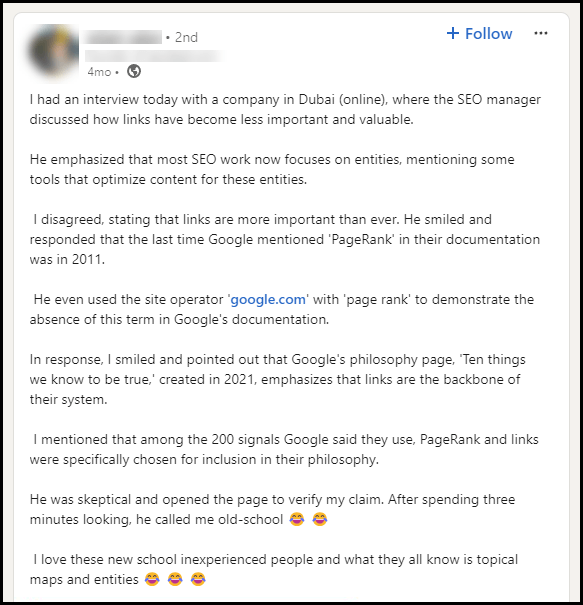

Just check out this LinkedIn post:

Such posts can lead to interesting discussions that surface interesting views:

Opinions on the importance of links vary widely, leaving many confused.

If you’re among the confused, know you aren’t alone.

So – are links as crucial as sellers claim? Or are links an industry scam costing thousands for unnecessary links?

The truth, as is typically the case, is somewhere in between.

Let’s further examine these perspectives and measure them against the facts.

Google’s statements and updates

Before we proceed, it’s probably prudent to check recent comments from Google.

Let’s start with comments from Gary Illyes, a webmaster trends analyst at Google, from September, as reported here on Search Engine Land:

- “I think they [links] are important, but I think people overestimate the importance of links. I don’t agree it’s in the top three. It hasn’t been for some time.”

Then, in March, Google released a Spam Policy update, removing the word “important” in reference to links as a ranking factor. This change coincided with the March 2024 core algorithm update.

In April, there was a bit of a storm when Illyes indicated that links were less important at a search conference:

- “We need very few links to rank pages… Over the years we’ve made links less important.”

John Mueller, a Google search advocate, also made these comments:

- “My recommendation would be not to focus so much on the absolute count of links. There are many ways that search engines can discover websites, such as with sitemaps. There are more important things for websites nowadays, and over-focusing on links will often result in you wasting your time doing things that don’t make your website better overall.”

Some parts of Google’s documentation still mention links and PageRank as core aspects of its ranking systems.

Within their documentation, Google states that PageRank is:

- “one of our core ranking systems used when Google first launched. Those curious can learn more by reading the original PageRank research paper and patent. How PageRank works has evolved a lot since then, and it continues to be part of our core ranking systems.”

So, even if Google is reducing the prominence of link data within its ranking algorithms, it seems that will be a gradual sunset.

And here’s what Google’s philosophy page says:

- “We assess the importance of every web page using more than 200 signals and a variety of techniques, including our patented PageRank algorithm, which analyses which sites have been ‘voted’ to be the best sources of information by other pages across the web. As the web gets bigger, this approach actually improves, as each new site is another point of information and another vote to be counted.”

This suggests that links and PageRank are still important in 2024. However, if you read further:

- “We never manipulate rankings to put our partners higher in our search results and no one can buy better PageRank. Our users trust our objectivity and no short-term gain could ever justify breaching that trust.”

The statement that “no one can buy better PageRank” indicates that Google aggressively defends this part of its ranking algorithm.

It also suggests that Google believes, or wants us to believe, that paid link building services are ineffective. If such services were effective, Google couldn’t claim that no one can buy better PageRank.

This doesn’t mean links are unimportant. But Google invests heavily to make simple link building strategies ineffective.

Despite Google’s efforts to reduce reliance on links and prevent backlink exploitation, the SEO industry still promotes link building as a valid tactic. The industry continues to generate business and educate those they see as uninformed.

These practices will persist unless Google makes radical changes to its ranking algorithms and clearly communicates them to the industry.

Google aims to move away from links to better rank content, but what could replace PageRank?

For now, nothing.

However, with Google’s AI capabilities, this could change.

If Google can evaluate content usefulness directly without external links, they won’t need to rank webpages anymore. Instead, responding to search queries will become an exercise in AI-driven, technical content extraction and surfacing.

This shift seems inevitable.

Empirical evidence and studies

The key question to ask when it comes to your SEO efforts is: “Do links work right now?”

Let’s look at findings from Ahrefs, Backlinko and MonsterInsights:

Study 1: Ahrefs backlink statistics and findings

Ahrefs – a platform for backlink analysis, keyword indexing, content optimization, and cloud-based technical SEO auditing – updated its SEO Statistics for 2024 on March 18.

Ahrefs found a positive correlation between the number of sites linking to a page and that page’s ranking and SEO traffic performance. Most top-ranked pages gain between 5% and 14% more followed links per month.

Many other stats from Ahrefs reference publishers like Authority Hacker and include survey-based data.

Study 2: Backlinko search ranking findings

“A site’s overall link authority (as measured by Ahrefs Domain Rating) strongly correlates with higher rankings,” according to a Backlinko study, updated on March 24.

Google doesn’t use SEO tool scores in its algorithms, but it still uses PageRank. Ahrefs Domain Rating is designed to simulate PageRank, so a correlation is expected if Ahrefs is accurate.

Backlinko’s study also said:

- “Pages with lots of backlinks rank above pages that don’t have as many backlinks. In fact, the #1 result in Google has an average of 3.8x more backlinks than positions #2-#10.”

However, correlation doesn’t imply causation. High rankings can make a page more visible, leading to more backlinks and citations. Still, the correlation between link authority and higher rankings is strong.

- “We found no correlation between page loading speed (as measured by Alexa) and first page Google rankings.”

The point above is slightly misleading. Google doesn’t adjust rankings based on Alexa page-speed ratings. Instead, they use Core Web Vitals to assess page performance. Therefore, this statement might be based on poor input data.

On to the next point:

- “Getting backlinks from multiple different sites appears to be important for SEO. We found the number of domains linking to a page had a correlation with rankings.”

The importance of link diversity is generally accepted within the search community.

Study 3: Monster Insights Ranking Factor Findings

Monster Insights, a WordPress plugin provider, determined that backlinks “have a huge influence on Google’s ranking algorithm,” in January.

Monster Insights concluded that sites with higher volumes of backlinks usually achieve higher Google ranking positions (on average):

- “All in all, backlinks from high authority websites are more valuable, and will boost your rankings more, than links from lower rated sites. Acquiring these links sends a signal to Google that your content is trustworthy, since other high-quality websites vouch for it.”

Making sense of link data and associated claims

While most studies show a correlation between links, rankings and search traffic, they don’t address causality.

Do links cause pages to rank higher, or do high-ranking pages attract more links?

This has been a major debate in SEO for over a decade.

Some have had success with link building, while others have seen no results or even received a manual action from Google.

I trust Ahrefs’ study the most because it provides tools and data without selling link building services, reducing bias. They have a massive index of over 14 trillion live backlinks.

Understanding the role of backlinks in 2024

Your main takeaway is that links still matter now.

However, traditional link building, which often produces poor-quality links, has been ineffective for some time.

Success requires creative ideas and real-world events that garner high-tier editorial coverage.

Don’t “build” links. Earn and attract links. Producing links isn’t as simple as stacking blocks.

While backlinks are becoming less important, they are still a key part of SEO.

Adopting a holistic and quality-focused approach in link acquisition and broader SEO strategies will likely yield the best results in this changing landscape.

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing


TikTok debuts AI-powered ad automation tools

Written on May 24, 2024 at 9:34 am, by admin

TikTok unveiled a suite of new AI and automation tools for advertisers at its TikTok World event, aiming to make it easier for brands to create effective ads at scale on the viral video platform.

The big picture. TikTok is leaning into AI and automated ad tech as it looks to cement itself as more than just a media platform, but as a key “business partner” driving sales and ROI for brands.

New tools rundown. Four tools to take note of:

- TikTok One – A centralized hub giving advertisers access to creators, creative tools, partners and measurement capabilities.

- TikTok Symphony – An umbrella for TikTok’s AI ad tools, including support for:

- Script writing

- Video production

- Creative asset optimization

- Automated ad optimization. Using AI to optimize ad creative, targeting, budgets and more to hit set campaign goals like purchases or awareness.

- TikTok shop automation. AI tools to optimize ad spend, bidding, creative and other e-commerce costs for TikTok Shop ads.

Why we care. TikTok is a powerful platform for driving sales and ROI. These new AI and automation tools can significantly enhance ad creation and effectiveness.

What they’re saying. Adrienne Lahens, TikTok’s global head of creator marketing solutions, said:

- “We’re building for the future of creative, and we’re inviting brands to come and test and learn with us.”

Early results. An Ohio wellness brand piloting TikTok’s Shop automation tools saw 136% higher sales and 4x higher ROI while saving 10 hours per week, TikTok claims.

U.S. ban. TikTok declined to comment on whether advertising has been impacted by the threat of a potential U.S. ban over data security concerns.

The bottom line. TikTok is making an aggressive push into AI-driven ad automation as it tries to position itself as an essential sales and marketing channel rivaling Google and ad tech players.

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing


Broad vs. deep expertise: How to decide which is right for you

Written on May 23, 2024 at 6:32 am, by admin

You can either have broad or deep subject matter expertise. The choice is yours.

If you’re in the early or middle stage of your career, then this is the perfect time to decide whether you want to go broad or deep.

Here’s how to think about this choice and decide what’s best for your search marketing career.

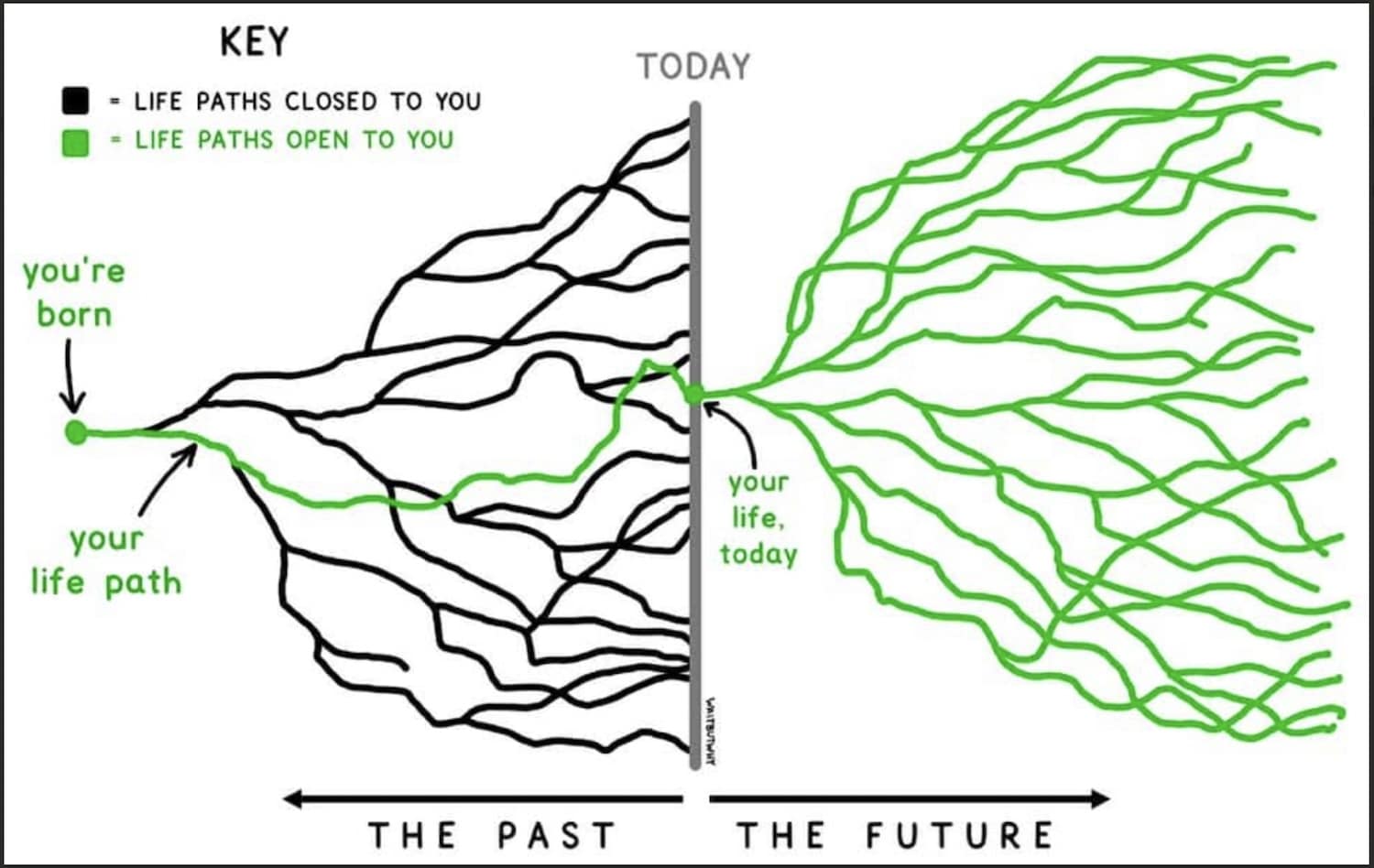

The T-shaped marketer

I base my approach on the “T-shaped marketer” model: an expert in one area (vertical bar) with broader knowledge across related disciplines (horizontal bar).

This allows for specialization and versatility – a valuable combination in marketing.

There is no wrong choice. Circumstances often dictate the decision.

My starting point for these decisions is the following concept:

I revisit this concept frequently throughout the year, for myself and others.

Remember:

- You have around 40 working years.

- Even if you made a terrible call, there is time to correct it.

- There are far more things outside of your control than in your control.

- Sometimes you are lucky, sometimes you are not.

- Companies can go through explosive growth or go out of business.

The case to be a deep subject matter expert

- Deep expertise: Having an in-depth understanding of the subject matter has advantages. Knowing how to navigate strategically and technically can unlock unique opportunities that you’d be qualified for, but others would struggle with. This includes grasping the intricacies of algorithms, platform changes, staying updated with constant changes, and developing highly effective strategies.

- Demand: Generalists are more common, so demand often drives specialization. You could be an expert in a subject, vertical, or specific problem (e.g., technical SEO for Shopify sporting goods). When that problem arises, you’re well-positioned to solve it. Demand can also vary by market or company. For instance, an agency winning paid search work may spur opportunities in that area.

- Thought Leadership: Deep expertise provides a unique market perspective, enabling thought leadership. You can anticipate client needs and future trends. Specialists often become recognized authorities, writing for publications or speaking at conferences.

The case to be broad

- Versatility: Broad skills allow you to participate in diverse conversations and connect dots across disciplines. Many tactics share similarities, like keyword research for SEO and PPC, or campaign structures for paid social and search. These commonalities make your skillset more adaptable as clients’ and brands’ needs evolve.

- Big picture thinking: Hyper-specialization can make it hard to see the bigger picture. Having broader knowledge helps maintain a higher-level view. This facilitates solving complex problems like budget allocation, creative strategies or optimizing the customer journey.

- Communication and collaboration: Generalists excel at communicating across teams and departments, ensuring cohesive marketing. They can understand various tasks and requirements, helping bring projects together and resolve cross-discipline issues quickly.

5 tips to help you make your decision

1. Assess your strengths and passions

Consider what you genuinely enjoy and excel at.

Do you prefer diving deep into technical details, or do you thrive on variety and collaboration?

Find something you love. Your interests greatly impact job satisfaction.

2. Consider the market

Research the demand and salary trends for specialists and generalists in your area and industry.

These fluctuate as the industry changes and evolves.

Look at companies’ roles (e.g., VP of SEO or VP of Marketing) to gauge the value of specialization or generalization as you advance.

3. Think long term

Many worry that specialization could limit relevance or career growth, with generalist roles seeming more valued.

However, both paths offer opportunities as you advance – and require continuous skill improvement.

Getting paid as a senior specialist (e.g., paid search) may be harder than as a generalist, but true expertise commands a premium.

Focus on what you can control and excel at. It creates opportunities over time, though patience is key. Expertise and skill are eventually recognized and rewarded, even if it’s challenging initially.

4. Talk to people in the field

Network with professionals who have taken different paths.

Ask about their experiences, challenges faced, and rewards reaped.

Their insights can be invaluable when weighing your options.

5. Experiment and explore

Still unsure?

Try different roles or projects to experience specialization and generalization.

This can help you discover what truly resonates.

What’s the right path for you?

There’s no one-size-fits-all answer about whether you should develop broad or deep subject matter expertise.

The best path is the one that aligns with your individual strengths, interests and long-term goals.

Carefully considering these factors can help you make an informed decision that sets you up for a fulfilling and successful marketing career.

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing


Survey: Shoppers open to using AI search to discover products

Written on May 23, 2024 at 6:32 am, by admin

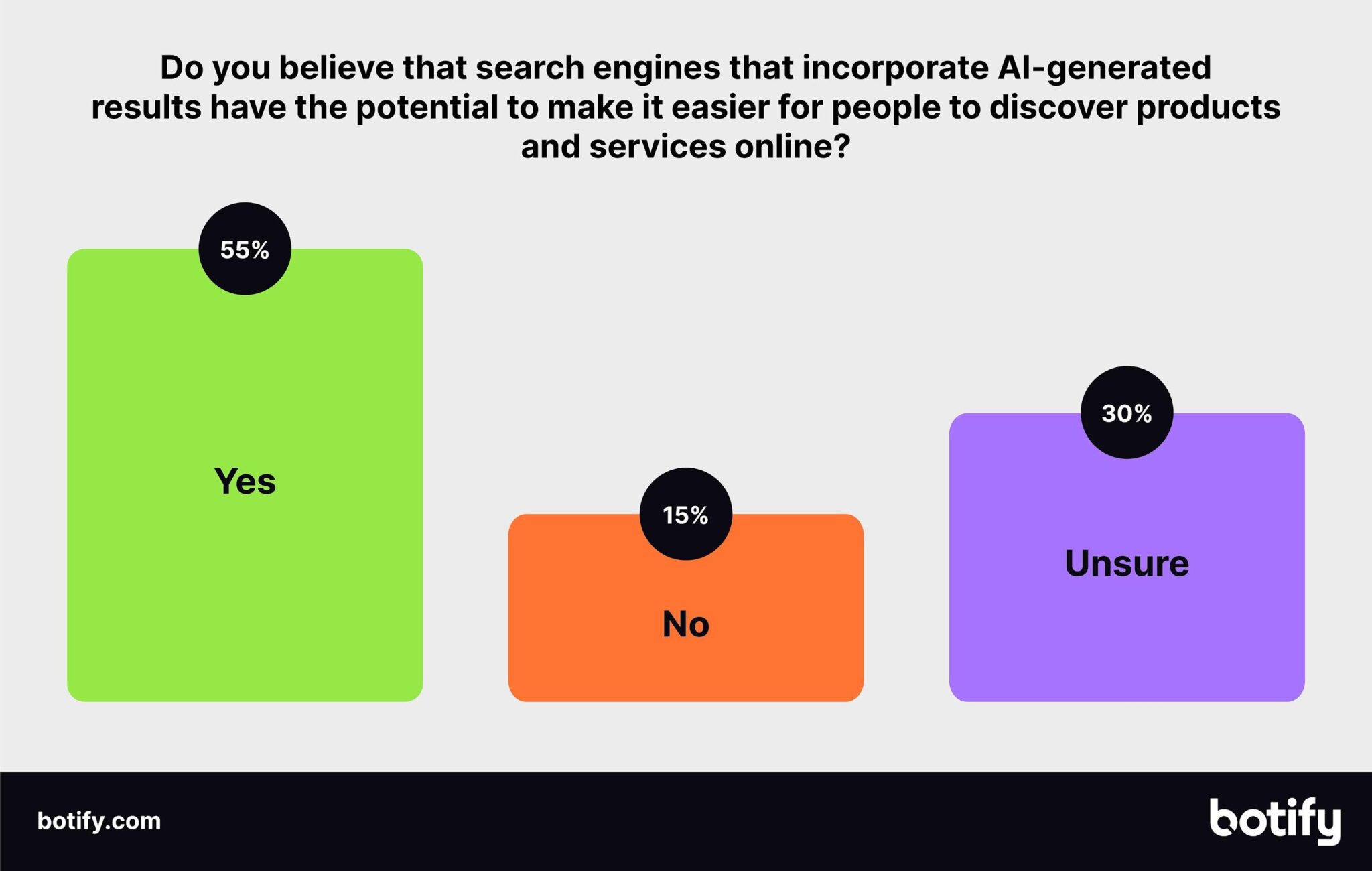

Search engines that incorporate AI-generated results have the potential to make it easier for people to discover products and services, according to a new survey from Botify, shared exclusively with Search Engine Land.

Why we care. Search marketers tend to look at search much differently than everyday Google Search users. So it’s interesting to see what consumers know and think about AI-powered search. The data is a good reminder that user behavior is always slow to change, so don’t expect a quick transition from Classic Search to AI Search.

By the numbers. Some interesting findings from Botify’s survey:

- 44% of respondents were familiar with generative search – 58% of Millennials and 58% of Gen Z were most familiar; 74% of Baby Boomers were unfamiliar with it.

- 37% of respondents tried an AI-driven search engine and found the results more satisfying.

- 34% said the way they search on AI Search hasn’t changed; 28% said they make “more detailed” search queries; 9% said they write longer prompts.

- 56% of respondents, when asked to choose between two results pages for the same query, preferred AI-generated results over traditional search results.

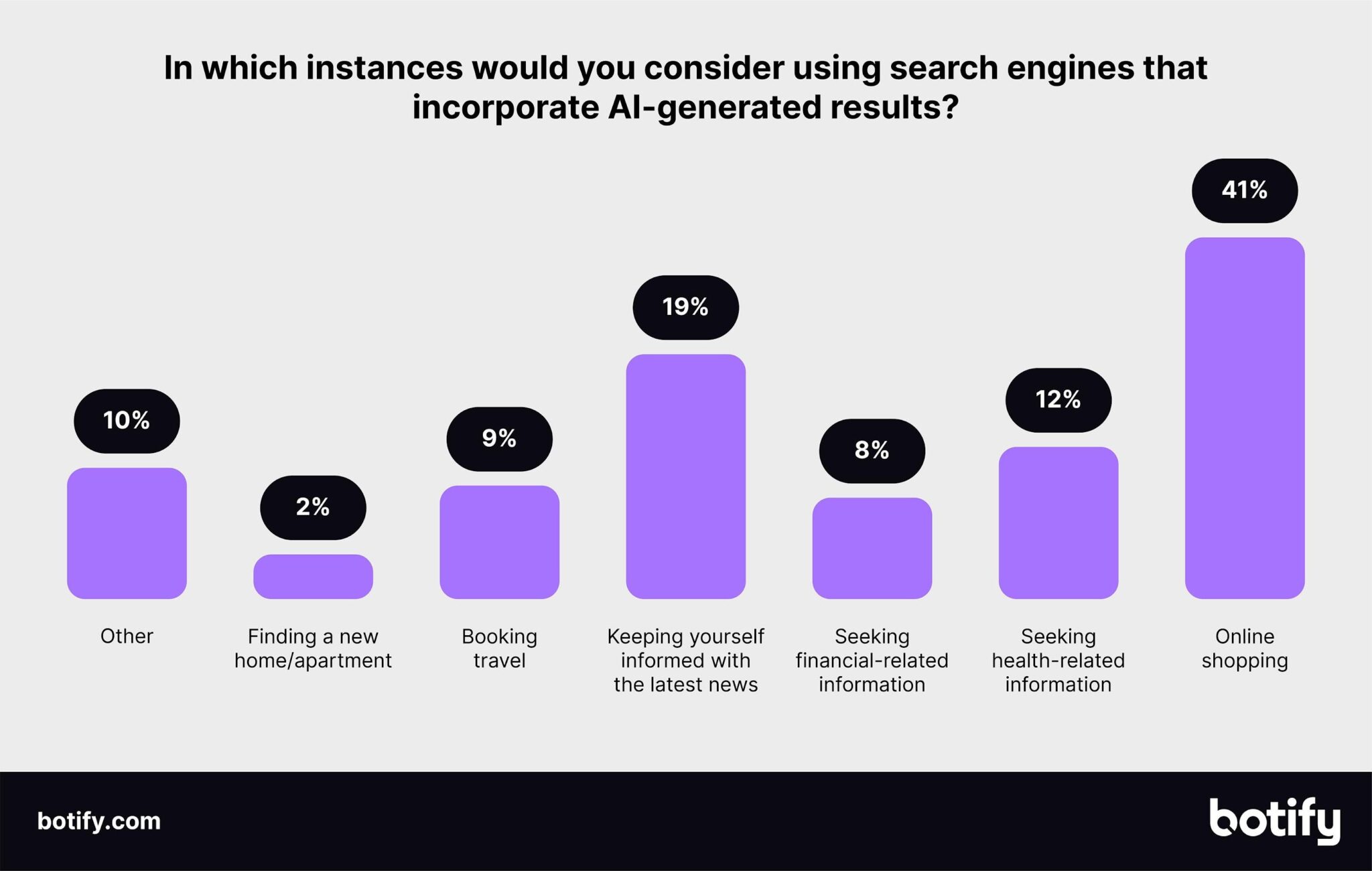

Online shopping. Forty-one percent of respondents said the top reason for using AI search was for online shopping. Other top reasons to use it were for keeping up with the latest news (19%) and seeking health-related information (12%).

- Meanwhile, a majority of respondents (55%) said “Yes,” they believe search engines that incorporate AI-generated results have the potential to make it easier for people to discover products and services.

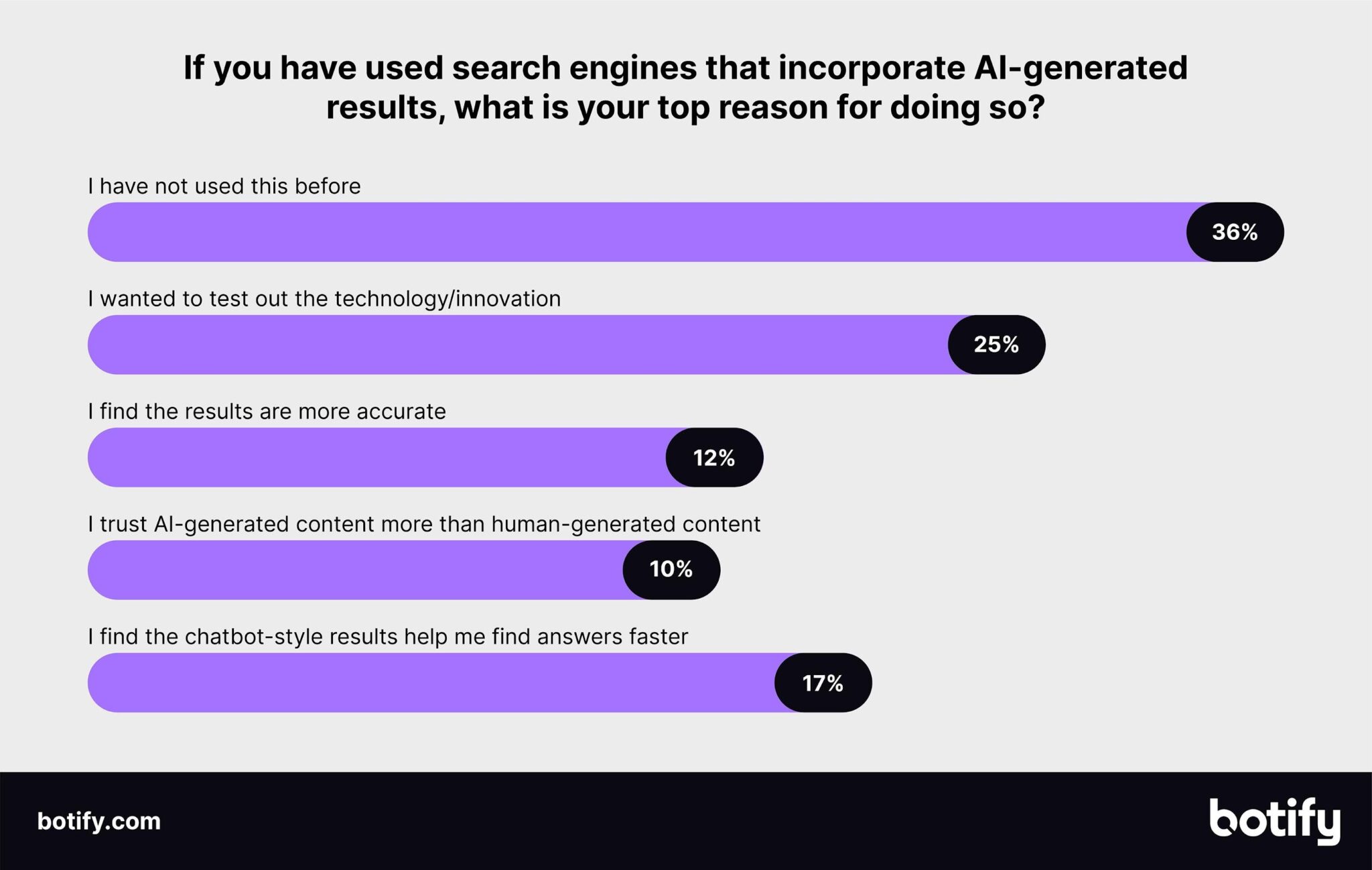

Faster answers. While 36% of respondents haven’t used AI search, 25% of consumers have – and mainly to test out the new technology.

- Meanwhile, 17% said their top reason for using AI-powered search was because the chatbot-style results helped them find answers faster.

About the survey. The data was collected by Dynata, an independent market research company, on behalf of Botify. The survey of 1,000 US respondents, aged 18+, was conducted in February.

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing


Bing Search outages since early this morning

Written on May 23, 2024 at 6:32 am, by admin

Starting at around 1:30am ET, Bing Search and the sites Microsoft Bing powers have been experiencing widespread global outages. As a result, many Bing searches are failing, as are third-party services that rely on Bing Search, such as Copilot for web and mobile, Copilot in Windows, ChatGPT internet search, DuckDuckGo and Ecosia.

Downdetector. According to Downdetector.com, Bing has been having issues since around 1:30am ET.

What Bing’s outage looks like. Here are some screenshots of Bing.com searches failing and of partner sites failing:

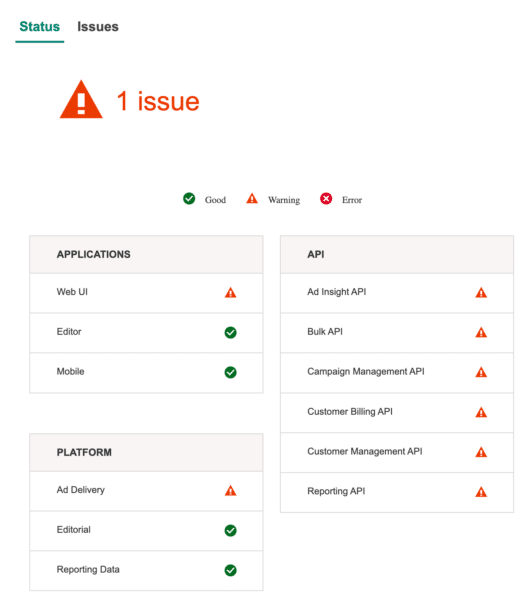

Microsoft Advertising services down. The Microsoft Ad platform server status page shows outages as well.

Why we care. If you rely on getting traffic from Bing or those third parties, you may see a dip in that traffic today. In addition, if you rely on using these services for your job, you may have to switch gears and work on something else until they are restored.

It’s back. Around 6:15am ET, the services appear to be back up and running, at least for me at this moment, although many users are still telling me it is not working for them.

Microsoft update. Microsoft posted an update at around 7:30am ET:

While we isolate the root cause, we're transitioning requests to alternate service components to expedite service recovery. More information can be found in the admin center under CP795190.

— Microsoft 365 Status (@MSFT365Status) May 23, 2024

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing


Accessibility matters: Strategies for building inclusive digital experiences by Edna Chavira

Written on May 23, 2024 at 6:32 am, by admin

In today’s digital landscape, inclusivity and accessibility are no longer optional; they are essential for engaging with diverse audiences and fostering meaningful connections. Neglecting digital accessibility can alienate potential customers and limit your brand’s reach.

During this webinar, our expert panel will delve into the evolving consumer behaviors and preferences, highlighting the importance of creating content that resonates across various audiences and platforms. They will also discuss the critical role of website accessibility in organizations, aligning with the Americans with Disabilities Act (ADA) to ensure broader reach and avoid potential legal pitfalls.

Attendees will gain valuable insights on how to craft digital experiences that are elegant, engaging, and inclusive, fostering a brand presence that makes everyone feel welcome. Register now to save your spot!

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing


Google Marketing Live 2024: Everything you need to know

Written on May 22, 2024 at 3:31 am, by admin

Unsurprisingly, AI took center stage during the various announcements made today at Google Marketing Live 2024.

Updates also focused on creatives, data and giving the consumer what they want straight from the Search results. Now is the time to start paying closer attention to your impression levels.

Here’s a recap of everything you need to know from Google Marketing Live, with links to our full coverage of each major announcement.

Google PMax upgrade allows mass AI creative asset production

You can now produce high-quality creative assets rapidly and at scale for Performance Max campaigns.

With generative AI, you will be able to create ads a lot faster, produce brand-customized ads, use advanced image editing and automatically showcase product feeds in AI-generated creatives.

New reporting functionalities have also been rolled out for YouTube and creative assets.

Google rolls out immersive, AI-powered Shopping Ads

Video and virtual ad types are coming to Shopping Ads advertisers.

Retailers can integrate short-form product videos into ads, use virtual try-on to let shoppers see how tops fit different body types, and add 3D shoe spins.

This allows consumers to engage with your creative and products (virtually) before landing on your page.

Google visual storytelling advances for YouTube, Discover, Gmail

You can now target your potential customers with vertical videos, stickers, and automatically generated animated image ads.

These video ads are now available to the 3 billion users across YouTube, Discover and Gmail through Demand Gen campaigns.

More variety for your Demand Gen campaigns.

Google starts testing ads in AI overviews

Your relevant Search and Shopping ads will appear in “sponsored” sections within AI-generated overview boxes on the SERP.

No need for you to do anything – existing Search, PMax and Shopping campaigns will be eligible.

How to make your campaigns ineligible is unknown as of now.

Google’s first-party data unification Ads Data Manager available to all

Centralize and activate your first-party data for more effective AI-powered campaigns with Google’s Data Manager Tool now available to everyone.

Use it for data integration (consolidates disparate first-party data sources into a unified analytics hub) and audience insights and targeting.

Google gives merchants new brand profiles, AI branding tools

New tools have been released to better showcase your brands and create visual content.

You can create a brand profile to highlight key merchant information on Search, featuring brand imagery, videos, customer reviews, deals, and more.

Product Studio now includes brand alignment features that let you use uploaded images to inspire your AI-generated ads, plus the ability to create videos from a single product photo.

Google tests AI-powered ads for complex purchases

Google is testing an interactive experience for consumers once they get their search results.

AI will be used to provide tailored advice and recommendations based on user needs and context.

The aim is to improve user experience on the SERP and deliver a ready-to-convert consumer to the brand landing page.

But what will this do to search traffic to brand sites? Time will tell.

Why we care. A lot of updates this year focused on reducing the effort a consumer needs to click through to the site, so it will be interesting to see what that means for driving traffic. Still, several long-awaited functionalities (like reporting) have been introduced, and they allow brands to better showcase their creativity.

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing


Super Early Bird rates expire this Friday… enter the Search Engine Land Awards now!

Written on May 22, 2024 at 3:31 am, by admin

Winning an industry award can greatly impact how customers, clients, and colleagues regard your brand.

Showcase your achievements and celebrate your professional excellence by entering the Search Engine Land Awards — the highest honor in search marketing!

Since its 2015 inception, the Search Engine Land Awards have honored some of the best in the search industry — including leading in-house teams at Wiley Education Services, T-Mobile, Penn Foster, Sprint, and HomeToGo – and exceptional agencies representing Samsung, Lands’ End, Stanley Steemer, and beyond.

This year, it’s your turn. And now is the best time to apply: Super Early Bird rates expire at 11:59pm ET this Friday, May 24… submit your completed application before then to save $300 off final rates (per entry!).

Want to learn more? Check out our FAQ page, explore the 2024 categories, and review advice from the judges.

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing


WordPress 6.5 gains lastmod date for sitemap files

Written on May 22, 2024 at 3:31 am, by admin

WordPress version 6.5 now supports including the lastmod element within sitemap files, which can help search engines understand which pieces of content are new or updated. This can help improve crawl efficiency for search engine crawlers and reduce server load on your website.

Lastmod. Lastmod is an element that you can add to your sitemap file that should represent the last time that URL was modified. Search engines do look at that date when processing your sitemap files for crawling purposes. In fact, Bing said last year it would rely more on the lastmod date for crawling purposes.

Gary Illyes from Google posted this news on LinkedIn saying, “The lastmod element in sitemaps is a signal that can help crawlers figure out how often to crawl your pages.”

He added:

“If you’re on WordPress, since version 6.5, you have this field natively populated for you thanks to Pascal Birchler and the WordPress developer community. If you’re holding back on upgrading your WordPress installation, please bite the bullet and just do it (maybe once there are no plugin conflicts). Don’t be like Gary and still run a version 2*. If you’re not on WordPress, try to populate it with the last significant modification date, where “significant” loosely means a change that might matter to your users and, by proxy, to your site.”

Fabrice Canel from Bing commented on that post, saying:

“A big shoutout to Pascal Birchler, Gary Illyes, Google, and the WordPress developer community for their fantastic contribution to WordPress version 6.5. The integration of the lastmod signal within sitemaps is a game-changer, offering for millions of web sites, great data insight about when their content is changing allowing to optimize crawling activities and ensuring content freshness in search engines.

For anyone reluctant to update their WordPress installation, consider this feature a prime motivator. The more websites implement lastmod in their sitemaps, the search engines — whether AI-driven or rules-based — will increasingly capitalize on this signal.

Complimentary, please adopt https://www.indexnow.org/ to take control of crawl and your SEO game with real-time indexing. The right setup is IndexNow (for real-time update) and sitemaps with lastmod (daily – catchup mode) to ensure comprehensive and fresh coverage.

Having better online visibility isn’t just about having a website. It’s about your latest content being found.”
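For readers who want to see what the IndexNow half of that setup involves, here is a minimal Python sketch that builds the JSON body for a bulk URL submission. It assumes the publicly documented IndexNow fields (`host`, `key`, `keyLocation`, `urlList`) and the shared `api.indexnow.org` endpoint. The helper name, the example URLs and the key are hypothetical, and the sketch deliberately stops short of sending the request.

```python
import json
from urllib.parse import urlparse

# Shared IndexNow endpoint; participating search engines also expose their own.
INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def build_indexnow_payload(urls, key, key_location=None):
    """Build the JSON body for a bulk IndexNow submission (hypothetical helper)."""
    if not urls:
        raise ValueError("urls must not be empty")
    hosts = {urlparse(u).netloc for u in urls}
    if len(hosts) != 1:
        # One submission covers one host, so mixed hosts are rejected here.
        raise ValueError("all URLs in one submission must share a host")
    payload = {"host": hosts.pop(), "key": key, "urlList": list(urls)}
    if key_location:
        # Optional: where the key file is hosted if it is not at the site root.
        payload["keyLocation"] = key_location
    return payload


# Hypothetical URLs and key, for illustration only.
indexnow_payload = build_indexnow_payload(
    ["https://www.example.com/new-post/", "https://www.example.com/updated-post/"],
    key="hypothetical-indexnow-key",
)
indexnow_body = json.dumps(indexnow_payload)
print(indexnow_body)
```

The resulting body would be sent as an HTTP POST with a JSON content type to the endpoint above. Check the IndexNow documentation for key hosting requirements before submitting real URLs.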

Upgrade. Currently, about 21% of WordPress installs are using version 6.5, so it might make sense to double check to see if you are on that version if you want to improve crawl efficiency.
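One way to double check is to look at what your sitemap actually exposes. The sketch below is a rough Python example, not an official tool: it parses sitemap XML using the standard sitemaps.org namespace and reports which URLs have no lastmod value. The function name and the sample sitemap are made up for illustration. In practice you would load your own sitemap file instead of the inline string.

```python
import xml.etree.ElementTree as ET

# Standard namespace used by sitemap files.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content, for illustration only.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc><lastmod>2024-05-20</lastmod></url>
  <url><loc>https://www.example.com/about/</loc></url>
</urlset>"""


def audit_lastmod(sitemap_xml):
    """Map each <loc> in the sitemap to its <lastmod> text, or None if absent."""
    root = ET.fromstring(sitemap_xml)
    report = {}
    for url in root.findall("sm:url", SITEMAP_NS):
        loc = (url.findtext("sm:loc", default="", namespaces=SITEMAP_NS) or "").strip()
        lastmod = url.findtext("sm:lastmod", default=None, namespaces=SITEMAP_NS)
        report[loc] = lastmod.strip() if lastmod else None
    return report


lastmod_report = audit_lastmod(SAMPLE_SITEMAP)
missing_lastmod = [loc for loc, value in lastmod_report.items() if value is None]
print("URLs without lastmod:", missing_lastmod)
```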

If you are not on WordPress, you can ask your developers how to get accurate lastmod dates in your sitemap files.
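As a starting point for that conversation, here is a minimal Python sketch of a sitemap generator that writes a lastmod value for each URL. It assumes your CMS can supply a timezone-aware "last significant modification" timestamp per page. The page list, URLs and function name are hypothetical, and a real implementation would pull the dates from your content database.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone

SITEMAP_XMLNS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for (url, last_modified) pairs.

    last_modified must be a timezone-aware datetime; it is written in UTC
    using the W3C datetime format that sitemaps expect.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_XMLNS)
    for loc, last_modified in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        stamp = last_modified.astimezone(timezone.utc)
        ET.SubElement(url, "lastmod").text = stamp.strftime("%Y-%m-%dT%H:%M:%S+00:00")
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages and modification times, for illustration only.
example_pages = [
    ("https://www.example.com/", datetime(2024, 5, 22, 7, 31, tzinfo=timezone.utc)),
    ("https://www.example.com/blog/", datetime(2024, 5, 20, 12, 0, tzinfo=timezone.utc)),
]
sitemap_xml_text = build_sitemap(example_pages)
print(sitemap_xml_text)
```

The key design choice is to update the stored date only when a page changes in a way that matters to users, in line with the guidance quoted above, rather than stamping every URL with the time the sitemap was generated.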

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing
