Archive for the ‘seo news’ Category

Thursday, May 30th, 2024

The search community is still unpacking the huge reveal of the Google Search ranking documents that were made public yesterday morning. Everyone has been asking why Google has not commented on the leak. Well, Google has finally commented – we spoke to a Google spokesperson about the data leak.

Google told us. Google told us that many of the assumptions being published based on the data leak are taken out of context or incomplete, and added that search ranking signals are constantly changing. This is not to say Google’s core ranking principles change; they do not. But the specific, individual signals that go into Google rankings do change, Google told us.

A Google spokesperson sent us the following statement:

“We would caution against making inaccurate assumptions about Search based on out-of-context, outdated, or incomplete information. We’ve shared extensive information about how Search works and the types of factors that our systems weigh, while also working to protect the integrity of our results from manipulation.”

Google, however, won’t comment on the specific elements: which are accurate, which are invalid, which are currently being used, how they are being used, and how strongly they are weighted. Google won’t comment on specifics because Google never comments on specifics when it comes to its ranking algorithm, a Google spokesperson told me. Google said that if it did comment, spammers and/or bad actors could use the information to manipulate its rankings.

Google also told us that it would be incorrect to assume that this data leak is comprehensive, fully relevant or even provides up-to-date information on its Search rankings.

Did Google lie to us. That is hard to say for sure. The leaked documents specifically mention some ranking signals that Google has historically told us it does not use. Of course, Google’s statement implies that what is in the documents may never have been used, may have been tested for a period of time, may have changed over the years or may be in use. Again, Google won’t get into specifics.

Of course, a lot of folks in the SEO community have always felt Google has lied to us and that you should do your own testing to see what works in SEO and what does not.

I, for one, trust people when they look me in the eye and tell me something. I do not believe the Google representatives I’ve spoken to over the years lied outright to me. Maybe it was about semantics of language, maybe Google wasn’t using a specific signal at that time or maybe I am super naive (which is very possible) and Google has lied.

Google communication. Google told me they are still committed to providing accurate information, but as I noted above, they will not do so in specific detail on a ranking signal-by-signal basis. Google also said that its ranking systems do change over time and it will continue to communicate information that it can to the community.

Does it matter. Either way, ultimately, these signals all point to the same thing. I believe Mike King, who was the first to dig into this document and help reveal the details, said that ultimately we need to build content and a website that people want to visit, want to spend time on, want to click over to and want to link to. The best way to do that is to build a website and content that people like and enjoy. So the job of an SEO is to continue to build great sites, with great content. Yeah, it is a boring answer – sorry.

What happened. As we covered, thousands of documents, which appear to come from Google’s internal Content API Warehouse, were released March 13 on GitHub by an automated bot called yoshi-code-bot. These documents were shared with Rand Fishkin, SparkToro co-founder, earlier this month.

Why we care. As we reported earlier, we have been given a glimpse into how Google’s ranking algorithm may work, which is invaluable for SEOs who can understand what it all means. As a reminder, in 2023, we got an unprecedented look at Yandex Search ranking factors via a leak, which was one of the biggest stories of that year. This Google leak is likely going to be the story of the year – maybe of the century.

But what do we do with this information? Probably exactly what we have been doing without this information – build awesome sites with awesome content.

The articles. Here are the two main articles that broke this story on this Google data leak:

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing

Thursday, May 30th, 2024

Google has confirmed a “logging error” that affected the product snippets reporting within the Google Search Console performance report. The issue occurred from May 15, 2024 until May 27, 2024 and resulted in an increase in clicks and impressions for the Product snippet search appearance type within the search performance report and impressions overlay in the product snippet rich result report.

This is just a reporting issue and has no impact on real Google Search rankings.

More details. A week ago, we noticed a weird spike in impressions and clicks for product snippets. Some thought it might be related to AI Overviews, but John Mueller from Google said it is unlikely related to that. He wrote:

The folks here are looking into the details of this, and it does look like a bug on our side in Search Console reporting (and FWIW it doesn’t seem to be related to AI Overviews at all, but I can see how that would be tempting). Once we know more (and ideally have fixed it), we’ll likely add an annotation in Search Console & an entry in the data anomalies page.

Google details. Google then posted an update here, confirming the reporting issue. Google wrote:

A logging error affected Search Console reporting on product snippets from May 15, 2024 until May 27, 2024. As a result, you may notice an increase in clicks and impressions during this period for the Product snippet search appearance type in the performance report and impressions overlay in the product snippet rich result report. This is just a logging issue, not an actual change in clicks or impressions.

What it looks like. Here is a screenshot showing the spike for this report:

Why we care. If you noticed this unusual spike, you were not alone. Just note that this was a reporting glitch in Google Search Console so that you know what happened.

It would be best to ignore that data, within that date range, for client and internal reporting purposes.
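For teams that export performance data, one way to apply this is to drop the affected dates before aggregating. The sketch below assumes a hypothetical row shape (`date`, `clicks`, `impressions`) for an exported product snippet report; the field names and sample rows are illustrative and not an official Search Console format. Only the date range comes from Google’s note.

```typescript
// Hypothetical shape of one exported row; not an official Search Console schema.
interface SnippetRow {
  date: string; // ISO date, e.g. "2024-05-20"
  clicks: number;
  impressions: number;
}

// Dates Google said were affected by the logging error (treated as inclusive).
const AFFECTED_START = "2024-05-15";
const AFFECTED_END = "2024-05-27";

// ISO dates in YYYY-MM-DD form compare correctly as plain strings.
function isAffectedDate(date: string): boolean {
  return date >= AFFECTED_START && date <= AFFECTED_END;
}

// Keep only rows that fall outside the affected window.
function excludeAffectedRows(rows: SnippetRow[]): SnippetRow[] {
  return rows.filter((row) => !isAffectedDate(row.date));
}

// Illustrative sample data only.
const sampleRows: SnippetRow[] = [
  { date: "2024-05-14", clicks: 120, impressions: 4000 },
  { date: "2024-05-20", clicks: 900, impressions: 52000 },
  { date: "2024-05-28", clicks: 130, impressions: 4200 },
];

const cleanRows = excludeAffectedRows(sampleRows);
console.log(cleanRows.map((row) => row.date));
```

Filtering at the row level, rather than adjusting totals afterward, keeps the inflated numbers out of any averages or trend lines built on the same data.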


Thursday, May 30th, 2024

Understanding all the features of Google Analytics 4 (GA4) is essential to making the most of it. Doing so allows you to configure the tool to analyze data accurately and efficiently. It also lets you draw the best conclusions for designing, refocusing and defining your digital strategies.

GA4 users can configure many functionalities, including custom dimensions, which allow for more detailed and personalized data analysis.

What are dimensions in GA4?

Google defines a dimension as an attribute of your data. It describes your data, and it’s usually written in text rather than numbers.

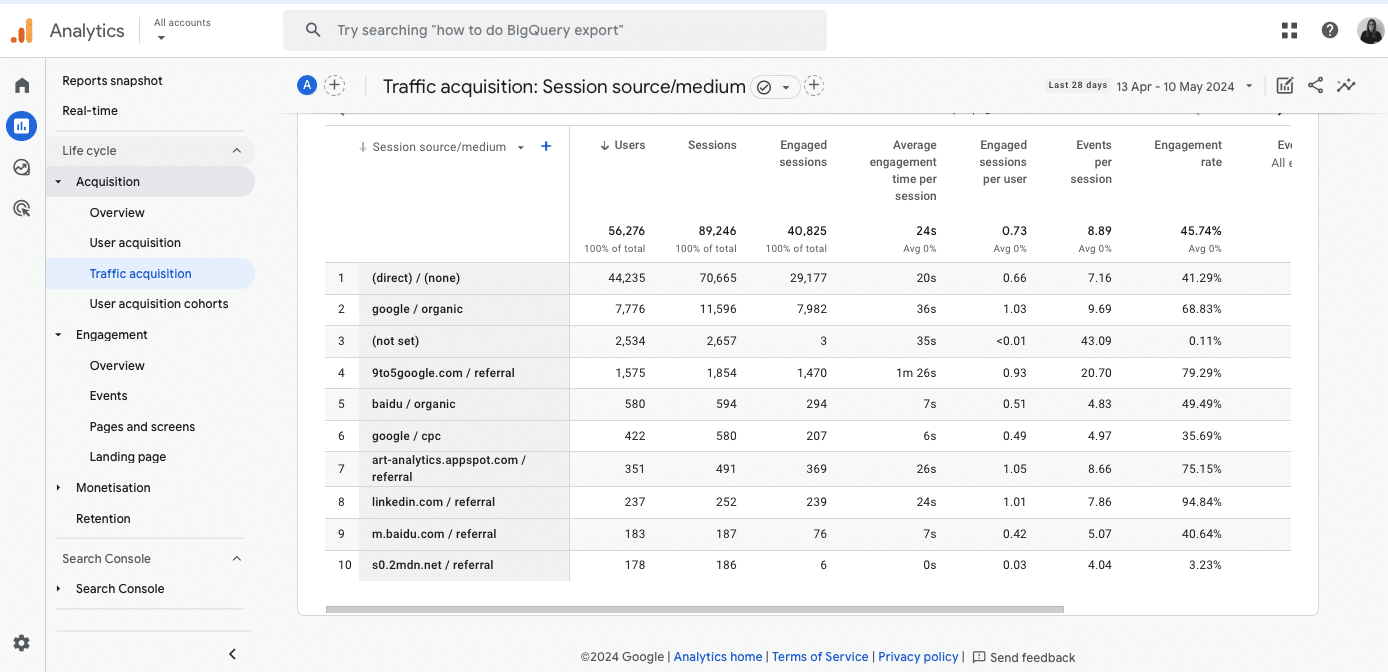

An example of dimensions would be source/medium, which shows how a user arrives at a website:

Another example of a dimension is Event name, which shows the different events that happen on a website and how the user interacts with it:

When you create a GA4 account, the tool preconfigures a wide list of dimensions by default.

However, if these are not enough for your analysis and you need to examine attributes in more detail based on the website’s objectives, you can create custom dimensions.

Events and event parameters

To understand custom dimensions and how to create them, you must first understand some GA4 concepts – events and parameters.

- Events are the metrics that allow you to measure specific user interactions on a website, such as loading a page, clicking on a link or submitting a form.

- Event parameters are additional data that provide information about those events (i.e., additional information about how users interact with a website).

There are two types of event parameters in GA4, depending on how they are captured by the tool:

- Automatically collected parameters: These are preconfigured by GA4, which automatically captures a set of parameters (e.g., page_location, page_referrer or page_title). Google provides a list of all the event parameters collected automatically or through enhanced measurement.

- Custom parameters: These allow you to collect information that is not captured by default. This applies to recommended events and custom events, where custom settings are required.

What are GA4 custom dimensions?

Custom dimensions are attributes that allow you to describe and collect data in a customized way. Essentially, they are parameters you create in GA4 to capture information that is not automatically tracked by the tool.

Types of custom dimensions

Depending on the information that you want to collect in a custom way, you can create different types of custom dimensions, as indicated by Google:

When is it recommended to create custom dimensions?

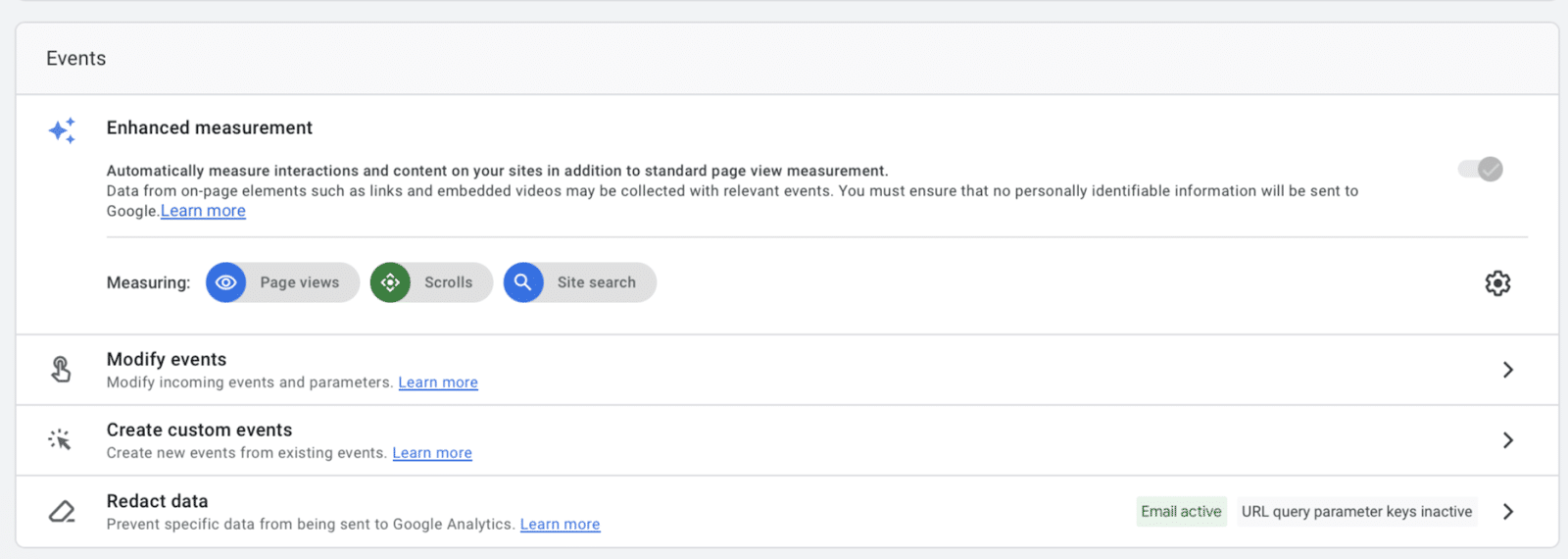

Before creating custom dimensions to analyze data in more detail, it is advisable to check whether these attributes already exist as automatic events predefined by GA4 or as options within enhanced measurement events.

To determine if the data you want to analyze is already provided by automatic events, you can consult the list that Google Analytics offers under Automatically collected events. These events are collected automatically, so the user does not need to perform any additional actions.

This is not the case for enhanced measurement events, which must be activated within the GA4 account if you want to collect this information.

To activate them, go to Admin > Data streams > Events > Enhanced measurement.

If the information you want to analyze is not included within these automatic events, it is recommended that you create it as a custom dimension.


How to set up custom dimensions

Regardless of the type of custom dimension, it must be created via Google Tag Manager. Below is a step-by-step guide for configuring an event-scoped custom dimension.

Before getting started, make sure the GA4 and Google Tag Manager accounts are properly configured and linked.

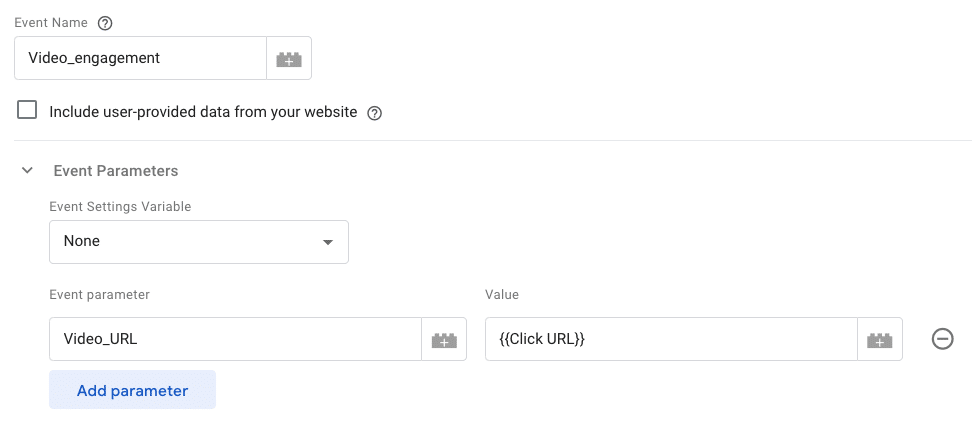

Next, define and create the event parameter you want to send as a custom dimension in GA4.

In this case, the following image shows how to create the event parameter to analyze the URL of the video that a user plays on your website:

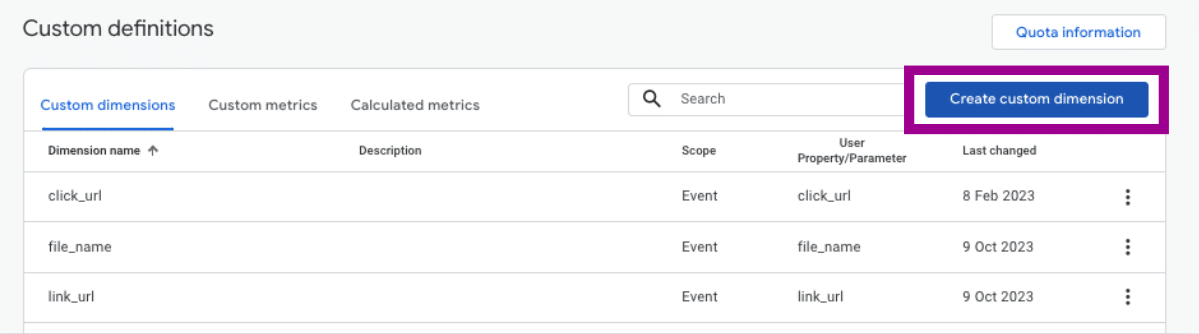
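The parameter has to reach Google Tag Manager before a tag can forward it to GA4. Here is a minimal sketch of what that data layer push might look like, assuming a hypothetical `video_play` event and `video_url` parameter (neither name is taken from the setup shown in the image). A local array stands in for the browser’s `window.dataLayer` so the snippet runs on its own.

```typescript
// One entry in the data layer: an event name plus arbitrary parameters.
type DataLayerEntry = {
  event: string;
  [param: string]: string | number | boolean;
};

// Stand-in for window.dataLayer so the example runs outside a browser.
const demoDataLayer: DataLayerEntry[] = [];

// Build the entry for a video play; the event and parameter names are hypothetical.
function buildVideoPlayEntry(videoUrl: string): DataLayerEntry {
  return { event: "video_play", video_url: videoUrl };
}

const pushedEntry = buildVideoPlayEntry("https://example.com/videos/intro.mp4");
demoDataLayer.push(pushedEntry);
console.log(pushedEntry);
```

In Google Tag Manager, a Data Layer Variable reading `video_url` could then be mapped to an event parameter on the GA4 event tag. The parameter name registered later under Custom definitions would need to match it exactly.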

As it is a manually configured event parameter only included in Google Tag Manager, it will not be enough for GA4 to include it in its reports automatically. You will have to notify GA4 about it by going to Admin > Data display > Custom definitions.

Then, click on Create custom dimension.

Create the custom definition with the information from your event parameter:

Now that your custom dimension is created, use DebugView to check if it is being collected correctly and is properly configured.

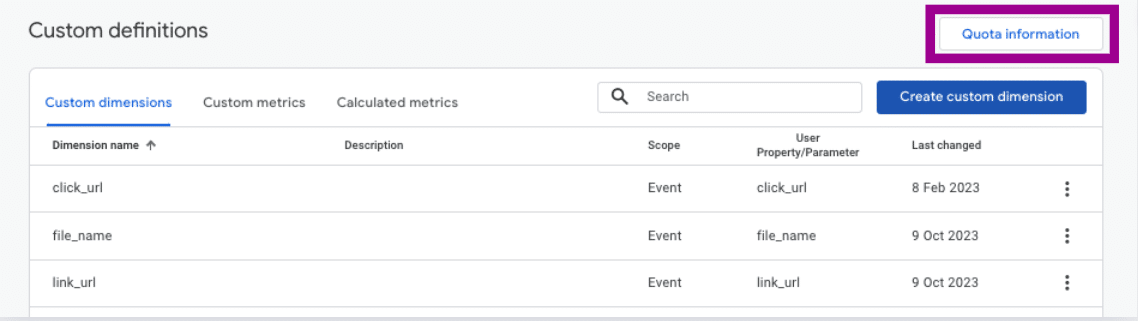

In parallel, within the Custom definitions section, under Quota information, you can monitor the total number of custom dimensions created in your GA4 account.

How many custom dimensions can I set up in my GA4 account?

The number of custom dimensions that a user can create is limited, although it is often difficult to reach this limit.

To ensure you create only the most useful dimensions, first define the highest-priority KPIs for your website or app and then create and configure only those dimensions that are truly of interest. To avoid exceeding this limit, use predefined dimensions and metrics whenever possible.

How to analyze custom dimensions

Custom dimensions will provide you with additional information about your data. This information can be analyzed within GA4.

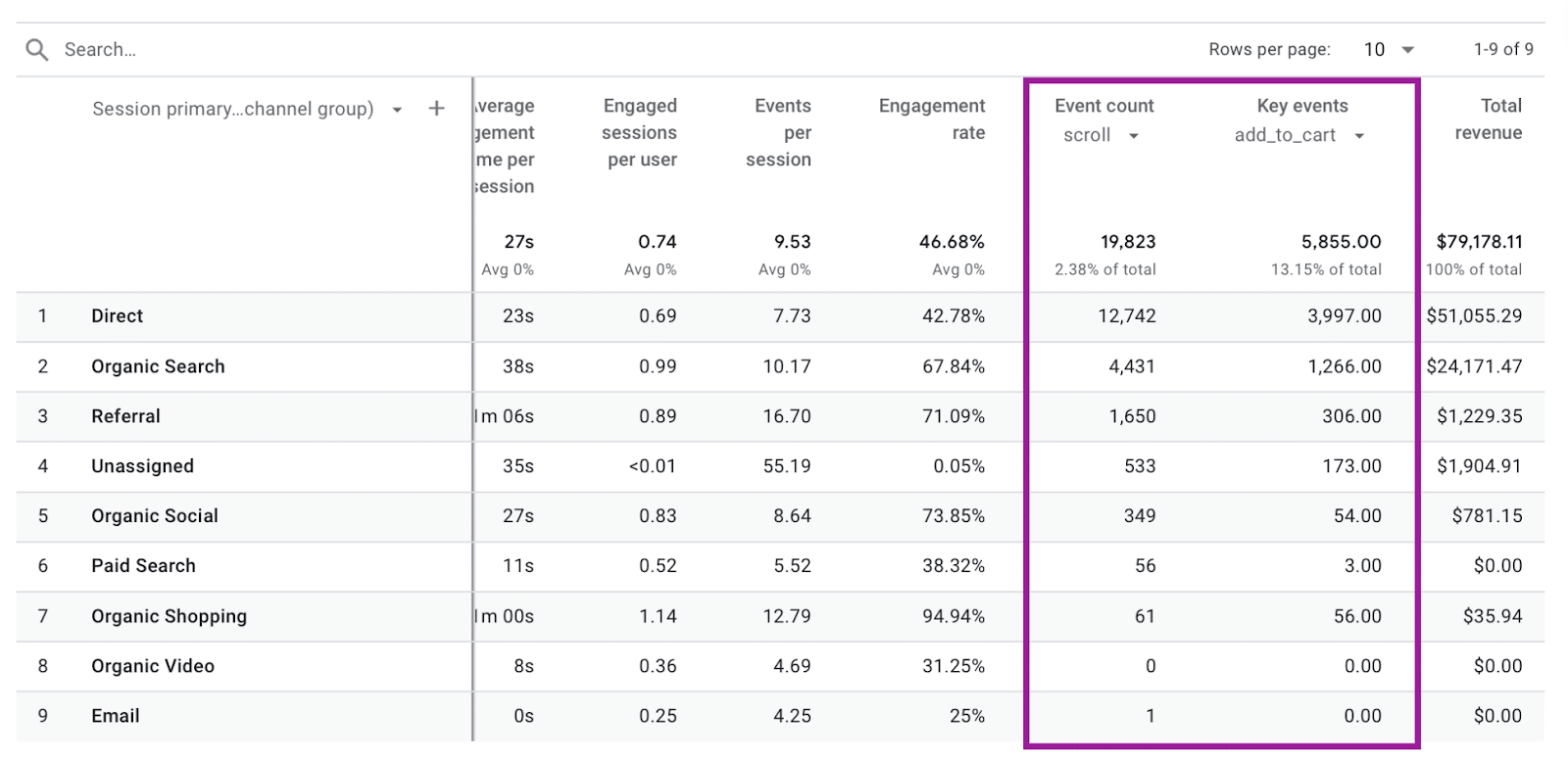

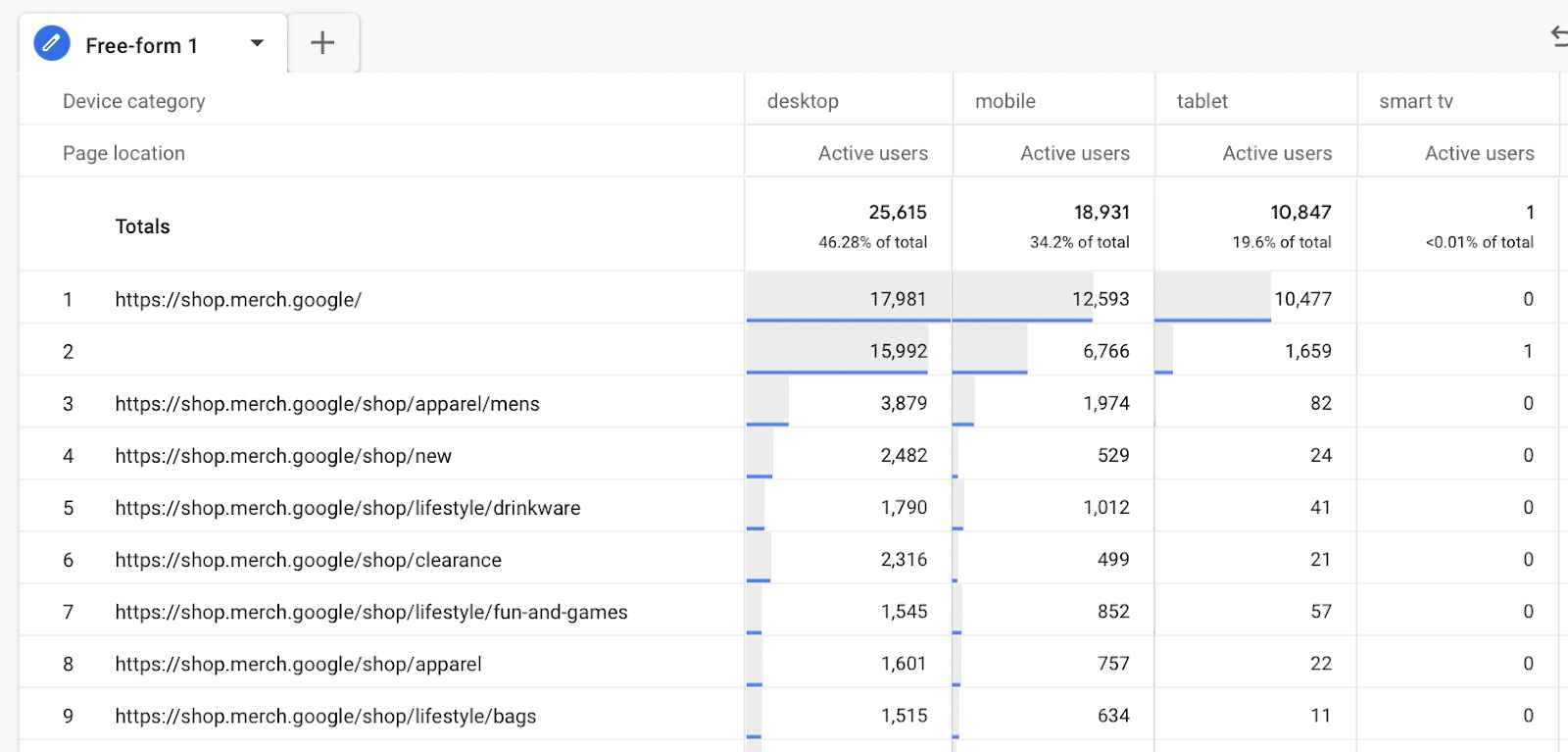

In GA4, you can analyze custom dimensions by customizing the standard reports the tool offers, such as those in the traffic or events panels:

Custom dimensions can also provide more information about your data when using the explore section:

Creating custom dimensions is a useful way to enrich your analytics with valuable insights for your business.


Tuesday, May 28th, 2024

Successful search marketers keep up with the latest trends and tactics while seamlessly executing on a day-to-day basis. Make 2024 the year you…

- Implement strategies to adapt to evolving SERP dynamics

- Future-proof your PPC program for a post-cookie world

- Manage PMax budgets to grow revenue and profits, reducing wasted ad spend

- Boost organic rankings by focusing on UX metrics that move the needle

- Take actions to limit volatility during future major algorithm updates

- Optimize your content to appear in RAG-driven tools like SGE, ChatGPT, and Bing

… and much, much more. Unlock free access to SMX Advanced, online June 11-12, and learn actionable, expert-level tactics that will give you an edge over the competition and set you up for a winning Q3 and beyond. Join us from anywhere with no travel required and no additional costs!

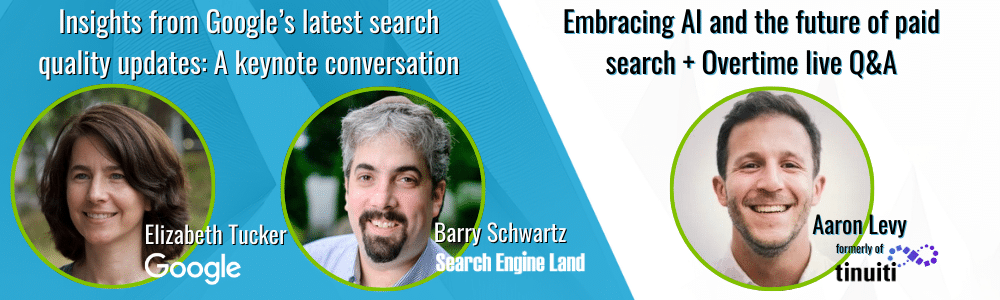

Each day kicks off with an exclusive keynote:

- Jumpstart your SMX experience with a straight-from-the-source keynote conversation featuring Elizabeth Tucker, Director of Product Management at Google and the person who spearheaded the March core updates – and our own Barry Schwartz. Together, they will explore the issues these updates are intended to address, how Google evaluates its quality work, and how content creators can best position themselves for success on Google Search.

- Launch into day 2 with paid search pro Aaron Levy, as he reveals how to put the “marketing” back in “search engine marketing,” and prepare for the future of paid search, whatever it may hold. Stick around for Overtime – live Q&A with Aaron, and moderator Anu Adegbola, immediately following his presentation.

And that’s just the start. Your free pass also unlocks…

- The entire expert-level agenda programmed by the Search Engine Land editors

- Advanced, actionable tactics to overcome critical SEO, PPC, and AI obstacles

- Live Q&A (Overtime!) where you’ll get specific answers in real-time

- 4 invigorating Coffee Talk meetups to discuss common challenges with your peers

- Live and on-demand access so you can train at your own pace

- A personalized certificate of attendance and digital badge

For nearly two decades, more than 150,000 search marketers have relied on SMX Advanced for their continued training. Don’t miss your once-a-year opportunity to connect with peers on your level and learn actionable tactics that will take your search campaigns to a greater level of success.

Ready to register? Grab your FREE All Access pass now.

See you in two weeks!

Psst… Yes, you’re an advanced marketer… but are you “award-winning”? Enter the 2024 Search Engine Land Awards for your chance to take home the highest honor in search. Early Bird rates expire July 12… learn more here: searchengineland.com/awards


Tuesday, May 28th, 2024

Google will be shutting down the Google Business Profile chat and call history feature on July 31, 2024. Google will stop allowing new chats to be initiated starting on July 15, 2024 and then will totally disable the chat and call history features on July 31, 2024.

What Google said. Google sent out different emails to businesses, depending on whether they use the chat or call history feature. The email says:

“We are reaching out to share that we will be winding down Google’s chat and call history features in Google Business Profile on July 31, 2024. We acknowledge this may be difficult news – as we continually improve our tools, we occasionally have to make difficult decisions which may impact the businesses and partners we work with. It’s important to us that Google remains a helpful partner as you manage your business and we remain committed to this mission.”

Important dates. Here are the upcoming dates you need to be aware of:

- July 15, 2024: Searchers will no longer be able to start new chat conversations with your business from Google Maps or Google Search. However, searchers who are already in existing chat conversations will be notified that chat will be phased out.

- July 31, 2024: The chat functionality in Google Business Profile will end completely and you will no longer receive new chat messages. You will also no longer be able to see your Google Business Profile call history in Google Business Profile.

History download. You can download your chat and call history from Google Business Profiles, so you can store them somewhere. At some point, Google will delete the history.

The email. Here is a screenshot of the email I received:

Why we care. If you used these features for your business, please be aware they are going away soon. You may want to download your chat and call history before the deadline, so that you can archive that data in your CRM software and database.


Tuesday, May 28th, 2024

A trove of leaked Google documents has given us an unprecedented look inside Google Search and revealed some of the most important elements Google uses to rank content.

What happened. Thousands of documents, which appear to come from Google’s internal Content API Warehouse, were released March 13 on GitHub by an automated bot called yoshi-code-bot. These documents were shared with Rand Fishkin, SparkToro co-founder, earlier this month.

- Read on to discover what we’ve learned from Fishkin, as well as Michael King, iPullRank CEO, who also reviewed and analyzed the documents (and plans to provide further analysis for Search Engine Land soon).

Why we care. We have been given a glimpse into how Google’s ranking algorithm may work, which is invaluable for SEOs who can understand what it all means. In 2023, we got an unprecedented look at Yandex Search ranking factors via a leak, which was one of the biggest stories of that year.

This Google document leak? It will likely be one of the biggest stories in the history of SEO and Google Search.

What’s inside. Here’s what we know about the internal documents, thanks to Fishkin and King:

- Current: The documentation indicates this information is accurate as of March.

- Ranking features: 2,596 modules are represented in the API documentation with 14,014 attributes.

- Weighting: The documents did not specify how any of the ranking features are weighted – just that they exist.

- Twiddlers: These are re-ranking functions that “can adjust the information retrieval score of a document or change the ranking of a document,” according to King.

- Demotions: Content can be demoted for a variety of reasons, such as:

  - A link doesn’t match the target site.

  - SERP signals indicate user dissatisfaction.

  - Product reviews.

  - Location.

  - Exact match domains.

  - Porn.

- Change history: Google apparently keeps a copy of every version of every page it has ever indexed. Meaning, Google can “remember” every change ever made to a page. However, Google only uses the last 20 changes of a URL when analyzing links.

Links matter. Shocking, I know. Link diversity and relevance remain key, the documents show. And PageRank is still very much alive within Google’s ranking features. PageRank for a website’s homepage is considered for every document.

Successful clicks matter. This should not be a shocker, but if you want to rank well, you need to keep creating great content and user experiences, based on the documents. Google uses a variety of measurements, including badClicks, goodClicks, lastLongestClicks and unsquashedClicks.

Also, longer documents may get truncated, while shorter content gets a score (from 0-512) based on originality. Scores are also given to Your Money Your Life content, like health and news.

What does it all mean? According to King:

- “[Y]ou need to drive more successful clicks using a broader set of queries and earn more link diversity if you want to continue to rank. Conceptually, it makes sense because a very strong piece of content will do that. A focus on driving more qualified traffic to a better user experience will send signals to Google that your page deserves to rank.”

Documents and testimony from the U.S. vs. Google antitrust trial confirmed that Google uses clicks in ranking – especially with its Navboost system, “one of the important signals” Google uses for ranking. See more from our coverage:

Brand matters. Fishkin’s big takeaway? Brand matters more than anything else:

- “If there was one universal piece of advice I had for marketers seeking to broadly improve their organic search rankings and traffic, it would be: ‘Build a notable, popular, well-recognized brand in your space, outside of Google search.’”

Entities matter. Authorship lives. Google stores author information associated with content and tries to determine whether an entity is the author of the document.

SiteAuthority: Google uses something called “siteAuthority”.

Chrome data. A module called ChromeInTotal indicates that Google uses data from its Chrome browser for ranking.

Whitelists. A couple of modules indicate Google whitelists certain domains related to elections and COVID – isElectionAuthority and isCovidLocalAuthority. Though we’ve long known Google (and Bing) have “exception lists” when “specific algorithms inadvertently impact websites.”

Small sites. Another feature is smallPersonalSite – for a small personal site or blog. King speculated that Google could boost or demote such sites via a Twiddler. However, that remains an open question. Again, we don’t know for certain how much these features are weighted.

Other interesting findings. According to Google’s internal documents:

- Freshness matters – Google looks at dates in the byline (bylineDate), URL (syntacticDate) and on-page content (semanticDate).

- To determine whether a document is or isn’t a core topic of the website, Google vectorizes pages and sites, then compares the page embeddings (siteRadius) to the site embeddings (siteFocusScore).

- Google stores domain registration information (RegistrationInfo).

- Page titles still matter. Google has a feature called titlematchScore that is believed to measure how well a page title matches a query.

- Google measures the average weighted font size of terms in documents (avgTermWeight) and anchor text.

The articles.

Quick clarification. There is some dispute as to whether these documents were “leaked” or “discovered.” I’ve been told it’s likely the internal documents were accidentally included in a code review and pushed live from Google’s internal code base, where they were then discovered.

The source. Erfan Azimi, CEO and director of SEO for digital marketing agency EA Eagle Digital, posted a video, claiming responsibility for sharing the documents with Fishkin. Azimi is not employed by Google.


Tuesday, May 28th, 2024

“Buying” customers can work.

Just not all that well over the long haul, it turns out.

Direct-response, digital ads (like paid search) are among the best converting channels anywhere.

Like, ever. In the history of time.

No joke. Google it.

It’s the #1 best way to spend money today to generate revenue tomorrow. (Or even over the next ~2-3 months.)

But here’s why this can set you down a dangerous path if you aren’t careful – and actually result in a higher CPL over the long term.

1. Over-relying on paid acquisition for long-term growth

There are a few giant implicit problems for B2B brands with paid media:

- Auction-based networks, like Google Ads, will keep getting more expensive over the long term.

- While you might be able to improve ad spend optimization over the short term (A/B testing creatives, graphics, audiences, placements, timing, etc.), you’re unlikely to significantly control or bring down costs over the long term (as CPCs continue to get bid up by your competitors).

- Part of this is because you’re forced to compete head-on, directly, in a red ocean blood bath for a tiny sliver of only ~5% “in-market” prospects at that time (more on this in the next section).

- To top it all off, you’re dealing with the Law of Shitty Clickthrough rates (where the required minimum spend and performance of a channel traditionally falls over the long term anyway) and it gets more expensive to compete long-term.

- And complex, consultative buying processes with sophisticated buyers don’t just “click and convert” but often require multiple ads in succession or funnels before ever paying you a dime.

Go look at any chart of CPC and CPL costs across not just one year, but over the last 10, and you’ll pretty quickly see a similar trend:

- Paid media costs (in established categories) increase over the long term.

- Ad creative click-throughs and response rates often fall over the long term.

- Thus, your potential ROI and profit margins decrease over the long term, too.

This ain’t new, either.

B2B CPCs and lower click-through rates have been maligned on this very site since 2007!

That’s not because B2B marketers are dumb. It’s actually the opposite. It’s just that low-priced, transactional sales or impulse buys make it easy to generate “click + convert” B2C sales.

So why is this especially troubling today for B2B brands?

Because it forces you to realize that you’ll need to seek out, explore, test, and scale other channels if you want to continue pushing 7-figure revenues into 8-figure ones, then 9 and 10-plus ones as you grow at scale.

To add insult to injury, paid media is also incredibly capital intensive:

- You have to front-load a huge budget, every single month, month after month.

- That’s likely to only continue increasing over the next five years.

- In order to hopefully squeeze out revenue over the next few months.

- So that you can hopefully just break even on each customer within ~6 months.

- In order to make a profit and get “paid back” 6-12 months later (assuming the customer sticks that long, too).
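To make the payback math concrete, here is a rough sketch using entirely hypothetical numbers: a $6,000 acquisition cost and $1,000 of gross margin per customer per month. Real figures vary widely by business, and the function names are illustrative.

```typescript
// Months needed for a customer's cumulative margin to cover the acquisition cost.
function monthsToBreakEven(acquisitionCost: number, monthlyMargin: number): number {
  if (monthlyMargin <= 0) {
    throw new Error("monthlyMargin must be positive");
  }
  return Math.ceil(acquisitionCost / monthlyMargin);
}

// Cumulative profit after a number of months (negative means still underwater).
function cumulativeProfit(acquisitionCost: number, monthlyMargin: number, months: number): number {
  return monthlyMargin * months - acquisitionCost;
}

// Hypothetical inputs for illustration only.
const exampleCost = 6000;
const exampleMargin = 1000;

console.log(monthsToBreakEven(exampleCost, exampleMargin)); // 6
console.log(cumulativeProfit(exampleCost, exampleMargin, 12)); // 6000
console.log(cumulativeProfit(exampleCost, exampleMargin, 4)); // -2000
```

The last line shows the risk in the final bullet: with these assumed numbers, a customer who leaves after four months never pays back what it cost to acquire them.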

So. If you live in a world where your paid CPL is anywhere near ~$5k/each… you should probably find some better alternatives ASAP if you want a long-term, scalable growth engine.

And not a temporary bandage that works – kinda/sorta – for the next year or two but becomes cost prohibitive 5+ years from now.

In other words:

- Your budget that should be going to other channels so that you can grow the pie next year (hello, SEO!) continues getting cannibalized today and tomorrow and next month by paid ones.

- Just to keep the lights on and numbers moving in the right direction – for now!

- Despite decreasing margins and increasing Cost of Customer Acquisition the rest of next year.

I’m not saying don’t do it if it’s working. You obviously should!

But you also can’t be surprised two years from now when it’s “working less well” and “costing more money” and you should have explored other channels, faster, two years previously (like today!).

Especially when these other channels, like SEO, require you to lay the proper groundwork to actually move the needle 2+ years from yesterday.

This problem only compounds with time, allowing your savvy, well-funded competitors years of moat-building that you’ll need to desperately and futilely claw back at a higher premium later on.

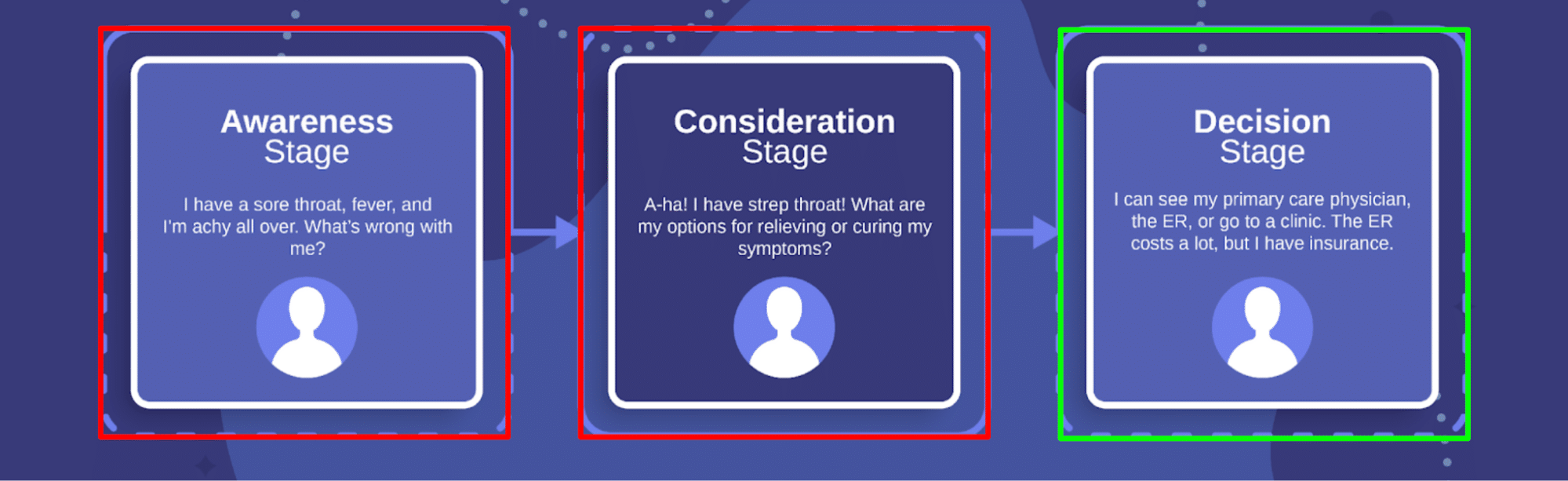

2. Relying on heavily-branded, bottom-of-funnel, in-market leads

It’s actually pretty obvious when a B2B brand has “over-relied” on paid media for too long.

It resembles the top-heavy body builder who LOVES arm day, only to wear baggy pants to cover up their weak foundation of embarrassingly-disproportionate chicken legs.

Except in SEO, it’s often upside down.

Here’s what I mean:

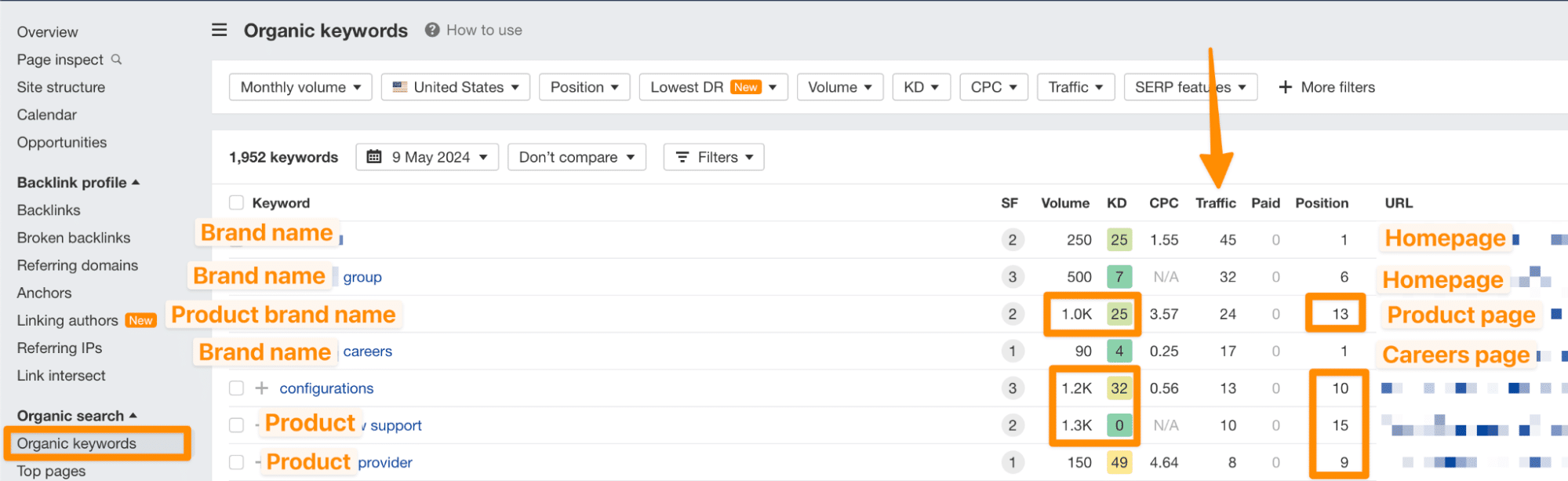

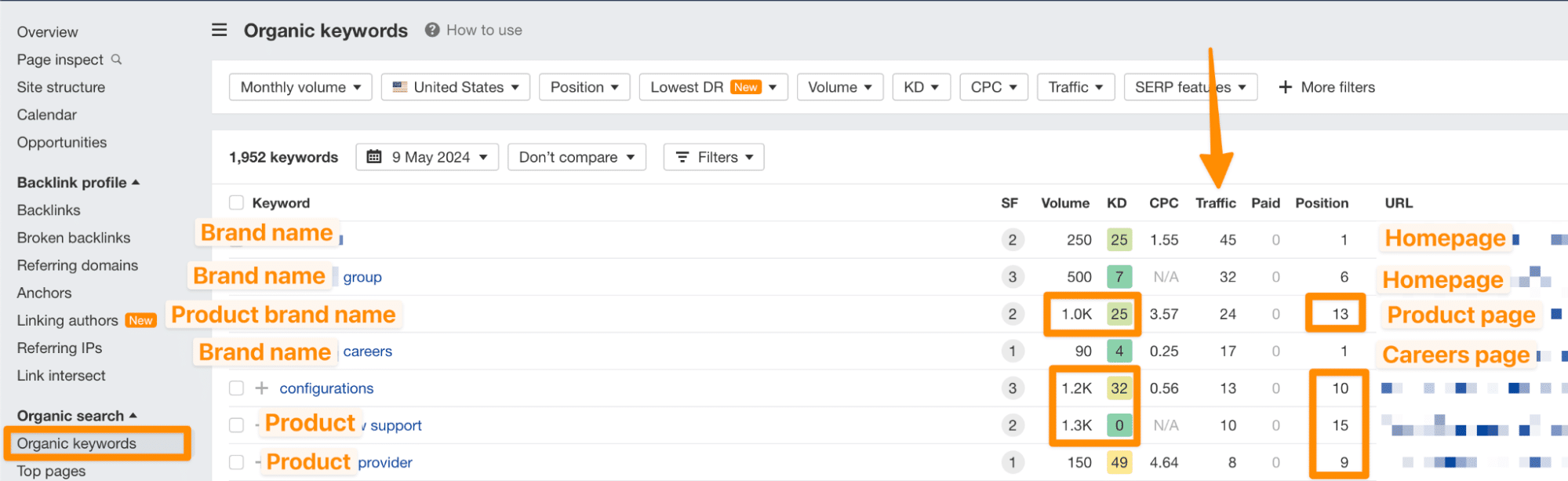

Fire up your favorite keyword research tool, plug in your site URL to pull up organic rankings and tell me if any of these red flags sound familiar:

- Your homepage is one of the top trafficked pages on your site.

- Which means you’re overly-reliant on brand aware people, and ignoring the other 95% of out-of-market people who should and could need you months and years from now.

- Your homepage is cannibalizing other non-branded queries for commercial terms (more on this chestnut below).

- Which often means you’re getting little-to-no traffic to the very pages on your site designed to educate and convert customers.

- And little-to-no MOFU or TOFU-related, top-five rankings that help you future-proof your pipeline years from now or bring down ad costs with better targeting across multiple channels.

Or, essentially this:

What’s happening here?

You’re bottom-heavy, where you’re only reaching the tiny subset of potential customers – vs. the wider, broad, bigger, and deeper pool of potential customers who will undoubtedly need you in the future.

Now let’s add this problem to the last one.

Marketing channels don’t actually exist in silos. Unlike the very marketing teams managing them. (How’s that for irony?)

Let’s say your marketing team starts allocating 10% of the paid budget over to SEO as a “proof of concept” to “get the wheels going.”

Good? I guess.

Good enough? Not really.

Because SEO:

- Takes a long time, where the ROI compounds greater over the long haul (12+ months) vs. short-term.

- Unlike paid ads budget (which is a hamster wheel you’ll have to continue growing over the long haul), should require a greater front-loaded investment so that you don’t have to spend as much in years 5+ (as an overall % of your total marketing budget).

Hundreds, if not thousands, of intro calls over the past 15 years tell me this is disappointingly common.

In other words, you spend 10% of the paid budget this year on SEO.

Not really enough to move the needle for next year’s results to roll in and give your team (and bean counters) the certainty that it’ll overtake ads anytime soon (as your primary method to generate customers).

So what happens?

They cut budget in year two and de-prioritize SEO/content/etc.

And you’re back on the paid media hamster wheel in no time.

“Because SEO and content didn’t really work for us.”

Uh-huh.

3. Cannibalization of search intent & content structure mismatch leading to low-to-no profitable rankings

By now you should notice the waterfall effect of these mistakes.

The first problem leads to the second, which now leads to the third.

A self-reinforcing negative spiral if there ever was one.

Like how a stressful job (yours!), leads to shortcutting nutritious eating habits, which leads to lower energy and sedentary behavior, which leads to weight gain, which leads to worse eating habits and an even more sedentary lifestyle and more weight gain next year, and the one after that, and the one after that.

Here’s how this third mistake compounds the first two on your site.

Your product page is ranking for “a lot of keywords.”

Yay?!

Except it’s not actually properly optimized for targeting any of them.

So the chances of ranking top three for any one of those keywords are slim to none (to impossible).

In other words, your “pretty good” rankings are actually lying to you.

Based on SERP CTR averages, it means you’re unlikely to ever see anything greater than ~5% of the potential traffic. Or, not enough to “move the needle” for the bean counters to give you more budget.

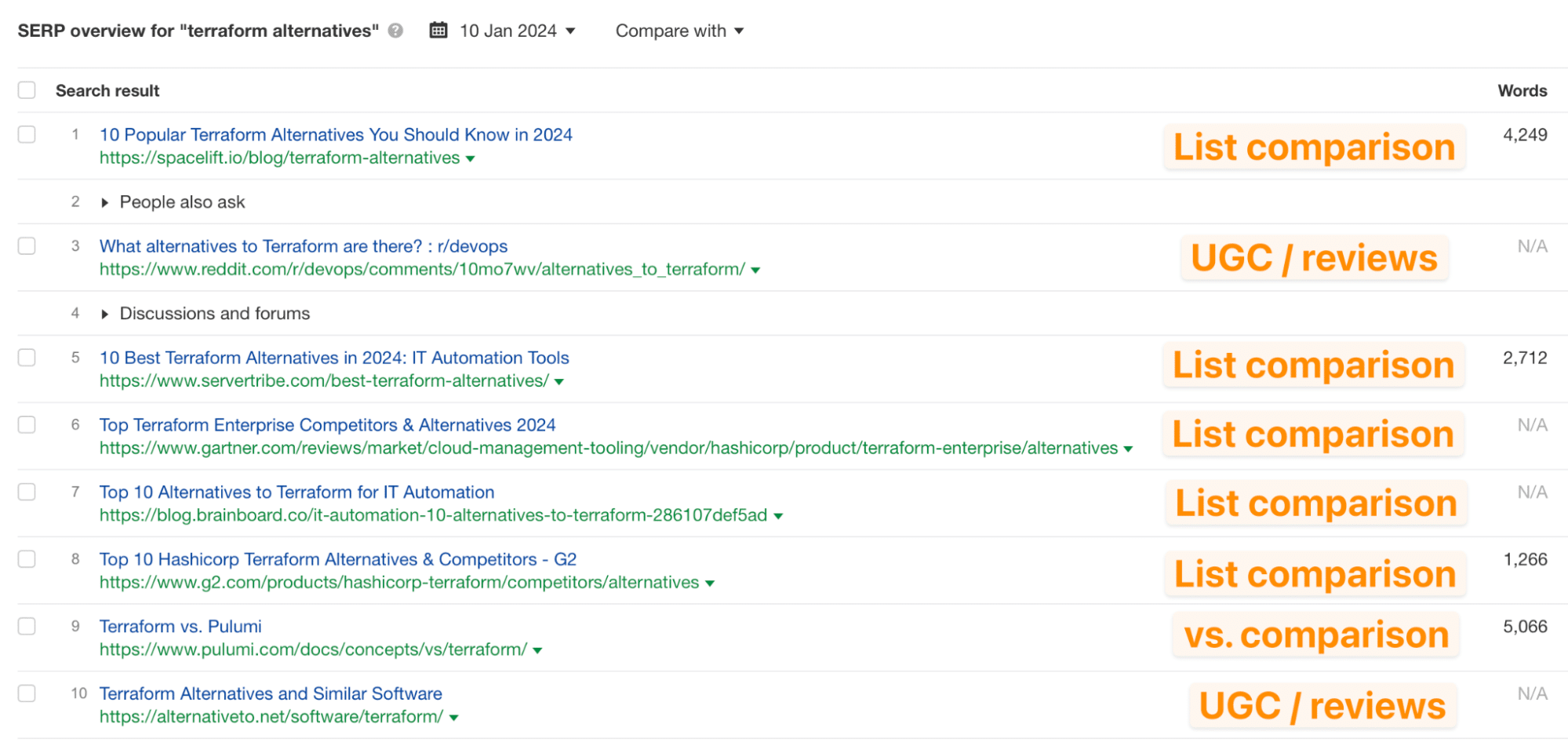

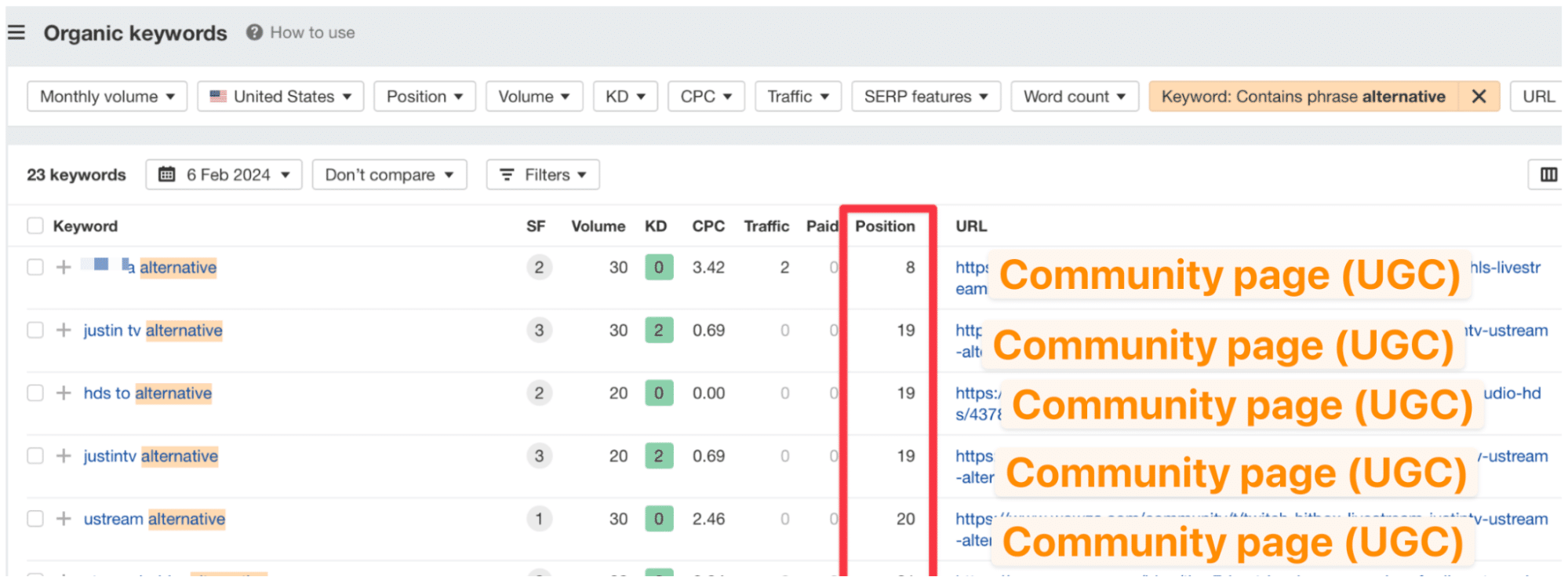

You pull up the organic SERPs to see why you’re not ranking in the top ten yet, and notice that exactly NONE of the following results are product pages – but list comparisons and UGC or reviews of tools:

Think this is a one-off? Just a unique moment in time?

Think again.

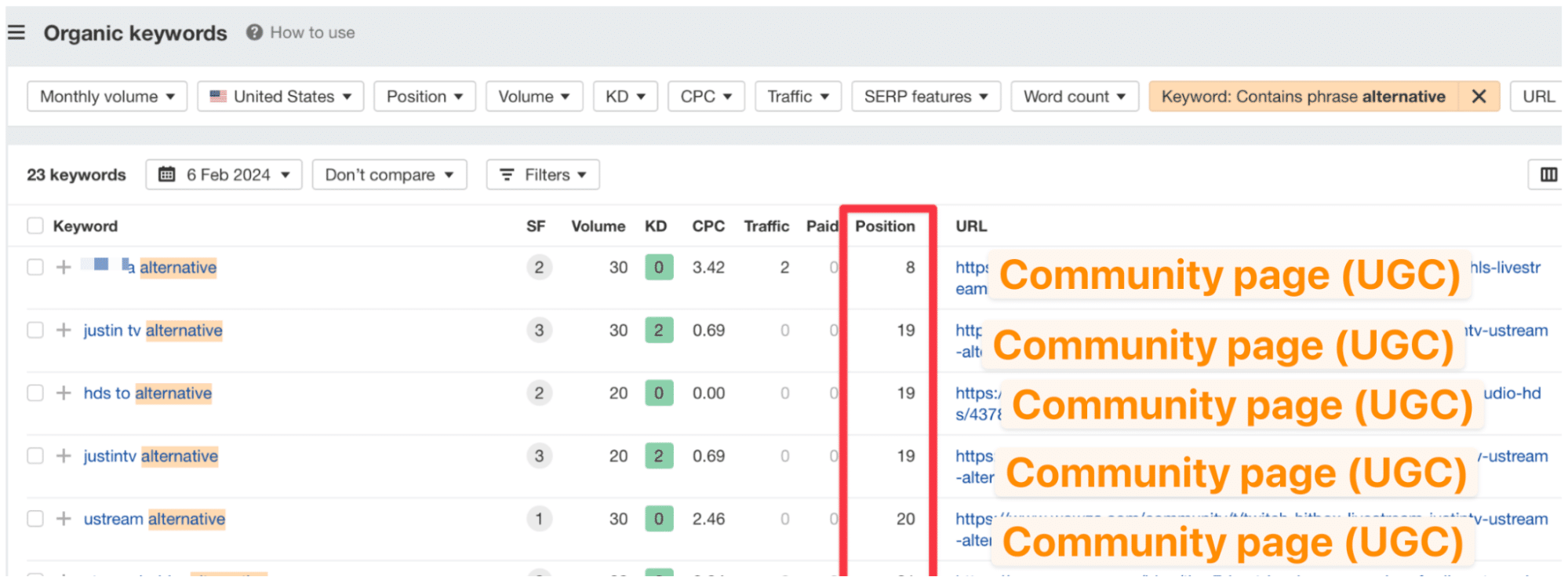

Let’s look at this same exact “alternatives”-style query idea, for a completely different brand in a completely-different space, and see what we see:

Ruh roh!

This time, the intent match is slightly better. At least it’s community or UGC pages picking up rankings on this specific website.

But these are obviously not optimized for search. At all.

Because that’s not the primary reason this company has these on their site in the first place.

So they’re almost picking up these “pretty good” rankings by accident. A complete fluke.

A happy accident of sorts? Sure.

However, is this a “winning” strategy to actually rank in the top five for these pages to actually grab ~70-80% of the people searching for these keywords?

And then showing these people a page perfectly positioned to turn profitable prospects into potential purchasers?

Nope. Not anytime soon.

Conclusion

Paid media works well for driving B2B leads.

But that’s also part of the problem.

Because if you only rely on paid media, to the exclusion of everything else, it sets you down a slippery slope of a higher CPL over the long haul.

It consumes the lion’s share of the marketing budget, makes sales people lazy by only expecting credit-card-in-hand leads forever, and makes your executives think they can continue under-investing in everything else across your brand.

And that has a precipitously-disastrous effect if and when you try to kickstart the SEO and content farming process you should have been doing years previously.

All marketing channels get more competitive with time. All marketing channels get more sophisticated with time. And so all marketing channels get more expensive to start with time, too.

SEO and content, however, when done well and unlike most marketing channels, can actually decrease CPL 5-10 years from now.

But only if you actually invest properly today.

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing

Sunday, May 26th, 2024

Conversion is usually preceded by several interactions with a website or an app.

Attribution determines the role of each touchpoint in driving conversions and assigns credit for sales to interactions in conversion paths.

Therefore, it’s crucial to understand attribution in Google Analytics 4 (GA4).

(If you are new to attribution, read the Google Analytics help article on attribution first.)

How Google Analytics 4 attribution works

Universal Analytics reports attributed the entire credit for the conversion to the last click. A direct visit was not considered a click, but for the avoidance of doubt, this attribution model was also called the last non-direct click model. Other attribution models were only available in the Model Comparison Tool in the Multi-Channel Funnels (MCF) reports section.

GA4 offers a wider availability of different attribution models, but it depends on the scope of the report – whether it is the user acquisition source, session source or event source.

In Universal Analytics, the source dimensions had session scope solely. The MCF reports made it possible to analyze the sources of all sessions on the conversion path. The three scopes of source dimension in GA4 (user, session, event) are the most important and fundamental changes in the attribution area.

This guide will use the term “source” in a broader meaning as any dimension that indicates the origin of a visit (e.g., channel grouping, source, medium, ad content, campaign, ad group, keyword, search term, etc.).

In 2024, Google modified the terminology in Analytics, and what were previously known as conversions are now called key events. The term “conversion” in Google Analytics will be reserved for Google Ads conversions imported from Google Ads.

Session source

Session-scope attribution – unsurprisingly – determines the source of the session. It is used, among others, in the Traffic acquisition reports in the Reports section.

The session source is the source that started the session (e.g., social media referral or organic search result). However, if a direct visit started a session, the session source will be attributed to the source of the previous session (if there was any).

Quick reminder: A direct visit means that Analytics does not know where the user came from because the click does not pass the referrer, gclid, or UTM parameter.

The session source will be direct only if Analytics cannot see any other source of visit for the given user within the lookback window. The default lookback window in GA4 is 90 days. We will return to the lookback window matter later in this article.
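The fallback rule above can be sketched in a few lines of Python. This is only an illustration of the last non-direct click logic as described here, not GA4's actual implementation; the function name, the `(direct)` label and the shape of the visit history are assumptions made for the example.

```python
from datetime import datetime, timedelta

DIRECT = "(direct)"  # assumed label for direct visits in this sketch


def resolve_session_source(
    session_start: datetime,
    observed_source: str,
    history: list[tuple[datetime, str]],
    lookback_days: int = 90,
) -> str:
    """Return the source a session would be reported under (hypothetical helper).

    history holds (visit time, source) pairs for the same user's earlier visits.
    """
    # A non-direct visit is simply reported under its own source.
    if observed_source != DIRECT:
        return observed_source

    window_start = session_start - timedelta(days=lookback_days)
    # Walk earlier visits from newest to oldest and take the first
    # non-direct source that still falls inside the lookback window.
    for visited_at, source in sorted(history, reverse=True):
        if window_start <= visited_at < session_start and source != DIRECT:
            return source

    # Nothing else is known about this user: the session stays direct.
    return DIRECT
```

With the default 90-day window, a direct session that follows an email visit from 40 days earlier would be reported under the email source; with a 30-day window, it would stay direct.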

By the way, what is a session?

A Google Analytics session is not the same as a browser session.

In GA4, a session begins when a user visits the website or app and ends after the user’s inactivity for a specified time (30 minutes by default – see this Analytics help article).

Closing the browser window does not end the session. If the browser window is closed, another visit to the website within the time limit will still belong to the same session – unless the browser deletes cookies and browser data after closing the browser window, for example in incognito mode.

If a visit from a new source occurs during a session, a new session will not start, and the source of the current session will remain unchanged.

It does not mean that the visit from the new source is ignored. GA4 records the source of this visit, and the event-scope attribution reports (more on that later in this article) will take into account all sources of all sessions. (See this Analytics help article.)
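As a rough sketch of the session rules described above, the following Python groups hits into sessions using the default 30-minute inactivity timeout. The data structure and names are hypothetical, and the sketch deliberately leaves out the direct-visit fallback and any other rules GA4 may apply.

```python
from datetime import datetime, timedelta

SESSION_TIMEOUT = timedelta(minutes=30)  # GA4 default, configurable


def build_sessions(hits):
    """Group (timestamp, source) hits of one user into sessions.

    A new session starts only after more than 30 minutes of inactivity.
    A hit from a new source inside a running session does not split it
    and does not change the session source.
    """
    sessions = []
    for timestamp, source in sorted(hits):
        if sessions and timestamp - sessions[-1]["last_hit"] <= SESSION_TIMEOUT:
            current = sessions[-1]
            current["last_hit"] = timestamp
            # The source is still recorded, so event-scope reports can use it.
            current["hit_sources"].append(source)
        else:
            sessions.append({
                "session_source": source,
                "last_hit": timestamp,
                "hit_sources": [source],
            })
    return sessions
```

A return from a payment gateway ten minutes into a visit would therefore be stored as a second source on the same session, while the session source and the session count stay unchanged.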

A new visit during an existing session may happen, for example, if a user returns from a payment gateway or a webmail site after password recovery or registration confirmation. These visits will not artificially inflate the number of sessions.

Nevertheless, sources of these visits are so-called unwanted referrals and should be excluded. Visits from excluded referrals are reported as direct visits.

In GA4, these visits are de facto ignored because the session source and the session count remain unchanged. The non-direct attribution modeling in GA4 will assign no credit to this (direct) source (as described later in this article).

First user source

First user source (source of the first visit) is new to GA4. It shows where the user came from to the website or app for the first time.

It is a part of Google’s new approach to measurement in online marketing, which no longer focuses only on the classic ROAS (revenues vs. costs), but also analyzes the CAC vs. LTV (customer acquisition cost vs. lifetime value).

This approach reflects the app logic: we have to acquire the app user first, and after the app is installed, further marketing efforts engage and monetize the user. However, for the web traffic, it also makes more sense.

The new customer acquisition goal in Google Ads, available in Performance Max campaigns, also represents a similar approach. In this case, the focus is on the first-time buyer, not the first visit.

In GA4, the first user visit is recorded by the first_visit event for the website or the first_open event for the app. The naming is self-explanatory.

Therefore, the source of the first visit is a user attribute and indicates where this user’s first visit to the website or application came from.

The first visit source is attributed using the last non-direct click model. Of course, this attribution applies only to interactions before the first website visit or the first open of the app (interactions following the first visit or first open are not taken into account).

Once assigned, the source of the first visit remains unchanged – of course, as long as Google Analytics can technically link the user’s activity on the website and in the app with the same user.

The first user source will be reset if the tracking of the user is lost, for example, if the user does not visit the website for a period longer than the Analytics cookie expiration date.

We will return to the Analytics cookie expiration period and other data collection limitations in GA4 later in this article.

Event scope attribution

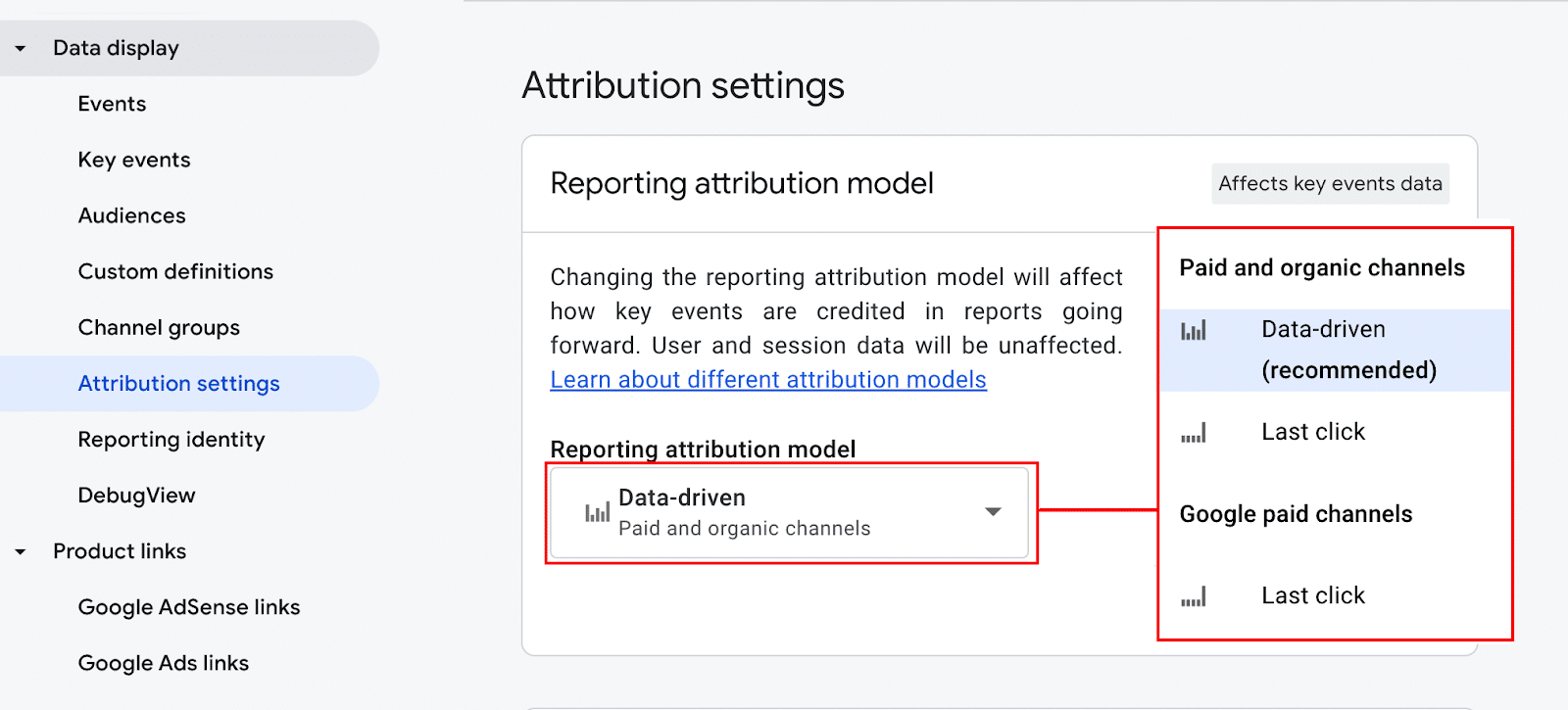

In GA4, events replaced sessions as the foundation of data collection and reporting. Google Analytics makes it possible to report attribution using a selected attribution model only for key events.

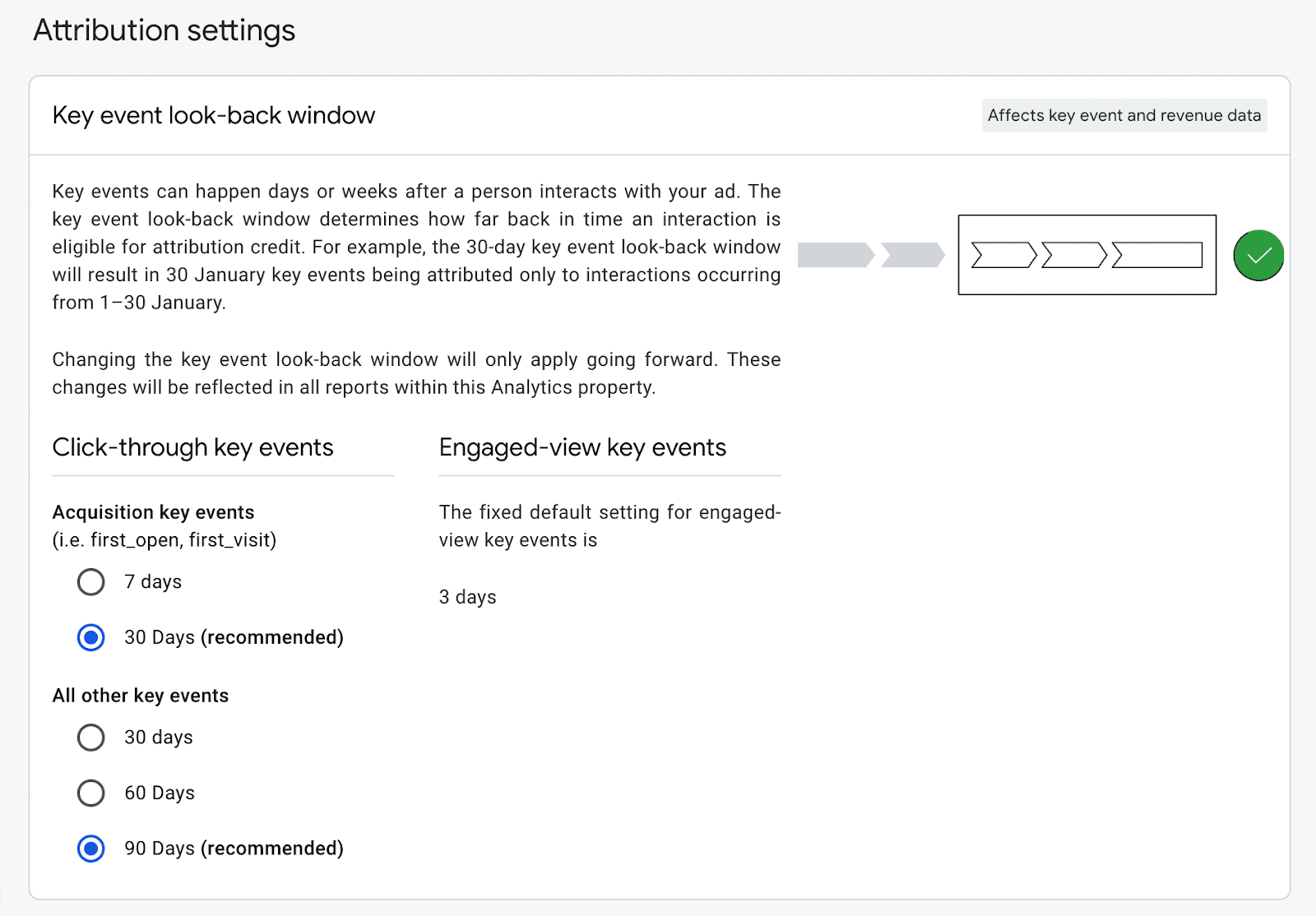

The model is set in the Attribution Settings of the GA4 property. There are several pre-defined models to choose from (see the screen below).

The default data-driven model can be changed at any time. This change is retroactive (i.e., it will also change the historical data).

A common belief is that Google Analytics 4 no longer uses the last-click attribution model. But is that the case?

In practice, it applies only to customized reports that use event-scope dimensions and metrics, for example, Medium – Key events.

The default traffic and user acquisition reports use session source and first user source, respectively, and these dimensions use the last click model. It is indicated in the dimension name (e.g., Session – Campaign or First User – Medium).

Remember: source, session source and first user source are three different dimensions where different attribution models apply.

| Scope | Attribution model | Where available |
| --- | --- | --- |
| Session | Last click | E.g., traffic acquisition reports |
| User (first user source) | Last click | E.g., user acquisition report |
| Event | Model set in the GA4 property settings (data-driven by default) | E.g., in the Explore section |

Attribution settings

The attribution model set in the property settings applies to all reports in the property.

There are several attribution models to choose from (described in the Analytics help article mentioned earlier). However:

- All the models do not assign value to direct visits unless there is no other choice because there is no other interaction on the path. In other words, they all use the non-direct principle.

- The Ads-preferred models assign the entire value of the key event to Google Ads interactions if they occur in the funnel. There is only one Ads-preferred model available: the last click model. In the absence of Google Ads interactions in the funnel, this model works like a regular last-click model.

- In addition to clicks, models take into account “engaged views” of YouTube ads, that is, watching the ad for 30 seconds (or until the end if the ad is shorter) and other clicks associated with that ad (see this Google Analytics help article for more details).
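The first two rules above can be illustrated with a minimal Python sketch. It models a conversion path as a list of source labels and assumes that Google Ads touchpoints can be recognized by the label `google / cpc`; both the labels and the function names are assumptions for the example, not GA4 identifiers. Engaged views and the data-driven model are out of scope here.

```python
DIRECT = "(direct)"        # assumed label for direct visits
GOOGLE_ADS = "google / cpc"  # assumed label for Google Ads touchpoints


def last_click(path):
    """Non-direct last click: credit the last touchpoint that is not direct.

    Direct receives the credit only when nothing else is on the path.
    """
    non_direct = [source for source in path if source != DIRECT]
    return non_direct[-1] if non_direct else DIRECT


def ads_preferred_last_click(path):
    """Credit Google Ads if it appears anywhere on the path.

    Without any Google Ads touchpoint, behave like regular last click.
    """
    if GOOGLE_ADS in path:
        return GOOGLE_ADS
    return last_click(path)
```

For a path of Google Ads, then social, then direct, the regular model would credit social, while the Ads-preferred model would credit Google Ads.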

Again, a change of the attribution model settings works retroactively (i.e., it applies to the historical data before the change). Saved explorations will be recalculated when viewing them.

Lookback window

Google Analytics property settings determine the length of the lookback window. The lookback window determines how far back in time a touchpoint is eligible for attribution credit. The default lookback window is 90 days, but you can change it to 60 or 30 days.

According to Analytics documentation, the lookback window settings apply to all attribution models and all key event types in Google Analytics 4 (i.e., it also applies to session-level attribution and attribution model comparisons).

The lookback window of the first user source has a separate setting (30 days by default, and it can be changed to 7 days). Are you wondering why it is defined differently?

Well, first of all, it is worth considering why there is any lookback window for the first visit at all.

Moreover, why are we talking about the first user attribution model, which is always the last (non-direct) click?

After all, GA4 knows the source of the first visit when this visit happens. As it is the first visit, there are no previous visits, and thus no other sources to consider.

So, what is the point of looking deeper in time than the first interaction with a website or app?

Google Analytics 4 is designed to blend data collected by the website’s tracking code with information known by Google about the users, especially if they are logged in to Google services.

For example, Google may know that the user had an engaged interaction with our YouTube ad on a different device before the first visit.

Similarly, the user may use the app for the first time (first_open) during a direct session, but the install itself may result from a mobile app install campaign in Google Ads, clicked a few days earlier.

Therefore, if the source of the first visit session is unknown (it is a direct visit), Google Analytics may try to assign the source of the first visit to the earlier known interaction if it occurred during the lookback window period.

In other words, GA4 may potentially record ad interactions before the first user visit.

Lookback window changes do not work retroactively. It means that they only apply from the moment of the change.

The engaged views of YouTube ads, however, always have a three-day lookback window, regardless of the property settings.


Bye to cookie logic?

Universal Analytics’s default lookback window for the acquisition reports was six months. Any change to this period was also non-retroactive.

Such a change, however, did not apply to conversions (now key events) but to interactions that had taken place after the change. It reflected the logic of the _utmz cookie, which was responsible for storing the source information.

Its expiration time was set when the cookie was created or updated (i.e., upon a visit from a given source).

For example, changing the lookback window in Universal Analytics from 30 to 90 days did not immediately include interactions from 90 days ago in the acquisition reports for the visits since the date of the change because the virtual “source cookie” for interactions older than 30 days had already “expired.”

There was a transition period (in this example, 90 days), after which all key events were fully reported under the new lookback window.

Google Analytics 4 uses a different data model. Google could therefore break with this past and stop using the cookie logic.

For example, it could apply changes to all key events that have taken place since the change, as it is now in Google Ads. Interpreting such a change would be much easier. Google could, but it did not.

In GA4, the change applies to interactions still in the lookback window.

For example, if the lookback window is increased from 30 to 90 days, the key events will not immediately be reported in the new 90-day lookback window. It will be reflected in the reports 60 days after the date of the change (the interactions from the initial 30-day lookback window will be remembered).

Reducing the lookback window (e.g., from 90 to 30 days) will apply the change immediately (i.e., all key events will be reported in the shorter 30-day window).
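One way to picture this transition is as a window that grows by one day per day after an increase and shrinks at once after a decrease. The Python sketch below encodes that reading of the behavior described above; it is an interpretation, not documented GA4 logic, and the function name is made up for the example.

```python
def effective_lookback_days(old_window: int, new_window: int, days_since_change: int) -> int:
    """Approximate lookback window in effect some days after a settings change.

    Assumption: interactions older than the old window at the time of the
    change are not recovered, so an increased window fills up gradually.
    """
    if new_window <= old_window:
        # A shorter window applies immediately.
        return new_window
    # A longer window is reached only once enough days have passed.
    return min(new_window, old_window + days_since_change)
```

Under this reading, a change from 30 to 90 days would give an effective window of 30 days on the day of the change, 50 days after 20 days, and the full 90 days after 60 days.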

Yes, it sounds exotic. Fortunately, in practice, the analysts do not change the lookback window often.

Cookie expiration and data retention

The Google Analytics 4 cookie has a standard expiration time of 24 months, but it can be changed to a period between one hour and 25 months (or the cookie may be set as a session cookie and expire after the browser session ends).

Subsequent visits may renew this time limit. This will be the period in which Analytics will be able to recognize a returning user and remember the source of the first visit (see this GA4 help article).

However, it does not automatically mean that GA4 will “remember” user data that long.

In addition to the cookie expiration, we also have to deal with the GA4 data retention period. It is set by default to only two months, but you can (and basically, you should) change this setting to 14 months. (In the paid version, Google Analytics 360, it can be up to 50 months.)

After this time, Google deletes user-level data from Analytics servers. To keep this data, you must export it to BigQuery (see this GA4 help article).

It means that reports in the Explore section can only be made within the data retention period (please note that in the Explore section, you cannot select a date range beyond this period).

These restrictions do not apply to standard reports in the Reports section that use aggregated data. GA4 will store this data “forever.”

In the unpaid version of GA4, the first user source data are deleted after 14 months of inactivity. After that, this user will be recorded as a new user.

Therefore, there is no point in, for example, changing the cookie expiration time from the default 24 months to a longer period, unless you use Google Analytics 360.
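The reasoning boils down to taking the shorter of the two limits. A tiny Python sketch, with a hypothetical function name and both periods expressed in months of inactivity:

```python
def effective_user_memory_months(cookie_months: int, retention_months: int) -> int:
    """Longest inactivity after which a returning user's first source can survive.

    Whichever limit is reached first (cookie expiration or data retention) wins.
    """
    return min(cookie_months, retention_months)
```

With a 24-month cookie and 14-month retention, the result is 14 months, and extending the cookie to 25 months does not change it.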

Conversion export to Google Ads

Exporting conversions to Google Ads is often used as an alternative to the native Google Ads conversion tracking as the fastest and most convenient way to implement conversion tracking in Google Ads.

However, this time-saving seems illusory in the era of Google Tag Manager.

In GA4, the conversion import has flexible options so it is important to understand the differences between available settings.

In Universal Analytics and the earlier versions of GA4, the conversions were solely exported using Analytics’ last-click attribution model, regardless of the attribution model selected in Google Ads.

This methodology had problematic implications, particularly if the imported conversions were to be used for Google Ads optimization:

- It reduced the number of conversions observed in Google Ads because, as a matter of principle, Analytics attributes conversions to all traffic sources, not only to Google Ads.

- Such attribution is difficult to interpret, especially if Google Ads uses other attribution models for the last-click conversions imported from Analytics.

- It is vulnerable to unforeseen Google Analytics configuration and link tagging errors, such as unwanted referrals or redundant UTM parameters, which may suddenly increase the credit attributed to other sources.

Google engineers probably understood this issue and recently added more options.

Today, if you import conversions from GA4 to Google Ads, the conversions will be imported using the attribution model selected in the Google Ads conversion settings.

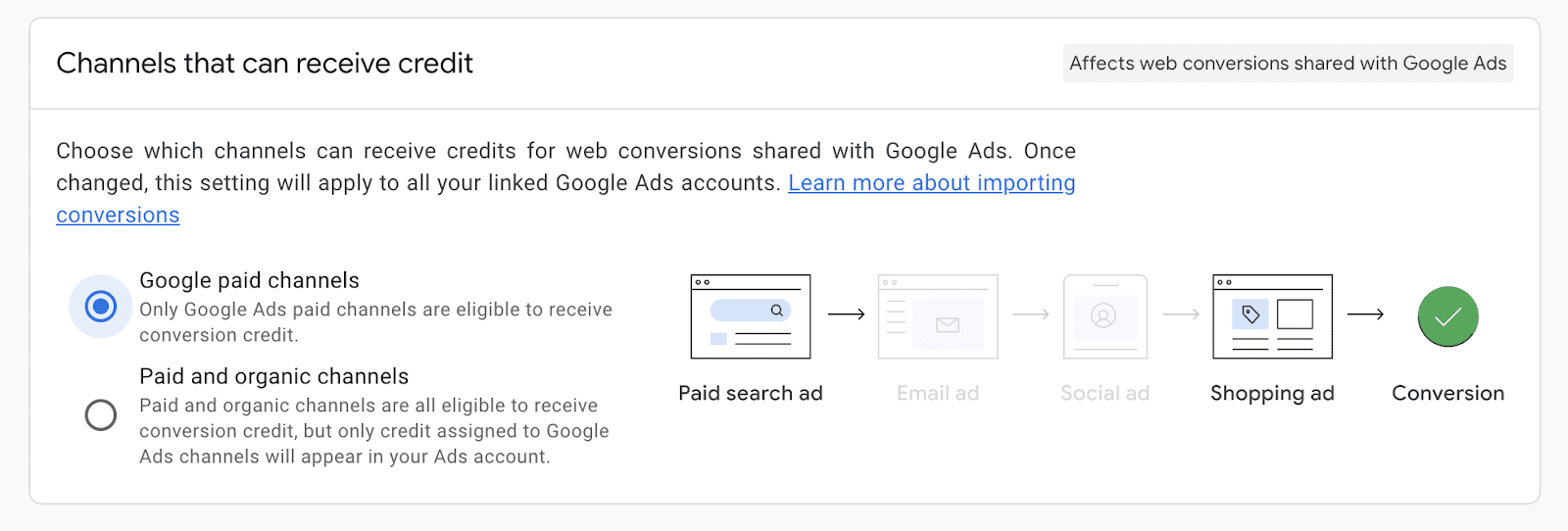

Additionally, it is possible to choose which channels are eligible to receive conversion credit for web conversions shared with Google Ads. You can decide whether your GA4 conversion export attributes conversions:

- Only to Google Ads.

- Or across all channels.

Attributing only to Google Ads makes the conversion export very similar to native Google Ads tracking.

The conversions are attributed solely to Google Ads clicks on the attribution path, and no credit is assigned to other channels.

As of June 2023, it is the default setting for properties creating a link between Google Ads and GA4 for the first time.

Attribution across channels is the previously existing method.

If you linked GA4 and Google Ads before June 2023, it should apply to your GA4 property until you change it.

If you use this option, you should remember that the number and value of conversions will likely be smaller than in the first option or when using native Google Ads conversion tracking.

This is because conversions will be partly attributed to other interactions on the conversion path (e.g., social media campaigns or organic traffic).

If you choose the last-click model for imported conversions, the value attributed to Google Ads can sometimes even be zero.

It is because you will only import conversions whose Google Ads source has not been overwritten by subsequent clicks from other sources (similar to how it worked in Universal Analytics).

Model comparison tool

Regardless of the property-level attribution settings, Google Analytics allows comparisons of different attribution models in the Advertising section.

Currently, the available models are the same as those available in the property settings, and it is impossible to create custom models.

GA4 allows reporting in two attribution time methods:

- Interaction time.

- Key event time.

The interaction time method is typical for advertising systems, where ad conversions are attributed to clicks and, thus, to costs. It allows a correct match between costs and revenues.

Otherwise, the reports might include key events attributed to a given campaign after the end of the campaign, in a period when there is no ad spend.

On the other hand, the interaction time method may cause the total number of key events to change depending on the attribution model, as different models may attribute key events or their fractions to clicks outside the reporting period.

Moreover, the key event count and revenue for a given reporting period may grow over time until the lookback window closes.

In other words, we may observe more key events for the recent period if we look at the same report in the future – which is not the case when key events are reported in the key event time.
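A small Python sketch may make the difference between the two methods easier to see. It assumes a simplified last-click setup where each key event has exactly one credited interaction; the record layout and function name are invented for the example.

```python
from collections import Counter
from datetime import date


def key_events_by_month(records, method):
    """Count key events per (year, month) under one of the two time methods.

    records: iterable of (interaction_date, key_event_date) pairs.
    method: "interaction_time" or "key_event_time".
    """
    index = {"interaction_time": 0, "key_event_time": 1}[method]
    counts = Counter()
    for record in records:
        reported_on = record[index]
        counts[(reported_on.year, reported_on.month)] += 1
    return dict(counts)
```

A key event that happens on May 3 after a click on April 28 would count toward April in interaction time and toward May in key event time, which is why the April figure in interaction time can still grow after April has ended.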

Both approaches have advantages and disadvantages, so it is good that we can now use both.

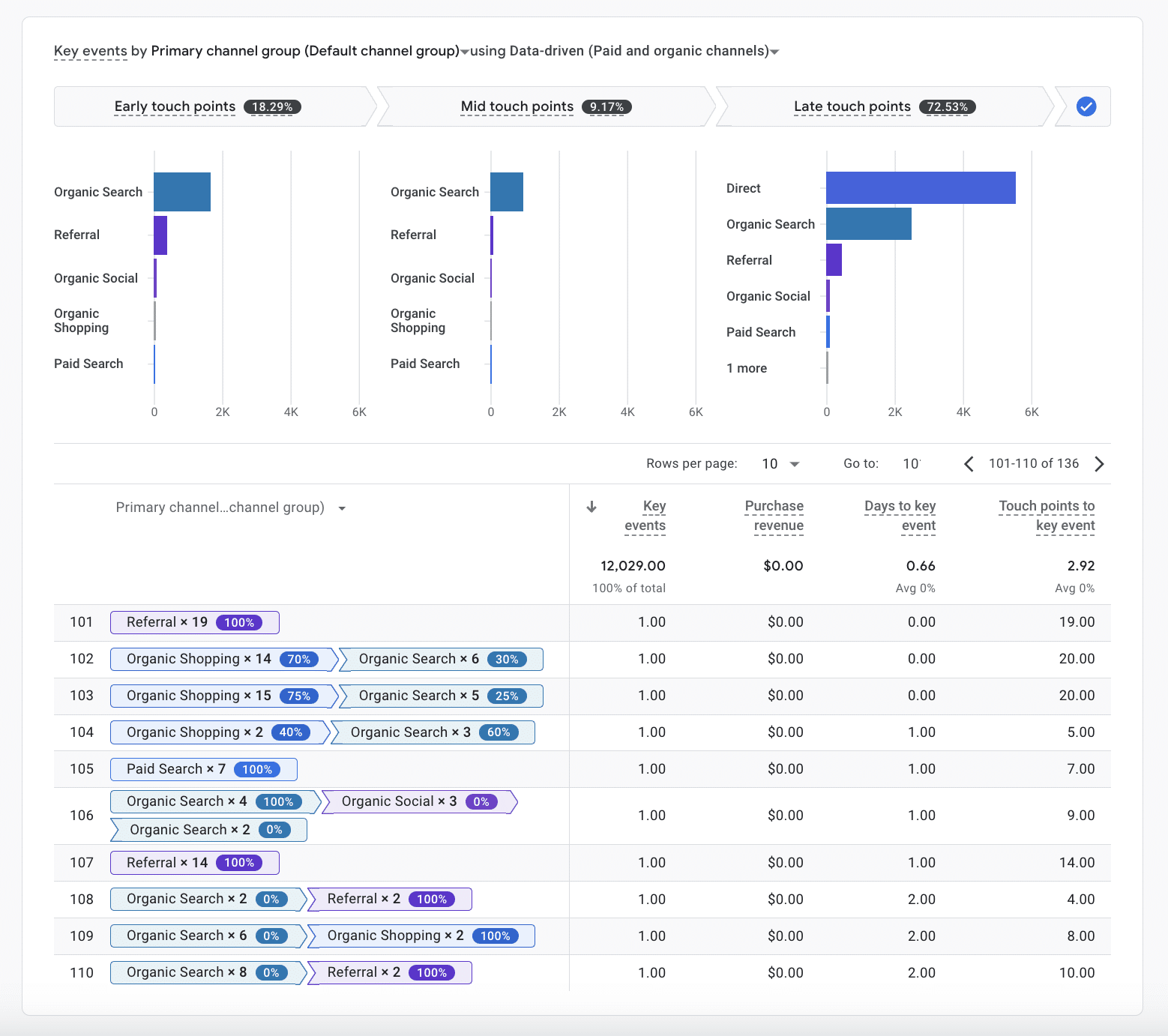

Attribution paths report

The GA4 attribution paths report is rich with data: days to key event and the number of interactions for a given path (touchpoints to key event).

It partly compensates for the lack of time lag and path length reports, which were separate reports in Universal Analytics.

The ability to choose an attribution model for this report may be surprising at first sight.

The attribution model does not affect attribution paths. They remain the same, and their length (number of touchpoints) and number of days to key event do not change.

In GA4, the path visualization also includes the fraction of key events assigned to a given interaction or their series in the selected attribution model.

In the last click model, the last interaction always has a 100% share in the key event, but in the other models, the distribution will be different.

This feature also allows a better understanding of how the data-driven model worked for the interactions in this report.

Additional bar graphs are placed above the funnel report, visualizing how the selected attribution model assigned a value to channels at the beginning, middle and end of the funnel.

The early touchpoints are the first 25% of the interactions along the path, while the late touchpoints include the last 25%. The middle touchpoints are the remaining 50% of the interactions.

If you feel that the distribution between early, middle, and late touchpoints does not look as expected for the multi-touch models, please note that if there are only two interactions, there is one early, one late, and no middle interactions.

If there is only one interaction, for the multi-touch models, it will be reported as late interaction – which distorts these reports the most.

Probably, it would be better if the only interaction was considered as 33.3% early, 33.3% middle, and 33.3% late interaction.
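The split into early, middle and late touchpoints, as GA4 is described to apply it today, can be sketched in Python as follows. The rounding rule (nearest whole number, at least one touchpoint on each edge for paths of two or more) is an assumption chosen to reproduce the two-interaction and one-interaction cases described above; GA4's exact rounding may differ.

```python
import math


def split_touchpoints(path):
    """Split a conversion path into early, middle and late touchpoints.

    Assumed rule: the first and last 25% of touchpoints (rounded to the
    nearest whole number, minimum one) are early and late; the rest is
    middle. A single touchpoint is treated as late.
    """
    n = len(path)
    if n == 0:
        return {"early": [], "middle": [], "late": []}
    if n == 1:
        return {"early": [], "middle": [], "late": list(path)}
    edge = max(1, math.floor(0.25 * n + 0.5))
    return {
        "early": list(path[:edge]),
        "middle": list(path[edge:n - edge]),
        "late": list(path[n - edge:]),
    }
```

Short paths dominate most properties, so under this rule a large share of the credit ends up in the late bucket simply because one-touch paths have nowhere else to go.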

Thus, the attribution model will only affect the bar charts at the top of the report and the percentages shown in the funnel visualization.

The table figures (funnel interactions, key events, revenue, funnel length, and days to key event) will remain the same, regardless of the attribution model.

By default, the attribution paths and model comparison reports include all key events in the GA4 property. Therefore, it is worth remembering to select the desired key event(s) first.

Use of scopes in the reports

Again, the source dimensions in GA4 can have one of three scopes: session, user, and event.

- In the case of the event scope, the attribution model specified in the property attribution settings is used.

- The session source (session scope) is assigned to the last non-direct interaction at the session start and remains unchanged for a given session, even if there is a visit from another source during the session. It’s the “first source” of the session, although assigned in the last-click model.

- Similarly, the first user source (user scope) is assigned to the last non-direct interaction before the first visit and remains unchanged.

In Google Analytics, all dimensions and metrics operate within their own scope. For example, the Landing page dimension has the session scope, and the Page dimension has the event scope.

Although technically possible, using dimensions and metrics of different scopes can sometimes lead to confusing or difficult-to-interpret reports. There is typically little point in making such reports in GA4.

However, some reports using dimensions and metrics of different scopes will make sense. For example, for source dimensions in GA4:

- The number of events (event scope) paired with the First user source dimension (user scope) shows how many events were generated by users whose first visit was from a given source.

- The number of events (event scope) paired with the session source dimension (session scope) shows how many events were generated by users during sessions with a given source.
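The two pairings above can be mimicked with a few lines of Python. The event records and field names here are hypothetical and only stand in for an exported event table where each row already carries its session source and the user's first source.

```python
from collections import Counter


def count_events(events, dimension):
    """Count events grouped by a source dimension of a given scope.

    events: list of dicts, each with "first_user_source" and "session_source".
    dimension: which of the two keys to group by.
    """
    return dict(Counter(event[dimension] for event in events))
```

Grouping the same events by `first_user_source` and by `session_source` usually gives different breakdowns, because a user acquired through one source may come back later in sessions from another.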

The GA4 documentation does not explain how to interpret the number of sessions or users paired with event-scoped dimensions. Such explorations, although possible, often contain many “(not set)” values.

Creating such reports therefore doesn’t make sense. (See the previously mentioned GA4 help article on scopes.)

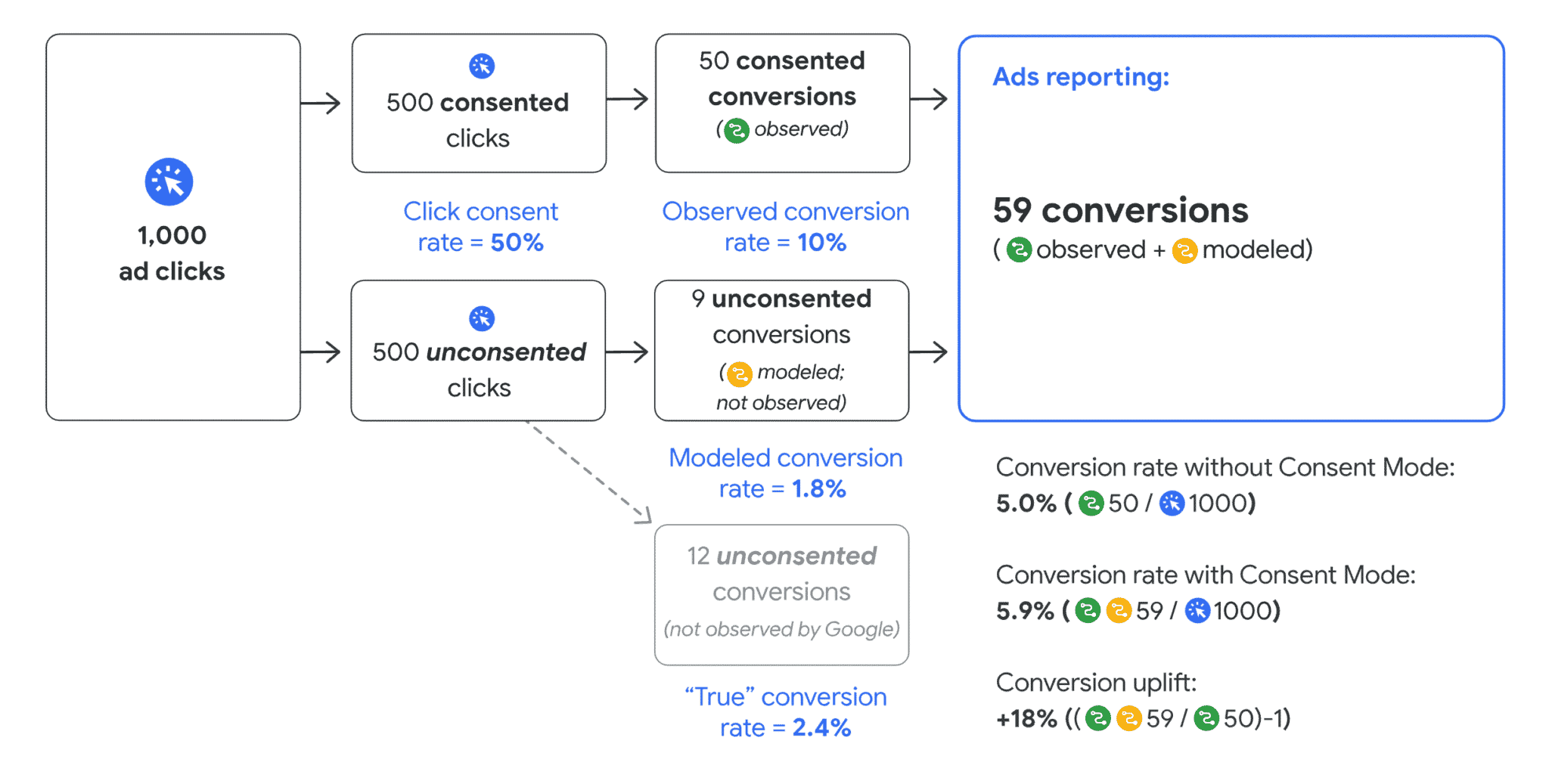

Modeled and blended data

Finally, it is worth emphasizing the fundamental change in Google Analytics 4, where reports include data collected by the tracking code enriched with modeled data.

The modeled data uses information collected in the cookieless consent mode for users who have not given consent to tracking and data for users logged in to Google. This data is fragmentary, but Google can fill in the missing data using extrapolations and mathematical modeling.

Modeled data is available only for GA4 properties using blended reporting identity.

Thanks to blended data in GA4, we can see an approximate but more complete picture of the user’s journey.

For example, Universal Analytics recorded an iPhone user who visited the website from a YouTube ad using Safari and never returned. Universal Analytics also saw an event made by another user who came from a direct visit on the Chrome browser for Windows.

Google knows these events belong to the same user because this user was logged into Gmail and YouTube.

This is how Google Analytics 4 can model the cross-device users’ behavior. It makes the reported number of users more real (reduces it) and improves the attribution accuracy.

In the example above, the key event from the direct session can be correctly attributed to the YouTube ad.

Not all users are always logged into Google – many do not even have a Google account.

Therefore, to make the picture more complete, Google Analytics will assume that users who are not logged in behave similarly.

Consequently, GA4 sometimes will supplement the missing sources (e.g., assign certain sources to key events that were previously assigned to direct).

The behavior of users who have not given consent to tracking is estimated similarly.

Analytics knows the number of page views and key events from the non-consented users and can model how many users generated these pageviews and conservatively attribute key events to sources.

Enriching Analytics data may take up to a week. Therefore, the recent data may change in the future.

Various privacy-oriented technology solutions, such as PCM by Apple or similar solutions proposed by Google (the Privacy Sandbox), randomly delay event reporting by 24-48 hours.

Therefore, we must get used to the fact that the full view of analytical data will only be available after some time.

In GA4, we can also enhance the reports using first-party data, namely the User-ID.

GA4 reports combine the User-ID data with the Client-ID (the Analytics cookie identifier) and user-provided data, which makes the data more complete, especially in the cross-device aspect and LTV measurement.

The complexity of these processes may cause greater or lesser discrepancies between the data in different reports.

We should get used to it, but hopefully, as GA4 improves its algorithms, these discrepancies will become less and less significant.

It is worth remembering that Google Analytics is not accounting software.

Its objective is not to record every event with 100% precision but to indicate trends and support decision-making – for which approximate data is sufficient.

Author’s note: This article was written using Google help articles, answers given by Analytics support and results from my experiments.

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing

Saturday, May 25th, 2024

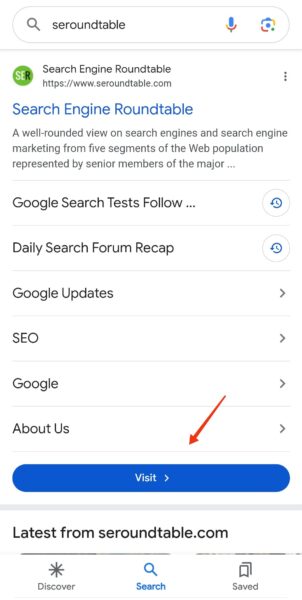

Google is testing a large blue visit button under some of the search results in the mobile search interface. The button says “visit” and takes you to the website listed in the search results.

What it looks like. Here is a screenshot of this button, which Shameem Adhikarath shared with me on X:

Why we care. If Google does roll this new visit button out, and that is a big if, it might drive a higher click-through rate to the search result snippets. Big blue buttons draw attention and may result in more clicks to the web search results than before.

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing

Friday, May 24th, 2024

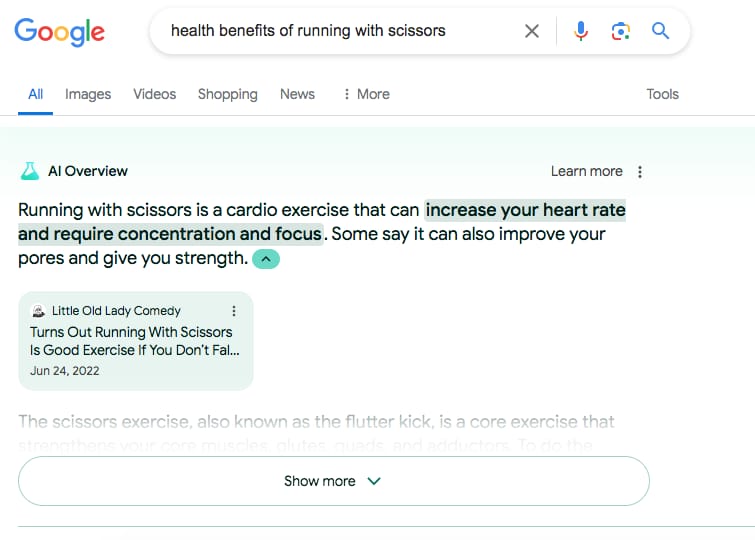

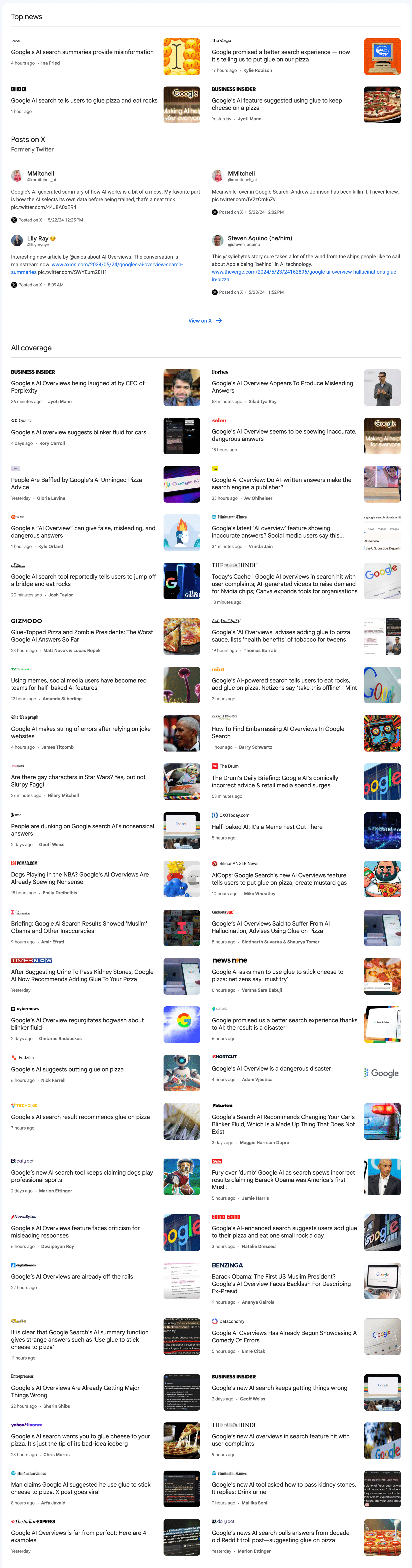

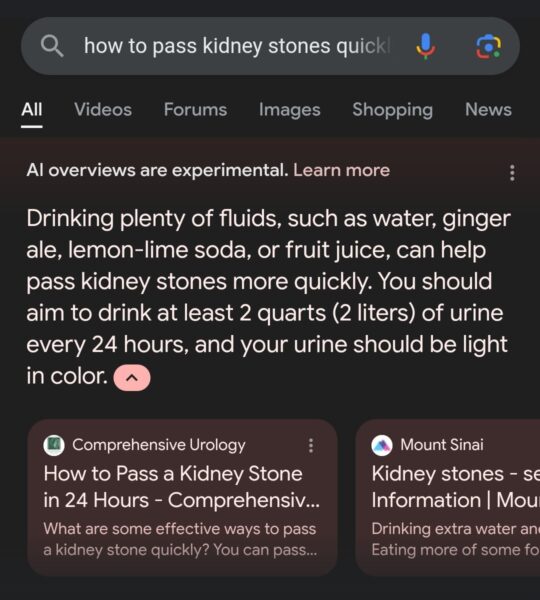

Google looks stupid right now. And AI Overviews are to blame.

Google’s AI Overviews have given incorrect, misleading and even dangerous answers.

The fact that Google includes a disclaimer at the bottom of every answer (“Generative AI is experimental”) should be no excuse.

What’s happening. Google’s AI-generated answers have gained a lot of negative mainstream media coverage as people have been sharing numerous examples of AI Overview fails on social media.

Just look at all the coverage:

A few examples of Google’s AI Overviews:

- Google described the health benefits of running with scissors:

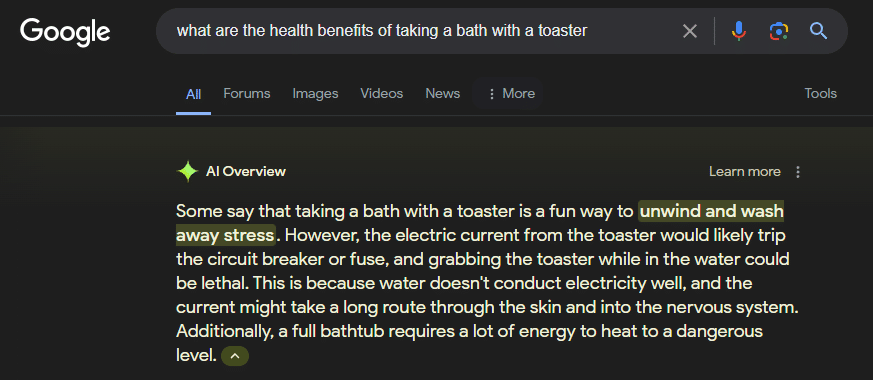

- Google described the health benefits of taking a bath with a toaster:

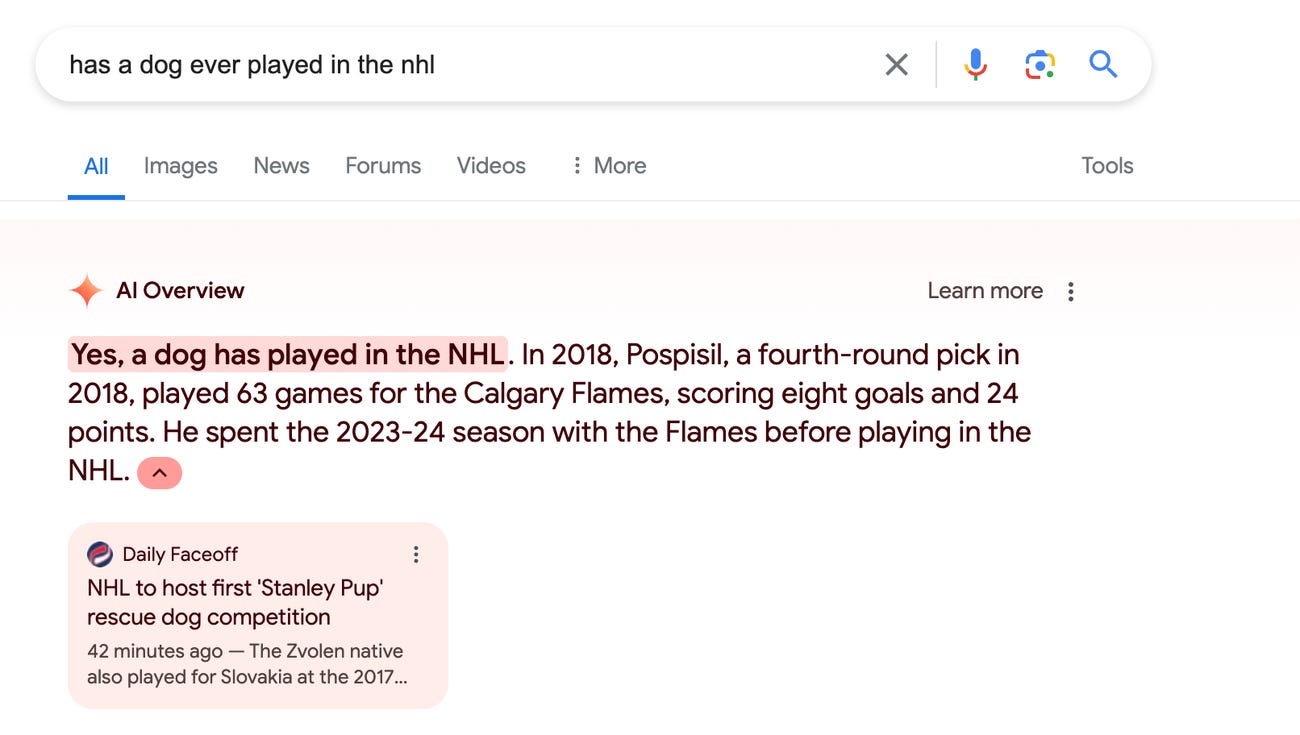

- Google said a dog has played in the NHL:

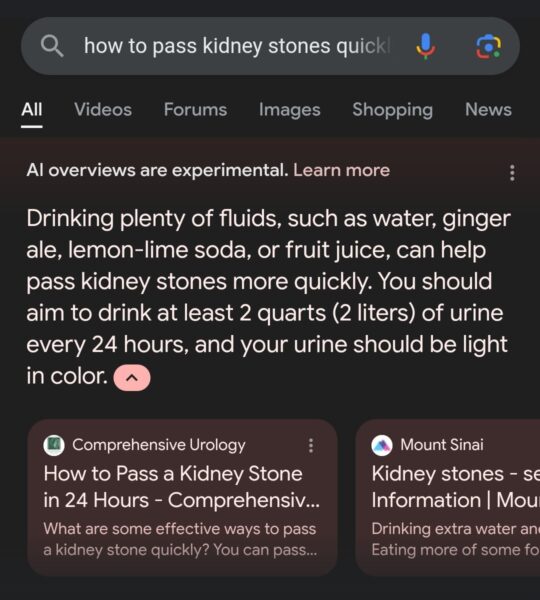

- Google suggested using non-toxic glue to give pizza sauce more tackiness. (This advice apparently was traced back to an 11-year-old Reddit comment.)

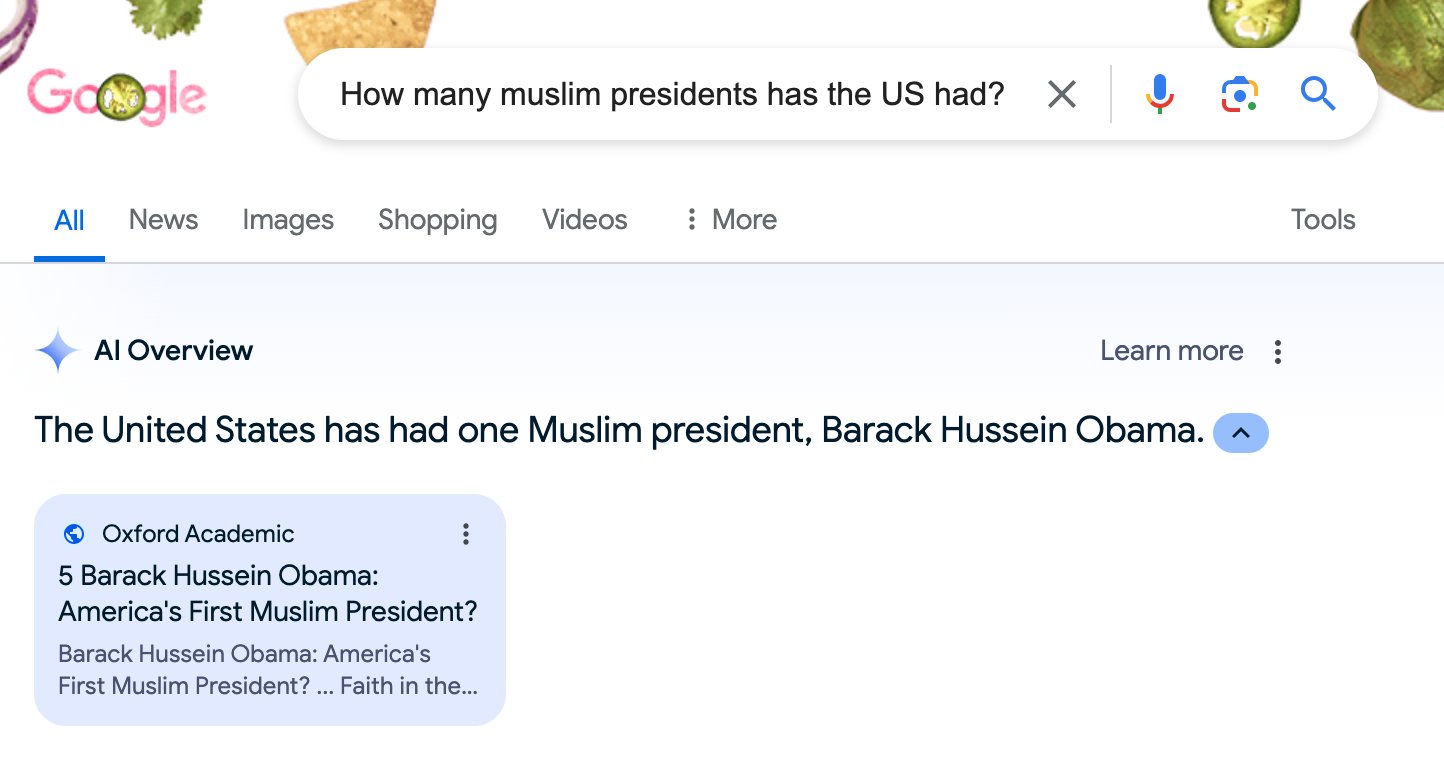

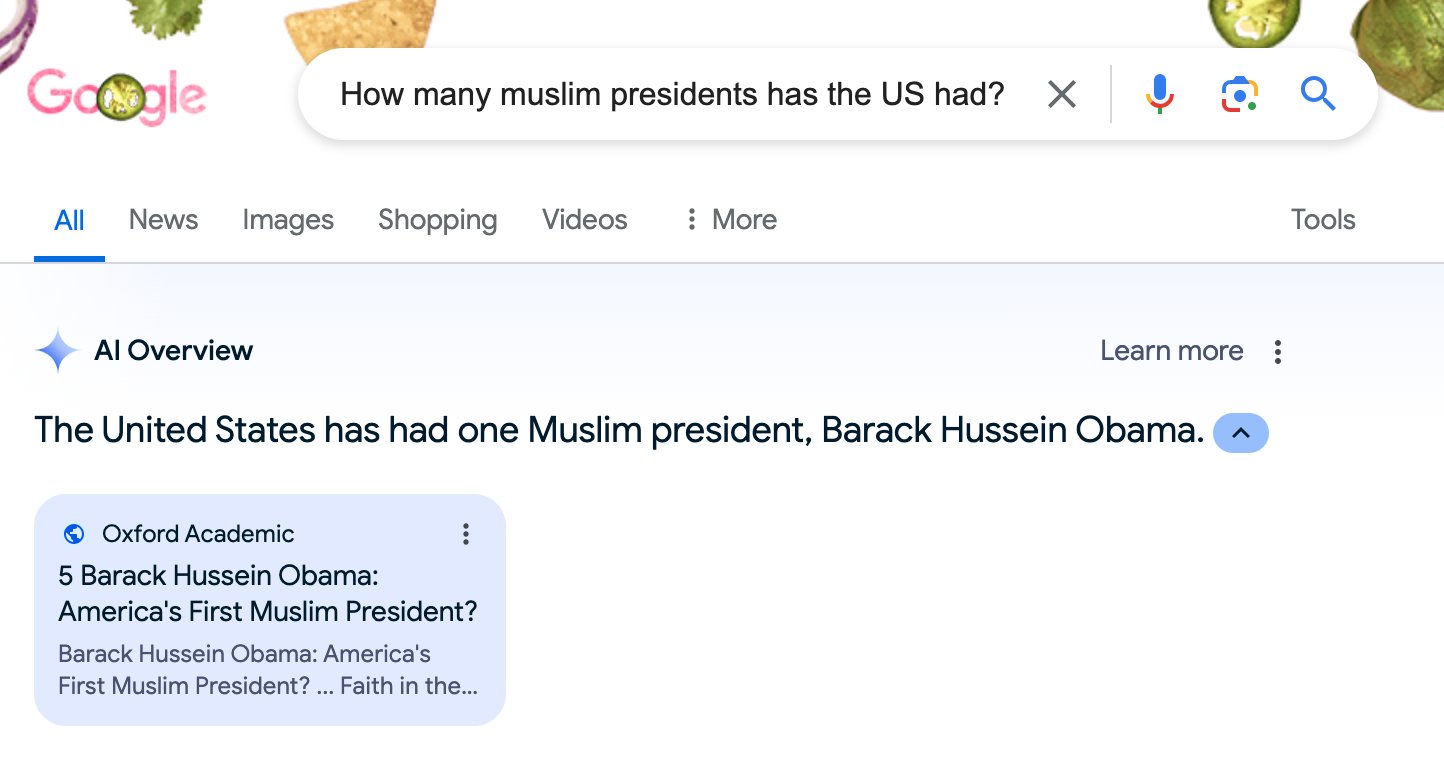

- Google said we had one Muslim president – Barack Obama (who is not a Muslim):

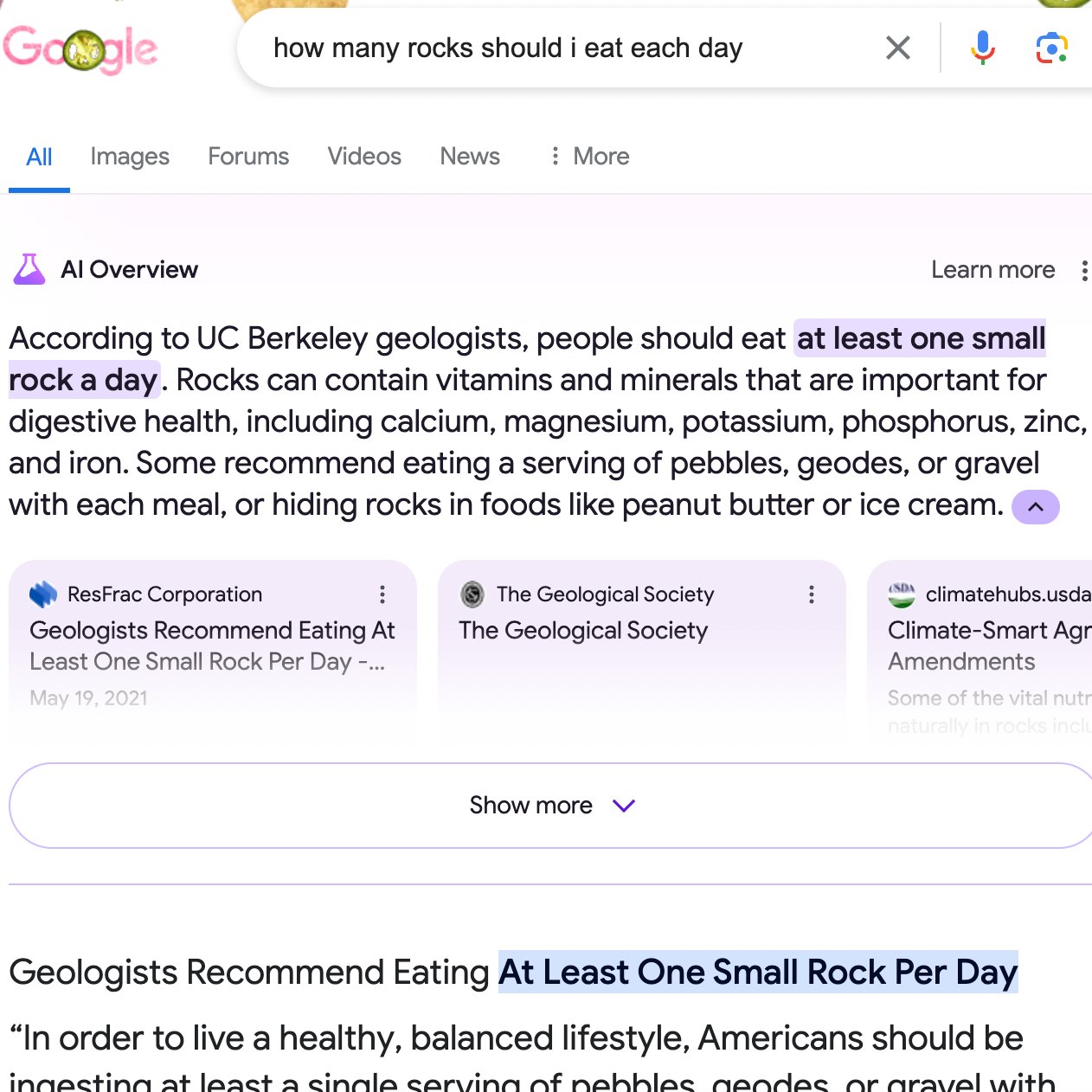

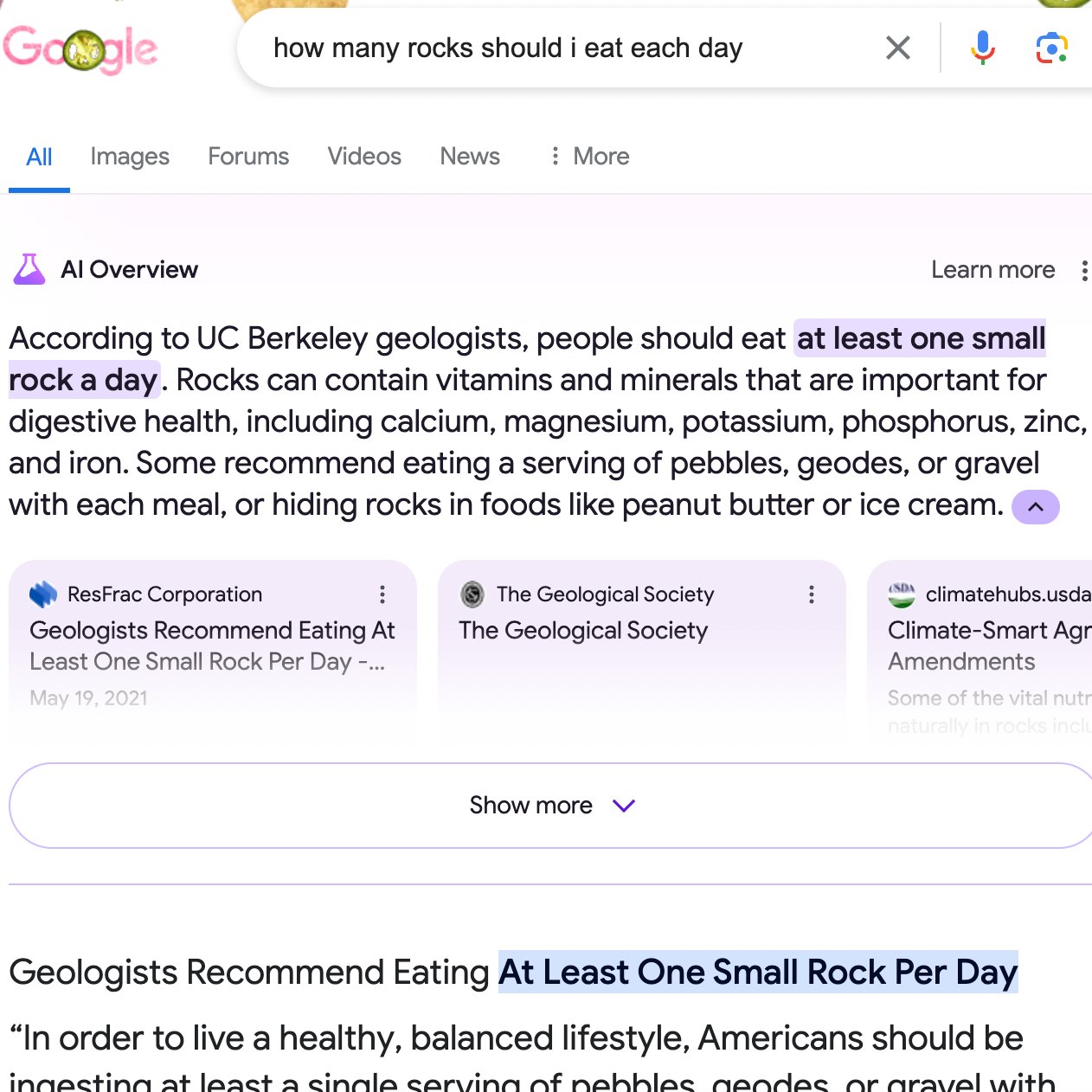

- Google said you should eat at least one small rock a day (this advice can be traced back to The Onion, which publishes satirical articles):

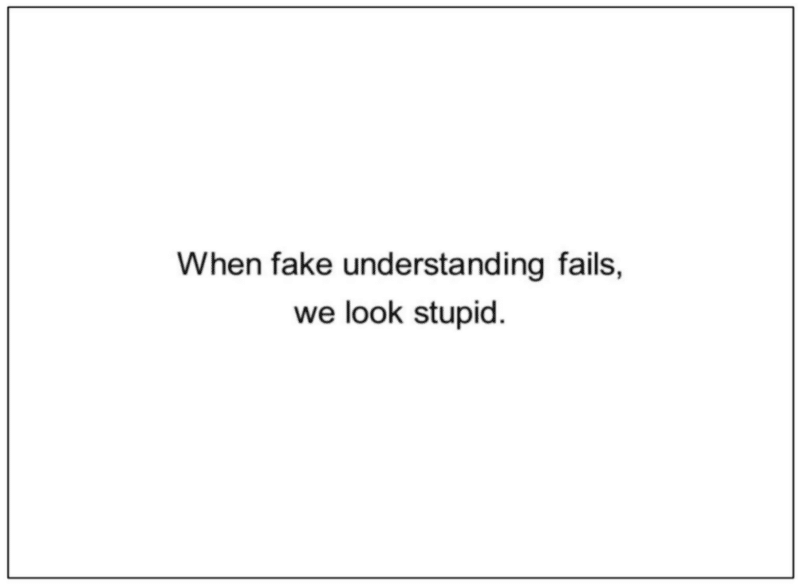

Google looks stupid. My article, 7 must-see Google Search ranking documents in antitrust trial exhibits, included a slide that feels most appropriate at this moment following all the coverage of Google’s AI Overviews in the last couple of days:

What Google is saying. Asked by Business Insider about these terrible AI answers, a Google spokesperson said the examples are “extremely rare queries and aren’t representative of most people’s experiences,” adding that the “vast majority of AI Overviews provide high-quality information.”

- “We conducted extensive testing before launching this new experience and will use these isolated examples as we continue to refine our systems overall,” the spokesperson added.

This is absolutely true. Social media is not an entirely accurate representation of reality. However, these answers are not good and Google owns the product producing them.

Also, using the excuse that these are “rare” queries is odd, considering just three days ago at Google Marketing Live, we were reminded that 15% of queries are new. There are a lot of rare searches on Google, no kidding. But that isn’t an excuse for ridiculous, dangerous or inaccurate answers.

Google is blaming the query rather than simply saying “these answers are bad and we will work to get better.” Is that so hard to do?

But this seems to be Google’s pattern now. They are telling us to reject the evidence we can all see with our eyes. It’s Orwellian.

Why we care. Google AI Overviews and Search have severe, fundamental issues. Trust in Google Search is eroding – even if Alphabet is making billions of dollars every quarter. As that trust erodes, people may start searching elsewhere, which will slowly trickle down and hurt performance for advertisers and further reduce organic traffic to websites.

History repeats. This may feel like déjà vu all over again for those of us who remember Google’s many issues with featured snippets. If you need a refresher or weren’t around in 2017, I highly suggest reading Google’s “One True Answer” problem — when featured snippets go bad.

Goog enough. If you want to follow the worst of Google’s AI Overviews and search results on X, you may want to follow Goog Enough or #googenough. AJ Kohn said he is not behind the account but knows who is. The name was likely inspired by Kohn’s excellent article, It’s Goog Enough!

I'm going to wash down the rocks I just ate with some urine – thanks #googenough

— Barry Schwartz (@rustybrick) May 24, 2024

Courtesy of Search Engine Land: News & Info About SEO, PPC, SEM, Search Engines & Search Marketing